【CUDA并行编程之八】Cuda实现Kmeans算法

本文主要介绍如何使用CUDA并行计算框架编程实现机器学习中的Kmeans算法,Kmeans算法的详细介绍在这里,本文重点在并行实现的过程。

当然还是简单的回顾一下kmeans算法的串行过程:

伪代码:

创建k个点作为起始质心(经常是随机选择)

当任意一个点的簇分配结果发生改变时

对数据集中的每个数据点

对每个质心

计算质心与数据点之间的距离

将数据点分配到距其最近的簇

对每一个簇,计算簇中所有点的均值并将均值作为质心①line03-04:将每个数据点到多个质心的距离计算进行并行化

②line05:将数据点到某个执行的距离计算进行并行化

KMEANS类:

class KMEANS

{

private:

int numClusters;

int numCoords;

int numObjs;

int *membership;//[numObjs]

char *filename;

float **objects;//[numObjs][numCoords] data objects

float **clusters;//[numClusters][unmCoords] cluster center

float threshold;

int loop_iterations;

public:

KMEANS(int k);

void file_read(char *fn);

void file_write();

void cuda_kmeans();

inline int nextPowerOfTwo(int n);

void free_memory();

virtual ~KMEANS();

};//KMEANS成员变量:

numClusters:中心点的个数numCoords:每个数据点的维度

numObjs:数据点的个数

membership:每个数据点所属类别的数组,维度为numObjs

filename:读入的文件名

objects:所有数据点,维度为[numObjs][numCoords]

clusters:中心点数据,维度为[numObjs][numCoords]

threshold:控制循环次数的一个域值

loop_iterations:循环的迭代次数

成员函数:

KMEANS(int k):含参构造函数。初始化成员变量

file_read(char *fn):读入文件数据并初始化object以及membership变量

file_write():将计算结果写回到结果文件中去

cuda_kmeans():kmeans计算的入口函数

nextPowerOfTwo(int n):它计算大于等于输入参数n的第一个2的幂次数。

free_memory():释放内存空间

~KMEANS():析构函数

并行的代码主要三个函数:

find_nearest_cluster(...)

compute_delta(...)

euclid_dist_2(...)

首先看一下函数euclid_dist_2(...):

__host__ __device__ inline static

float euclid_dist_2(int numCoords,int numObjs,int numClusters,float *objects,float *clusters,int objectId,int clusterId)

{

int i;

float ans = 0;

for( i=0;i再看一下函数compute_delta(...):

/*

* numIntermediates:The actual number of intermediates

* numIntermediates2:The next power of two

*/

__global__ static void compute_delta(int *deviceIntermediates,int numIntermediates, int numIntermediates2)

{

extern __shared__ unsigned int intermediates[];

intermediates[threadIdx.x] = (threadIdx.x < numIntermediates) ? deviceIntermediates[threadIdx.x] : 0 ;

__syncthreads();

//numIntermediates2 *must* be a power of two!

for(unsigned int s = numIntermediates2 /2 ; s > 0 ; s>>=1)

{

if(threadIdx.x < s)

{

intermediates[threadIdx.x] += intermediates[threadIdx.x + s];

}

__syncthreads();

}

if(threadIdx.x == 0)

{

deviceIntermediates[0] = intermediates[0];

}

}最后再看函数finid_nearest_cluster(...):

/*

* objects:[numCoords][numObjs]

* deviceClusters:[numCoords][numClusters]

* membership:[numObjs]

*/

__global__ static void find_nearest_cluster(int numCoords,int numObjs,int numClusters,float *objects, float *deviceClusters,int *membership ,int *intermediates)

{

extern __shared__ char sharedMemory[];

unsigned char *membershipChanged = (unsigned char *)sharedMemory;

float *clusters = deviceClusters;

membershipChanged[threadIdx.x] = 0;

int objectId = blockDim.x * blockIdx.x + threadIdx.x;

if( objectId < numObjs )

{

int index;

float dist,min_dist;

/*find the cluster id that has min distance to object*/

index = 0;

min_dist = euclid_dist_2(numCoords,numObjs,numClusters,objects,clusters,objectId,0);

for(int i=0;i 0 ;s>>=1)

{

if(threadIdx.x < s)

{

membershipChanged[threadIdx.x] += membershipChanged[threadIdx.x + s];//calculate all changed values and save result to membershipChanged[0]

}

__syncthreads();

}

if(threadIdx.x == 0)

{

intermediates[blockIdx.x] = membershipChanged[0];

}

#endif

}

}//find_nearest_cluster 这三个函数将所有能够并行的地方都进行了并行,实现了整体算法的并行化~

在此呈上全部代码:

kmeans.h:

#ifndef _H_KMEANS

#define _H_KMEANS

#include

#define malloc2D(name, xDim, yDim, type) do { \

name = (type **)malloc(xDim * sizeof(type *)); \

assert(name != NULL); \

name[0] = (type *)malloc(xDim * yDim * sizeof(type)); \

assert(name[0] != NULL); \

for (size_t i = 1; i < xDim; i++) \

name[i] = name[i-1] + yDim; \

} while (0)

double wtime(void);

#endif

wtime.cu:

#include

#include

#include

double wtime(void)

{

double now_time;

struct timeval etstart;

struct timezone tzp;

if (gettimeofday(&etstart, &tzp) == -1)

perror("Error: calling gettimeofday() not successful.\n");

now_time = ((double)etstart.tv_sec) + /* in seconds */

((double)etstart.tv_usec) / 1000000.0; /* in microseconds */

return now_time;

}

#include

#include

#include

#include

#include

#include

#include

#include

#include "kmeans.h"

using namespace std;

const int MAX_CHAR_PER_LINE = 1024;

class KMEANS

{

private:

int numClusters;

int numCoords;

int numObjs;

int *membership;//[numObjs]

char *filename;

float **objects;//[numObjs][numCoords] data objects

float **clusters;//[numClusters][unmCoords] cluster center

float threshold;

int loop_iterations;

public:

KMEANS(int k);

void file_read(char *fn);

void file_write();

void cuda_kmeans();

inline int nextPowerOfTwo(int n);

void free_memory();

virtual ~KMEANS();

};

KMEANS::~KMEANS()

{

free(membership);

free(clusters[0]);

free(clusters);

free(objects[0]);

free(objects);

}

KMEANS::KMEANS(int k)

{

threshold = 0.001;

numObjs = 0;

numCoords = 0;

numClusters = k;

filename = NULL;

loop_iterations = 0;

}

void KMEANS::file_write()

{

FILE *fptr;

char outFileName[1024];

//output:the coordinates of the cluster centres

sprintf(outFileName,"%s.cluster_centres",filename);

printf("Writingcoordinates of K=%d cluster centers to file \"%s\"\n",numClusters,outFileName);

fptr = fopen(outFileName,"w");

for(int i=0;i> 1 | n;

n = n >> 2 | n;

n = n >> 4 | n;

n = n >> 8 | n;

n = n >> 16 | n;

//n = n >> 32 | n; // for 64-bit ints

return ++n;

}

__host__ __device__ inline static

float euclid_dist_2(int numCoords,int numObjs,int numClusters,float *objects,float *clusters,int objectId,int clusterId)

{

int i;

float ans = 0;

for( i=0;i 0 ; s>>=1)

{

if(threadIdx.x < s)

{

intermediates[threadIdx.x] += intermediates[threadIdx.x + s];

}

__syncthreads();

}

if(threadIdx.x == 0)

{

deviceIntermediates[0] = intermediates[0];

}

}

/*

* objects:[numCoords][numObjs]

* deviceClusters:[numCoords][numClusters]

* membership:[numObjs]

*/

__global__ static void find_nearest_cluster(int numCoords,int numObjs,int numClusters,float *objects, float *deviceClusters,int *membership ,int *intermediates)

{

extern __shared__ char sharedMemory[];

unsigned char *membershipChanged = (unsigned char *)sharedMemory;

float *clusters = deviceClusters;

membershipChanged[threadIdx.x] = 0;

int objectId = blockDim.x * blockIdx.x + threadIdx.x;

if( objectId < numObjs )

{

int index;

float dist,min_dist;

/*find the cluster id that has min distance to object*/

index = 0;

min_dist = euclid_dist_2(numCoords,numObjs,numClusters,objects,clusters,objectId,0);

for(int i=0;i 0 ;s>>=1)

{

if(threadIdx.x < s)

{

membershipChanged[threadIdx.x] += membershipChanged[threadIdx.x + s];//calculate all changed values and save result to membershipChanged[0]

}

__syncthreads();

}

if(threadIdx.x == 0)

{

intermediates[blockIdx.x] = membershipChanged[0];

}

#endif

}

}//find_nearest_cluster

void KMEANS::cuda_kmeans()

{

int index,loop = 0;

int *newClusterSize;//[numClusters]:no.objects assigned in each new cluster

float delta; //% of objects changes their clusters

float **dimObjects;//[numCoords][numObjs]

float **dimClusters;

float **newClusters;//[numCoords][numClusters]

float *deviceObjects; //[numCoords][numObjs]

float *deviceClusters; //[numCoords][numclusters]

int *deviceMembership;

int *deviceIntermediates;

//Copy objects given in [numObjs][numCoords] layout to new [numCoords][numObjs] layout

malloc2D(dimObjects,numCoords,numObjs,float);

for(int i=0;i>>(numCoords,numObjs,numClusters,deviceObjects,deviceClusters,deviceMembership,deviceIntermediates);

cudaDeviceSynchronize();

compute_delta<<<1,numReductionThreads,reductionBlockSharedDataSize>>>(deviceIntermediates,numClusterBlocks,numReductionThreads);

cudaDeviceSynchronize();

int d;

cudaMemcpy(&d,deviceIntermediates,sizeof(int),cudaMemcpyDeviceToHost);

delta = (float)d;

cudaMemcpy(membership,deviceMembership,numObjs*sizeof(int),cudaMemcpyDeviceToHost);

for(int i=0;i 0)

dimClusters[j][i] = newClusters[j][i]/newClusterSize[i];

newClusters[j][i] = 0.0;//set back to 0

}

newClusterSize[i] = 0 ; //set back to 0

}

delta /= numObjs;

}while( delta > threshold && loop++ < 500 );

loop_iterations = loop + 1;

malloc2D(clusters,numClusters,numCoords,float);

for(int i=0;i

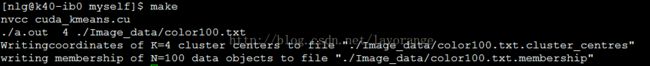

target:

nvcc cuda_kmeans.cu

./a.out 4 ./Image_data/color100.txt所有代码和文件数据在这里:http://pan.baidu.com/s/1jIdslYQ

运行代码:

kmeans的cuda实现代码相对复杂,在阅读的过程中可能会有困难,有问题请留言~

Author:忆之独秀

Email:[email protected]

注明出处:http://blog.csdn.net/lavorange/article/details/41942323