Android audio editing (trimming, merging) (1)

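Our company has recently been working on its own project covering audio/video editing as well as image editing. A quick search online showed (wow, what a hassle!) that most approaches use FFmpeg for encoding and decoding and then perform the actual operations on top of it. Some developers abroad have already wrapped it into jars; search and you'll find plenty, so I won't say more about them here. For format conversion, trimming and similar operations with FFmpeg, you can also search GitHub: there are open-source Android demo projects with FFmpeg already compiled (most of them drive FFmpeg through its command line). Well! I had hoped to get more time from the company to study this area properly, but since ready-made solutions exist, I just pulled them in and adapted them. (I've always disliked the "just hand it to me" attitude, but it looks like I've fallen into that trap myself! Dammit, if only there were enough time!)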

Audio recording

Audio editing is split into two parts: 1. recording; 2. audio editing.

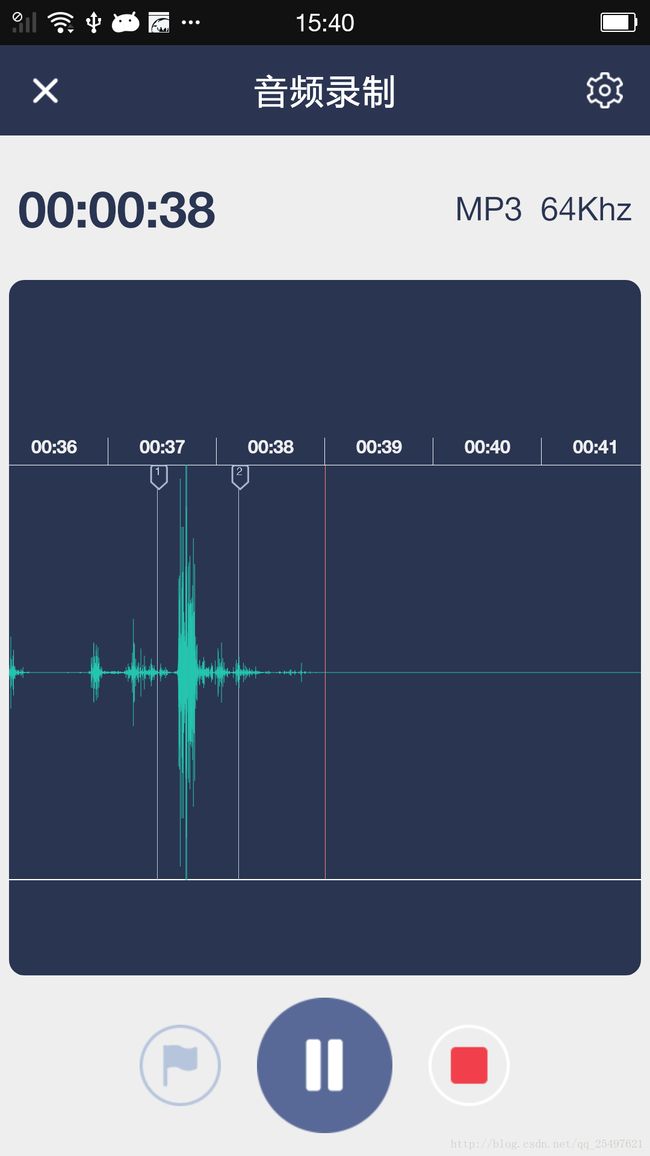

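The recording screen is shown in the image above (pretty cool, right? Haha!).

(It started as a demo I found on GitHub; it took me quite a while of modifications to get it working.)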

Main features:

(1) Audio recording

(2) Adding markers during recording

(3) Pausing and resuming recording

(4) Finishing the recording (converting PCM to WAV)

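Below is a brief analysis of how the UI is built.

Note: I didn't write the original source code; I only modified and extended it (please go easy on me)!

The canvas class: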

package com.jwzt.jwzt_procaibian.widget;

import android.content.Context;

import android.graphics.Canvas;

import android.graphics.Color;

import android.graphics.Paint;

import android.graphics.Rect;

import android.util.AttributeSet;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

/**

* A thin wrapper that initializes the SurfaceView

* @author tcx

*/

public class WaveSurfaceView extends SurfaceView implements SurfaceHolder.Callback{

private SurfaceHolder holder;

private int line_off;// top/bottom margin

public int getLine_off() {

return line_off;

}

public void setLine_off(int line_off) {

this.line_off = line_off;

}

public WaveSurfaceView(Context context, AttributeSet attrs) {

super(context, attrs);

this.holder = getHolder();

holder.addCallback(this);

}

/**

* @author tcx

* init surfaceview

*/

public void initSurfaceView( final SurfaceView sfv){

new Thread(){

public void run() {

Canvas canvas = sfv.getHolder().lockCanvas(

new Rect(0, 0, sfv.getWidth(), sfv.getHeight()));// key step: lock the canvas for drawing

if(canvas==null){

return;

}

//canvas.drawColor(Color.rgb(241, 241, 241));// clear the background

canvas.drawARGB(255, 42, 53, 82);

int height = sfv.getHeight()-line_off;

Paint paintLine =new Paint();

Paint centerLine =new Paint();

Paint circlePaint = new Paint();

circlePaint.setColor(Color.rgb(246, 131, 126));

paintLine.setColor(Color.rgb(255, 255, 255));

paintLine.setStrokeWidth(2);

circlePaint.setAntiAlias(true);

canvas.drawLine(sfv.getWidth()/2, 0, sfv.getWidth()/2, sfv.getHeight(), circlePaint);// vertical line

centerLine.setColor(Color.rgb(39, 199, 175));

canvas.drawLine(0, line_off/2, sfv.getWidth(), line_off/2, paintLine);// top line

canvas.drawLine(0, sfv.getHeight()-line_off/2-1, sfv.getWidth(), sfv.getHeight()-line_off/2-1, paintLine);// bottom line

canvas.drawLine(0, height*0.5f+line_off/2, sfv.getWidth() ,height*0.5f+line_off/2, centerLine);// center line

sfv.getHolder().unlockCanvasAndPost(canvas);// unlock the canvas and post the drawn frame

};

}.start();

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

initSurfaceView(this);

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

}

}

The class above is very simple: it just uses a SurfaceView as a drawing canvas.

Next, let's start drawing on that canvas.

First, initialize the recorder:

/**

* Initialize recording

*/

private void initAudio(){

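recBufSize = AudioRecord.getMinBufferSize(FREQUENCY,

CHANNELCONGIFIGURATION, AUDIOENCODING);// minimum buffer size in bytes, device-dependent (e.g. 1280); 20 ms of 16 kHz mono 16-bit audio is 640 bytes

audioRecord = new AudioRecord(AUDIO_SOURCE,// audio source, here the microphone

FREQUENCY, // 16000 Hz sample rate

CHANNELCONGIFIGURATION,// channel configuration

AUDIOENCODING,// audio encoding (sample format)

recBufSize);

waveCanvas = new WaveCanvas();// defined below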

waveCanvas.baseLine = waveSfv.getHeight() / 2;

waveCanvas.Start(audioRecord, recBufSize, waveSfv, mFileName, U.DATA_DIRECTORY, new Handler.Callback() {

@Override

public boolean handleMessage(Message msg) {

return true;

}

},(swidth-DensityUtil.dip2px(10))/2,this);

}
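The buffer comment above mixes durations and byte counts, so here is a small plain-Java sanity check. `PcmMath` and its methods are hypothetical helpers, not part of the project; they assume uncompressed PCM at 16 kHz, mono, 16-bit as used throughout this post. Keep in mind that `getMinBufferSize` returns bytes, while `WaveCanvas` later allocates `new short[recBufSize]`, so each `read` can request up to twice as many bytes as that minimum.

```java
// Hypothetical helper (not part of the original project) for PCM size arithmetic.
final class PcmMath {
    private PcmMath() {}

    /** Bytes per second of uncompressed PCM audio. */
    static int byteRate(int sampleRate, int channels, int bitsPerSample) {
        return sampleRate * channels * bitsPerSample / 8;
    }

    /** Number of bytes needed to hold the given duration. */
    static int bytesForMillis(int millis, int sampleRate, int channels, int bitsPerSample) {
        return (int) ((long) byteRate(sampleRate, channels, bitsPerSample) * millis / 1000);
    }

    /** Duration in milliseconds represented by the given number of bytes. */
    static double millisForBytes(int bytes, int sampleRate, int channels, int bitsPerSample) {
        return bytes * 1000.0 / byteRate(sampleRate, channels, bitsPerSample);
    }

    public static void main(String[] args) {
        // 16 kHz, mono, 16-bit: the parameters assumed throughout this post
        System.out.println(bytesForMillis(20, 16000, 1, 16));   // 640
        System.out.println(millisForBytes(1280, 16000, 1, 16)); // 40.0
    }
}
```

So at these settings a 20 ms frame is 640 bytes, and a 1280-byte buffer holds 40 ms of audio.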

OK, next let's look at how the waveform is drawn:

**Here is the WaveCanvas class**

package com.jwzt.jwzt_procaibian.widget;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Date;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.Set;

import android.content.Context;

import android.graphics.Bitmap;

import android.graphics.Canvas;

import android.graphics.Color;

import android.graphics.Paint;

import android.graphics.Paint.FontMetricsInt;

import android.graphics.Paint.Style;

import android.graphics.drawable.BitmapDrawable;

import android.graphics.Rect;

import android.media.AudioRecord;

import android.os.AsyncTask;

import android.os.Handler.Callback;

import android.os.Message;

import android.util.Log;

import android.view.SurfaceView;

import com.jwzt.jwzt_procaibian.R;

import com.jwzt.jwzt_procaibian.inter.CurrentPosInterface;

import com.jwzt.jwzt_procaibian.utils.Pcm2Wav;

/**

* Recording and file writing run on two separate threads to avoid stalling the UI

* Draws the recording waveform

* @author tcx

*

*/

public class WaveCanvas {

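private ArrayList<Short> inBuf = new ArrayList<Short>();// downsampled samples waiting to be drawn

private ArrayList<byte[]> write_data = new ArrayList<byte[]>();// PCM chunks waiting to be written to the file

public volatile boolean isRecording = false;// recording-thread control flag

private volatile boolean isWriting = false;// writing-thread control flag

private int line_off ;// top/bottom margin

public int rateX = 30;// keep one sample out of every rateX

public int rateY = 1; // Y-axis scale-down factor, default 1

public int baseLine = 0;// Y-axis baseline

private AudioRecord audioRecord;

int recBufSize;

private int marginRight=30;// distance between the waveform's drawing edge and the right side

private int draw_time = 1000 / 200;// minimum interval between two redraws, in ms

private float divider = 0.1f;// x spacing per sample in pixels: one sample every 0.1 px

long c_time;

private String savePcmPath ;// path of the saved PCM file

private String saveWavPath;// path of the saved WAV file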

private Paint circlePaint;

private Paint center;

private Paint paintLine;

private Paint mPaint;

private Context mContext;

private ArrayList markList=new ArrayList();

private int readsize;

private Map<Integer, Integer> markMap=new HashMap<Integer, Integer>();// marker index -> x position

private volatile boolean isPause=false;

private CurrentPosInterface mCurrentPosInterface;

private Paint progressPaint;

private Paint paint;

private Paint bottomHalfPaint;

private Paint darkPaint;

private Paint markTextPaint;

private Bitmap markIcon;

private int bitWidth;

private int bitHeight;

private int start;

/**

* Start recording

* @param audioRecord

* @param recBufSize

* @param sfv

* @param audioName

*/

public void Start(AudioRecord audioRecord, int recBufSize, SurfaceView sfv

,String audioName,String path,Callback callback,int width,Context context) {

this.audioRecord = audioRecord;

isRecording = true;

isWriting = true;

this.recBufSize = recBufSize;

savePcmPath = path + audioName +".pcm";

saveWavPath = path + audioName +".wav";

this.mContext=context;

init();

new Thread(new WriteRunnable()).start();// start a thread that writes the file

new RecordTask(audioRecord, recBufSize, sfv, mPaint,callback).execute();

this.marginRight=width;

}

public void init(){

circlePaint = new Paint();// paint for the circle and the vertical playhead line

circlePaint.setColor(Color.rgb(246, 131, 126));// color of the circle/playhead

center = new Paint();

center.setColor(Color.rgb(39, 199, 175));// paint color

center.setStrokeWidth(1);// stroke width

center.setAntiAlias(true);

center.setFilterBitmap(true);

center.setStyle(Style.FILL);

paintLine =new Paint();

paintLine.setColor(Color.rgb(255, 255, 255));

paintLine.setStrokeWidth(2);// stroke width

mPaint = new Paint();

mPaint.setColor(Color.rgb(39, 199, 175));// paint color

mPaint.setStrokeWidth(1);// stroke width

mPaint.setAntiAlias(true);

mPaint.setFilterBitmap(true);

mPaint.setStyle(Paint.Style.FILL);

// paints used for the markers

progressPaint=new Paint();

progressPaint.setColor(mContext.getResources().getColor(R.color.vine_green));

paint=new Paint();

bottomHalfPaint=new Paint();

darkPaint=new Paint();

darkPaint.setColor(mContext.getResources().getColor(R.color.dark_black));

bottomHalfPaint.setColor(mContext.getResources().getColor(R.color.hui));

markTextPaint=new Paint();

markTextPaint.setColor(mContext.getResources().getColor(R.color.hui));

markTextPaint.setTextSize(18);

paint.setAntiAlias(true);

paint.setDither(true);

paint.setFilterBitmap(true);

markIcon=((BitmapDrawable)mContext.getResources().getDrawable(R.drawable.edit_mark)).getBitmap();

bitWidth = markIcon.getWidth();

bitHeight = markIcon.getHeight();

}

/**

* Stop recording

*/

public void Stop() {

isRecording = false;

isPause=true;

audioRecord.stop();

}

/**

* pause recording audio

*/

public void pause(){

isPause=true;

}

/**

* restart recording audio

*/

public void reStart(){

isPause=false;

}

/**

* Clear the buffered data

*/

public void clear(){

inBuf.clear();// clear

}

/**

* Asynchronous recording task

* @author cokus

*

*/

class RecordTask extends AsyncTask {

private int recBufSize;

private AudioRecord audioRecord;

private SurfaceView sfv;// drawing surface

private Paint mPaint;// paint

private Callback callback;

private boolean isStart =false;

private Rect srcRect;

private Rect destRect;

private Rect bottomHalfBgRect;

public RecordTask(AudioRecord audioRecord, int recBufSize,

SurfaceView sfv, Paint mPaint,Callback callback) {

this.audioRecord = audioRecord;

this.recBufSize = recBufSize;

this.sfv = sfv;

line_off = ((WaveSurfaceView)sfv).getLine_off();

this.mPaint = mPaint;

this.callback = callback;

inBuf.clear();// clear

}

@Override

protected Object doInBackground(Object... params) {

try {

short[] buffer = new short[recBufSize];

audioRecord.startRecording();// start recording

while (isRecording) {

while(!isPause){

// read data from the microphone into the buffer

readsize = audioRecord.read(buffer, 0,

recBufSize);

synchronized (inBuf) {

for (int i = 0; i < readsize; i += rateX) {

inBuf.add(buffer[i]);

}

}

publishProgress();// update the UI on the main thread

if (readsize > 0) {// read() returns a negative error code on failure

synchronized (write_data) {

byte bys[] = new byte[readsize*2];

// convert each 16-bit sample to two bytes, low byte first (little-endian, as WAV expects)

for (int i = 0; i < readsize; i++) {

byte ss[] = getBytes(buffer[i]);

bys[i*2] =ss[0];

bys[i*2+1] = ss[1];

}

write_data.add(bys);

}

}

}

}

} catch (Throwable t) {

Message msg = new Message();

msg.arg1 =-2;

msg.obj=t.getMessage();

callback.handleMessage(msg);

} finally {

isWriting = false;// always let the writer thread finish, even if recording failed

}

return null;

}

@Override

protected void onProgressUpdate(Object... values) {

long time = new Date().getTime();

if(time - c_time >= draw_time){

ArrayList<Short> buf = new ArrayList<Short>();

synchronized (inBuf) {

if (inBuf.size() == 0)

return;

while(inBuf.size() > (sfv.getWidth()-marginRight) / divider){

inBuf.remove(0);

}

buf = (ArrayList<Short>) inBuf.clone();// take a snapshot to draw outside the lock

}

SimpleDraw(buf, sfv.getHeight()/2);// draw the buffered data

c_time = new Date().getTime();

}

super.onProgressUpdate(values);

}

public byte[] getBytes(short s)

{

byte[] buf = new byte[2];

for (int i = 0; i < buf.length; i++)

{

buf[i] = (byte) (s & 0x00ff);

s >>= 8;

}

return buf;

}

/**

* Draws the waveform area

*

* @param buf

* buffer of samples to draw

* @param baseLine

* Y-axis baseline

*/

void SimpleDraw(ArrayList<Short> buf, int baseLine) {

if (!isRecording)

return;

rateY = (65535 /2/ (sfv.getHeight()-line_off));

for (int i = 0; i < buf.size(); i++) {

byte bus[] = getBytes(buf.get(i));

buf.set(i, (short)((bus[1] << 8) | (bus[0] & 0xff)));// rebuild the sample from [low, high]; masking the low byte prevents sign extension

}

Canvas canvas = sfv.getHolder().lockCanvas(

new Rect(0, 0, sfv.getWidth(), sfv.getHeight()));// key step: lock the canvas for drawing

if(canvas==null)

return;

// canvas.drawColor(Color.rgb(241, 241, 241));// clear the background

canvas.drawARGB(255, 42, 53, 82);

start = (int) ((buf.size())* divider);

float py = baseLine;

float y;

if(sfv.getWidth() - start <= marginRight){// once the waveform reaches the reserved right margin

start = sfv.getWidth() -marginRight;// x coordinate of the drawing position

}

//TODO

canvas.drawLine(marginRight, 0, marginRight, sfv.getHeight(), circlePaint);// vertical playhead line

int height = sfv.getHeight()-line_off;

canvas.drawLine(0, line_off/2, sfv.getWidth(), line_off/2, paintLine);// top line

if (mCurrentPosInterface != null) {

mCurrentPosInterface.onCurrentPosChanged(start);

}

canvas.drawLine(0, height*0.5f+line_off/2, sfv.getWidth() ,height*0.5f+line_off/2, center);// center line

canvas.drawLine(0, sfv.getHeight()-line_off/2-1, sfv.getWidth(), sfv.getHeight()-line_off/2-1, paintLine);// bottom line

Map<Integer, Integer> newMarkMap=new HashMap<Integer, Integer>();

Iterator<Integer> iterator = markMap.keySet().iterator();

while(iterator.hasNext()){

int key=iterator.next();

int pos = markMap.get(key);

destRect=new Rect((int)(pos-bitWidth/4), 0, (int)(pos-bitWidth/4)+bitWidth/2, bitHeight/2);

canvas.drawBitmap(markIcon, null, destRect, null);

String text=(key+1)+"";

float textWidth = markTextPaint.measureText(text);

FontMetricsInt fontMetricsInt = markTextPaint.getFontMetricsInt();

int fontHeight=fontMetricsInt.bottom-fontMetricsInt.top;

canvas.drawText(text, (pos-textWidth/2), fontHeight-8, markTextPaint);

canvas.drawLine(pos-bitWidth/16+2, bitHeight/2-2,pos-bitWidth/16+2, sfv.getWidth()-bitHeight/2,bottomHalfPaint );

newMarkMap.put(key, pos-3);

}

markMap=newMarkMap;

for (int i = 0; i < buf.size(); i++) {

y =buf.get(i)/rateY + baseLine;// apply the Y scale and shift to the baseline

float x=(i) * divider;

if(sfv.getWidth() - (i-1) * divider <=marginRight){

x = sfv.getWidth()-marginRight;

}

canvas.drawLine(x, y, x,sfv.getHeight()-y, mPaint);// vertical line mirrored around the center: the waveform

}

sfv.getHolder().unlockCanvasAndPost(canvas);// unlock the canvas and post the drawn frame

}

}

/**

* Adds a marker at the current audio position

*/

public void addCurrentPostion(){

markMap.put(markMap.size(), start);

}

/**

* Clears all marker positions

*/

public void clearMarkPosition(){

markMap.clear();

}

/**

* Sets the listener for current-position changes

*/

public void setCurrentPostChangerLisener(CurrentPosInterface currentPosInterface){

mCurrentPosInterface = currentPosInterface;

}

/**

* Writes the file asynchronously

* @author cokus

*

*/

class WriteRunnable implements Runnable {

@Override

public void run() {

try {

FileOutputStream fos2wav = null;

File file2wav = null;

try {

file2wav = new File(savePcmPath);

if (file2wav.exists()) {

file2wav.delete();

}

fos2wav = new FileOutputStream(file2wav);// open an output stream to the PCM file

} catch (Exception e) {

e.printStackTrace();

}

while (isWriting || write_data.size() > 0) {

byte[] buffer = null;

synchronized (write_data) {

if(write_data.size() > 0){

buffer = write_data.get(0);

write_data.remove(0);

}

}

try {

if(buffer != null){

fos2wav.write(buffer);

fos2wav.flush();

}

} catch (IOException e) {

e.printStackTrace();

}

}

fos2wav.close();

Pcm2Wav p2w = new Pcm2Wav();// convert PCM to WAV; this really just prepends a 44-byte header

p2w.convertAudioFiles(savePcmPath, saveWavPath);

} catch (Throwable t) {

t.printStackTrace();

}

}

}

}
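Stripped of the Android drawing calls, the waveform logic in `RecordTask` and `SimpleDraw` comes down to two steps: keep one sample out of every `rateX`, then map each 16-bit sample to a y coordinate with `rateY = 65535 / 2 / (height - line_off)` and the baseline, drawing a vertical line from `y` to `height - y`. The Android-free sketch below reproduces only that arithmetic so it can be checked in isolation; `WaveformMath` and its methods are hypothetical names, and it is a simplified model rather than a replacement for the class above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, Android-free model of the waveform arithmetic used by WaveCanvas.
final class WaveformMath {
    private WaveformMath() {}

    /** Keeps one sample out of every rateX, like the loop in RecordTask.doInBackground. */
    static List<Short> decimate(short[] buffer, int readSize, int rateX) {
        List<Short> out = new ArrayList<>();
        for (int i = 0; i < readSize; i += rateX) {
            out.add(buffer[i]);
        }
        return out;
    }

    /** Y scale factor: how many sample units map to one pixel (integer math, as in SimpleDraw). */
    static int rateY(int viewHeight, int lineOff) {
        return 65535 / 2 / (viewHeight - lineOff);
    }

    /** Returns {x, yTop, yBottom} for sample i; the line is mirrored around the view's center. */
    static float[] lineFor(int i, short sample, float divider, int viewWidth, int viewHeight,
                           int marginRight, int rateY) {
        int baseLine = viewHeight / 2;
        float y = sample / rateY + baseLine; // integer division, as in SimpleDraw
        float x = i * divider;
        if (viewWidth - (i - 1) * divider <= marginRight) {
            x = viewWidth - marginRight;     // clamp at the playhead position
        }
        return new float[] {x, y, viewHeight - y};
    }
}
```

For example, with a 400 px view and `line_off = 40`, `rateY` is 91, so a sample of 910 is drawn from y = 210 to y = 190 around the center line at 200.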


OK! That wraps up the audio recording feature!
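`WaveCanvas` hands PCM chunks from the recording task to the writer thread through a synchronized `ArrayList` that the writer polls in a loop, which keeps a CPU core busy while no data is available. If you want to rework that part, a `java.util.concurrent.BlockingQueue` is the standard tool: the consumer blocks until data arrives, and an empty array can act as an end-of-stream marker. This is a swapped-in technique, not the author's code; the class name `PcmWriterPipeline` and the empty-array sentinel are assumptions. It also shows the sample-to-byte conversion done with `ByteBuffer` in little-endian order, which is what `getBytes` does by hand.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical alternative to the polled write_data list: a blocking producer/consumer pipeline.
final class PcmWriterPipeline {
    private static final byte[] END = new byte[0]; // sentinel marking the end of recording
    private final BlockingQueue<byte[]> queue = new LinkedBlockingQueue<>();
    private final Thread writer;

    PcmWriterPipeline(OutputStream out) {
        writer = new Thread(() -> {
            try {
                while (true) {
                    byte[] chunk = queue.take(); // blocks instead of spinning
                    if (chunk == END) break;
                    out.write(chunk);
                }
                out.flush();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } catch (IOException e) {
                e.printStackTrace();
            }
        });
        writer.start();
    }

    /** Converts the first count samples to little-endian bytes (low byte first, as in WAV). */
    static byte[] toLittleEndian(short[] samples, int count) {
        ByteBuffer bb = ByteBuffer.allocate(count * 2).order(ByteOrder.LITTLE_ENDIAN);
        for (int i = 0; i < count; i++) {
            bb.putShort(samples[i]);
        }
        return bb.array();
    }

    /** Called by the recording thread after each successful read. */
    void submit(short[] samples, int count) {
        if (count > 0) queue.add(toLittleEndian(samples, count));
    }

    /** Signals the end of recording and waits until everything has been written. */
    void finish() throws InterruptedException {
        queue.add(END);
        writer.join();
    }
}
```

In this design the recording loop would call `submit(buffer, readsize)` after each read and `finish()` once recording stops, after which the PCM file is complete and can be converted to WAV.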

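Oh, I almost forgot: there is also the PCM-to-WAV conversion, the `convertAudioFiles` method of the `Pcm2Wav` class. Here it is: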

public void convertAudioFiles(String src, String target) throws Exception

{

FileInputStream fis = new FileInputStream(src);

FileOutputStream fos = new FileOutputStream(target);

byte[] buf = new byte[1024 * 1000];

int size = fis.read(buf);

int PCMSize = 0;

while (size != -1)

{

PCMSize += size;

size = fis.read(buf);

}

fis.close();

WaveHeader header = new WaveHeader();

header.fileLength = PCMSize + (44 - 8);

header.FmtHdrLeth = 16;

header.BitsPerSample = 16;

header.Channels = 1;

header.FormatTag = 0x0001;

header.SamplesPerSec = 16000;

header.BlockAlign = (short) (header.Channels * header.BitsPerSample / 8);

header.AvgBytesPerSec = header.BlockAlign * header.SamplesPerSec;

header.DataHdrLeth = PCMSize;

byte[] h = header.getHeader();

assert h.length == 44;

//write header

fos.write(h, 0, h.length);

//write data stream

fis = new FileInputStream(src);

size = fis.read(buf);

while (size != -1)

{

fos.write(buf, 0, size);

size = fis.read(buf);

}

fis.close();

fos.close();

}

And the corresponding 44-byte header:

package com.jwzt.jwzt_procaibian.utils;

import java.io.ByteArrayOutputStream;

import java.io.IOException;

public class WaveHeader

{

public final char fileID[] = { 'R', 'I', 'F', 'F' };

public int fileLength;

public char wavTag[] = { 'W', 'A', 'V', 'E' };

public char FmtHdrID[] = { 'f', 'm', 't', ' ' };

public int FmtHdrLeth;

public short FormatTag;

public short Channels;

public int SamplesPerSec;

public int AvgBytesPerSec;

public short BlockAlign;

public short BitsPerSample;

public char DataHdrID[] = { 'd', 'a', 't', 'a' };

public int DataHdrLeth;

public byte[] getHeader() throws IOException

{

ByteArrayOutputStream bos = new ByteArrayOutputStream();

WriteChar(bos, fileID);

WriteInt(bos, fileLength);

WriteChar(bos, wavTag);

WriteChar(bos, FmtHdrID);

WriteInt(bos, FmtHdrLeth);

WriteShort(bos, FormatTag);

WriteShort(bos, Channels);

WriteInt(bos, SamplesPerSec);

WriteInt(bos, AvgBytesPerSec);

WriteShort(bos, BlockAlign);

WriteShort(bos, BitsPerSample);

WriteChar(bos, DataHdrID);

WriteInt(bos, DataHdrLeth);

bos.flush();

byte[] r = bos.toByteArray();

bos.close();

return r;

}

private void WriteShort(ByteArrayOutputStream bos, int s)

throws IOException

{

byte[] mybyte = new byte[2];

mybyte[1] = (byte) ((s << 16) >> 24);

mybyte[0] = (byte) ((s << 24) >> 24);

bos.write(mybyte);

}

private void WriteInt(ByteArrayOutputStream bos, int n) throws IOException

{

byte[] buf = new byte[4];

buf[3] = (byte) (n >> 24);

buf[2] = (byte) ((n << 8) >> 24);

buf[1] = (byte) ((n << 16) >> 24);

buf[0] = (byte) ((n << 24) >> 24);

bos.write(buf);

}

private void WriteChar(ByteArrayOutputStream bos, char[] id)

{

for (int i = 0; i < id.length; i++)

{

char c = id[i];

bos.write(c);

}

}

}
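`WaveHeader` assembles the little-endian fields with manual shifts. For comparison, here is a compact sketch that writes the same canonical 44-byte PCM header with `java.nio.ByteBuffer` in little-endian order. The class name `WavHeaders` is hypothetical; the field order and values mirror `convertAudioFiles` above (format tag 1 = PCM, fmt chunk size 16, RIFF size = data size + 36).

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical ByteBuffer-based equivalent of WaveHeader.getHeader() for PCM audio.
final class WavHeaders {
    private WavHeaders() {}

    static byte[] pcmHeader(int dataLength, int sampleRate, short channels, short bitsPerSample) {
        short blockAlign = (short) (channels * bitsPerSample / 8);
        ByteBuffer bb = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        bb.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        bb.putInt(dataLength + 36);            // RIFF chunk size = file size - 8
        bb.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        bb.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        bb.putInt(16);                         // fmt chunk size for PCM
        bb.putShort((short) 1);                // format tag: 1 = PCM
        bb.putShort(channels);
        bb.putInt(sampleRate);
        bb.putInt(sampleRate * blockAlign);    // average bytes per second
        bb.putShort(blockAlign);
        bb.putShort(bitsPerSample);
        bb.put("data".getBytes(StandardCharsets.US_ASCII));
        bb.putInt(dataLength);                 // data chunk size
        return bb.array();
    }
}
```

Calling `pcmHeader(pcmSize, 16000, (short) 1, (short) 16)` should produce the same bytes as the `WaveHeader` setup in `convertAudioFiles`.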

Well, that's about it for the recording side!

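Converting PCM to WAV really just means adding a 44-byte header. When merging, we need to update the header fields that store the length (the RIFF chunk size at offset 4 and the data chunk size at offset 40), and then use FFmpeg for format conversion. Trimming is, of course, a bit more involved: we need to work in frames. As mentioned in the code comments, one frame is 20 ms; with mono audio sampled at 16000 Hz and 16 bits per sample, each frame is 16000 × 0.02 × 2 = 640 bytes. When trimming, compute the total number of frames in the audio and cut at the corresponding frame positions. The error should not exceed 100 ms.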
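The merging and trimming code isn't published here, so the following is only a sketch of the two calculations just described, under stated assumptions: every input is a canonical WAV with exactly the 44-byte header written by `WaveHeader` (no extra chunks), all inputs share the same format (16 kHz, mono, 16-bit), and files are small enough to process in memory. `WavEdit`, `merge` and `trim` are hypothetical names. Merging concatenates the data sections and rewrites both length fields; trimming rounds the requested times down to 20 ms frame boundaries (640 bytes each). The FFmpeg conversion step is not shown.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Arrays;

// Hypothetical in-memory helpers; assume canonical 44-byte headers and identical formats.
final class WavEdit {
    static final int HEADER = 44;
    static final int FRAME_MS = 20;
    static final int FRAME_BYTES = 640; // 16000 Hz * 0.02 s * 1 channel * 2 bytes

    private WavEdit() {}

    /** Concatenates the PCM data of a and b and patches both length fields. */
    static byte[] merge(byte[] a, byte[] b) {
        int dataLen = (a.length - HEADER) + (b.length - HEADER);
        byte[] out = Arrays.copyOf(a, HEADER + dataLen);          // keeps a's header and data
        System.arraycopy(b, HEADER, out, a.length, b.length - HEADER);
        patchLengths(out, dataLen);
        return out;
    }

    /** Keeps the audio between startMs and endMs, aligned down to whole 20 ms frames. */
    static byte[] trim(byte[] wav, long startMs, long endMs) {
        int totalFrames = (wav.length - HEADER) / FRAME_BYTES;
        int first = (int) Math.max(0, Math.min(startMs / FRAME_MS, totalFrames));
        int last = (int) Math.max(0, Math.min(endMs / FRAME_MS, totalFrames));
        if (last < first) last = first;
        int dataLen = (last - first) * FRAME_BYTES;
        byte[] out = new byte[HEADER + dataLen];
        System.arraycopy(wav, 0, out, 0, HEADER);                 // reuse the original header
        System.arraycopy(wav, HEADER + first * FRAME_BYTES, out, HEADER, dataLen);
        patchLengths(out, dataLen);
        return out;
    }

    /** Rewrites the RIFF size (offset 4) and data size (offset 40), little-endian. */
    private static void patchLengths(byte[] wav, int dataLen) {
        ByteBuffer bb = ByteBuffer.wrap(wav).order(ByteOrder.LITTLE_ENDIAN);
        bb.putInt(4, dataLen + 36);
        bb.putInt(40, dataLen);
    }
}
```

With frame alignment as above, each cut point lands at most 20 ms before the requested time; real files with extra chunks (for example `LIST`) would need the header parsed rather than assumed to be 44 bytes.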

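I won't share the full source code here: the project is still in development and testing, it hasn't been adapted to different devices and screen sizes yet, and there are surely still plenty of bugs to fix. I hope this gives some ideas to anyone developing in this area; a lot of it still calls for your own thinking and study!

If anything here is wrong, please point it out so we can all improve together!

The next post will cover the UI and implementation of the trimming part!

GitHub repository (if you download it, a star would be a nice acknowledgement of the author's work, thanks):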

https://github.com/T-chuangxin/VideoMergeDemo