OpenCV Image Stitching: Multi-Image Stitching with Stitcher and Two-Image Stitching with SURF

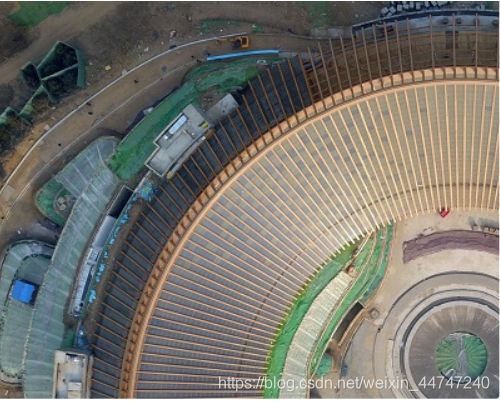

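One approach to image stitching is to call OpenCV's Stitcher. The call itself is relatively simple, but the algorithm behind it is quite complex, as its .cpp source shows: it runs matching repeatedly until it produces a result. In my experiments it was not very robust: stitching often failed outright, it was slow, and the error rate was fairly high. Here I used it to stitch four images, and the result was reasonably good.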

The code is as follows:

#include

The code for SURF-based stitching is as follows:

#include
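SURF-based two-image stitching typically matches keypoints between the images, estimates a 3x3 homography from the matches, and warps the second image into the first image's frame. The sketch below covers only the geometry of that last step, using the standard library alone: it projects the second image's corners through a homography and sizes the output canvas. All names (`Point2`, `Homography`, `project`, `canvasSize`) are hypothetical, and the sketch assumes the warped image lands at non-negative coordinates, for example to the right of the reference image.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Point2 { double x, y; };
struct Canvas { int width, height; };

// 3x3 homography stored row-major: H[0..2] is the first row, and so on.
using Homography = std::array<double, 9>;

// Apply H to (x, y) in homogeneous coordinates, then divide by w.
Point2 project(const Homography& H, Point2 p) {
    const double X = H[0] * p.x + H[1] * p.y + H[2];
    const double Y = H[3] * p.x + H[4] * p.y + H[5];
    const double W = H[6] * p.x + H[7] * p.y + H[8];
    return {X / W, Y / W};
}

// Canvas large enough for the reference image (kept at the origin) plus the
// second image warped by H. Assumption: warped coordinates are non-negative.
Canvas canvasSize(const Homography& H, int refW, int refH, int srcW, int srcH) {
    const double w = srcW;
    const double h = srcH;
    const Point2 corners[4] = {{0.0, 0.0}, {w, 0.0}, {w, h}, {0.0, h}};

    double maxX = refW;
    double maxY = refH;
    for (const Point2& c : corners) {
        const Point2 q = project(H, c);
        maxX = std::max(maxX, q.x);
        maxY = std::max(maxY, q.y);
    }
    return {static_cast<int>(std::ceil(maxX)), static_cast<int>(std::ceil(maxY))};
}
```

With OpenCV, the homography would normally come from `cv::findHomography` on the matched keypoints, and the resulting canvas size would be passed to `cv::warpPerspective` before copying the reference image into place.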