Implementing a simple producer and consumer with the librdkafka C++ API

1. Building librdkafka

Environment: Fedora 20, 32-bit

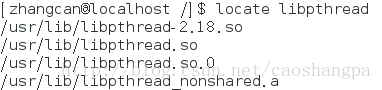

Dependencies: pthreads (required), zlib (optional), libssl-dev (optional), libsasl2-dev (optional)

First check whether pthreads is installed on your Linux system:

```shell
locate libpthread
```

I had already installed it, so I could go straight to building librdkafka. If it is missing, download the pthreads source and run configure, make and make install.

Build librdkafka with the following commands:

```shell
./configure
make
```

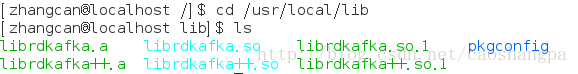

Then install it (as root):

```shell
make install
```

By default the headers are installed to /usr/local/include/librdkafka.

2. Producer

Create a Qt console project named KafkaProducer. The .pro file:

```qmake
#-------------------------------------------------
#
# Project created by QtCreator 2018-03-27T19:45:09
#
#-------------------------------------------------

QT -= gui core

TARGET = KafkaProducer
CONFIG += console
CONFIG -= app_bundle
TEMPLATE = app

SOURCES += main.cpp

INCLUDEPATH += /usr/local/include/librdkafka
LIBS += -L/usr/local/lib -lrdkafka
LIBS += -L/usr/local/lib -lrdkafka++
```

main.cpp:

```cpp
#include <csignal>
#include <cstdint>
#include <cstdio>
#include <cstdlib>
#include <iostream>
#include <string>

#include "rdkafkacpp.h"

// Cleared by the signal handler; a handler may only safely write sig_atomic_t
static volatile sig_atomic_t run = 1;

static void sigterm(int) {
    run = 0;
}

// Invoked from poll() with the final delivery status of each message
class ExampleDeliveryReportCb : public RdKafka::DeliveryReportCb {
public:
    void dr_cb(RdKafka::Message &message) {
        std::cout << "Message delivery for (" << message.len() << " bytes): "
                  << message.errstr() << std::endl;
        if (message.key())
            std::cout << "Key: " << *(message.key()) << ";" << std::endl;
    }
};

// Receives errors, statistics and log lines from librdkafka
class ExampleEventCb : public RdKafka::EventCb {
public:
    void event_cb(RdKafka::Event &event) {
        switch (event.type()) {
        case RdKafka::Event::EVENT_ERROR:
            std::cerr << "ERROR (" << RdKafka::err2str(event.err()) << "): "
                      << event.str() << std::endl;
            if (event.err() == RdKafka::ERR__ALL_BROKERS_DOWN)
                run = 0;
            break;
        case RdKafka::Event::EVENT_STATS:
            std::cerr << "\"STATS\": " << event.str() << std::endl;
            break;
        case RdKafka::Event::EVENT_LOG:
            fprintf(stderr, "LOG-%i-%s: %s\n",
                    event.severity(), event.fac().c_str(), event.str().c_str());
            break;
        default:
            std::cerr << "EVENT " << event.type()
                      << " (" << RdKafka::err2str(event.err()) << "): "
                      << event.str() << std::endl;
            break;
        }
    }
};

int main() {
    std::string brokers = "localhost";
    std::string errstr;
    std::string topic_str = "test";
    int32_t partition = RdKafka::Topic::PARTITION_UA;  // let the partitioner choose

    RdKafka::Conf *conf = RdKafka::Conf::create(RdKafka::Conf::CONF_GLOBAL);
    RdKafka::Conf *tconf = RdKafka::Conf::create(RdKafka::Conf::CONF_TOPIC);

    conf->set("bootstrap.servers", brokers, errstr);

    ExampleEventCb ex_event_cb;
    conf->set("event_cb", &ex_event_cb, errstr);

    signal(SIGINT, sigterm);
    signal(SIGTERM, sigterm);

    ExampleDeliveryReportCb ex_dr_cb;
    conf->set("dr_cb", &ex_dr_cb, errstr);

    RdKafka::Producer *producer = RdKafka::Producer::create(conf, errstr);
    if (!producer) {
        std::cerr << "Failed to create producer: " << errstr << std::endl;
        exit(1);
    }
    std::cout << "% Created producer " << producer->name() << std::endl;

    RdKafka::Topic *topic = RdKafka::Topic::create(producer, topic_str,
                                                   tconf, errstr);
    if (!topic) {
        std::cerr << "Failed to create topic: " << errstr << std::endl;
        exit(1);
    }

    // Every non-empty line read from stdin is sent as one message
    for (std::string line; run && std::getline(std::cin, line);) {
        if (line.empty()) {
            producer->poll(0);  // serve delivery reports and events
            continue;
        }
        RdKafka::ErrorCode resp =
            producer->produce(topic, partition,
                              RdKafka::Producer::RK_MSG_COPY /* Copy payload */,
                              const_cast<char *>(line.c_str()), line.size(),
                              NULL, NULL);
        if (resp != RdKafka::ERR_NO_ERROR)
            std::cerr << "% Produce failed: "
                      << RdKafka::err2str(resp) << std::endl;
        else
            std::cerr << "% Produced message (" << line.size() << " bytes)"
                      << std::endl;
        producer->poll(0);
    }

    run = 1;
    // Before exiting, wait until the messages still in the output queue are
    // delivered (another Ctrl-C aborts the wait)
    while (run && producer->outq_len() > 0) {
        std::cerr << "Waiting for " << producer->outq_len() << std::endl;
        producer->poll(1000);
    }

    delete conf;
    delete tconf;
    delete topic;
    delete producer;

    // Give librdkafka up to 5000 ms to release its internal resources
    RdKafka::wait_destroyed(5000);
    return 0;
}
```
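The producer ends its input loop from a SIGINT/SIGTERM handler that clears a global flag. A signal handler may portably write only to a `volatile std::sig_atomic_t` object, so that type is a safer choice for such a flag than a plain `bool`. The pattern can be tried out on its own without Kafka. This is a minimal sketch; the names `g_run`, `on_signal`, `install_stop_handlers` and `should_run` are hypothetical and not part of the program above.

```cpp
#include <csignal>

// Hypothetical stop flag; volatile sig_atomic_t is the type a signal
// handler is guaranteed to be able to write safely.
static volatile std::sig_atomic_t g_run = 1;

// The handler does nothing except clear the flag.
static void on_signal(int) {
    g_run = 0;
}

// Install the handler for Ctrl-C (SIGINT) and kill (SIGTERM).
void install_stop_handlers() {
    std::signal(SIGINT, on_signal);
    std::signal(SIGTERM, on_signal);
}

// Main loops test this instead of touching the flag directly.
bool should_run() {
    return g_run != 0;
}
```

`std::raise(SIGTERM)` runs the handler before it returns in a single-threaded program, so it can stand in for a real Ctrl-C when testing the loop's exit path.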

3. Consumer

Create a Qt console project named KafkaConsumer. The .pro file:

```qmake
#-------------------------------------------------
#
# Project created by QtCreator 2018-03-28T16:27:54
#
#-------------------------------------------------

QT -= gui core

TARGET = KafkaConsumer
CONFIG += console
CONFIG -= app_bundle
TEMPLATE = app

SOURCES += main.cpp

INCLUDEPATH += /usr/local/include/librdkafka
LIBS += -L/usr/local/lib -lrdkafka
LIBS += -L/usr/local/lib -lrdkafka++
```

main.cpp:

```cpp
#include <csignal>
#include <cstdint>
#include <cstdio>
#include <cstdlib>
#include <iostream>
#include <string>
#include <vector>

#include "rdkafkacpp.h"

// Cleared by the signal handler; a handler may only safely write sig_atomic_t
static volatile sig_atomic_t run = 1;
static bool exit_eof = true;   // stop once every partition has reached EOF
static int eof_cnt = 0;
static int partition_cnt = 0;
static int verbosity = 1;      // 1: payload, 2: + timestamp and key, 3: + offset
static long msg_cnt = 0;
static int64_t msg_bytes = 0;

static void sigterm(int) {
    run = 0;
}

// Receives errors, statistics, log lines and throttle notices from librdkafka
class ExampleEventCb : public RdKafka::EventCb {
public:
    void event_cb(RdKafka::Event &event) {
        switch (event.type()) {
        case RdKafka::Event::EVENT_ERROR:
            std::cerr << "ERROR (" << RdKafka::err2str(event.err()) << "): "
                      << event.str() << std::endl;
            if (event.err() == RdKafka::ERR__ALL_BROKERS_DOWN)
                run = 0;
            break;
        case RdKafka::Event::EVENT_STATS:
            std::cerr << "\"STATS\": " << event.str() << std::endl;
            break;
        case RdKafka::Event::EVENT_LOG:
            fprintf(stderr, "LOG-%i-%s: %s\n",
                    event.severity(), event.fac().c_str(), event.str().c_str());
            break;
        case RdKafka::Event::EVENT_THROTTLE:
            std::cerr << "THROTTLED: " << event.throttle_time() << "ms by "
                      << event.broker_name() << " id " << (int)event.broker_id()
                      << std::endl;
            break;
        default:
            std::cerr << "EVENT " << event.type()
                      << " (" << RdKafka::err2str(event.err()) << "): "
                      << event.str() << std::endl;
            break;
        }
    }
};

void msg_consume(RdKafka::Message *message, void *opaque) {
    switch (message->err()) {
    case RdKafka::ERR__TIMED_OUT:
        // No message arrived within the timeout; not an error
        break;

    case RdKafka::ERR_NO_ERROR:
        // A real message
        msg_cnt++;
        msg_bytes += message->len();
        if (verbosity >= 3)
            std::cerr << "Read msg at offset " << message->offset() << std::endl;
        RdKafka::MessageTimestamp ts;
        ts = message->timestamp();
        if (verbosity >= 2 &&
            ts.type != RdKafka::MessageTimestamp::MSG_TIMESTAMP_NOT_AVAILABLE) {
            std::string tsname = "?";
            if (ts.type == RdKafka::MessageTimestamp::MSG_TIMESTAMP_CREATE_TIME)
                tsname = "create time";
            else if (ts.type == RdKafka::MessageTimestamp::MSG_TIMESTAMP_LOG_APPEND_TIME)
                tsname = "log append time";
            std::cout << "Timestamp: " << tsname << " " << ts.timestamp << std::endl;
        }
        if (verbosity >= 2 && message->key()) {
            std::cout << "Key: " << *message->key() << std::endl;
        }
        if (verbosity >= 1) {
            // The payload is not NUL-terminated, so print exactly len() bytes
            printf("%.*s\n",
                   static_cast<int>(message->len()),
                   static_cast<const char *>(message->payload()));
        }
        break;

    case RdKafka::ERR__PARTITION_EOF:
        // Reached the end of a partition
        if (exit_eof && ++eof_cnt == partition_cnt) {
            std::cerr << "%% EOF reached for all " << partition_cnt
                      << " partition(s)" << std::endl;
            run = 0;
        }
        break;

    case RdKafka::ERR__UNKNOWN_TOPIC:
    case RdKafka::ERR__UNKNOWN_PARTITION:
        std::cerr << "Consume failed: " << message->errstr() << std::endl;
        run = 0;
        break;

    default:
        // Other errors
        std::cerr << "Consume failed: " << message->errstr() << std::endl;
        run = 0;
    }
}

class ExampleConsumeCb : public RdKafka::ConsumeCb {
public:
    void consume_cb(RdKafka::Message &msg, void *opaque) {
        msg_consume(&msg, opaque);
    }
};

int main() {
    std::string brokers = "localhost";
    std::string errstr;
    std::string topic_str = "test";
    std::vector<std::string> topics;
    std::string group_id = "101";

    RdKafka::Conf *conf = RdKafka::Conf::create(RdKafka::Conf::CONF_GLOBAL);
    RdKafka::Conf *tconf = RdKafka::Conf::create(RdKafka::Conf::CONF_TOPIC);

    // group.id must be set
    if (conf->set("group.id", group_id, errstr) != RdKafka::Conf::CONF_OK) {
        std::cerr << errstr << std::endl;
        exit(1);
    }

    topics.push_back(topic_str);

    // bootstrap.servers can be replaced with metadata.broker.list
    conf->set("bootstrap.servers", brokers, errstr);

    ExampleConsumeCb ex_consume_cb;
    conf->set("consume_cb", &ex_consume_cb, errstr);

    ExampleEventCb ex_event_cb;
    conf->set("event_cb", &ex_event_cb, errstr);

    conf->set("default_topic_conf", tconf, errstr);

    signal(SIGINT, sigterm);
    signal(SIGTERM, sigterm);

    RdKafka::KafkaConsumer *consumer = RdKafka::KafkaConsumer::create(conf, errstr);
    if (!consumer) {
        std::cerr << "Failed to create consumer: " << errstr << std::endl;
        exit(1);
    }
    std::cout << "% Created consumer " << consumer->name() << std::endl;

    RdKafka::ErrorCode err = consumer->subscribe(topics);
    if (err) {
        std::cerr << "Failed to subscribe to " << topics.size() << " topics: "
                  << RdKafka::err2str(err) << std::endl;
        exit(1);
    }

    while (run) {
        // If no message arrives within 5000 ms, consume() returns a message
        // whose err() is RdKafka::ERR__TIMED_OUT
        RdKafka::Message *msg = consumer->consume(5000);
        msg_consume(msg, NULL);
        delete msg;
    }

    consumer->close();

    delete conf;
    delete tconf;
    delete consumer;

    std::cerr << "% Consumed " << msg_cnt << " messages ("
              << msg_bytes << " bytes)" << std::endl;

    // Wait for rdkafka to clean up its resources before the application exits
    RdKafka::wait_destroyed(5000);
    return 0;
}
```
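The `ERR__PARTITION_EOF` branch stops the consumer once `++eof_cnt == partition_cnt`. Nothing in this program assigns `partition_cnt`, so it stays 0 and that condition never becomes true; it would have to be set to the number of assigned partitions, for example from a rebalance callback. A plain counter also counts the same partition twice if it reports EOF again after new messages arrive. In addition, librdkafka 1.0 and later only emits this event when `enable.partition.eof` is set to `true`. Below is a minimal sketch of a set-based tracker that avoids the double counting; `EofTracker` and its methods are hypothetical and not part of librdkafka.

```cpp
#include <cstddef>
#include <cstdint>
#include <set>
#include <string>
#include <utility>

// Hypothetical helper: remembers which (topic, partition) pairs are at EOF.
class EofTracker {
public:
    // Call whenever the assignment changes (e.g. from a rebalance callback).
    void reset(std::size_t assigned) {
        assigned_ = assigned;
        at_eof_.clear();
    }

    // Call on ERR__PARTITION_EOF; returns true once every assigned
    // partition is at EOF. A repeated EOF for the same partition is
    // stored only once in the set.
    bool on_eof(const std::string &topic, std::int32_t partition) {
        at_eof_.insert(std::make_pair(topic, partition));
        return assigned_ > 0 && at_eof_.size() == assigned_;
    }

    // Call for every real message: that partition is no longer at EOF.
    void on_message(const std::string &topic, std::int32_t partition) {
        at_eof_.erase(std::make_pair(topic, partition));
    }

private:
    std::size_t assigned_ = 0;
    std::set<std::pair<std::string, std::int32_t>> at_eof_;
};
```

Inside `msg_consume` one would pass `message->topic_name()` and `message->partition()` to `on_message` for real messages and to `on_eof` for `ERR__PARTITION_EOF`, clearing `run` when `on_eof` returns `true`.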

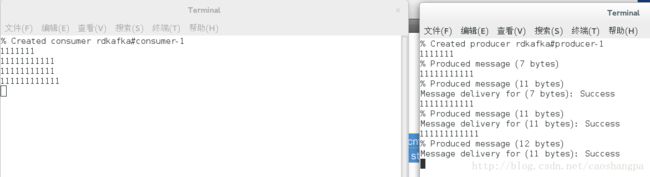

First start the ZooKeeper and Kafka services (see my post "Building and Using Kafka"), then start the producer and the consumer.

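The producer loops waiting for user input: type a line and press Enter, and it is published as a message. The consumer then prints the message it received.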
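The producer's input loop treats each non-empty line on standard input as one message and only calls `poll(0)` for empty lines. That logic can be checked without a broker by collecting the payloads instead of producing them. This is a sketch; `collect_payloads` is a hypothetical helper, not part of librdkafka or of the programs above.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for the producer's input loop: instead of calling
// producer->produce(), record the payload each line would become.
std::vector<std::string> collect_payloads(std::istream &in) {
    std::vector<std::string> payloads;
    for (std::string line; std::getline(in, line);) {
        if (line.empty())
            continue;              // the producer only calls poll(0) here
        payloads.push_back(line);  // one line becomes one Kafka message
    }
    return payloads;
}

// Convenience overload that feeds the loop from a string.
std::vector<std::string> collect_payloads(const std::string &text) {
    std::istringstream in(text);
    return collect_payloads(in);
}
```

So typing `hello`, an empty line and `kafka` at the producer sends two messages, which the consumer prints as two separate lines.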