梯度下降(反向传播)python实现

目录

- 各个层的建立

- 激活函数层

- ReLU函数

- Sigmoid层

- Affine层

- Softmax-with-Loss 层

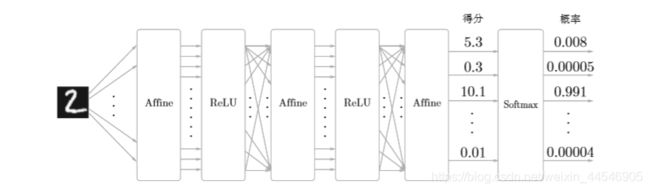

- 实例(两层神经网络)

数值分析的方法虽然简单但计算量过大,相较之下,BP更加高效

各个层的建立

- 在数值分析方法中整个神经网络由一个类来实现

- 而BP反向传播每个隐层都有两个类(Affine和激活函数),输出层是softmax-with-Loss

激活函数层

ReLU函数

class Relu:

def __init__(self):

self.mask = None

def forward(self, x):

self.mask = (x <= 0)

out = x.copy()

out[self.mask] = 0

return out

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

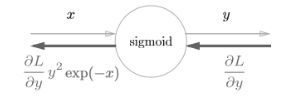

Sigmoid层

解析性求导

class Sigmoid:

def __init__(self):

self.out = None

def forward(self, x):

out = 1 / (1 + np.exp(-x))

self.out = out

return out

def backward(self, dout):

dx = dout * (1.0 - self.out) * self.out

return dx

和数值分析法不同:存放权值参数的类,数值分析是整个神经网络建一个类

BP:每个隐层和输出层分别建一个类

在这里插入代码片Affine层

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.dW = None

self.db = None

def forward(self, x):

self.x = x

out = np.dot(x, self.W) + self.b

return out

def backward(self, dout):

dx = np.dot(dout, self.W.T) #分配到输入的梯度

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

return dx #用到下一步成为往前一层的梯度头头

Softmax-with-Loss 层

输出层:反向传播时,梯度输出的时

这个结果只有两种配合才能做到:

- softmax+交叉熵误差

- 恒等函数+平方差误差

class SoftmaxWithLoss:

def __init__(self):

self.loss = None # 损失

self.y = None # softmax的输出

self.t = None # 监督数据(one-hot vector)

def forward(self, x, t):

self.t = t

self.y = softmax(x)

self.loss = cross_entropy_error(self.y, self.t)

return self.loss

def backward(self, dout=1):

batch_size = self.t.shape[0]

dx = (self.y - self.t) / batch_size

return dx

实例(两层神经网络)

导入库

import sys, os

sys.path.append(os.pardir)

import numpy as np

from common.layers import *

from common.gradient

import numerical_gradient

from collections import OrderedDict

类的定义

class TwoLayerNet:

def __init__(self, input_size, hidden_size, output_size, weight_init_std=0.01):

# 初始化权重

self.params = {}

self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)

self.params['b1'] = np.zeros(hidden_size)

self.params['W2'] = weight_init_std * np.random.randn(hidden_size, output_size)

self.params['b2'] = np.zeros(output_size)

# 生成层

self.layers = OrderedDict()

#这个字典定义的方法和上面的比,它是有顺序的,按照存入的顺序存放,因为下面要在循环连顺序执行

self.layers['Affine1'] = Affine(self.params['W1'], self.params['b1'])

self.layers['Relu1'] = Relu()

self.layers['Affine2'] = Affine(self.params['W2'], self.params['b2'])

self.lastLayer = SoftmaxWithLoss()

def predict(self, x):

for layer in self.layers.values(): #字典中的所有值

x = layer.forward(x)

return x

# x:输入数据, t:监督数据

def loss(self, x, t):

y = self.predict(x)

return self.lastLayer.forward(y, t)

def accuracy(self, x, t):

y = self.predict(x)

y = np.argmax(y, axis=1)

if t.ndim != 1 :

t = np.argmax(t, axis=1)

accuracy = np.sum(y == t) / float(x.shape[0])

return accuracy

# 数值分析的方法

def numerical_gradient(self, x, t):

loss_W = lambda W: self.loss(x, t)

grads = {}

grads['W1'] = numerical_gradient(loss_W, self.params['W1'])

grads['b1'] = numerical_gradient(loss_W, self.params['b1'])

grads['W2'] = numerical_gradient(loss_W, self.params['W2'])

grads['b2'] = numerical_gradient(loss_W, self.params['b2'])

return grads

#反向转播的方法

def gradient(self, x, t):

# forward

self.loss(x, t)

# backward

dout = 1

dout = self.lastLayer.backward(dout)

layers = list(self.layers.values())

layers.reverse() #反转,制造反向传播的顺序

for layer in layers:

dout = layer.backward(dout)

# 设定

grads = {}

grads['W1'] = self.layers['Affine1'].dW

grads['b1'] = self.layers['Affine1'].db

grads['W2'] = self.layers['Affine2'].dW

grads['b2'] = self.layers['Affine2'].db

return grads

调用

import sys, os

sys.path.append(os.pardir)

import numpy as np

from dataset.mnist import load_mnist

from two_layer_net import TwoLayerNet

# 读入数据

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True, one_hot_label=True)

network = TwoLayerNet(input_size=784, hidden_size=50, output_size=10)

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.1

#观察趋势画图用的

train_loss_list = []

train_acc_list = []

test_acc_list = []

iter_per_epoch = max(train_size / batch_size, 1)

for i in range(iters_num):

batch_mask = np.random.choice(train_size, batch_size)

x_batch = x_train[batch_mask]

t_batch = t_train[batch_mask]

# 通过误差反向传播法求梯度

grad = network.gradient(x_batch, t_batch)

# 更新权值

for key in ('W1', 'b1', 'W2', 'b2'):

network.params[key] -= learning_rate * grad[key]

loss = network.loss(x_batch, t_batch)

train_loss_list.append(loss)

#评估精度

if i % iter_per_epoch == 0:

train_acc = network.accuracy(x_train, t_train)

test_acc = network.accuracy(x_test, t_test)

train_acc_list.append(train_acc)

test_acc_list.append(test_acc)

print(train_acc, test_acc)