我是如何爬取全国机票和航班信息的

| 我会两种语言,一种写给程序执行,一种说给你听 |

文章导航

- 前言:

- 目标:

- Ajax介绍:

- 起始URL:

- 明确爬取数据信息:

- 添加token

- 先获取一天一个城市的航班信息

- 获取Json 信息:

- Json 提取信息

- 未来40天爬取

- 多个城市航班信息

- 写入 CSV

- 完整代码

- 结果展示

- 最后声明:

前言:

因为疫情原因,在家闲的无聊,于是看了一下厦门飞往武汉的机票只要200元,于是我诞生了一个爬取机票的念头

目标:

爬取未来40天全国飞往厦门的机票价格及航班信息

Ajax介绍:

AJAX = Asynchronous JavaScript and XML(异步的 JavaScript 和 XML)。

AJAX 是一种在无需重新加载整个网页的情况下,能够更新部分网页的技术。

起始URL:

https://flights.ctrip.com/itinerary/oneway/sjw-xmn?date=2020-03-25

明确爬取数据信息:

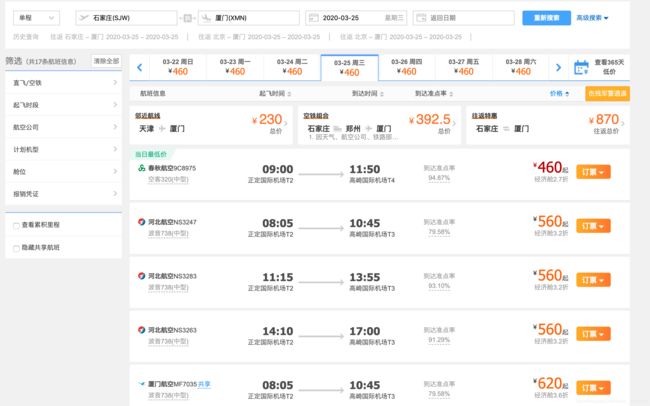

通过 网页检查–> network --> XHR 头部 可以看到机票信息的 api

https://flights.ctrip.com/itinerary/api/12808/products

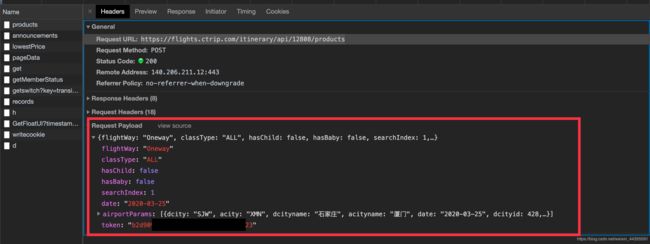

这个api网址是无法访问的,不过依旧有数据传送,我们只能通过模拟头部请求获取(payload是一种以JSON格式进行数据传输的一种方式)

添加token

有小伙伴说不知道token(令牌)在哪,这个图片最下面的一行就是你的令牌了,如果不添加token,那么我们会爬了个寂寞

发现有一个products 的文件 (如果没有 请尝试 F5 刷新页面)

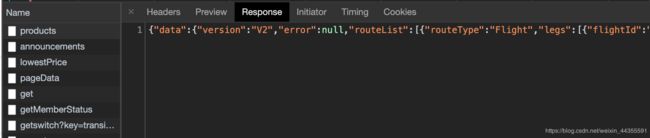

我们可以看到Json数据都在response当中,也就是我们想要的航班信息,机票价格都是通过这个文件传送的.

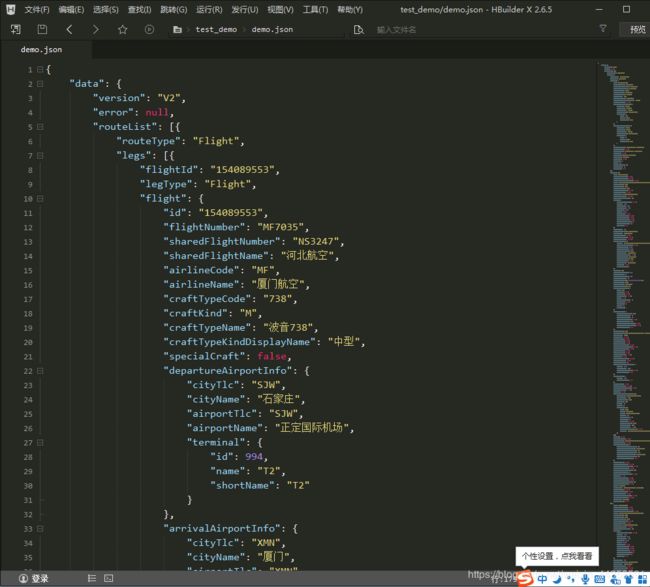

我们复制这行Json数据在其他软件展开便于观察内容,(或者网页搜索:Json在线解析)

首先是航班信息:

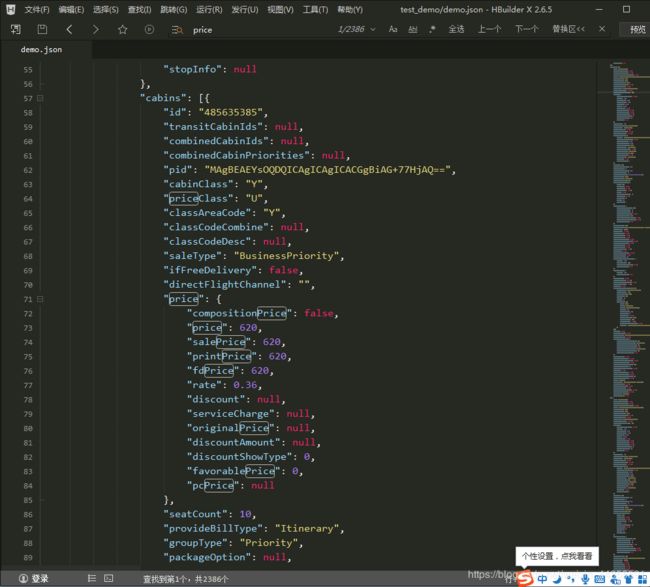

往下有机票价格:

我们看到机票价格分了很多种类,有售价,打折的价格,我们就爬取第一个price就好了,经过观察大部分价格都是一样的

我们已经明确的要爬取的信息了, 接下来就开始抓取数据吧

先获取一天一个城市的航班信息

获取Json 信息:

import requests

import json

from fake_useragent import UserAgent

if __name__ == "__main__":

url = "https://flights.ctrip.com/itinerary/api/12808/products/oneway/sjw,sjw-xmn?date=2020-03-25"

# 这里的url 必须写全!!!

headers = {

"User-Agent": '{}'.format(UserAgent().random), # 构造随机请求头

"Referer": "https://flights.ctrip.com/itinerary/oneway/sjw-xmn?date=2020-03-25",

"Content-Type": "application/json"

}

request_payload = {

"flightWay": "Oneway",

"classType": "ALL",

"hasChild": False,

"hasBaby": False,

"searchIndex": 1,

"airportParams": [

{"dcity": "SJW", "acity": "XMN", "dcityname": "石家庄", "acityname": "厦门", "date": "2020-03-25", "dcityid": 428}

]

"token": 从头部获取的token,写在这里

}

# post请求

response = requests.post(url, data=json.dumps(request_payload), headers=headers).text

print(response)

Json 提取信息

# post请求

response = requests.post(url, data=json.dumps(request_payload), headers=headers, timeout=30).text

# 避免爬取过快 设置延迟

# json.dumps 将 Python 对象编码成 JSON 字符串

routeList = json.loads(response).get('data').get('routeList')

# json.loads 将已编码的 JSON 字符串解码为 Python 对象

# 依次读取每条信息

for route in routeList:

# 判断是否有信息,有时候没有会报错

if len(route.get('legs')) == 1:

legs = route.get('legs')

flight = legs[0].get('flight')

# 提取想要的信息

airlineName = flight.get('airlineName')

flightNumber = flight.get('flightNumber')

departureDate = flight.get('departureDate')

arrivalDate = flight.get('arrivalDate')

departureCityName = flight.get('departureAirportInfo').get('cityName')

departureAirportName = flight.get('departureAirportInfo').get('airportName')

arrivalCityName = flight.get('arrivalAirportInfo').get('cityName')

arrivalAirportName = flight.get('arrivalAirportInfo').get('airportName')

print(airlineName, "\t",

flightNumber, "\t",

price, "\t",

departureDate, "\t",

arrivalDate, "\t",

craftTypeName, "\t",

departureCityName, "\t",

departureAirportName, "\t",

departureterminal, "\t",

arrivalCityName, "\t",

arrivalAirportName, "\t",

arrivalterminal, )

else:

pass

然而我们只获取了一天的数据是并没有什么参考价值的,接下来我们获取未来40天的航班信息

未来40天爬取

首先我们先构造一个日期遍历的方法

def gen_dates(start_date, day_counts):

next_day = timedelta(days=1) # timedalte 是datetime中的一个对象,该对象表示两个时间的差值,day=1表示相差一天

for i in range(day_counts): # 从起始时间的现在

yield start_date + next_day * i

def get_date_list(start_date):

"""

:param start_date: 开始时间

:return: 开始时间未来40天后的日期列表

"""

if start_date < datetime.datetime.now():

start = datetime.datetime.now()

else:

start = start_date

end = start + datetime.timedelta(days=40) # 爬取未来一个月的机票

data = []

for d in gen_dates(start, ((end - start).days)):

data.append(d.strftime("%Y-%m-%d"))

return date

if __name__ == "__main__":

start_date = datetime.datetime.strptime("2020-03-25", "%Y-%m-%d") # 类型为 返回的data是一个日期列表,这样我们就能自定义起始日期,获取未来40天的航班信息!

当然,一个城市怎么够呢, 我们可以根据不同出发城市的头部获取更多的航班信息

多个城市航班信息

我们只需要把信息放在一个盒子里后面通过 format自动添加就可

cities_data = [

{"dcity": "SJW", "acity": "XMN", "dcityname": "石家庄", "acityname": "厦门", "date": "{}", "dcityid": 428, "token": "*********************"},

{"dcity": "BJS", "acity": "XMN", "dcityname": "北京", "acityname": "厦门", "date": "{}", "dcityid": 1, "token": "**********************"},

]

写入 CSV

path = "/home/liuyang/Spider/Scrapy_Project/ScrapyS/Airtickets/TO_XMN{}.csv".format(start_date)

# 创建csv文件对象

with open(path, "a+") as f:

writer = csv.writer(f, dialect="excel")

# 基于文件对象构建 csv写入对象

csv_write = csv.writer(f)

csv_data = [airlineName, flightNumber,

departureCityName, departureAirportName, departureterminal, departureDate,

arrivalDate, arrivalCityName, arrivalAirportName, arrivalterminal,

price, craftTypeName]

csv_write.writerow(csv_data)

f.close()

完整代码

import csv

import requests

import json

import datetime

from datetime import timedelta

from fake_useragent import UserAgent

def gen_dates(start_date, day_counts):

next_day = timedelta(days=1) # timedalte 是datetime中的一个对象,该对象表示两个时间的差值,day=1表示相差一天

for i in range(day_counts): # 从起始时间的现在

yield start_date + next_day * i

def get_date_list(start_date):

"""

:param start_date: 开始时间

:return: 开始时间未来40天后的日期列表

"""

if start_date < datetime.datetime.now():

start = datetime.datetime.now()

else:

start = start_date

end = start + datetime.timedelta(days=40) # 爬取未来一个月的机票

data = []

for d in gen_dates(start, ((end - start).days)):

data.append(d.strftime("%Y-%m-%d"))

return data

cities_data = [

{"dcity": "SJW", "acity": "XMN", "dcityname": "石家庄", "acityname": "厦门", "date": "{}", "dcityid": 428, "token": "********你的token令牌************"},

{"dcity": "BJS", "acity": "XMN", "dcityname": "北京", "acityname": "厦门", "date": "{}", "dcityid": 1, "token": "***********************"},

]

if __name__ == "__main__":

start_date = datetime.datetime.strptime("2020-03-25", "%Y-%m-%d") # 结果展示

这里还有简单的数据分析啦

https://blog.csdn.net/weixin_44355591/article/details/105008238

最后欢迎各位访问我的博客网站:wangwanghub.com

最后声明:

本篇仅供参考学习,如若用于商业用途,后果自负.

如果本篇文章涉及了某平台的利益,请联系我,我立马删除,我贼怂的