FFmpeg4Android: Decoding Video Files

4 FFmpeg Decoding

4.1 Video Decoding Flow

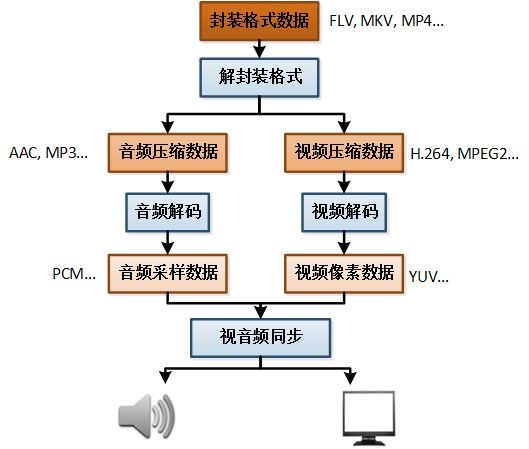

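a) Video playback flow

To play a video file, a player goes through the following steps: protocol handling, demuxing, audio/video decoding, and audio/video synchronization. When playing a local file, no protocol handling is needed, which leaves demuxing, audio/video decoding, and audio/video synchronization. The process is shown in the figure:

(Reference: the blog post "[总结]视音频编解码技术零基础学习方法" by 雷神 (Lei Xiaohua).)

Of these steps, decoding is the core. This chapter mainly covers the video decoding and transcoding flow.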

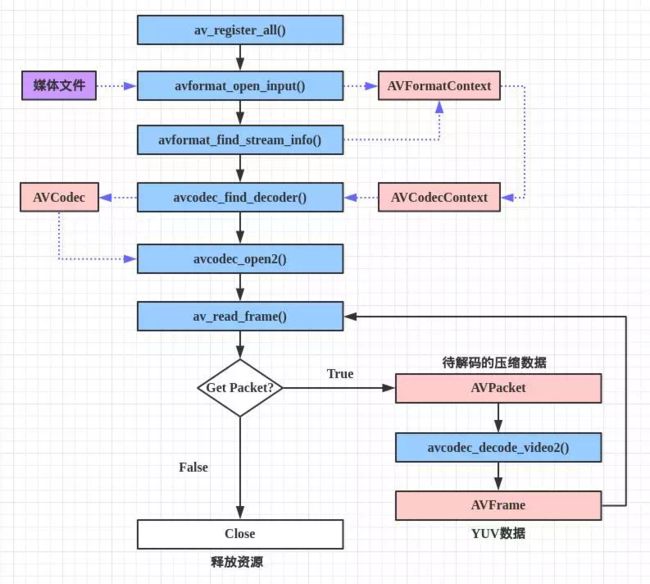

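b) Decoding flow

Decoding always follows the same fixed sequence of steps, so it is enough to call the functions in this order. Each function does the following:

av_register_all(): registers all components

avformat_open_input(): opens the input video file

avformat_find_stream_info(): reads the stream information of the video file

avcodec_find_decoder(): finds the decoder

avcodec_open2(): opens the decoder

av_read_frame(): reads one frame of compressed data from the input file

avcodec_decode_video2(): decodes one frame of compressed data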

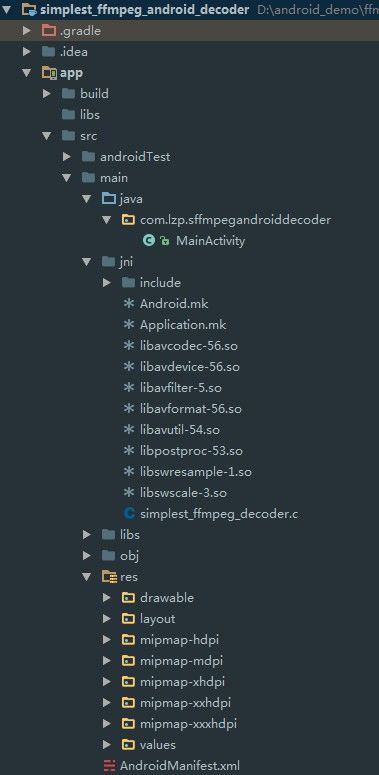

4.2 Decoding Example

This example converts an .mp4 file into a .yuv file by decoding its video stream and writing out the raw frames.

Java side, MainActivity.java:

    package com.lzp.sffmpegandroiddecoder;

    import android.app.Activity;
    import android.os.Bundle;
    import android.os.Environment;
    import android.util.Log;
    import android.view.View;
    import android.view.View.OnClickListener;
    import android.widget.Button;
    import android.widget.EditText;

    public class MainActivity extends Activity {

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);

            Button startButton = (Button) this.findViewById(R.id.button_start);
            final EditText urlEdittext_input = (EditText) this.findViewById(R.id.input_url);
            final EditText urlEdittext_output = (EditText) this.findViewById(R.id.output_url);

            startButton.setOnClickListener(new OnClickListener() {
                public void onClick(View arg0) {
                    // Both file names are resolved relative to the external storage root.
                    String folderurl = Environment.getExternalStorageDirectory().getPath();

                    String urltext_input = urlEdittext_input.getText().toString();
                    String inputurl = folderurl + "/" + urltext_input;
                    Log.e("path", inputurl);

                    String urltext_output = urlEdittext_output.getText().toString();
                    String outputurl = folderurl + "/" + urltext_output;

                    Log.i("inputurl", inputurl);
                    Log.i("outputurl", outputurl);

                    // Note: this call runs on the UI thread and blocks it until decoding finishes.
                    decode(inputurl, outputurl);
                }
            });
        }

        // JNI
        public native int decode(String inputurl, String outputurl);

        // Load the FFmpeg libraries first, then the JNI library that depends on them.
        static {
            System.loadLibrary("avutil-54");
            System.loadLibrary("swresample-1");
            System.loadLibrary("avcodec-56");
            System.loadLibrary("avformat-56");
            System.loadLibrary("swscale-3");
            System.loadLibrary("postproc-53");
            System.loadLibrary("avfilter-5");
            System.loadLibrary("avdevice-56");
            System.loadLibrary("sffdecoder");
        }
    }

The C source is located at jni/simplest_ffmpeg_decoder.c and is responsible for decoding the *.mp4 file. Following the video decoding flow from the previous section, the implementation is as follows:

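    /* ... the beginning of this listing (the #include lines, the JNI entry
       point, the variable declarations, and the calls that open the input
       file) is not present in the text ... */

        // Find the first video stream.
        for(i=0; i<pFormatCtx->nb_streams; i++){
            if(pFormatCtx->streams[i]->codec->codec_type==AVMEDIA_TYPE_VIDEO){
                videoindex=i;
                break;
            }
        }
        if(videoindex==-1){
            LOGE("Couldn't find a video stream.\n");
            return -1;
        }

        // Find and open the decoder for that stream.
        pCodecCtx=pFormatCtx->streams[videoindex]->codec;
        pCodec=avcodec_find_decoder(pCodecCtx->codec_id);
        if(pCodec==NULL){
            LOGE("Couldn't find Codec.\n");
            return -1;
        }
        if(avcodec_open2(pCodecCtx, pCodec,NULL)<0){
            LOGE("Couldn't open codec.\n");
            return -1;
        }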

        // pFrame receives the decoded picture; pFrameYUV holds the YUV420P copy.
        pFrame=av_frame_alloc();
        pFrameYUV=av_frame_alloc();
        out_buffer=(unsigned char *)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P,
                pCodecCtx->width, pCodecCtx->height, 1));
        av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer,
                AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);

        packet=(AVPacket *)av_malloc(sizeof(AVPacket));

        // Converts from the decoder's pixel format to YUV420P at the same resolution.
        img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height,
                pCodecCtx->pix_fmt,
                pCodecCtx->width, pCodecCtx->height,
                AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);

        // Append at the current end of info. Passing info as both the destination
        // and an argument of sprintf() is undefined behavior. strlen() needs <string.h>.
        sprintf(info, "[Input ]%s\n", input_str);
        sprintf(info+strlen(info), "[Output ]%s\n", output_str);
        sprintf(info+strlen(info), "[Format ]%s\n", pFormatCtx->iformat->name);
        sprintf(info+strlen(info), "[Codec ]%s\n", pCodecCtx->codec->name);
        sprintf(info+strlen(info), "[Resolution]%dx%d\n", pCodecCtx->width,pCodecCtx->height);

        fp_yuv=fopen(output_str,"wb+");
        if(fp_yuv==NULL){
            LOGE("Cannot open output file.\n");
            return -1;
        }

        frame_cnt=0;
        time_start = clock();

        while(av_read_frame(pFormatCtx, packet)>=0){
            // Only video packets are handled here.
            if(packet->stream_index==videoindex){
                ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
                if(ret < 0){
                    LOGE("Decode Error.\n");
                    return -1;
                }
                if(got_picture){
                    // Convert the decoded picture to YUV420P.
                    sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data,
                            pFrame->linesize, 0, pCodecCtx->height,
                            pFrameYUV->data, pFrameYUV->linesize);

                    // Write the planes back to back; U and V are each a quarter of Y.
                    y_size=pCodecCtx->width*pCodecCtx->height;
                    fwrite(pFrameYUV->data[0],1,y_size,fp_yuv);    //Y
                    fwrite(pFrameYUV->data[1],1,y_size/4,fp_yuv);  //U
                    fwrite(pFrameYUV->data[2],1,y_size/4,fp_yuv);  //V

                    //Output info
                    char pictype_str[10]={0};
                    switch(pFrame->pict_type){
                        case AV_PICTURE_TYPE_I:sprintf(pictype_str,"I");break;
                        case AV_PICTURE_TYPE_P:sprintf(pictype_str,"P");break;
                        case AV_PICTURE_TYPE_B:sprintf(pictype_str,"B");break;
                        default:sprintf(pictype_str,"Other");break;
                    }
                    LOGI("Frame Index: %5d. Type:%s",frame_cnt,pictype_str);
                    frame_cnt++;
                }
            }
            av_free_packet(packet);
        }

        //flush decoder
        //FIX: Flush Frames remained in Codec
        // An empty packet (data == NULL, size == 0) asks the decoder to output
        // the frames it still holds.
        packet->data=NULL;
        packet->size=0;
        while (1)
        {
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0)
                break;
            if (!got_picture)
                break;

            sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data,
                    pFrame->linesize, 0, pCodecCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);

            y_size=pCodecCtx->width*pCodecCtx->height;
            fwrite(pFrameYUV->data[0],1,y_size,fp_yuv);    //Y
            fwrite(pFrameYUV->data[1],1,y_size/4,fp_yuv);  //U
            fwrite(pFrameYUV->data[2],1,y_size/4,fp_yuv);  //V

            //Output info
            char pictype_str[10]={0};
            switch(pFrame->pict_type){
                case AV_PICTURE_TYPE_I:sprintf(pictype_str,"I");break;
                case AV_PICTURE_TYPE_P:sprintf(pictype_str,"P");break;
                case AV_PICTURE_TYPE_B:sprintf(pictype_str,"B");break;
                default:sprintf(pictype_str,"Other");break;
            }
            LOGI("Frame Index: %5d. Type:%s",frame_cnt,pictype_str);
            frame_cnt++;
        }

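        time_finish = clock();
        // clock() returns processor time in ticks; convert it to milliseconds.
        time_duration=(double)(time_finish - time_start)*1000.0/CLOCKS_PER_SEC;
        sprintf(info+strlen(info), "[Time ]%fms\n", time_duration);
        sprintf(info+strlen(info), "[Count ]%d\n", frame_cnt);

        // Release everything allocated above.
        sws_freeContext(img_convert_ctx);
        fclose(fp_yuv);
        av_frame_free(&pFrameYUV);
        av_frame_free(&pFrame);
        av_free(out_buffer);
        av_free(packet);
        avcodec_close(pCodecCtx);
        avformat_close_input(&pFormatCtx);

        return 0;
    }

The Android.mk file is located at jni/Android.mk and is shown below: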

    LOCAL_PATH := $(call my-dir)

    # FFmpeg library
    include $(CLEAR_VARS)
    LOCAL_MODULE := avcodec
    LOCAL_SRC_FILES := libavcodec-56.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := avdevice
    LOCAL_SRC_FILES := libavdevice-56.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := avfilter
    LOCAL_SRC_FILES := libavfilter-5.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := avformat
    LOCAL_SRC_FILES := libavformat-56.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := avutil
    LOCAL_SRC_FILES := libavutil-54.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := postproc
    LOCAL_SRC_FILES := libpostproc-53.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := swresample
    LOCAL_SRC_FILES := libswresample-1.so
    include $(PREBUILT_SHARED_LIBRARY)

    include $(CLEAR_VARS)
    LOCAL_MODULE := swscale
    LOCAL_SRC_FILES := libswscale-3.so
    include $(PREBUILT_SHARED_LIBRARY)

    # Program
    include $(CLEAR_VARS)
    LOCAL_MODULE := sffdecoder
    LOCAL_SRC_FILES := simplest_ffmpeg_decoder.c
    LOCAL_C_INCLUDES += $(LOCAL_PATH)/include
    LOCAL_LDLIBS := -llog -lz
    LOCAL_SHARED_LIBRARIES := avcodec avdevice avfilter avformat avutil postproc swresample swscale
    include $(BUILD_SHARED_LIBRARY)

The build.gradle file is shown below:

import org.apache.tools.ant.taskdefs.condition.Os

apply plugin: 'com.android.application'

android {

compileSdkVersion 26

buildToolsVersion "26.0.1"

defaultConfig {

applicationId "com.lzp.sffmpegandroiddecoder"

minSdkVersion 15

targetSdkVersion 26

versionCode 1

versionName "1.0"

testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"

}

buildTypes {

release {

minifyEnabled false

proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'

}

}

//指定动态库路径

sourceSets {

main{

jni.srcDirs = [] // disable automatic ndk-build call, which ignore our Android.mk

jniLibs.srcDir 'src/main/libs'

}

}

// call regular ndk-build(.cmd) script from app directory

task ndkBuild(type: Exec) {

workingDir file('src/main')

commandLine getNdkBuildCmd()

//commandLine 'E:/Android/android-ndk-r10e/ndk-build.cmd' //也可以直接使用绝对路径

}

tasks.withType(JavaCompile) {

compileTask -> compileTask.dependsOn ndkBuild

}

task cleanNative(type: Exec) {

workingDir file('src/main')

commandLine getNdkBuildCmd(), 'clean'

}

clean.dependsOn cleanNative

}

//获取NDK目录路径

def getNdkDir() {

if (System.env.ANDROID_NDK_ROOT != null)

return System.env.ANDROID_NDK_ROOT

Properties properties = new Properties()

properties.load(project.rootProject.file('local.properties').newDataInputStream())

def ndkdir = properties.getProperty('ndk.dir', null)

if (ndkdir == null)

throw new GradleException("NDK location not found. Define location with ndk.dir in the local.properties file or with an ANDROID_NDK_ROOT environment variable.")

return ndkdir

}

//根据不同系统获取ndk-build脚本

def getNdkBuildCmd() {

def ndkbuild = getNdkDir() + "/ndk-build"

if (Os.isFamily(Os.FAMILY_WINDOWS))

ndkbuild += ".cmd"

return ndkbuild

}

dependencies {

compile fileTree(dir: 'libs', include: ['*.jar'])

androidTestCompile('com.android.support.test.espresso:espresso-core:2.2.2', {

exclude group: 'com.android.support', module: 'support-annotations'

})

compile 'com.android.support:appcompat-v7:26.+'

compile 'com.android.support.constraint:constraint-layout:1.0.2'

testCompile 'junit:junit:4.12'

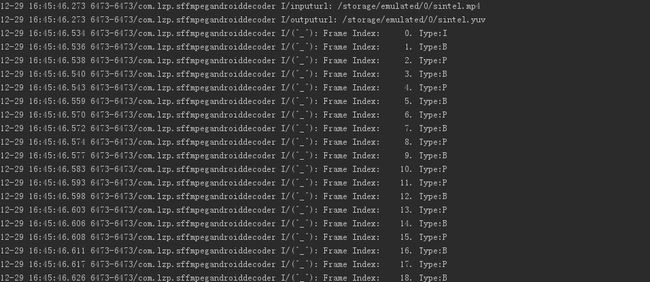

}4.3 运行

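Copy sintel.mp4 to the root directory of the phone's SD card (external storage), run the app, and tap the Start button. Android Monitor then prints the following information:

Type: I, P, and B are the types of the individual video frames (intra-coded, predicted, and bidirectionally predicted).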

Source code and video download: http://download.csdn.net/download/itismelzp/10184248