PyCuda Study Notes: Accelerating KNN

I learned the KNN algorithm from this Bilibili video: https://www.bilibili.com/video/av52220223?t=1413

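A few days ago I picked up the basics of PyCuda and wanted to put it to use, so I chose kNN as something to accelerate. I ran into a few pitfalls along the way, so I am writing them up here.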

kNN Algorithm


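KNN is one of the simplest algorithms in machine learning. It is a classification algorithm, also called the k-nearest-neighbors algorithm. The idea is to find the k points closest to the query point, see which class makes up the largest share of those k points, and assign the query point to that class.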
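The idea above fits in a few lines of NumPy. This is a minimal illustration, not the code from the video: `knn_classify` is a hypothetical helper name, it uses squared Euclidean distance (the same measure as the code below), and ties in the vote go to whichever label `Counter.most_common` encountered first.

```python
from collections import Counter

import numpy as np


def knn_classify(query, x_train, y_train, k=3):
    """Classify one query point by majority vote among its k nearest neighbors."""
    # squared Euclidean distance from the query to every training point
    dists = np.sum((x_train - query) ** 2, axis=1)
    # indices of the k smallest distances
    nearest = np.argsort(dists)[:k]
    # the label that occurs most often among those k neighbors wins
    return Counter(y_train[nearest].tolist()).most_common(1)[0][0]


x_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1]])
y_train = np.array([0, 0, 0, 1, 1])
print(knn_classify(np.array([0.15, 0.15]), x_train, y_train, k=3))  # 0
print(knn_classify(np.array([0.95, 1.0]), x_train, y_train, k=3))   # 1
```

The second query has two class-1 points and one class-0 point among its three nearest neighbors, so the vote picks class 1.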


The code also comes from that video. It has two parts: one generates data and tests kNN on it, and the other implements the kNN algorithm.

train.py generates the data and runs the test; its source is below.

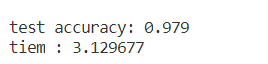

```python
import numpy as np
import matplotlib.pyplot as plt
from knn import KNN

# data generation
np.random.seed(314)
data_size_1 = 300
x1_1 = np.random.normal(loc=5.0, scale=1.0, size=data_size_1)
x2_1 = np.random.normal(loc=4.0, scale=1.0, size=data_size_1)
y_1 = [0 for _ in range(data_size_1)]

data_size_2 = 400
x1_2 = np.random.normal(loc=10.0, scale=2.0, size=data_size_2)
x2_2 = np.random.normal(loc=8.0, scale=2.0, size=data_size_2)
y_2 = [1 for _ in range(data_size_2)]

x1 = np.concatenate((x1_1, x1_2), axis=0)
x2 = np.concatenate((x2_1, x2_2), axis=0)
x = np.hstack((x1.reshape(-1, 1), x2.reshape(-1, 1)))
y = np.concatenate((y_1, y_2), axis=0)

# shuffle, then split 70% / 30% into training and test sets
data_size_all = data_size_1 + data_size_2
shuffled_index = np.random.permutation(data_size_all)
x = x[shuffled_index]
y = y[shuffled_index]

split_index = int(data_size_all * 0.7)
x_train = x[:split_index]
y_train = y[:split_index]
x_test = x[split_index:]
y_test = y[split_index:]

# visualize data
plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train, marker='.')
plt.show()
plt.scatter(x_test[:, 0], x_test[:, 1], c=y_test, marker='.')
plt.show()

# data preprocessing: min-max normalization with the training-set statistics,
# so that training and test points are scaled the same way
x_min = np.min(x_train, axis=0)
x_max = np.max(x_train, axis=0)
x_train = (x_train - x_min) / (x_max - x_min)
x_test = (x_test - x_min) / (x_max - x_min)

# kNN classifier
clf = KNN(k=3)
clf.fit(x_train, y_train)
print('train accuracy: {:.3}'.format(clf.score()))
y_test_pred = clf.predict(x_test)
print('test accuracy: {:.3}'.format(clf.score(y_test, y_test_pred)))
```
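The preprocessing step is per-feature min-max scaling, x' = (x - min) / (max - min). A common convention is to compute `min` and `max` on the training set only and reuse them for the test set, so that both sets live in one coordinate system. A small sketch of that convention; the function names are hypothetical and not part of the original code:

```python
import numpy as np


def fit_min_max(x_train):
    """Return the per-feature minimum and range of the training data."""
    x_min = np.min(x_train, axis=0)
    x_range = np.max(x_train, axis=0) - x_min
    return x_min, x_range


def apply_min_max(x, x_min, x_range):
    """Scale x with statistics taken from the training set."""
    return (x - x_min) / x_range


x_train = np.array([[5.0, 4.0], [10.0, 8.0], [7.5, 6.0]])
x_test = np.array([[6.0, 5.0], [12.0, 9.0]])
x_min, x_range = fit_min_max(x_train)
print(apply_min_max(x_train, x_min, x_range))  # every column spans exactly [0, 1]
print(apply_min_max(x_test, x_min, x_range))   # test values may fall outside [0, 1]
```

Test values outside [0, 1] are expected and harmless for kNN: they simply mean the test point lies beyond the range seen during training.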

knn.py

```python
import numpy as np
import operator


class KNN(object):
    def __init__(self, k=3):
        self.k = k

    def fit(self, x, y):
        # kNN is a lazy learner: "training" only stores the data
        self.x = x
        self.y = y

    def _square_distance(self, v1, v2):
        # squared Euclidean distance; the square root is skipped since it does not change the ordering
        return np.sum(np.square(v1 - v2))

    def _vote(self, ys):
        # count how often each label occurs among the k neighbors
        vote_dict = {}
        for y in ys:
            if y not in vote_dict:
                vote_dict[y] = 1
            else:
                vote_dict[y] += 1
        # sort the (label, count) pairs by count, descending, and return the top label
        sorted_vote_dict = sorted(vote_dict.items(), key=operator.itemgetter(1), reverse=True)
        return sorted_vote_dict[0][0]

    def predict(self, x):
        y_pred = []
        for i in range(len(x)):
            dist_arr = [self._square_distance(x[i], self.x[j]) for j in range(len(self.x))]
            sorted_index = np.argsort(dist_arr)
            top_k_index = sorted_index[:self.k]
            y_pred.append(self._vote(ys=self.y[top_k_index]))
        return np.array(y_pred)

    def score(self, y_true=None, y_pred=None):
        # with no arguments, report accuracy on the training data
        if y_true is None and y_pred is None:
            y_pred = self.predict(self.x)
            y_true = self.y
        score = 0.0
        for i in range(len(y_true)):
            if y_true[i] == y_pred[i]:
                score += 1
        score /= len(y_true)
        return score
```
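`predict` above computes every distance in a Python-level loop, and that is the part the rest of this post moves to the GPU. For comparison, NumPy broadcasting can compute the whole test-by-training distance matrix at once on the CPU. This sketch assumes the matrix (n_test × n_train floats) fits in memory and that labels are non-negative integers like the 0/1 labels here; `predict_vectorized` is a hypothetical name.

```python
import numpy as np


def predict_vectorized(x_test, x_train, y_train, k=3):
    """kNN prediction for all test points at once (labels must be non-negative ints)."""
    # diff has shape (n_test, n_train, 2); summing over the last axis gives
    # the squared distance from every test point to every training point
    diff = x_test[:, np.newaxis, :] - x_train[np.newaxis, :, :]
    dist = np.sum(diff ** 2, axis=2)
    # argpartition moves the k smallest distances of each row into the first
    # k columns (in arbitrary order, which does not matter for a vote)
    top_k = np.argpartition(dist, k - 1, axis=1)[:, :k]
    neighbor_labels = y_train[top_k]  # shape (n_test, k)
    # majority vote per row: bincount counts each label, argmax picks the most common
    return np.array([np.argmax(np.bincount(row)) for row in neighbor_labels])


x_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]])
y_train = np.array([0, 0, 0, 1, 1, 1])
x_test = np.array([[0.15, 0.15], [0.95, 1.0], [0.05, 0.1]])
print(predict_vectorized(x_test, x_train, y_train, k=3))  # [0 1 0]
```

`np.argpartition` only guarantees which k elements come first, not their order, so it avoids fully sorting each row the way `np.argsort` does.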

Accelerating kNN


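Because this example is simple, the only part that gets accelerated is the computation of the distances between points. One thing needs special attention: every value passed to the PyCuda kernel must be converted to a 32-bit type. The kernel declares its parameters as `float*` and `int`, both 32-bit, while NumPy creates float64 arrays by default, and PyCuda copies an array's bytes to the GPU as-is without converting them, so a float64 array would be misinterpreted. My card is a gaming card rather than a dedicated compute card; such cards can run double-precision arithmetic, but much more slowly than single precision, so float32 is the better choice here anyway.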
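Two properties matter for every NumPy array handed to the kernel: the dtype must match the C parameter type (`float` is 32-bit), and the data must sit in one contiguous block, since the raw buffer is what gets copied. The NumPy-only sketch below (no GPU needed; the data are made up for illustration) shows that a column slice of a float64 matrix fails both checks, what happens when its bytes are read as float32, and how `np.ascontiguousarray` fixes both at once:

```python
import numpy as np

points = np.random.default_rng(0).random((5, 2))  # float64, like the normalized data
col = points[:, 0]  # a strided view: every other element of `points`
print(col.dtype, col.flags['C_CONTIGUOUS'])  # float64 False

# Reading 8-byte doubles as 4-byte floats yields twice as many numbers,
# and they no longer mean anything.
misread = np.ascontiguousarray(col).view(np.float32)
print(len(col), len(misread))  # 5 10

# The fix: one call converts to float32 and produces a contiguous buffer.
col32 = np.ascontiguousarray(points[:, 0], dtype=np.float32)
print(col32.dtype, col32.flags['C_CONTIGUOUS'])  # float32 True
print(np.allclose(col32, col))  # True
```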


train.py

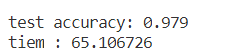

```python
import time

import numpy as np
import matplotlib.pyplot as plt

# from knn import *
from knn_pycuda import KNN

# data generation
np.random.seed(272)
data_size_1 = 3000
x1_1 = np.random.normal(loc=10.0, scale=2.0, size=data_size_1)
x2_1 = np.random.normal(loc=8.0, scale=2.0, size=data_size_1)
# y1_1 = [0 for i in range(data_size_1)]
y1_2 = np.zeros(data_size_1, dtype=np.int32)

data_size_2 = 4000
x1_2 = np.random.normal(loc=20.0, scale=4.0, size=data_size_2)
x2_2 = np.random.normal(loc=16.0, scale=4.0, size=data_size_2)
# y2_1 = [1 for i in range(data_size_2)]
y2_2 = np.ones(data_size_2, dtype=np.int32)

# concatenate the arrays
x1 = np.concatenate((x1_1, x1_2), axis=0)
x2 = np.concatenate((x2_1, x2_2), axis=0)
# stack the arrays horizontally; the input is a tuple, the result an ndarray
x = np.hstack((x1.reshape(-1, 1), x2.reshape(-1, 1)))
y = np.concatenate((y1_2, y2_2), axis=0)
# plt.scatter(x[:, 0], x[:, 1], c=y)
# plt.show()

data_size_all = data_size_1 + data_size_2
# random permutation of the indices
shuffled_index = np.random.permutation(data_size_all)
# shuffle the data
x = x[shuffled_index]
y = y[shuffled_index]

split_index = int(data_size_all * 0.7)
x_train = x[:split_index]
y_train = y[:split_index]
x_test = x[split_index:]
y_test = y[split_index:]

# visualize data
# plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train, marker='.')
# plt.show()
# plt.scatter(x_test[:, 0], x_test[:, 1], c=y_test, marker='.')
# plt.show()

# min-max normalization with the training-set statistics
x_min = np.min(x_train, axis=0)
x_max = np.max(x_train, axis=0)
x_train = (x_train - x_min) / (x_max - x_min)
x_test = (x_test - x_min) / (x_max - x_min)

time_start = time.time()
clf = KNN(k=3)
clf.fit(x_train, y_train)
y_pred = clf.predict(x_test)
print('test accuracy: {:.3}'.format(clf.score(y_test, y_pred)))
time_end = time.time()
print('time: %f' % (time_end - time_start))
```
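The script measures elapsed time with `time.time()`, which follows the system wall clock and can jump if that clock is adjusted. For benchmarking, Python's `time.perf_counter()` is a monotonic, high-resolution clock intended for measuring durations. A small hypothetical helper, not part of the original code:

```python
import time


def timed(func, *args, **kwargs):
    """Call func and return (result, elapsed seconds) measured with perf_counter."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


result, seconds = timed(sum, range(1_000_000))
print(result, 'computed in %.6f s' % seconds)
```

In train.py this could wrap the prediction step, for example `y_pred, seconds = timed(clf.predict, x_test)`.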

knn_pycuda.py

```python
import operator

import numpy as np
import pycuda.autoinit  # initializes CUDA and creates a context on import
import pycuda.driver as cuda
from pycuda.compiler import SourceModule

mod = SourceModule("""
__global__ void squareDistance(float* x1, float* y1, float* x2, float* y2,
                               float* distance, int N)
{
    // grid-stride loop: each thread handles index, index + stride, ...
    int index = threadIdx.x + blockIdx.x * blockDim.x;
    int stride = blockDim.x * gridDim.x;
    for (int i = index; i < N; i += stride)
    {
        //printf("x1, y1: %f, %f", x1[i], y1[i]);
        distance[i] = (x2[0] - x1[i]) * (x2[0] - x1[i]) + (y2[0] - y1[i]) * (y2[0] - y1[i]);
    }
}
""")


class KNN(object):
    def __init__(self, k=3):
        self.k = k

    def fit(self, x, y):
        self.x = x
        self.y = y

    def _vote(self, ys):
        vote_dict = {}
        for y in ys:
            if y not in vote_dict:
                vote_dict[y] = 1
            else:
                vote_dict[y] += 1
        # 1. list.sort() sorts an existing list in place, while the built-in sorted()
        #    accepts any iterable and returns a new list, leaving the original unchanged.
        # 2. The operator module provides efficient functions matching Python's built-in
        #    operators; e.g. operator.add(x, y) is equivalent to x + y.
        #    operator.itemgetter(...) takes one or more indices and returns a *function*
        #    that fetches those items when applied to an object:
        #        a = [1, 2, 3]
        #        b = operator.itemgetter(1)     # fetch item 1
        #        b(a)  -> 2
        #        b = operator.itemgetter(1, 0)  # fetch items 1 and 0
        #        b(a)  -> (2, 1)
        #    Here it makes sorted() order the (label, count) pairs by count.
        sorted_vote_dict = sorted(vote_dict.items(), key=operator.itemgetter(1), reverse=True)
        return sorted_vote_dict[0][0]

    def predict(self, x):
        square_distance = mod.get_function('squareDistance')
        y_pred = []
        # the kernel's N parameter is a 32-bit int, so pass an np.int32
        n_size = np.int32(len(self.x))
        # self.x[:, 0] is a non-contiguous float64 view of the training data, but the
        # kernel needs contiguous float32 buffers; without this conversion the results
        # are wrong. Allocating x1 with np.empty(..., dtype=np.float32) first does not
        # help, because assigning self.x[:, 0] afterwards just rebinds the name to the
        # float64 view.
        x1 = np.ascontiguousarray(self.x[:, 0], dtype=np.float32)
        y1 = np.ascontiguousarray(self.x[:, 1], dtype=np.float32)

        thread_size = 256
        # ceiling division, so that every training point gets a thread
        block_size = int((n_size + thread_size - 1) // thread_size)
        for i in range(len(x)):
            # the query point, as one-element float32 arrays
            x2 = np.array([x[i][0]], dtype=np.float32)
            y2 = np.array([x[i][1]], dtype=np.float32)
            dist_arr = np.empty(n_size, dtype=np.float32)
            square_distance(
                cuda.In(x1), cuda.In(y1), cuda.In(x2), cuda.In(y2),
                cuda.Out(dist_arr), n_size,
                block=(thread_size, 1, 1), grid=(block_size, 1)
            )
            sorted_index = np.argsort(dist_arr)
            top_k_index = sorted_index[:self.k]
            y_pred.append(self._vote(ys=self.y[top_k_index]))
        return np.array(y_pred)

    def score(self, y_true=None, y_pred=None):
        if y_true is None or y_pred is None:
            y_pred = self.predict(self.x)
            y_true = self.y
        score = 0.0
        for i in range(len(y_true)):
            if y_pred[i] == y_true[i]:
                score += 1
        score /= len(y_true)
        return score
```
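The launch uses `thread_size = 256` threads per block and `block_size = ceil(N / 256)` blocks (integer ceiling division), and the kernel walks its indices with a grid-stride loop. The pure-Python sketch below is a CPU simulation for illustration only, not how PyCuda executes anything: it replays that loop thread by thread and checks that every index 0..N-1 is written exactly once and that the per-index formula matches a vectorized NumPy computation. `simulate_kernel` and its `max_blocks` parameter are hypothetical.

```python
import numpy as np


def simulate_kernel(x1, y1, qx, qy, thread_size=256, max_blocks=None):
    """Replay squareDistance's grid-stride loop on the CPU, one 'thread' at a time."""
    n = len(x1)
    block_size = (n + thread_size - 1) // thread_size  # ceiling division, as in predict()
    if max_blocks is not None:
        # launching fewer blocks than needed makes each thread loop several times
        block_size = min(block_size, max_blocks)
    stride = thread_size * block_size  # blockDim.x * gridDim.x in the kernel
    dist = np.zeros(n, dtype=np.float32)
    visits = np.zeros(n, dtype=np.int64)  # how many times each index was written
    for block_idx in range(block_size):
        for thread_idx in range(thread_size):
            index = thread_idx + block_idx * thread_size
            for i in range(index, n, stride):
                dist[i] = (qx - x1[i]) ** 2 + (qy - y1[i]) ** 2
                visits[i] += 1
    return dist, visits, block_size


rng = np.random.default_rng(1)
x1 = rng.random(1000).astype(np.float32)
y1 = rng.random(1000).astype(np.float32)
qx, qy = np.float32(0.5), np.float32(0.5)
dist, visits, blocks = simulate_kernel(x1, y1, qx, qy)
print(blocks)               # 4, since ceil(1000 / 256) = 4
print(np.all(visits == 1))  # True: every index written exactly once
print(np.allclose(dist, (x1 - qx) ** 2 + (y1 - qy) ** 2))  # True
```

With the full grid, each thread runs the loop body at most once, because 4 × 256 = 1024 threads already cover 1000 points. Calling `simulate_kernel(x1, y1, qx, qy, max_blocks=2)` halves the stride to 512, so each thread handles two indices, and the coverage check still passes; that robustness to the grid size is the point of the grid-stride pattern.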