Google的预训练模型又霸榜了,这次叫做T5(附榜单)

来源:科学空间

本文约

1000字

,建议阅读

5分钟

。

本文将介绍Google最近新出的预训练模型。

Google又出大招了,这次叫做T5:

T5 serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. The bulk of the code in this repository is used for loading, preprocessing, mixing, and evaluating datasets. It also provides a way to fine-tune the pre-trained models released alongside the publication.

T5 can be used as a library for future model development by providing useful modules for training and fine-tuning (potentially huge) models on mixtures of text-to-text tasks.

这次的结果基本上比之前最优的RoBERTa都要高出4%,其中BoolQ那个已经超过了人类表现了:

榜单在此:

https://super.gluebenchmark.com/leaderboard

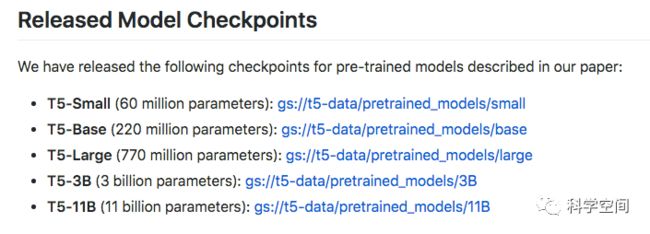

这次的模型参数量,最多达到了110亿!

!

!

!

!

!

!

Github上也给出了简单的使用教程,当然这么大的参数,估计也只能用tpu了:

https://github.com/google-research/text-to-text-transfer-transformer

此处之外,Github还提到另外一个贡献,就是一个名为tensorflow-datasets的库,里边可以方便地调用很多已经转好为tf.data格式的数据,无缝对接tensorflow。

详情请看:

https://www.tensorflow.org/datasets

对了,还有论文:

https://arxiv.org/abs/1910.10683

大名是

《Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer》

Transfer learning, where a model is first pre-trained on a data-rich task before being fine-tuned on a downstream task, has emerged as a powerful technique in natural language processing (NLP). The effectiveness of transfer learning has given rise to a diversity of approaches, methodology, and practice. In this paper, we explore the landscape of transfer learning techniques for NLP by introducing a unified framework that converts every language problem into a text-to-text format. Our systematic study compares pre-training objectives, architectures, unlabeled datasets, transfer approaches, and other factors on dozens of language understanding tasks. By combining the insights from our exploration with scale and our new “Colossal Clean Crawled Corpus”, we achieve state-of-the-art results on many benchmarks covering summarization, question answering, text classification, and more. To facilitate future work on transfer learning for NLP, we release our dataset, pre-trained models, and code.

似乎叫做《Exploring the Limits of TPU》适合一些?

大致扫了一下论文,应该是用了类似UNILM的Seq2Seq预训练方式吧,把各种有标签的、无标签的数据都扔了进去。

现在就简单推送一下,细读之后再分享感想,现在就蹭一下热度。

编辑:王菁

校对:王欣