python应用案例-爬取京东商品评论

目标:爬取京东某商品的评论

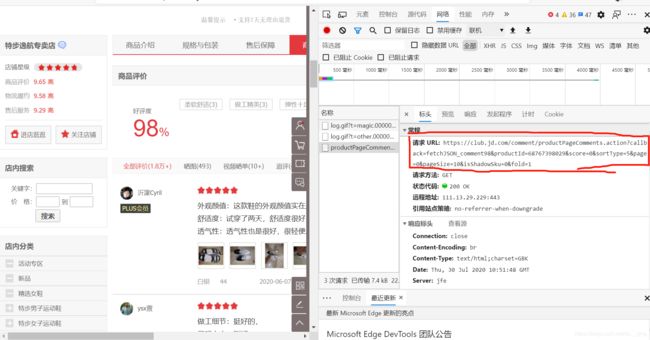

1.任意选择一个商品

2.找到url

3.写代码

导入模块requests

(可以通过cmd的pip install requests命令安装requests模块)

import requests

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

4.根据商品url地址发起请求获取响应结果

import requests

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

html= requests.get(url).text

5.输出查看是否出错

import requests

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

html= requests.get(url).text

print(html)

可以看出以上代码已成功获取内容

接下来选取出所需要的评论信息

6.择取评论

注意到:刚才返回的是一个json字符串,需要将json字符串转换为python数据类型

导入json模块

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

观察评论位置

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

print(i['content'])

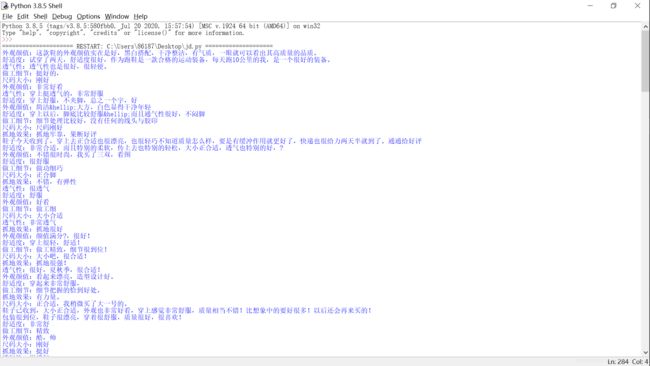

运行结果

7.可以看出爬取的只是第一页的评论

现在尝试获得更多评论

观察后面几页的urll:

第二页:https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=1&pageSize=10&isShadowSku=0&rid=1&fold=1

第三页:https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=2&pageSize=10&isShadowSku=0&rid=1&fold=1

可以看出url的变化规律

使用循环来获得前十页的评论:

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

for count in range(10):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

print(i['content'])

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

for count in range(10):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

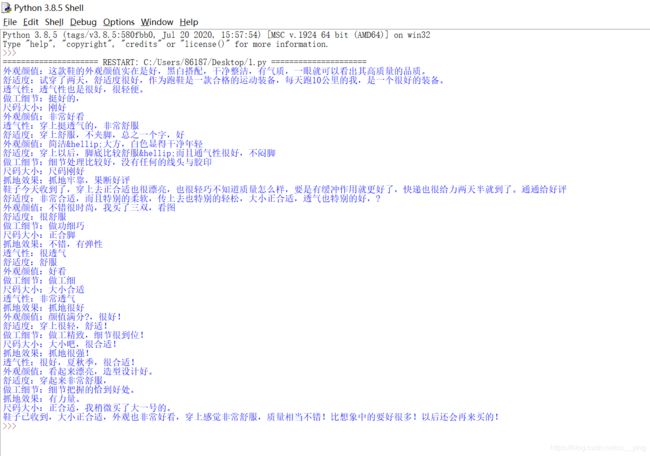

print('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n')

9.将打印内容存入一个文件中

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

for count in range(10):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

file=open("comment.txt", 'a')

file.writelines('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n\n')

file.close()

10.增加获取评论的数量

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

for count in range(100):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

html= requests.get(url).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

file=open("comment.txt", 'a')

file.writelines('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n\n')

file.close()

运行后发现中途报错

考虑到相同ip频繁访问,尝试使用代理ip,并修改user-agent

import requests

import json

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

head = {}

head['Uxer-Agent']='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36 Edg/84.0.522.50'

for count in range(100):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

proxies = [{'http':'socks5://117.69.13.180'},{'http':'socks5://113.194.130.117'},{'http':'socks5://110.243.15.253'},

{'http':'socks5://125.108.72.92'},{'http':'socks5://110.243.15.253'},{'http':'socks5://171.35.149.223'},

{'http':'socks5://125.108.108.150'},{'http':'socks5://123.163.116.56'},{'http':'socks5://123.54.52.37'},

{'http':'socks5://113.124.87.85'},{'http':'socks5://171.11.29.66'},{'http':'socks5://163.204.92.79'},

{'http':'socks5://117.57.48.102'},{'http':'socks5://125.108.110.245'},{'http':'socks5://115.218.6.85'},

{'http':'socks5://219.146.127.6'},{'http':'socks5://120.83.101.249'},{'http':'socks5://223.242.225.19'},

{'http':'socks5://121.8.146.99'},{'http':'socks5://110.243.7.237'},{'http':'socks5://115.218.2.104'},

{'http':'socks5://183.195.106.118'},{'http':'socks5://49.86.176.16'},{'http':'socks5://222.89.32.141'},

{'http':'socks5://1.198.73.202'},{'http':'socks5://136.228.128.6'},{'http':'socks5://136.228.128.6'},]

proxies1 = random.choice(proxies)

html= requests.get(url,head,proxies=proxies1).text

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

file=open("comment.txt", 'a')

file.writelines('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n\n')

file.close()

运行后出错,检查后导入random模块

导入后,程序运行中途可能出现某些ip无法访问的问题

增加一个while循环,增加程序容错率

import requests

import json

import random

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

head = {}

head['Uxer-Agent']='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36 Edg/84.0.522.50'

for count in range(100):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

proxies = [{'http':'socks5://117.69.13.180'},{'http':'socks5://113.194.130.117'},{'http':'socks5://110.243.15.253'},

{'http':'socks5://125.108.72.92'},{'http':'socks5://110.243.15.253'},{'http':'socks5://171.35.149.223'},

{'http':'socks5://125.108.108.150'},{'http':'socks5://123.163.116.56'},{'http':'socks5://123.54.52.37'},

{'http':'socks5://113.124.87.85'},{'http':'socks5://171.11.29.66'},{'http':'socks5://163.204.92.79'},

{'http':'socks5://117.57.48.102'},{'http':'socks5://125.108.110.245'},{'http':'socks5://115.218.6.85'},

{'http':'socks5://219.146.127.6'},{'http':'socks5://120.83.101.249'},{'http':'socks5://223.242.225.19'},

{'http':'socks5://121.8.146.99'},{'http':'socks5://110.243.7.237'},{'http':'socks5://115.218.2.104'},

{'http':'socks5://183.195.106.118'},{'http':'socks5://49.86.176.16'},{'http':'socks5://222.89.32.141'},

{'http':'socks5://1.198.73.202'},{'http':'socks5://136.228.128.6'},{'http':'socks5://136.228.128.6'},]

n = 0

while n == 0:

try:

proxies1 = random.choice(proxies)

html= requests.get(url,head,proxies=proxies1).text

n += 1

except:

n = 0

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

file=open("comment.txt", 'a')

file.writelines('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n\n')

file.close()

导入time模块,控制访问频率

import requests

import json

import random

import time

url='https://club.jd.com/comment/productPageComments.action?callback=fetchJSON_comment98&productId=68767398029&score=0&sortType=5&page=0&pageSize=10&isShadowSku=0&fold=1'

head = {}

head['Uxer-Agent']='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36 Edg/84.0.522.50'

for count in range(100):

if count!=0:

url='https://club.jd.com/comment/productPageComments.action?callback=fetch'\

'JSON_comment98&productId=68767398029&score=0&sortType=5&page='+str(count)+'&pageSize=10&isShadowSku=0&rid=0&fold=1'

proxies = [{'http':'socks5://117.69.13.180'},{'http':'socks5://113.194.130.117'},{'http':'socks5://110.243.15.253'},

{'http':'socks5://125.108.72.92'},{'http':'socks5://110.243.15.253'},{'http':'socks5://171.35.149.223'},

{'http':'socks5://125.108.108.150'},{'http':'socks5://123.163.116.56'},{'http':'socks5://123.54.52.37'},

{'http':'socks5://113.124.87.85'},{'http':'socks5://171.11.29.66'},{'http':'socks5://163.204.92.79'},

{'http':'socks5://117.57.48.102'},{'http':'socks5://125.108.110.245'},{'http':'socks5://115.218.6.85'},

{'http':'socks5://219.146.127.6'},{'http':'socks5://120.83.101.249'},{'http':'socks5://223.242.225.19'},

{'http':'socks5://121.8.146.99'},{'http':'socks5://110.243.7.237'},{'http':'socks5://115.218.2.104'},

{'http':'socks5://183.195.106.118'},{'http':'socks5://49.86.176.16'},{'http':'socks5://222.89.32.141'},

{'http':'socks5://1.198.73.202'},{'http':'socks5://136.228.128.6'},{'http':'socks5://136.228.128.6'},]

n = 0

while n == 0:

try:

proxies1 = random.choice(proxies)

html= requests.get(url,head,proxies=proxies1).text

n += 1

except:

n = 0

html=html[20:-2]

html=json.loads(html)

html=html['comments']

for i in html:

file=open("comment.txt", 'a')

file.writelines('用户id: '+str(i['id'])+'\n'+'评分: '+str(i['score'])+'颗星'+'\n'+'评论: '+i['content']+'\n\n')

file.close()

time.sleep(1)