Standard Gameplay and IAP Metrics for Mobile Games

A good article that arrived via Yahoo Mail; reposted here for reference.


By Andy Savage | Published Jan 14 2015 06:25 AM in Mobile Development

Part 1

Original: http://www.gamedev.net/page/resources/_/technical/mobile-development/standard-gameplay-and-iap-metrics-for-mobile-games-r3860

Over the next few weeks I am publishing some example analytics for optimising gameplay and customer conversion. I will be using a real world example game, "Ancient Blocks", which is actually available on the App Store if you want to see the game in full.

The reports in this article were produced using Calq, but you could use an alternative service or build these metrics in-house. This series is designed to be "What to measure" rather than "How to measure it".

Common KPIs

The high-level key performance indicators (KPIs) are typically similar across all mobile games, regardless of genre. Most developers will have KPIs that include:

- D1, D7, D30 retention - how often players are coming back.

- DAU, WAU, MAU - daily, weekly and monthly active users, a measurement of the active playerbase.

- User LTVs - what is the lifetime value of a player (typically measured over various cohorts, gender, location, acquiring ad campaign etc).

- DARPU - daily average revenue per user, i.e. the amount of revenue generated per active player per day.

- ARPPU - average revenue per paying user, a related measurement to LTV but it only counts the subset of users that are actually paying.
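DARPU and ARPPU share the same numerator and differ only in who is counted in the denominator. A minimal sketch, assuming daily totals have already been aggregated; the `DayTotals` shape and the figures are hypothetical, and in practice ARPPU is often reported over a longer window than a single day:

```python
from dataclasses import dataclass


@dataclass
class DayTotals:
    """Hypothetical per-day aggregates an analytics backend might produce."""
    revenue: float      # real-money revenue taken that day
    active_users: int   # unique players active that day (DAU)
    paying_users: int   # unique players who made a purchase that day


def darpu(day: DayTotals) -> float:
    """Daily average revenue per user: revenue spread over every active player."""
    return day.revenue / day.active_users if day.active_users else 0.0


def arppu(day: DayTotals) -> float:
    """Average revenue per paying user: only payers are in the denominator."""
    return day.revenue / day.paying_users if day.paying_users else 0.0


day = DayTotals(revenue=150.0, active_users=10_000, paying_users=200)
print(f"DARPU: {darpu(day):.3f}")  # DARPU: 0.015
print(f"ARPPU: {arppu(day):.2f}")  # ARPPU: 0.75
```

The gap between the two numbers is simply the share of active players who pay, which is why both are usually tracked together.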

There will also be game-specific KPIs. These give insight into isolated parts of the game so that they can be improved. The ultimate goal is improving the high-level KPIs by improving as many sub-game areas as possible.

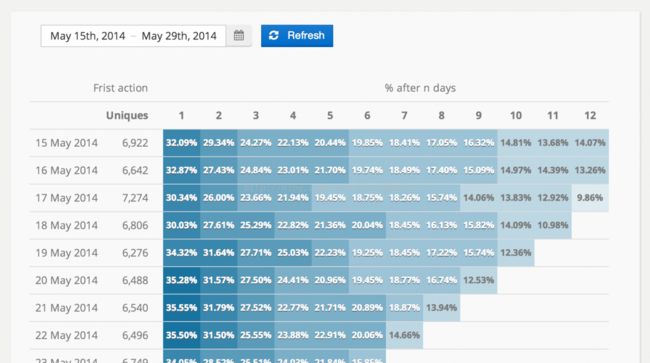

Retention

Retention is a measure of how often players come back to your game after a period. D1 (day 1) retention is the percentage of players who returned to play the next day, D7 is the percentage who returned 7 days later, and so on. Retention is a critical indicator of how sticky your game is.
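A minimal sketch of the "classic" Dn definition, assuming activity arrives as (player, date) pairs. Some tools instead count any return within n days, so check which definition your provider uses; the function name and data here are illustrative only:

```python
from collections import defaultdict
from datetime import date, timedelta


def day_n_retention(activity, n, as_of):
    """Classic Dn retention: share of players seen again exactly n days after their first day.

    activity: (player_id, date_played) pairs; duplicates are harmless.
    as_of: last day of data. Players whose first day is less than n days before
    as_of cannot have come back yet, so they are left out of the cohort.
    """
    days_by_player = defaultdict(set)
    for player, day in activity:
        days_by_player[player].add(day)

    eligible = [
        days for days in days_by_player.values()
        if min(days) + timedelta(days=n) <= as_of
    ]
    if not eligible:
        return 0.0
    returned = sum(1 for days in eligible if min(days) + timedelta(days=n) in days)
    return returned / len(eligible)


d0 = date(2015, 1, 1)
activity = [
    ("a", d0), ("a", d0 + timedelta(days=1)),
    ("b", d0),
    ("c", d0), ("c", d0 + timedelta(days=1)), ("c", d0 + timedelta(days=7)),
    ("d", d0 + timedelta(days=10)),  # too new to count towards D1 yet
]
as_of = d0 + timedelta(days=10)
print(round(day_n_retention(activity, 1, as_of), 3))  # 0.667
print(round(day_n_retention(activity, 7, as_of), 3))  # 0.333
```

Grouping players by their first day and calling this once per group and per n gives the rows and columns of a retention grid.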

Arguably it's more important to measure retention than it is to measure revenue. If you have great retention but poor user life-time values (LTV) then you can normally refine and improve the latter. The opposite is not true. It's much harder to monetise an application with low retention rates.

A retention grid is a good way to visualize game retention over a period.

When the game is iterated upon (either by adding/removing features, or adjusting existing ones) the retention can be checked to see if the changes had a positive impact.

Active user base

You may have already heard of "Daily/Weekly/Monthly" Active Users. These are industry standard measurements showing the size of your active user base. WAU, for example, is a count of the unique players that have played in the last 7 days. Using DAU/WAU/MAU measurements is an easy way to spot if your audience is growing, shrinking, or flat.

Active user measurements need to be analysed alongside retention data. Your userbase could be flat if you have lots of new users but are losing existing users (known as "churn") at the same rate.
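The point about churn hiding behind a flat active count can be made concrete. A minimal sketch, assuming activity is a list of (player, date) pairs; the window logic and function names are illustrative, not any particular tool's definition:

```python
from datetime import date, timedelta


def players_in_window(activity, as_of, window_days):
    """Unique players active in the window_days days ending on as_of (inclusive)."""
    start = as_of - timedelta(days=window_days - 1)
    return {player for player, day in activity if start <= day <= as_of}


def active_users(activity, as_of, window_days):
    """window_days=1 gives DAU, 7 gives WAU, 30 is a common choice for MAU."""
    return len(players_in_window(activity, as_of, window_days))


def churn_breakdown(activity, as_of, window_days=7):
    """Compare this window with the previous one to expose churn behind a flat count."""
    current = players_in_window(activity, as_of, window_days)
    previous = players_in_window(activity, as_of - timedelta(days=window_days), window_days)
    return {
        "active": len(current),
        "gained": len(current - previous),  # new or returning players
        "lost": len(previous - current),    # active last window, not this one
    }


as_of = date(2015, 1, 14)


def ago(n):
    return as_of - timedelta(days=n)


activity = [("a", ago(10)), ("b", ago(9)), ("c", ago(8)),   # previous week
            ("a", ago(1)), ("d", ago(2)), ("e", ago(0))]    # this week
print(active_users(activity, ago(7), 7), active_users(activity, as_of, 7))  # 3 3
print(churn_breakdown(activity, as_of))  # {'active': 3, 'gained': 2, 'lost': 2}
```

WAU is 3 in both weeks, yet two of last week's three players have gone: exactly the situation where active counts alone look healthy.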

Game-specific KPIs

In addition to the common KPIs each game will have additional metrics which are specific to the product in question. This could include data on player progression through the game (such as levels), game mechanics and balance metrics, viral and sharing loops etc.

Most user journeys (paths of interaction that a user can take in your application, such as a menu to start a new game) will also be measured so they can be iterated on and optimised.

For Ancient Blocks, game-specific metrics include:

- Player progression:

  - Which levels are being completed.

  - Whether players are replaying on a harder difficulty.

- Level difficulty:

  - How many attempts it takes to finish a level.

  - How much time is spent within a level.

  - How many power-ups a player uses before completing a level.

- In-game currency:

  - When does a user spend in-game currency?

  - What do they spend it on?

  - What does a player normally do before they make a purchase?

In-game tutorial

When a player starts a game for the first time, it is typical for them to be shown an interactive tutorial that teaches new players how to play. This is often the first impression a user gets of your game, and as a result it needs to be extremely well refined. With a bad tutorial your D1 retention will be poor.

Ancient Blocks has a simple 10 step tutorial that shows the user how to play (by dragging blocks vertically until they are aligned).

Goals

The data collected about the tutorial needs to show any areas which could be improved. Typically these are areas where users are getting stuck, or taking too long.

- Identify any sticking points within the tutorial (points where users get stuck).

- Iteratively refine these tutorial steps to improve the conversion rate (the percentage of players that get to the end successfully).

Metrics

In order to improve the tutorial a set of tutorial-specific metrics should be defined. For Ancient Blocks the key metrics we need are:

- The percentages of players that make it through each tutorial step.

- The percentage of players that actually finish the tutorial.

- The amount of time spent on each step.

- The percentage of players that go on to play the level after the tutorial.

Implementation

Tracking tutorial steps is straightforward using an action-based analytics platform, in our case Calq. Ancient Blocks uses a single action called Tutorial Step. This action includes a custom attribute called Step to indicate which tutorial step the user is on (0 indicates the first step). We also want to track how long a user spends on each step (in seconds), so we also include a property called Duration.

| Action | Properties |
|---|---|
| Tutorial Step | Step (tutorial step index, 0 = first step), Duration (seconds spent on the step) |
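On the client side this amounts to one action per step carrying Step and Duration. A minimal sketch, assuming the action is sent when each step is completed; `Tracker` and `TutorialTracker` are hypothetical stand-ins written for illustration, not Calq's SDK:

```python
import time


class Tracker:
    """Hypothetical stand-in for an analytics SDK; it only records actions in memory."""

    def __init__(self):
        self.events = []

    def track(self, action, properties):
        self.events.append({"action": action, "properties": dict(properties)})


class TutorialTracker:
    """Sends one "Tutorial Step" action as each step is completed (an assumption)."""

    def __init__(self, tracker, clock=time.monotonic):
        self.tracker = tracker
        self.clock = clock                # injectable so the sketch is testable
        self.step_started_at = clock()

    def complete_step(self, step):
        now = self.clock()
        self.tracker.track("Tutorial Step", {
            "Step": step,                                   # 0 = first step
            "Duration": round(now - self.step_started_at),  # whole seconds on this step
        })
        self.step_started_at = now                          # next step's timer starts now


ticks = iter([0.0, 4.2, 9.0])  # fake clock readings, in seconds
tracker = Tracker()
tutorial = TutorialTracker(tracker, clock=lambda: next(ticks))
tutorial.complete_step(0)
tutorial.complete_step(1)
print(tracker.events)
```

Using a monotonic clock avoids negative durations if the device's wall-clock time changes mid-tutorial.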

Analysis

Analysing the tutorial data is reasonably easy. Most of the metrics can be found by creating a simple conversion funnel, with one funnel step for each tutorial stage.

The completed funnel query shows the conversion rate of the entire tutorial on a step by step basis. From here it is very easy to see which steps "lose" the most users.

As you can see from the results, step 4 has a conversion rate of around 97% compared to 99% for the other steps, which makes it a good candidate to improve. Even though it's only a 2 percentage point difference, that still means around $1k in lost revenue just on that step. Per month! For a popular game the difference would be much larger.
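The step-by-step conversion funnel described above can be approximated from raw Tutorial Step actions. A minimal sketch, assuming the events have been reduced to (user, step) pairs; it ignores event order because a linear tutorial can only be played in order, which a general funnel engine would not assume:

```python
from collections import defaultdict


def tutorial_funnel(step_events, num_steps):
    """Step-by-step conversion for a linear tutorial.

    step_events: (user_id, step) pairs taken from "Tutorial Step" actions.
    A user counts towards step k only if they also reached every earlier step.
    Returns (step, users_reaching_step, conversion_from_previous_step) rows.
    """
    steps_by_user = defaultdict(set)
    for user, step in step_events:
        steps_by_user[user].add(step)

    rows, previous = [], None
    for k in range(num_steps):
        reached = sum(
            1 for steps in steps_by_user.values()
            if all(s in steps for s in range(k + 1))
        )
        if previous is None:
            rate = 1.0  # the first step is the funnel's entry point
        else:
            rate = reached / previous if previous else 0.0
        rows.append((k, reached, rate))
        previous = reached
    return rows


events = [("a", 0), ("a", 1), ("a", 2), ("b", 0), ("b", 1), ("c", 0)]
for step, users, rate in tutorial_funnel(events, 3):
    print(f"step {step}: reached by {users}, {rate:.0%} from previous step")
```

The step with the lowest conversion from the previous step is the one "losing" the most users.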

Part 2

Original: http://www.gamedev.net/page/resources/_/technical/mobile-development/standard-gameplay-and-iap-metrics-for-mobile-games-part-2-r3899

Measuring gameplay

Gameplay is obviously a critical component in the success of a mobile game. It won't matter how great the artwork or soundtrack is if the gameplay isn't awesome too.

Drilling down into the gameplay specifics will vary between games of different genres. Our example game, Ancient Blocks, is a level-based puzzle game and the metrics that are collected here will reflect that. If you are following this series for your own games then you will need to adjust the metrics accordingly.

Game balance

It's imperative that a game is well balanced. If it's too easy then players will get bored. If it's too hard then players will get frustrated and may quit playing entirely. We want to avoid both scenarios.

For Ancient Blocks the initial gameplay metrics we are going to record are:

- The percentage of players who finish the first level.

- The percentage of players who finish the first 5 levels.

- The percentage of players that quit without finishing a level.

- The number of times a player replays a level before passing the level.

- The average time spent playing each level.

- The number of "power ups" that a player uses to pass each level.

- The number of blocks a player swipes to pass each level.

- The number of launches (block explosions) that a player triggers to pass each level.

Implementation

The example game is reasonably simple and we can get a lot of useful data from just 3 actions: Gameplay.Start for when a player starts a new level of our game, Gameplay.Finish for when a player finishes playing a level (the same action whether they passed or failed), and Gameplay.PowerUp for when a player uses one of Ancient Blocks' special power-ups (bomb, colour remove, or slow down) whilst playing a level.

| Action | Properties |
|---|---|
| Gameplay.Start | |
| Gameplay.Finish | Level Id, Success (whether the level was passed) |
| Gameplay.PowerUp | |

Analysis

With just the 3 actions defined above it is possible to do a range of in-depth analysis on player behaviour and game balance.

Early player progression

A player's initial experience of a game extends beyond the tutorial to the first few levels. It is critical to get this right. Progress rate is a great indicator of whether or not the first levels are balanced, and whether players really understood the tutorial that showed them how to play (tutorial metrics were covered in the previous article).

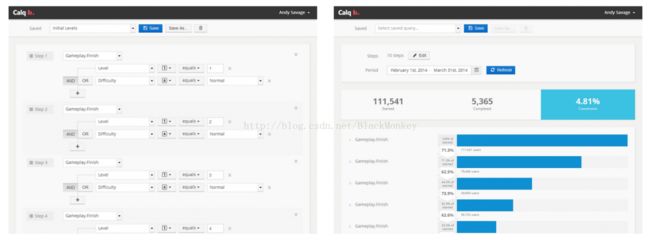

For Ancient Blocks the time taken on each level is reasonably short, so we are going to analyse initial progression through the first 10 levels. To do this we can create a conversion funnel that describes the user journey through these first 10 levels (or more if we wanted). The funnel will need 10 steps, one for each of the early levels. The action to be analysed is Gameplay.Finish, as this represents finishing a level.

Each step will need a filter. The first filter needs to be on the level Id to filter the step to the correct level, and a second filter on the Success property to only include level play that passed. We don't want to include failed attempts at a level in our progression stats.
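The per-step filters (level Id plus Success) translate directly into code. A minimal sketch, assuming Gameplay.Finish events are available as dicts; the key names `user`, `level_id` and `success` are illustrative rather than the game's actual property names:

```python
from collections import defaultdict


def level_progression(finish_events, first_n_levels=10):
    """Players who passed level 1, levels 1-2, ..., levels 1-N (one funnel step per level).

    finish_events: dicts mirroring a "Gameplay.Finish" action, e.g.
    {"user": "p1", "level_id": 3, "success": True}; the key names are illustrative.
    """
    passed = defaultdict(set)
    for e in finish_events:
        if e["success"]:  # failed attempts are not progress
            passed[e["user"]].add(e["level_id"])

    return [
        sum(1 for levels in passed.values()
            if all(lvl in levels for lvl in range(1, level + 1)))
        for level in range(1, first_n_levels + 1)
    ]


finish_events = [
    {"user": "p1", "level_id": 1, "success": True},
    {"user": "p1", "level_id": 2, "success": False},
    {"user": "p1", "level_id": 2, "success": True},
    {"user": "p2", "level_id": 1, "success": True},
    {"user": "p2", "level_id": 2, "success": False},
    {"user": "p3", "level_id": 1, "success": False},
]
print(level_progression(finish_events, first_n_levels=3))  # [2, 1, 0]
```

Player p1's failed first attempt at level 2 does not count, but their later pass does; p3 never passes level 1 and so never enters the funnel.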

All games will have a natural rate of drop off as levels increase since not all players will want to progress further into the game. Some people just won't enjoy playing your game - we are all different in what we look for and that's no bad thing. However, if certain levels are experiencing a significantly larger drop off than we expect, or a sudden drop compared to the level before, then those levels are good candidates to be rebalanced. It could be that the level is too hard, it could be less enjoyable, or it could be that the player doesn't understand what they need to do to progress.
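One way to separate natural drop-off from a suspicious one is to compare each step's conversion with the typical conversion across all steps. A minimal sketch, assuming the median step conversion is a fair baseline; the 0.05 tolerance is an arbitrary illustration, not an industry standard:

```python
from statistics import median


def flag_drop_offs(counts, tolerance=0.05):
    """Indices of funnel steps whose conversion is unusually low.

    counts[i] is how many players reached step i (for example, the output of a
    level-progression funnel). Each step's conversion from the previous step is
    compared with the median conversion across steps.
    """
    rates = {
        i: counts[i] / counts[i - 1]
        for i in range(1, len(counts))
        if counts[i - 1] > 0
    }
    if not rates:
        return []
    typical = median(rates.values())
    return [i for i, rate in rates.items() if rate < typical - tolerance]


print(flag_drop_offs([1000, 900, 815, 600, 545]))  # [3]
```

Index 3 means the loss happens between counts[2] and counts[3]; in a level funnel whose index 0 is level 1, that points at level 4 as the candidate for rebalancing.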

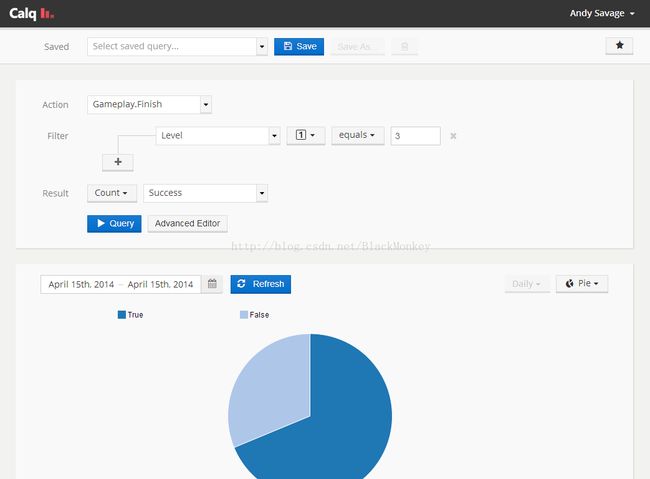

Level completion rates

Player progression doesn't provide the entire picture. A level might be played many times before it is actually completed and a conversion funnel doesn't show this. We need to look at how many times each level is being played compared to how many times it is actually completed successfully.

As an example, let's look at Ancient Blocks' 3rd level. We can query the number of times the level has been played and break it down into successes and failures. We do this using the Gameplay.Finish action again, and apply a filter to only show the 3rd level. This time we group the results by the Success property to display the success rate.

The design spec for Ancient Blocks has a 75% success rate target for the 3rd level. As you can see from the results above it's slightly too hard, though not by much. A little tweaking of level parameters could get us on target reasonably easily.
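Grouping Gameplay.Finish by Success for a single level reduces to a simple ratio. A minimal sketch, assuming events shaped as dicts with illustrative `level_id` and `success` keys; the plays below are synthetic, not the game's real data:

```python
from collections import Counter


def success_rate(finish_events, level_id):
    """Share of finished plays of one level that passed (Gameplay.Finish grouped by Success)."""
    outcomes = Counter(e["success"] for e in finish_events if e["level_id"] == level_id)
    plays = outcomes[True] + outcomes[False]
    return outcomes[True] / plays if plays else 0.0


TARGET = 0.75  # the design target for level 3 quoted above
# Synthetic plays: 7 passes out of 10 attempts at level 3.
plays = [{"level_id": 3, "success": i < 7} for i in range(10)]
rate = success_rate(plays, 3)
print(f"level 3: {rate:.0%} vs target {TARGET:.0%}")  # level 3: 70% vs target 75%
```

A rate below the target suggests the level is harder than intended; one over the rate gives the average number of attempts per pass.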

Aborted sessions

An incredibly useful metric for measuring early gameplay is the number of people who start a level but never finish it, i.e. they close the application (press the home button etc.). This is especially useful to measure straight after the tutorial level. If players are just quitting then they either don't like the game, or they are getting frustrated.

We can measure this with a short conversion funnel using the Gameplay.Start action, the Tutorial Step action from the previous article (so we include only people who have already finished the tutorial), and the Gameplay.Finish action.

The results above show that 64.9% of players (which is the result between the 2nd and 3rd step in the funnel) that actually finished the tutorial went on to also finish the level. This means 35.1% of players quit the game in that gap. This number is much higher than we would expect, and represents a lot of lost players. This is a critical metric for the Ancient Blocks designers to iterate on and improve.
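The three-step funnel and the quit rate between its 2nd and 3rd steps can be sketched as follows. This assumes events are (user, timestamp, action, properties) tuples and that the 10-step tutorial is numbered 0-9, so a Tutorial Step with Step 9 marks completion; both are assumptions for illustration:

```python
from collections import defaultdict


def post_tutorial_funnel(events, last_step=9):
    """Three-step funnel: Gameplay.Start -> tutorial finished -> Gameplay.Finish.

    events: (user, timestamp, action, properties) tuples. last_step=9 assumes the
    10-step tutorial is numbered 0-9. Returns the number of users reaching each step.
    """
    first_start, tutorial_done, finishes = {}, {}, defaultdict(list)
    for user, ts, action, props in sorted(events, key=lambda e: e[1]):
        if action == "Gameplay.Start":
            first_start.setdefault(user, ts)
        elif action == "Tutorial Step" and props.get("Step") == last_step:
            tutorial_done.setdefault(user, ts)
        elif action == "Gameplay.Finish":
            finishes[user].append(ts)

    # Step 2 must follow step 1, and step 3 must follow step 2.
    step2 = {u: t for u, t in tutorial_done.items() if u in first_start and first_start[u] <= t}
    step3 = [u for u, t in step2.items() if any(f >= t for f in finishes[u])]
    return len(first_start), len(step2), len(step3)


events = [
    ("a", 1, "Gameplay.Start", {}), ("a", 2, "Tutorial Step", {"Step": 9}),
    ("a", 3, "Gameplay.Finish", {"Success": True}),
    ("b", 1, "Gameplay.Start", {}), ("b", 2, "Tutorial Step", {"Step": 9}),  # quit here
    ("c", 1, "Gameplay.Start", {}), ("c", 2, "Tutorial Step", {"Step": 4}),  # left mid-tutorial
]
started, tutorial, finished = post_tutorial_funnel(events)
print(f"finished the level: {finished / tutorial:.1%}, quit: {1 - finished / tutorial:.1%}")
```

With the article's figures, the same calculation gives a 64.9% completion rate and therefore a 35.1% quit rate.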

Part 3

Original: http://www.gamedev.net/page/resources/_/technical/mobile-development/standard-gameplay-and-iap-metrics-for-mobile-games-part-3-r3941

Optimizing in-app purchases (IAPs)

The goal of most mobile games is to either generate brand awareness or to provide revenue. Ancient Blocks is a commercial offering using the freemium model and revenue is the primary objective.

The game has an in-game currency called "Gems" which can be spent on boosting the effects of in-game power-ups. Using a power-up during a level also costs a gem each time. Players can slowly accrue gems by playing; alternatively, they can buy additional gems in bulk using real-world payments.

Our goal here is to increase the average life time value (LTV) of each player. This is done in 3 ways: converting more players into paying customers, making those customers pay more often, and increasing the value of each purchase made.
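The three levers multiply together. For a cohort of $N$ players observed over some time window, with $P$ paying players making $T$ completed purchases worth $R$ in total, revenue per player decomposes exactly as shown below (this is an accounting identity over the chosen window, and equals LTV only if the window covers the players' whole lifetime):

```latex
\frac{R}{N}
  = \underbrace{\frac{P}{N}}_{\text{payer conversion}}
  \times \underbrace{\frac{T}{P}}_{\text{purchases per payer}}
  \times \underbrace{\frac{R}{T}}_{\text{average purchase value}}
```

With illustrative numbers, a 2% payer conversion, 1.5 purchases per payer and \$4.00 per purchase give $0.02 \times 1.5 \times 4.00 = \$0.12$ per player; improving any one factor by 10% raises revenue per player by 10%.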

Some of the metrics we will need to measure include:

- Which user journey to the IAP screen gives the best conversions?

- The number of players that look at the IAP options but do not go on to make a purchase.

- The number of players that try to make a purchase but fail.

- Which items are the most popular?

- The cost brackets of the most popular items.

- The percentage of customers that go on to make a repeat purchase.

- The customer sources (e.g. ad campaigns) that generate the most valuable customers.

Implementation

Most of the required metrics can be achieved with just 4 simple actions, all related to purchase actions:

- Monetization.IAP - When a player actually buys something with real world cash using in-app purchasing (i.e. buying new gems, not spending gems).

- Monetization.FailedIAP - A player tried to make a purchase but the transaction did not complete. The store provider (whether that be iTunes, Google Play etc.) normally gives back some extra information indicating the reason.

- Monetization.Shop - The player opened the shop screen. It's important to know how players reached the shop screen. If a particular action (such as an in-game prompt) generates the most sales, then you will want to trigger that prompt more often (and probably refine its presentation).

- Monetization.Spend - The player spent gems in the shop to buy something. This is needed to map between real world currency and popular items within the game (as they are priced in gems).

| Action | Properties |
|---|---|
| Monetization.IAP | |
| Monetization.FailedIAP | |
| Monetization.Shop | Screen (e.g. "Main", "IAP") |
| Monetization.Spend | |

In addition to these properties, Ancient Blocks tracks a range of global properties (set with setGlobalProperty(...)) detailing how each player was acquired (which campaign, which source etc.). This is done automatically by the SDK where supported.
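The idea behind global properties is that values set once are attached to every later action, so any action can be broken down by acquisition source. A minimal sketch of that idea; `Tracker` and `set_global_property` are hypothetical illustrations, not Calq's actual SDK, and the property names and values are made up:

```python
class Tracker:
    """Hypothetical tracker: global properties are merged into every action sent afterwards."""

    def __init__(self):
        self.global_properties = {}
        self.events = []

    def set_global_property(self, name, value):
        self.global_properties[name] = value

    def track(self, action, properties=None):
        # Per-action properties win if a name clashes with a global one.
        merged = {**self.global_properties, **(properties or {})}
        self.events.append((action, merged))


tracker = Tracker()
tracker.set_global_property("Source", "ad_network_a")   # hypothetical names and values
tracker.set_global_property("Campaign", "launch_week")
tracker.track("Monetization.Shop", {"Screen": "IAP"})
print(tracker.events[-1])
# ('Monetization.Shop', {'Source': 'ad_network_a', 'Campaign': 'launch_week', 'Screen': 'IAP'})
```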

IAP conversions

One of the most important metrics is the conversion rate for the in-game store, i.e. how many people viewing the store (or even just playing the game) go on to make a purchase with real-world currency.

Typically around 1.5 - 2.5% of players will actually make a purchase in this style of freemium game. The store-to-purchase conversion rate however is typically much lower. This is because the store is often triggered many times in a single game session, once after each level in some games. If a game is particularly aggressive at funnelling players towards the store screen then the conversion rate could be even lower - and yet still be a good conversion rate for that game.

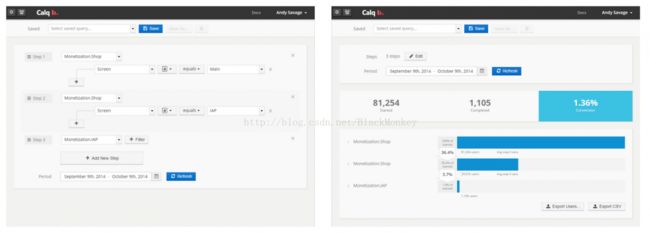

To measure this in Ancient Blocks a simple funnel is used with the following actions:

- Monetization.Shop (with the Screen property set to "Main") - the player opened the main shop screen.

- Monetization.Shop (with the Screen property set to "IAP") - the player opened the IAP shop (the shop that sells Gems for real world money).

- Monetization.IAP - the player made (and completed) a purchase.

As you can see, the conversion rate in Ancient Blocks is 1.36%. This is lower than expected and is a good indicator that the process needs some adjustment. When the designers of Ancient Blocks modify the store page and the prompts to trigger it, they can revisit this conversion funnel to see if the changes had a positive impact.
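The shop funnel above is an ordered funnel: each step only counts if it happens after the previous one. A minimal sketch, assuming events are (user, timestamp, action, properties) tuples and that the Screen values are "Main" and "IAP" as described; the events are synthetic:

```python
from collections import defaultdict


def ordered_funnel(events, steps):
    """Users reaching each funnel step, where step k must happen after step k-1.

    events: (user, timestamp, action, properties) tuples.
    steps: predicates taking (action, properties).
    """
    by_user = defaultdict(list)
    for user, ts, action, props in events:
        by_user[user].append((ts, action, props))

    counts = [0] * len(steps)
    for history in by_user.values():
        history.sort(key=lambda e: e[0])
        reached = 0
        for _, action, props in history:
            # Matching each step at its earliest opportunity is enough to decide
            # whether the whole sequence occurs in order.
            if reached < len(steps) and steps[reached](action, props):
                reached += 1
        for k in range(reached):
            counts[k] += 1
    return counts


iap_funnel = [
    lambda a, p: a == "Monetization.Shop" and p.get("Screen") == "Main",
    lambda a, p: a == "Monetization.Shop" and p.get("Screen") == "IAP",
    lambda a, p: a == "Monetization.IAP",
]
events = [
    ("u1", 1, "Monetization.Shop", {"Screen": "Main"}),
    ("u1", 2, "Monetization.Shop", {"Screen": "IAP"}),
    ("u1", 3, "Monetization.IAP", {}),
    ("u2", 1, "Monetization.Shop", {"Screen": "Main"}),
    ("u2", 2, "Monetization.Shop", {"Screen": "IAP"}),
    ("u3", 1, "Monetization.Shop", {"Screen": "Main"}),
    ("u4", 1, "Monetization.Shop", {"Screen": "IAP"}),  # IAP screen before Main: out of order
    ("u4", 2, "Monetization.Shop", {"Screen": "Main"}),
]
counts = ordered_funnel(events, iap_funnel)
print(counts, f"overall: {counts[-1] / counts[0]:.1%}")  # [4, 2, 1] overall: 25.0%
```

Re-running the same query after changing the store page or its prompts gives a like-for-like comparison.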

IAP failures

It's useful to monitor the failure rates of attempted IAPs. This can easily be measured using the Monetization.FailedIAP action from earlier.

You should look at why payments are failing so you can try to do something about it (though some of the time it might be out of the developers' control). Sharp changes in IAP rates can also indicate problems with payment gateways, API changes, or even attempts at fraud. In each of these cases you would want to act proactively.

The reasons given for failure vary between payment providers (whether that's a mobile provider such as Google Play or the App Store, or an online payment provider). Depending on your provider you will get more or less granular data to act upon.
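A failure report needs two things: the share of attempted purchases that failed, and the failures broken down by reason. A minimal sketch, assuming each FailedIAP action carries a "Reason" property (a hypothetical name, since providers report reasons differently); the spike rule comparing today with a recent average is also an arbitrary illustration:

```python
from collections import Counter


def iap_failure_report(iap_events, failed_events):
    """Failure rate of attempted purchases, plus failures broken down by reason.

    iap_events / failed_events: property dicts from "Monetization.IAP" and
    "Monetization.FailedIAP" actions. The "Reason" key is an assumption.
    """
    attempts = len(iap_events) + len(failed_events)
    rate = len(failed_events) / attempts if attempts else 0.0
    reasons = Counter(e.get("Reason", "unknown") for e in failed_events)
    return rate, reasons.most_common()


def is_spike(daily_rates, today_rate, factor=2.0):
    """Flag a failure rate more than `factor` times the recent average (arbitrary rule)."""
    if not daily_rates:
        return False
    baseline = sum(daily_rates) / len(daily_rates)
    return today_rate > factor * baseline


failed = [{"Reason": "UserCancelled"}, {"Reason": "UserCancelled"}, {"Reason": "NetworkError"}]
rate, reasons = iap_failure_report([{}] * 7, failed)
print(f"{rate:.0%}", reasons)  # 30% [('UserCancelled', 2), ('NetworkError', 1)]
print(is_spike([0.04, 0.05, 0.06], rate))  # True
```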

Comparing IAPs across customer acquisition sources

Most businesses measure the conversion effectiveness of acquisition campaigns (e.g. the number of impressions compared to the number of people that downloaded the game). Using Calq this can be taken further to show the acquisition sources that actually went on to make the most purchases (or spend the most money etc).

Using the Monetization.IAP or Monetization.Spend actions as appropriate, Calq can chart the data based on the referral data set with setGlobalProperty(...). Remember that you may have more players from one source than another, which would bias the raw totals; the query should be normalised by the total number of players per source.
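Normalising by players per source is a single division, but it can reverse the conclusion drawn from raw totals. A minimal sketch, assuming each player's acquisition source is known (for example from a global property) and purchases are (player, amount) pairs; the names, sources and amounts are illustrative:

```python
from collections import Counter, defaultdict


def revenue_per_player_by_source(player_sources, purchases):
    """Revenue per acquired player, per acquisition source.

    player_sources: {player_id: source}, e.g. read from a global property.
    purchases: (player_id, amount) pairs from "Monetization.IAP" actions.
    """
    players_per_source = Counter(player_sources.values())
    revenue = defaultdict(float)
    for player, amount in purchases:
        source = player_sources.get(player)
        if source is not None:  # skip purchases with no known source
            revenue[source] += amount
    # Normalise by audience size so a source is not favoured just for sending more players.
    return {source: revenue[source] / n for source, n in players_per_source.items()}


player_sources = {"p1": "ad_network_a", "p2": "ad_network_a", "p3": "ad_network_a",
                  "p4": "ad_network_a", "p5": "organic"}
purchases = [("p1", 4.99), ("p2", 4.99), ("p5", 9.99)]
print(revenue_per_player_by_source(player_sources, purchases))
```

Both sources bring in almost the same raw revenue here (9.98 vs 9.99), yet each organic player is worth roughly four times as much as a player from the ad network.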

The results indicate which customer sources are spending more, and this data should be factored into any acquisition budgets. This technique can also be used to measure other in-game actions that are not revenue related; for example, it's extremely useful to measure engagement and retention by acquisition source.

Series summary

This 3-part series is meant as a starting point to build upon. Each game is going to be slightly different and it will make sense to measure different events. The live version of Ancient Blocks actually measures many more data points than this.

Key take away points:

- The ultimate goal is to improve the core KPIs (retention, engagement, and user LTVs), but to do this you will need to measure and iterate on many smaller game components.

- Metrics are often linked. Improving one metric will normally affect another and vice versa.

- Propose, test, measure, and repeat. Always be adding refinements or new features to your product. Measure the impact each time. If it works then you refine it and measure again. If it doesn't then you should rethink or remove it. Don't be afraid to kill features that are not adding any value!

- Measure everything! You will likely want to answer even more business or product questions of your game later, but you will need the data there first to answer these questions.

About the Author(s)

After spending many years in the social and mobile gaming industries, Andy has recently made the switch over to analytics. Andy is keen to show game devs that recording the correct metrics is a critical tool for game development.

License

GDOL (Gamedev.net Open License)