hadoop大数据平台

一般hadoop不能安装在root用户下,所以首先新建一个用户。

[root@server1 ~]# useradd hadoop

[root@server1 ~]# su - hadoop

下载安装包

hadoop-3.2.1.tar.gz和jdk-8u241-linux-x64.tar.gz

本地离线

将压缩包解压,并且做两个软链接方便以后升级使用。

[hadoop@server1 ~]$ tar zxf jdk-8u241-linux-x64.tar.gz

[hadoop@server1 ~]$ tar zxf hadoop-3.2.1.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.8.0_241/ java

[hadoop@server1 ~]$ ln -s hadoop-3.2.1 hadoop

升级的操作是将java这个软链接到时候链接到新的jdk版本上就行了。

更改hadoop文件中java的路径即可。现在设置如下

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/hadoop/java##指定java路径

export HADOOP_HOME=/home/hadoop/hadoop ##指定hadoop路径

现在做的实验是离线的,所以也不用启动什么别的服务,里面都有包直接进行调用即可。包在下面的位置

[hadoop@server1 hadoop]$ ls share/hadoop/mapreduce/

hadoop-mapreduce-client-app-3.2.1.jar

hadoop-mapreduce-client-common-3.2.1.jar

hadoop-mapreduce-client-core-3.2.1.jar

hadoop-mapreduce-client-hs-3.2.1.jar

hadoop-mapreduce-client-hs-plugins-3.2.1.jar

hadoop-mapreduce-client-jobclient-3.2.1.jar

hadoop-mapreduce-client-jobclient-3.2.1-tests.jar

hadoop-mapreduce-client-nativetask-3.2.1.jar

hadoop-mapreduce-client-shuffle-3.2.1.jar

hadoop-mapreduce-client-uploader-3.2.1.jar

hadoop-mapreduce-examples-3.2.1.jar

jdiff

lib

lib-examples

sources

下来创建一个目录,将文件拷过来

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

##直接调用hadoop-mapreduce-examples-3.2.1.jar这个包 使用grep过滤input目录中以fds开头的输出到output中,注意output这个目录不能存在

[hadoop@server1 hadoop]$ cd output/ ##output会自动生成

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 output]$ cat * ##查看文件中的内容

1 dfsadmin

这样离线式的就完成了

伪分布式

用单个节点模拟整个分布式

首先编辑核心文件,告诉master在哪

[hadoop@server1 hadoop]$ vim etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>##master名字

<value>hdfs://localhost:9000</value>##master地址和端口号

</property>

</configuration>

[hadoop@server1 hadoop]$ vim workers ##现在做的是伪分布式的,所以不用管这个文件,本来要在里面指定master和slave

服务启动后会先找core-site.xml文件,然后连接woekers读取用户

同时还要设定副本数

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value> ##设定副本数为1,hadoop默认副本为3,但是现在没有那么多节点所以设置一个即可

</property>

</cotnfiguratiot>

下来做免密,因为hadoop访问需要

[hadoop@server1 hadoop]$ ssh-keygen

免密完成之后将密钥追加到~/.ssh/authorized_keys文件中并修改权限

[hadoop@server1 hadoop]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hadoop@server1 hadoop]$ chmod 600 ~/.ssh/authorized_keys

[hadoop@server1 hadoop]$ ssh 192.168.122.11 ##连接自己查看免密是否成功

做完上面的工作使用命令格式化

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

默认不指定用户的话会在/tmp/下生成一个开头为hadoop的文件横线后面的是用户名,接着后面为/dfs/name的目录,这个目录下面放的是元数据镜像。

下面为了能使用命令更为方便设置环境变量。

[hadoop@server1 ~]$ vim .bash_profile

JAVA_HOME=/home/hadoop/java

PATH=$PATH:$HOME/.local/bin:$HOME/bin:$JAVA_HOME/bin

[hadoop@server1 ~]$ source .bash_profile ##读取文件

[hadoop@server1 ~]$ jps##查看启动了什么东西

2880 SecondaryNameNode ##第二主节点 最好启动在别的机器上

3152 Jps

2561 NameNode ##主节点 相当于master

2677 DataNode ##从节点/数据节点 相当于slave

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report ##查看报告

Configured Capacity: 13407092736 (12.49 GB)

Present Capacity: 9898311680 (9.22 GB)

DFS Remaining: 9898303488 (9.22 GB)

DFS Used: 8192 (8 KB)

DFS Used%: 0.00%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (1):

Name: 192.168.122.11:9866 (server1) ##现在只有一个节点存活

Hostname: server1

Decommission Status : Normal

Configured Capacity: 13407092736 (12.49 GB)

DFS Used: 8192 (8 KB)

Non DFS Used: 3508781056 (3.27 GB)

DFS Remaining: 9898303488 (9.22 GB)

DFS Used%: 0.00%

DFS Remaining%: 73.83%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Fri Mar 27 21:49:08 CST 2020

Last Block Report: Fri Mar 27 21:11:47 CST 2020

Num of Blocks: 0

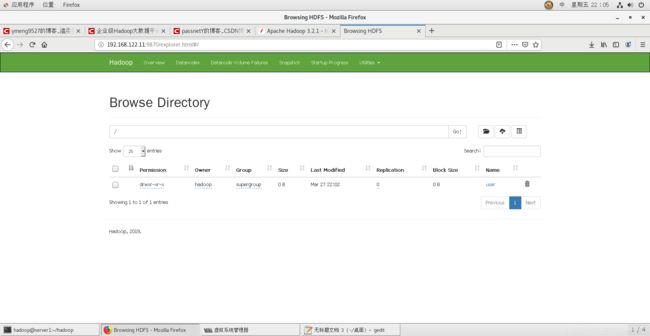

除了上面的可以查看节点等信息hadoop还自带了一个可视化在9870端口

下来我们给上面放一些文件

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir -p /user/hadoop##创建一个文件目录

这样就创建完成,并且在可视化端也可以看到

现在连上去默认连的就是hadoop这个用户使用命令查看的时候也是默认查看/user/hadoop这个目录中的东西。包括别的操作也是在这个目录中的。

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls ##列出文件

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input ##将input上传

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount input output

##现在这样输出output不会出现在本地,而是出现在分布式节点上,查看本地的没用,需要查看要用命令

[hadoop@server1 hadoop]$ bin/hadoop dfs -ls

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2020-03-27 22:08 input

drwxr-xr-x - hadoop supergroup 0 2020-03-27 22:14 output

[hadoop@server1 hadoop]$ bin/hadoop dfs -cat output/*

或者将本地的output删除掉然后直接将output下载下来进行查看

[hadoop@server1 hadoop]$ bin/hadoop dfs -get output

完全分布式

再启动两台虚拟机,构成一个master两个slave的集群

首先将伪分布式的集群停止

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

Stopping namenodes on [server1]

Stopping datanodes

Stopping secondary namenodes [server1]

[hadoop@server1 hadoop]$ rm -rf /tmp/* ##将原来的数据删除

在server1上将备份数从1改为2,现在有两个节点

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

将woeker更改

[hadoop@server1 hadoop]$ vim workers ##工作节点

192.168.122.12

192.168.122.13

[hadoop@server1 hadoop]$ bin/hdfs namenode -format ##重新初始化

初始化完成给每个节点安装nfs,保证数据传输一致,并且创建hadoop一致,以及hadoop用户id一致。

[root@server1 ~]# yum install nfs-utils.x86_64 -y

[root@server1 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) 组=1000(hadoop)

[root@server2 ~]# useradd hadoop

[root@server2 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) 组=1000(hadoop)

将nfs安装完成后在server1上将/home/hadoop共享出去

[root@server1 ~]# vim /etc/exports

/home/hadoop *(rw,anonuid=1000,anongid=1000)

在server1上启动nfs在别的节点启动rpcbind,之后将目录挂载

[root@server1 ~]# systemctl start nfs

[root@server2 ~]# systemctl start rpcbind.service

[root@server2 ~]# mount 192.168.122.11:/home/hadoop /home/hadoop

[root@server2 ~]# su - hadoop

[hadoop@server2 ~]$ ls

hadoop hadoop-3.2.1.tar.gz jdk1.8.0_241

hadoop-3.2.1 java jdk-8u241-linux-x64.tar.gz

这样的方式在任意节点都可以进行更改,设定完成后启动集群

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

Starting datanodes

Starting secondary namenodes [server1]

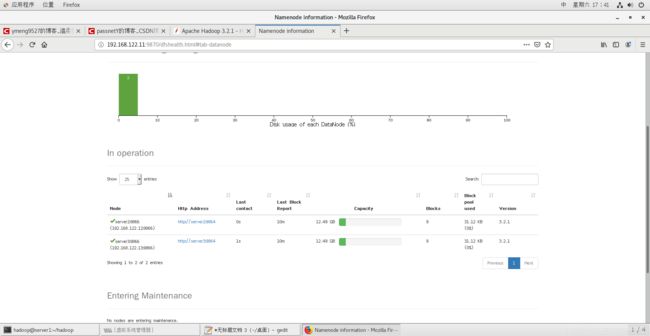

这时server1为namenode,server2,3为datanode

[hadoop@server1 hadoop]$ jps

2834 SecondaryNameNode

2615 NameNode ##只存分布式系统的元数据

[hadoop@server2 ~]$ jps

2337 DataNode ##专门存数据

[hadoop@server3 ~]$ jps

1853 DataNode

现在上传一些数据

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir -p /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input

上传完成后在web界面可以进行查看

看到有两个节点

数据两份

在线扩容

[root@server4 ~]# useradd hadoop ##创建用户

[root@server4 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) 组=1000(hadoop) ##确保id一样

[root@server4 ~]# yum install -y nfs-utils.x86_64 ##安装nfs

[root@server4 ~]# systemctl start rpcbind.service

[root@server4 ~]# mount 192.168.122.11:/home/hadoop/ /home/hadoop/ ##挂载家目录

[root@server4 ~]# su - hadoop

[hadoop@server4 hadoop]$ cd etc/hadoop/

[hadoop@server4 hadoop]$ vim workers ##将4的ip加入workers

192.168.122.14

[hadoop@server4 hadoop]$ cd ../..

[hadoop@server4 hadoop]$ bin/hdfs --daemon start datanode ##不用全部启动,只用在4上启动datanode就可以了

[hadoop@server4 hadoop]$ jps

1762 DataNode

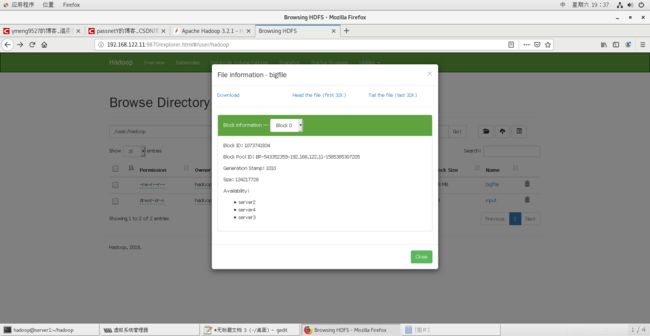

我们现在一个块只能存放128M如果我们存放很多的数据呢?当我们存放一个500M的文件进行上传。

[h

adoop@server4 hadoop]$ dd if=/dev/zero of=bigfile bs=1M count=500

[hadoop@server1 hadoop]$ bin/hdfs dfs -put bigfile ##将文件分成了4个块

2020-03-28 19:27:08,707 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-03-28 19:28:22,596 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-03-28 19:29:10,772 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-03-28 19:29:48,756 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

mapreduce

设置:

首先编辑文件

[hadoop@server1 hadoop]$ vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value> ##使用yarn进行管理

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value> ##调用jar包中的文件

</property>

</configuration>

[hadoop@server1 hadoop]$ vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

设置完成进行启动

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

Starting resourcemanager

Starting nodemanagers

192.168.122.14: Warning: Permanently added '192.168.122.14' (ECDSA) to the list of known hosts.