[Beginner Installation Series] - Building a Local Hadoop Cluster with Docker

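- Background
- Docker Installation & Usage
  - Download & Install
  - Basic Commands
- Creating an Ubuntu Virtual Machine with Docker
  - Step 1: Create the Ubuntu virtual machine
  - Step 2: Start the Ubuntu machine
- Installing Java
- Installing & Configuring Hadoop
  - Download and install inside the virtual machine
  - Copy from the host into the virtual machine
  - Environment configuration
    - .bashrc
    - hadoop-env.sh
    - core-site.xml
    - hdfs-site.xml
    - mapred-site.xml
- Installing SSH & Configuring Access Between Machines
  - Install SSH
  - Configuration
  - Commit the virtual machine
- Starting the Hadoop Cluster
  - Start master, slave1, slave2
  - Configure hosts
  - Configure SSH keys
  - Start Hadoop
- References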

Background

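When learning Hadoop, a pseudo-distributed cluster alone never felt like enough, so I wanted to use virtual machines to simulate a Hadoop cluster like the ones in a real working environment. I first tried VirtualBox and similar tools, but was never happy with them. A colleague suggested building the environment with Docker instead: it deploys quickly and runs seriously fast. This post documents the Docker-based Hadoop cluster I just got working.

Docker Installation & Usage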

Download & Install

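1. Download: https://www.docker.com/products/docker#/mac

2. Installation guide: https://docs.docker.com/docker-for-mac/

[The official site explains this very clearly and installation is simple, so I won't go into detail here.]

Basic Commands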

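docker ps: list running containers (the "virtual machines" in this post)

docker images: list local images

docker pull: download an image from a registry

docker commit: save a container's changes as a new image

docker run: create and start a container from an image

These commands are used in the steps below.

Creating an Ubuntu Virtual Machine with Docker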

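Step 1: Create the Ubuntu virtual machine

Command: docker pull ubuntu:14.04. This feels a bit like a Maven repository: the image is downloaded from library/ubuntu. You can also pull from other repositories; images are named with the repository:tag syntax.

Step 2: Start the Ubuntu machine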

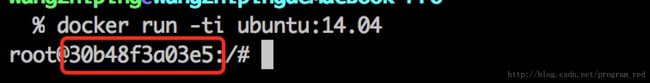

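List the image pulled in step 1: docker images

Start it: docker run -ti ubuntu:14.04

The string in the red box is the container ID, which also appears in the CONTAINER ID column of docker ps. It is very useful later, for example with docker cp and docker commit. At this point the Ubuntu virtual host is up.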
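The steps of this section can be collected into one small script. This is a minimal sketch rather than part of the original walkthrough; the `run` helper and the `DRY_RUN` switch are hypothetical names introduced here so the sequence can be previewed before anything is pulled or started.

```shell
#!/usr/bin/env bash
# Minimal sketch of this section's Docker steps: pull, list, run.
# run() and DRY_RUN are hypothetical helpers introduced for this example:
# DRY_RUN=1 (default) prints each command, DRY_RUN=0 executes it.
set -euo pipefail

IMAGE="${IMAGE:-ubuntu:14.04}"   # repository:tag
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

run docker pull "$IMAGE"       # download library/ubuntu:14.04 from Docker Hub
run docker images "$IMAGE"     # confirm the image is now present locally
run docker run -ti "$IMAGE"    # -i keeps STDIN open, -t allocates a terminal
```

With DRY_RUN=0 the last command drops you into a root shell inside the new container, whose prompt shows the container ID mentioned above.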

Installing Java

With the host in place, install the JVM environment that Hadoop needs:

[1] apt-get update

[2] apt-get install software-properties-common python-software-properties

[3] add-apt-repository ppa:webupd8team/java

[4] apt-get install oracle-java8-installer

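Run apt-get update first; otherwise the later commands can easily fail to install, which is a trap I fell into myself. I also hit the following error during installation:

Unable to locate package oracle-java7-installer

For the fix, see:

http://blog.csdn.net/program_red/article/details/53820100

[5] export JAVA_HOME=/usr/lib/jvm/java-8-oracle

Installing & Configuring Hadoop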

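There are two ways to install and configure Hadoop:

[1] Download and install it inside the virtual machine

[2] Copy it from the host into the virtual machine

Download and install inside the virtual machine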

[1] apt-get install -y wget

[2] wget http://mirrors.sonic.net/apache/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

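[3] tar xvzf hadoop-2.6.0.tar.gz

Note: the configuration below uses hadoop-2.7.1 under /root/soft/hadoop/hadoop-2.7.1; adjust the version and paths to match the release you actually downloaded and where you extracted it.

Copy from the host into the virtual machine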

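If Hadoop is already downloaded on the host, docker can copy it into the target virtual machine.

Command: docker cp SRC_PATH CONTAINER:DEST_PATH

For example: docker cp hadoop/ 16859e097ec7:/tmp/hadoop

Environment configuration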

.bashrc

export JAVA_HOME=/usr/lib/jvm/java-8-oracle

export HADOOP_HOME=/root/soft/hadoop/hadoop-2.7.1

export HADOOP_CONFIG_HOME=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-8-oracle

core-site.xml

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/root/soft/hadoop/hadoop-2.7.1/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master:9000</value>
  </property>
</configuration>

hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
    <final>true</final>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/root/soft/hadoop/hadoop-2.7.1/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/root/soft/hadoop/hadoop-2.7.1/datanode</value>
  </property>
</configuration>

mapred-site.xml

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>master:9001</value>
  </property>
</configuration>
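If you prefer not to edit the three files by hand, they can be generated by a script. The following is a minimal sketch, not the author's procedure: it writes the same values shown above into $HADOOP_CONFIG_HOME (the variable from .bashrc, falling back to ./hadoop-conf so it can be tried outside the container); `prop` and `write_conf` are hypothetical helper names. It also writes a slaves file, which this post does not configure: in Hadoop 2.x, start-dfs.sh and start-yarn.sh read etc/hadoop/slaves to decide where to start DataNodes and NodeManagers, and the stock file contains only localhost, which is consistent with the "localhost: starting datanode" line in the startup log below. Two hedged notes on the values: fs.default.name is the deprecated Hadoop 1 name of fs.defaultFS (still accepted in 2.x), and mapred.job.tracker is a JobTracker (MRv1) setting; under YARN, MapReduce jobs are normally routed with mapreduce.framework.name=yarn instead.

```shell
#!/usr/bin/env bash
# Minimal sketch: regenerate core-site.xml, hdfs-site.xml and mapred-site.xml
# with the values used in this post, plus a slaves file (an addition).
# Assumption: HADOOP_CONFIG_HOME points at etc/hadoop, as set in .bashrc;
# otherwise the files are written to ./hadoop-conf.
set -euo pipefail

CONF_DIR="${HADOOP_CONFIG_HOME:-./hadoop-conf}"
HADOOP_DIR="${HADOOP_HOME:-/root/soft/hadoop/hadoop-2.7.1}"

# Print one <property> element; pass "final" as the third argument to add
# <final>true</final>, as the post does for dfs.replication.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n' "$1" "$2"
  if [ "${3:-}" = final ]; then
    printf '    <final>true</final>\n'
  fi
  printf '  </property>\n'
}

# Wrap the properties read from stdin in a <configuration> root element
# and write the result to $CONF_DIR/<file>.
write_conf() {
  {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    cat
    printf '</configuration>\n'
  } > "$CONF_DIR/$1"
}

mkdir -p "$CONF_DIR"

{
  prop hadoop.tmp.dir "$HADOOP_DIR/tmp"
  prop fs.default.name hdfs://master:9000
} | write_conf core-site.xml

{
  prop dfs.replication 2 final
  prop dfs.namenode.name.dir "$HADOOP_DIR/namenode"
  prop dfs.datanode.data.dir "$HADOOP_DIR/datanode"
} | write_conf hdfs-site.xml

prop mapred.job.tracker master:9001 | write_conf mapred-site.xml

# Hosts that should run DataNode/NodeManager (Hadoop 2.x reads etc/hadoop/slaves).
printf '%s\n' slave1 slave2 > "$CONF_DIR/slaves"
```

Inside the container, run it after .bashrc has set HADOOP_CONFIG_HOME; existing files with these names are overwritten.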

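Installing SSH & Configuring Access Between Machines

Install SSH

Install: apt-get install ssh

Add this line to .bashrc so sshd starts whenever a shell opens: /usr/sbin/sshd

Start the sshd service: service ssh start

Configuration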

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

Commit the virtual machine

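docker commit -m 'hadoop install' CONTAINER_ID ubuntu:hadoop

Here CONTAINER_ID is the ID of the container you have been configuring (see docker ps), and ubuntu:hadoop is the name:tag of the new image.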

You can then see the new image with:

docker images

Starting the Hadoop Cluster

Start master, slave1, slave2

docker run -ti -h master ubuntu:hadoop

docker run -ti -h slave1 ubuntu:hadoop

docker run -ti -h slave2 ubuntu:hadoop

Configure hosts

vi /etc/hosts

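Add the following on each machine (these are the addresses Docker's default bridge network assigned here; check yours with docker inspect if they differ):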

172.17.0.2 master

172.17.0.3 slave1

172.17.0.4 slave2

Configure SSH keys

See also: http://www.cnblogs.com/ivan0626/p/4144277.html

On master, slave1 and slave2:

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Then append the contents of slave1's and slave2's authorized_keys to master's authorized_keys, and sync the merged authorized_keys file to master, slave1 and slave2 (run on master):

scp /root/.ssh/authorized_keys slave1:/root/.ssh/

scp /root/.ssh/authorized_keys slave2:/root/.ssh/

NOTICE: the following error may occur:

ssh: connect to host slave1 port 22: Connection refused

lost connection

It means the ssh service isn't running on the target machine; start it with service ssh start.

Start Hadoop

start-all.sh

Note: you may see the error "Error: JAVA_HOME is not set and could not be found." This happens when JAVA_HOME is not configured in hadoop-env.sh; once it is set there, the error goes away.
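The edit can also be scripted. This is a minimal sketch, assuming GNU sed (as in the Ubuntu container), the /usr/lib/jvm/java-8-oracle path from earlier, and HADOOP_CONFIG_HOME from .bashrc (falling back to ./hadoop-conf); JAVA_DIR and set_java_home are names introduced here. The .bashrc export alone is likely not enough because start-all.sh launches each daemon through ssh in a non-interactive shell, where Ubuntu's default .bashrc returns early, while hadoop-env.sh is sourced by the daemon scripts themselves.

```shell
#!/usr/bin/env bash
# Minimal sketch: pin JAVA_HOME in hadoop-env.sh.
# Assumptions: GNU sed, the JDK path used earlier in this post, and
# HADOOP_CONFIG_HOME from .bashrc (falls back to ./hadoop-conf).
set -euo pipefail

JAVA_DIR="${JAVA_DIR:-/usr/lib/jvm/java-8-oracle}"
ENV_FILE="${HADOOP_CONFIG_HOME:-./hadoop-conf}/hadoop-env.sh"

# Replace an existing "export JAVA_HOME=..." line (the stock file ships with
# "export JAVA_HOME=${JAVA_HOME}"), or append one if the file has none.
set_java_home() {
  local file="$1" jdk="$2"
  mkdir -p "$(dirname "$file")"
  touch "$file"
  if grep -q '^export JAVA_HOME=' "$file"; then
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
  else
    printf 'export JAVA_HOME=%s\n' "$jdk" >> "$file"
  fi
}

set_java_home "$ENV_FILE" "$JAVA_DIR"

# Warn, without failing, if no java binary exists at that path.
if [ ! -x "$JAVA_DIR/bin/java" ]; then
  echo "warning: $JAVA_DIR/bin/java not found; set JAVA_DIR to your JDK" >&2
fi
```

Running it twice leaves a single JAVA_HOME line, so it is safe to include in a setup script that may be re-run.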

CMD:

root@master:~/soft/hadoop/hadoop-2.7.1# start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /root/soft/hadoop/hadoop-2.7.1/logs/hadoop-root-namenode-master.out

localhost: starting datanode, logging to /root/soft/hadoop/hadoop-2.7.1/logs/hadoop-root-datanode-master.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /root/soft/hadoop/hadoop-2.7.1/logs/hadoop-root-secondarynamenode-master.out

starting yarn daemons

resourcemanager running as process 443. Stop it first.

localhost: starting nodemanager, logging to /root/soft/hadoop/hadoop-2.7.1/logs/yarn-root-nodemanager-master.out

root@master:~/soft/hadoop/hadoop-2.7.1# jps

1367 NodeManager

1465 Jps

987 DataNode

443 ResourceManager

1149 SecondaryNameNode

862 NameNode

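root@master:~/soft/hadoop/hadoop-2.7.1#

Drawback: with the image saved this way, the contents of /etc/hosts are lost whenever a virtual machine is restarted, so they must be configured again on every start. A solution will follow in a later post.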
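One commonly used way around the /etc/hosts problem, sketched here as an assumption rather than the author's solution, is to start the containers on a user-defined Docker network: Docker's embedded DNS then resolves containers by name, so master, slave1 and slave2 can find each other without hand-editing /etc/hosts after every restart. This is an untested sketch; the network name `hadoop`, the 172.18.0.0/16 subnet, the fixed addresses and the `run`/`start_cluster` helpers are illustrative choices introduced here. `--ip` is only accepted on user-defined networks created with an explicit subnet. With DRY_RUN=1 (the default) the script only prints the commands.

```shell
#!/usr/bin/env bash
# Untested sketch: put master/slave1/slave2 on a user-defined bridge network so
# Docker's embedded DNS resolves them by container name (no /etc/hosts edits).
# Assumed values: network "hadoop", subnet 172.18.0.0/16, image ubuntu:hadoop.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
NET=hadoop
SUBNET_PREFIX=172.18.0
IMAGE=ubuntu:hadoop

run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

start_cluster() {
  run docker network create --subnet "$SUBNET_PREFIX.0/16" "$NET"
  local i=2 host
  for host in master slave1 slave2; do
    # --name is what the embedded DNS resolves; -h sets the shell hostname;
    # --ip is only accepted on a user-defined network with an explicit subnet.
    run docker run -dit --net "$NET" --ip "$SUBNET_PREFIX.$i" --name "$host" -h "$host" "$IMAGE"
    i=$((i + 1))
  done
}

start_cluster
```

With DRY_RUN=0, attach to a node with docker exec -it master bash. sshd still has to be started in each container (service ssh start), as described above.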

References

[1] https://my.oschina.net/u/1866821/blog/483243

[2] http://jingyan.baidu.com/article/9c69d48fb9fd7b13c8024e6b.html

[3] http://justcoding.iteye.com/blog/2184871

[4] https://www.docker.com/products/docker#/mac
