Python Web Scraping Study Notes 2: urllib

urllib

A library that sends requests the way a browser does. It ships with Python.

Python 2: urllib, urllib2

Python 3: urllib.request, urllib.parse

1 urllib.request

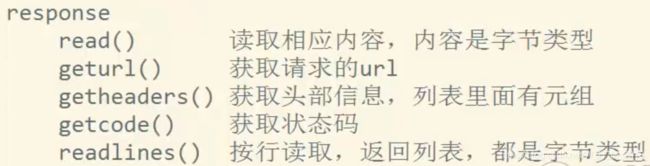

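response: the response object that urlopen returns

urlopen(url): send a request to url and get the response

urlretrieve(url, image_path): download url and save it to image_path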
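Both functions can be tried without a network connection by pointing them at a data: URL, which urllib.request handles out of the box. This is a minimal sketch: the data: URL stands in for a real web page, and the temporary file name hello.txt is a hypothetical download path.

```python
import os
import tempfile
import urllib.request

# A data: URL carries its content inline, so no network access is needed
url = 'data:text/plain;charset=utf-8,hello%20urllib'

# urlopen returns a response object; read() gives the body as bytes
response = urllib.request.urlopen(url)
body = response.read()
print(body.decode())  # hello urllib

# urlretrieve saves the same content directly to a file (hypothetical path)
path = os.path.join(tempfile.mkdtemp(), 'hello.txt')
filename, headers = urllib.request.urlretrieve(url, path)
with open(filename, 'rb') as fp:
    saved = fp.read()
print(saved)  # b'hello urllib'
```

urlretrieve returns a (filename, headers) tuple, so the saved path can be reused directly.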

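```python
import urllib.request

url = 'http://www.baidu.com'
# A complete URL looks like:
# http://www.baidu.com:80/index.html?name=wanghao&password=Wanghao123#lala
# The part after # is the anchor (fragment)

response = urllib.request.urlopen(url=url)
print(response)  # the response object

# read() returns bytes and can only be consumed once, so keep the result
content = response.read()
print(content.decode())  # read the response body as text

with open('baidu.html', 'w', encoding='utf8') as fp:
    fp.write(content.decode())  # write a text file

with open('baidu.html', 'wb') as fp:  # binary mode takes no encoding argument
    fp.write(content)  # write a binary file

print(response.geturl())            # the URL that was actually retrieved
print(dict(response.getheaders()))  # headers: a list of (name, value) tuples, turned into a dict
print(response.getcode())           # the HTTP status code
print(response.readlines())         # line by line as a list of bytes; [] here, since read() already consumed the body
```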
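Because the response body is a stream, read() can only consume it once; a later read() or readlines() gets nothing back. A data: URL (used here only so the demo runs offline) shows this:

```python
import urllib.request

# A data: URL stands in for a web page so the demo runs offline
url = 'data:text/plain,line1%0Aline2'

response = urllib.request.urlopen(url)
first = response.read()          # the whole body
second = response.read()         # nothing left to read
leftover = response.readlines()  # also empty now
print(first)     # b'line1\nline2'
print(second)    # b''
print(leftover)  # []

# A fresh response can be read line by line instead
lines = urllib.request.urlopen(url).readlines()
print(lines)     # [b'line1\n', b'line2']
```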

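```python
import urllib.request

# Download an image
image_url = 'https://timgsa.baidu.com/timg?image&quality=80&size=b9999_10000&sec=1556383782&di=41bf15fb0adf02f7fe6c9428501a0d33&imgtype=jpg&er=1&src=http%3A%2F%2Fimages0.gbicom.cn%2Fuploads%2Fueditor%2F20150928%2F1443407409889192.png'

# Method 1: read the bytes and write them to a file yourself
response = urllib.request.urlopen(image_url)
# Images must be written to a local file in binary mode
with open('google.jpg', 'wb') as fp:
    fp.write(response.read())

# Method 2: let urlretrieve save the file directly
urllib.request.urlretrieve(image_url, 'google.jpg')
```

Strings and binary strings (bytes)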

encode(): string → bytes, UTF-8 by default

decode(): bytes → string, UTF-8 by default
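A quick round trip between str and bytes, using the name from the examples in these notes. Each Chinese character takes three bytes in UTF-8:

```python
text = '王浩'

data = text.encode()  # str -> bytes, UTF-8 by default
print(data)           # b'\xe7\x8e\x8b\xe6\xb5\xa9'

back = data.decode()  # bytes -> str, UTF-8 by default
print(back)           # 王浩
```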

2 urllib.parse

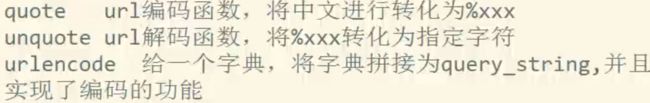

quote

unquote

urlencode

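```python
import urllib.parse

# An already-encoded URL: the %3A%2F%2F inside src is an encoded '://'
image_url = 'https://timgsa.baidu.com/timg?image&quality=80&size=b9999_10000&sec=1556384117&di=db75e9c18aad35914cfe72641def8ce5&imgtype=jpg&er=1&src=http%3A%2F%2Fupload.semidata.info%2Fnew.eefocus.com%2Farticle%2Fimage%2F2016%2F11%2F14%2F582912ba660c4-thumb.jpg'

# A URL may only contain certain characters (letters, digits, underscore, ...); everything else must be encoded
url = 'http://www.baidu.com/index.html?name=王浩&pwd=123456'

ret = urllib.parse.quote(url)  # URL-encoding function, produces %xx escapes
# By default quote() leaves only '/' unescaped, so ':', '?', '=' and '&' are encoded too
unquoted = urllib.parse.unquote(ret)  # URL-decoding function

print(ret)
print(unquoted)
```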
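In practice it is usually only the parameter values that need encoding, not the whole URL. A small sketch using the same sample values; the safe argument tells quote() which delimiter characters to leave alone:

```python
from urllib.parse import quote, unquote

# Encode just the non-ASCII value rather than the whole URL
encoded = quote('王浩')
print(encoded)  # %E7%8E%8B%E6%B5%A9
decoded = unquote(encoded)
print(decoded)  # 王浩

# Or encode a whole URL but keep its delimiters, via the safe parameter
full = quote('http://www.baidu.com/index.html?name=王浩&pwd=123456', safe=':/?=&')
print(full)  # http://www.baidu.com/index.html?name=%E7%8E%8B%E6%B5%A9&pwd=123456
```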

```python
import urllib.parse

url = 'http://www.baidu.com/index.html'

# Parameters: name, age, sex, height, weight
name = 'wanghao'
age = 18
sex = 'man'
height = '182'
weight = 145

data = {
    'name': name,
    'age': age,
    'sex': sex,
    'height': height,
    'weight': weight,
}

# Method 1: join the pairs by hand (no encoding is applied)
lt = []
for k, v in data.items():
    lt.append(k + '=' + str(v))
query_string = '&'.join(lt)

# Method 2: urlencode joins AND encodes in one step
query_string = urllib.parse.urlencode(data)

url = url + '?' + query_string
print(url)
```
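The difference between the two methods shows up as soon as a value contains characters that are not allowed in a URL. A small sketch with sample values (the city key and its value are hypothetical):

```python
from urllib.parse import urlencode

data = {'name': '王浩', 'city': 'New York'}

# Method 1 style: joining by hand leaves unsafe characters as they are
manual = '&'.join(k + '=' + str(v) for k, v in data.items())
# Method 2 style: urlencode also percent-encodes (spaces become '+')
encoded = urlencode(data)

print(manual)   # name=王浩&city=New York
print(encoded)  # name=%E7%8E%8B%E6%B5%A9&city=New+York
```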

3 GET requests

```python
import urllib.request
import urllib.parse

word = input('Enter what you want to search for: ')
url = 'http://www.baidu.com/s?'
data = {
    'ie': 'utf-8',
    'wd': word,
}
query_string = urllib.parse.urlencode(data)
url += query_string

response = urllib.request.urlopen(url)
filename = word + '.html'
with open(filename, 'wb') as fp:
    fp.write(response.read())
```

4 Building request headers (the first step in getting past anti-scraping checks)

Disguise your own UA (User-Agent), that is, the browser identity

Build a request object: urllib.request.Request()

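```python
import urllib.request
import urllib.parse
import ssl

# Skip certificate verification (acceptable only for learning and testing)
ssl._create_default_https_context = ssl._create_unverified_context

url = 'http://www.baidu.com/'  # trailing / is the root path; urllib also requests / when it is omitted

# Without custom headers, the UA sent is Python-urllib/3.x
response = urllib.request.urlopen(url)
print(response.read().decode())

# Disguise the headers
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
}

# Build the request object
request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
print(response.read().decode())
```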
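Both the default UA and the disguised one can be inspected without sending anything. A sketch: build_opener() shows the headers urllib adds by default, and the Request object shows the headers it will carry. Note that Request normalizes header names with str.capitalize(), so 'User-Agent' is stored as 'User-agent':

```python
import urllib.request

# Default opener headers: the UA urlopen sends when none is given
default_headers = dict(urllib.request.build_opener().addheaders)
print(default_headers['User-agent'])  # e.g. Python-urllib/3.x

UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36')
request = urllib.request.Request('http://www.baidu.com/', headers={'User-Agent': UA})

# Header names are capitalized on storage, hence the 'User-agent' lookup
print(request.get_header('User-agent') == UA)  # True
print(request.header_items())
```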

5 POST
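A POST request carries the form in the request body rather than in the URL, and urllib wants that body as bytes. The difference can be seen offline by building Request objects without sending them; the URL is the Baidu Translate suggestion endpoint used in this section and the word 'baby' is just a sample value:

```python
import urllib.parse
import urllib.request

post_url = 'http://fanyi.baidu.com/sug'
form_data = urllib.parse.urlencode({'kw': 'baby'}).encode()  # str -> bytes

get_request = urllib.request.Request(post_url)                   # no data attached
post_request = urllib.request.Request(post_url, data=form_data)  # data in the body

print(get_request.get_method())   # GET
print(post_request.get_method())  # POST
print(post_request.data)          # b'kw=baby'
```

Passing data to urlopen(request, data=...) has the same effect as attaching it to the Request.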

Baidu Translate

```python
import urllib.request
import urllib.parse

post_url = 'http://fanyi.baidu.com/sug'
word = input('Enter the English word to look up: ')
form_data = {
    'kw': word,
}

# Sending the request
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
}

# Build the request object
request = urllib.request.Request(url=post_url, headers=headers)

# Process the POST form data: urlencode it into a string, then encode it into bytes
form_data = urllib.parse.urlencode(form_data).encode()

# Send the request
response = urllib.request.urlopen(request, data=form_data)
print(response.read().decode())
```

Full translation through the v2transapi endpoint (the form fields, headers, and cookie were copied from the browser):

```python
import urllib.request
import urllib.parse

post_url = 'https://fanyi.baidu.com/v2transapi'

word = 'baby'
form_data = {
    'from': 'en',
    'to': 'zh',
    'query': word,
    'transtype': 'realtime',
    'simple_means_flag': '3',
    # sign and token were copied from the browser request for 'baby'
    'sign': '814534.560887',
    'token': '5b981af8814dda0b7ad75552953e358c',
}

# Sending the request
headers = {
    'Host': 'fanyi.baidu.com',
    'Connection': 'keep-alive',
    # 'Content-Length': '122',  # left out: urllib computes it from the actual form data
    'Accept': '*/*',
    'Origin': 'https://fanyi.baidu.com',
    'X-Requested-With': 'XMLHttpRequest',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
    'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
    'Referer': 'https://fanyi.baidu.com/',
    # 'Accept-Encoding': 'gzip, deflate, br',  # not sent, so the response is not compressed
    'Accept-Language': 'en-US,en;q=0.9,zh-CN;q=0.8,zh;q=0.7',
    'Cookie': 'BAIDUID=D932BA22DB1B366D8D7EDBC9842A576A:FG=1; BIDUPSID=D932BA22DB1B366D8D7EDBC9842A576A; PSTM=1528330460; MCITY=-%3A; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; BDUSS=53MTN2QU1vNVFNblNHcjIxaWEtQVdnblVOU3NiRG5QM3I0cW13WFlpdDNTczFjRVFBQUFBJCQAAAAAAAAAAAEAAACeqk00V0jE5saaAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAHe9pVx3vaVcdm; DOUBLE_LANG_SWITCH=0; to_lang_often=%5B%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%2C%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%5D; BDORZ=FFFB88E999055A3F8A630C64834BD6D0; BDSFRCVID=1wPOJeC627Dck6r9xsguhIABO2KKLNRTH6aIrHsxzvaHMw0kcAsnEG0PtU8g0Ku-eGcEogKK0eOTHk_F_2uxOjjg8UtVJeC6EG0P3J; H_BDCLCKID_SF=tJItVIKKJIvMqRjnMPoKq4u_KxrXb-uXKKOLVKj5Lp7keq8CD6JU-PnBKGrOb-bt2g5C5qA-Whvd8xo2y5jHyJDnKp7E-to7B6kjBbOp-brpsIJM5h_WbT8U5ec-0-J4aKviaKJHBMb1jU7DBT5h2M4qMxtOLR3pWDTm_q5TtUt5OCcnK4-XjjjyjGrP; BDRCVFR[pNjdDcNFITf]=mk3SLVN4HKm; delPer=0; PSINO=1; H_PS_PSSID=1462_21103_28767_28724_28557_28834_28584; BDRCVFR[dG2JNJb_ajR]=mk3SLVN4HKm; BDRCVFR[-pGxjrCMryR]=mk3SLVN4HKm; locale=zh; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1554455559,1554629794,1555166960,1555781847; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1555781847; from_lang_often=%5B%7B%22value%22%3A%22de%22%2C%22text%22%3A%22%u5FB7%u8BED%22%7D%2C%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%2C%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%5D',
}

# Build the request object
request = urllib.request.Request(url=post_url, headers=headers)

# Process the POST form data: urlencode it into a string, then encode it into bytes
form_data = urllib.parse.urlencode(form_data).encode()

# Send the request
response = urllib.request.urlopen(request, form_data)
print(response.read().decode())
```

6 Ajax

Ajax endpoints return JSON data or status information.
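Since the body is JSON, it can be turned into Python lists and dicts with json.loads. A minimal sketch using a made-up sample payload shaped like a list of movies (not real Douban output; the title and score keys are assumptions):

```python
import json

# Hypothetical sample body shaped like a JSON list of movies (not real API output)
body = b'[{"title": "Movie A", "score": "9.1"}, {"title": "Movie B", "score": "8.7"}]'

# decode() turns the bytes into str; json.loads turns the JSON text into Python objects
movies = json.loads(body.decode())
for movie in movies:
    print(movie['title'], movie['score'])
```

With a real endpoint, body would simply be response.read().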

6.1 GET: Douban movie ranking data

```python
import urllib.request
import urllib.parse

url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'

page = int(input('Which page of data do you want? '))
# Page 1 is start=0, limit=20
number = 20

# Build the GET parameters
data = {
    'start': (page - 1) * number,
    'limit': number,
}
# Turn the dict into a query string
query_string = urllib.parse.urlencode(data)
# Update the URL
url += query_string

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
}
# Build the request object
request = urllib.request.Request(url=url, headers=headers)
# Send the request and get the response
response = urllib.request.urlopen(request)
# Print the data
print(response.read().decode())
```

6.2 POST

KFC: http://www.kfc.com.cn/kfccda/storelist/index.aspx

```python
import urllib.request
import urllib.parse

post_url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'

city = input('Enter the city to search: ')
page = input('Enter the page number: ')
size = input('Enter how many records you want: ')

formdata = {
    'cname': '',
    'pid': '',
    'keyword': city,
    'pageIndex': page,
    'pageSize': size,
}
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
}

request = urllib.request.Request(url=post_url, headers=headers)
formdata = urllib.parse.urlencode(formdata).encode()
response = urllib.request.urlopen(request, data=formdata)
print(response.read().decode())
```

6.3 Complex GET

Baidu Tieba

```python
import urllib.request
import urllib.parse
import os

# Ends with '&' so the urlencoded kw and pn parameters can be appended directly
url = 'http://tieba.baidu.com/f?ie=utf-8&'
# page 1: pn == 0
# page 2: pn == 50
# page n: pn == (n-1)*50

# Goal: read a forum name plus a start and end page, create a folder named after the forum
# in the current directory, and save each page's HTML in it as <forum name>_<page>.html
ba_name = input('Enter the name of the forum to crawl: ')
start_page = int(input('Enter the start page: '))
end_page = int(input('Enter the end page: '))

# Create the folder
if not os.path.exists(ba_name):
    os.mkdir(ba_name)

# Loop over the pages
for page in range(start_page, end_page + 1):
    data = {
        'kw': ba_name,
        'pn': (page - 1) * 50,
    }
    data = urllib.parse.urlencode(data)
    url_t = url + data
    print(url_t)
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
    }
    request = urllib.request.Request(url=url_t, headers=headers)
    print('Page %s: download started......' % page)
    response = urllib.request.urlopen(request)
    filename = ba_name + '_' + str(page) + '.html'
    filepath = os.path.join(ba_name, filename)
    # Write to the file
    with open(filepath, 'wb') as fp:
        fp.write(response.read())
    print('Page %s: download finished......' % page)
```
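The page-to-pn rule can be checked on its own without touching the network. This sketch wraps it in a hypothetical helper, page_urls, and assumes a base URL that ends in '&' so the encoded parameters can be appended directly:

```python
from urllib.parse import urlencode

def page_urls(base, ba_name, start_page, end_page, per_page=50):
    """Hypothetical helper: build one list-page URL per page, with pn = (page - 1) * per_page."""
    urls = []
    for page in range(start_page, end_page + 1):
        query = urlencode({'kw': ba_name, 'pn': (page - 1) * per_page})
        urls.append(base + query)
    return urls

urls = page_urls('http://tieba.baidu.com/f?ie=utf-8&', 'python', 1, 3)
for u in urls:
    print(u)
```

Keeping the URL building in a pure function like this makes the paging logic easy to test before any requests are sent.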

7 URLError / HTTPError

urllib.error

NameError

TypeError

FileNotFoundError

Exception handling uses the try-except structure.

7.1 URLError is raised when:

1 there is no network connection

2 the connection to the server fails

3 the specified server cannot be found

```python
import urllib.request
import urllib.parse
import urllib.error

url = 'http://www.maodan.com/'
try:
    response = urllib.request.urlopen(url)
    print(response)
# Narrower alternative: except urllib.error.URLError as e:
except Exception as e:
    print(e)
```

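7.2 HTTPError

HTTPError is a subclass of URLError.

When catching exceptions, put the except clause for the subclass before the one for the parent class.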
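The ordering rule can be seen by raising hand-built exceptions through a small hypothetical helper, describe; the URL and reason strings are placeholders, not real failures:

```python
import urllib.error

def describe(exc):
    """Hypothetical helper: raise exc and report which except clause caught it."""
    try:
        raise exc
    except urllib.error.HTTPError as e:  # subclass first
        return 'HTTPError %d' % e.code
    except urllib.error.URLError as e:   # then its parent class
        return 'URLError: %s' % e.reason

is_subclass = issubclass(urllib.error.HTTPError, urllib.error.URLError)
print(is_subclass)  # True

# Hand-built exceptions stand in for real failures (placeholder URL and reason)
http_result = describe(urllib.error.HTTPError('http://example.com/', 404, 'Not Found', None, None))
url_result = describe(urllib.error.URLError('no network'))
print(http_result)  # HTTPError 404
print(url_result)   # URLError: no network
```

If the two except clauses were swapped, the URLError clause would also catch the HTTPError, and the status code would never be reported.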