Deploying Logstash on a Docker cluster to import data into ES

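- I. Create the yml configuration file
- II. Create the pipeline configuration file logstash.conf
- III. Write the docker-compose file
- IV. Check the logs after startup
- V. Pitfalls
  - The file specified in input cannot be found
  - The time field imported into ES is not of date type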

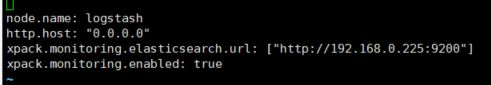

I. Create the yml configuration file

On the master server of the Docker cluster, create the folder /ES/config, create logstash.yml in it (e.g. with vi) and add the following configuration:

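```yaml
# Bind Logstash's HTTP API to all interfaces inside the container
http.host: "0.0.0.0"
# Replace with the URL(s) of your ES cluster
xpack.monitoring.elasticsearch.url: ["ES cluster URL"]
xpack.monitoring.enabled: true # collect monitoring data
```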

II. Create the pipeline configuration file logstash.conf

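On the master server of the Docker cluster, create the folder /ES/pipeline, create logstash.conf in it (e.g. with vi) and write the pipeline to fit your own needs. Mine is: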

```
input {
  file {
    # Must be an absolute path, as seen from inside the container
    path => "/usr/share/logstash/data/nfs/basedata_resources_area.csv"
    # Do not persist the read position, so the file is read again on every restart
    sincedb_path => "/dev/null"
    start_position => "beginning"
  }
}

filter {
  csv {
    separator => ","
    columns => ["id","time","areaid","areaname","pid","areatype","remark"]
  }
  mutate {
    split => {"time" => "."}
    # [time][0] is the first element of the split result
    add_field => ["datetime","%{[time][0]}"]
    remove_field => ["message","path","host","time","remark"]
  }
  date {
    match => ["datetime","dd/MM/yyyy HH:mm:ss","ISO8601"]
    target => "datetime"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.0.225:9200"]
    index => "basedata_resources_area"
    document_type => "_doc"
  }
}
```
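To make the filter chain easier to reason about, here is a rough Python sketch of what a single CSV line turns into. It is not what Logstash runs: it assumes the file input adds `message`, `path` and `host` (which is why they appear in `remove_field`), uses a made-up sample row, and leaves out the date filter as well as the `@timestamp`/`@version` fields Logstash adds on its own. `to_event` is a hypothetical helper.

```python
import csv
import io

# Column names, copied from the csv filter's `columns` option.
COLUMNS = ["id", "time", "areaid", "areaname", "pid", "areatype", "remark"]
# Fields dropped by mutate's remove_field.
REMOVED = {"message", "path", "host", "time", "remark"}


def to_event(line: str, path: str, host: str) -> dict:
    """Approximate file input + csv + mutate for one line (date filter omitted)."""
    # The file input puts the raw line in `message` and records where it came from.
    event = {"message": line, "path": path, "host": host}
    # csv filter: split on "," (respecting quotes) and name the columns.
    values = next(csv.reader(io.StringIO(line), delimiter=","))
    event.update(zip(COLUMNS, values))
    # mutate split + add_field: keep the part of `time` before the first ".".
    event["datetime"] = event["time"].split(".")[0]
    # mutate remove_field.
    return {k: v for k, v in event.items() if k not in REMOVED}


# Hypothetical sample row; only the time value comes from this article.
row = "1,3/6/2020 10:11:12.13+08:08,110000,Beijing,0,1,sample"
print(to_event(row, "/usr/share/logstash/data/nfs/basedata_resources_area.csv", "logstash"))
```

The printed dict contains only `id`, `areaid`, `areaname`, `pid`, `areatype` and `datetime`, which is roughly the document body that reaches the `basedata_resources_area` index before `datetime` is turned into a date.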

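I receive CSV files, clean them with filter plugins, and then import them into ES. The most important thing to watch is that the file path in input must be an absolute path! Also, because this runs on a Docker cluster, the node a service is deployed on is chosen by the scheduler and is effectively random. If you put the file to import in a folder on the master server but the service gets scheduled onto node1, the path is wrong, because node1 does not have that file. So map the path with a volume in docker-compose, and point the input path at the mapped path inside the container.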
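The path mapping boils down to a lookup from host paths to container paths. The Python sketch below only illustrates that idea: the `VOLUMES` table is copied from the docker-compose file in the next section, `to_container_path` is a hypothetical helper, and it assumes the host path actually exists on whichever node the task lands on.

```python
from pathlib import PurePosixPath
from typing import Dict

# Bind mounts copied from the docker-compose file: host path -> container path.
VOLUMES: Dict[str, str] = {
    "/ES/config/logstash.yml": "/usr/share/logstash/config/logstash.yml",
    "/ES/pipeline/logstash.conf": "/usr/share/logstash/pipeline/logstash.conf",
    "/data/nfs": "/usr/share/logstash/data/nfs",
}


def to_container_path(host_path: str, volumes: Dict[str, str] = VOLUMES) -> str:
    """Hypothetical helper: translate a host path into the path seen inside the container."""
    host = PurePosixPath(host_path)
    # Check the most specific (longest) mount source first.
    for source in sorted(volumes, key=len, reverse=True):
        source_path = PurePosixPath(source)
        # Compare whole path components, so /data/nfs2 would not match /data/nfs.
        if host == source_path or source_path in host.parents:
            relative = host.relative_to(source_path)
            return str(PurePosixPath(volumes[source]) / relative)
    raise ValueError(f"{host_path} is not under any bind mount")


print(to_container_path("/data/nfs/basedata_resources_area.csv"))
# /usr/share/logstash/data/nfs/basedata_resources_area.csv
```

The result is exactly the `path` used in the file input above. Comparing path components instead of string prefixes is a deliberate choice: a plain `startswith` check would wrongly treat `/data/nfs2/...` as being under `/data/nfs`.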

III. Write the docker-compose file

In Portainer, open Stacks and click Add stack. The docker-compose file is as follows:

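```yaml
version: '3.6'
services:
  logstash:
    image: docker.elastic.co/logstash/logstash:6.4.2
    hostname: logstash
    # Ignored when the file is deployed as a swarm stack
    container_name: logstash
    environment:
      # No quotes around the value: in list form they would become part of it
      - TZ=Asia/Shanghai
      # Logstash reads its JVM options from LS_JAVA_OPTS (ES_JAVA_OPTS is for Elasticsearch)
      - "LS_JAVA_OPTS=-Xms512m -Xmx512m"
    volumes:
      # Logstash's own settings file
      - /ES/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro
      # Pipeline configuration
      - /ES/pipeline/logstash.conf:/usr/share/logstash/pipeline/logstash.conf:ro
      # Folder holding the files to import
      - /data/nfs:/usr/share/logstash/data/nfs:ro
    ports:
      - "5044:5044"
    networks:
      - esnet
networks:
  esnet:
```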

Note that three paths are mounted here: Logstash's own settings file, the pipeline configuration file, and the folder that holds the files to import.

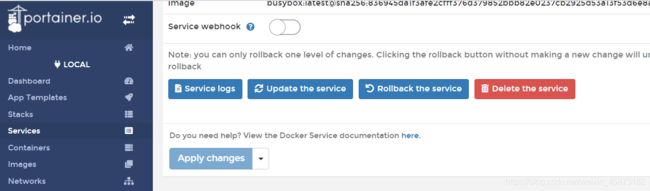

IV. Check the logs after startup

Click deploy and the service starts. On the Services page, find the logstash service that was started, open it, and check the logs under Service Logs.

V. Pitfalls

The file specified in input cannot be found

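Because the deployment runs on a Docker cluster, the node a service lands on is chosen by the scheduler. If you put the file to import in a folder on the master server but the service is scheduled onto node1, the path is wrong, because node1 does not have that file. So map the path with a volume in docker-compose, and point the file path in input at the mapped path inside the container.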
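One way to catch this before deploying is to check, on each node, that every host path the stack relies on actually exists. The sketch below is hypothetical: the path list is taken from this article's docker-compose file and pipeline, and `missing_paths` is an illustrative helper, not part of Docker or Logstash. On a swarm, `docker service ps <service name>` shows which node a task was placed on, which tells you where to look.

```python
import os

# Host paths used by this article's setup; adjust to your own.
REQUIRED_HOST_PATHS = [
    "/ES/config/logstash.yml",
    "/ES/pipeline/logstash.conf",
    "/data/nfs/basedata_resources_area.csv",
]


def missing_paths(paths):
    """Return the entries of `paths` that do not exist on the current node."""
    return [p for p in paths if not os.path.exists(p)]


if __name__ == "__main__":
    missing = missing_paths(REQUIRED_HOST_PATHS)
    if missing:
        for path in missing:
            print(f"missing on this node: {path}")
    else:
        print("all required paths exist on this node")
```

Run it on every node that may receive the task; any node that reports a missing path is one where the input file would not be found.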

The time field imported into ES is not of date type

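If the time field imported into ES is not of date type, it cannot be used as a time axis for analysis (for example as the time field of a Kibana index pattern), so it has to be converted.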

Suppose the original value of my time field looks like this: 3/6/2020 10:11:12.13+08:08

```
filter {
  # Split each CSV line into the named columns
  csv {
    separator => ","
    columns => ["id","time","areaid","areaname","pid","areatype","remark"]
  }
  # Split time on ".", add a datetime field holding the parseable part
  # (3/6/2020 10:11:12), then remove the original time field
  mutate {
    split => {"time" => "."}
    add_field => ["datetime","%{[time][0]}"]
    remove_field => ["message","path","host","time","remark"]
  }
  # Convert datetime to a date: match => [field name, time format 1, time format 2, ...]
  date {
    match => ["datetime","dd/MM/yyyy HH:mm:ss","ISO8601"]
    # Write the parsed value back to datetime; if unset, the default target is @timestamp
    target => "datetime"
  }
}
```
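The conversion can be traced step by step in Python. This is an approximation, not what Logstash executes: Joda's `dd/MM/yyyy HH:mm:ss` is mapped to strptime's `%d/%m/%Y %H:%M:%S`, `datetime.fromisoformat` stands in for Logstash's `ISO8601` matcher (it accepts fewer variants), and local times are assumed to be UTC+8 to match `TZ=Asia/Shanghai` from the docker-compose file. Like the date filter, it tries the formats in order and yields an instant in UTC.

```python
from datetime import datetime, timedelta, timezone

# Assumption: local times are UTC+8, matching TZ=Asia/Shanghai (no DST).
LOCAL_TZ = timezone(timedelta(hours=8))

# strptime counterpart of the Joda pattern "dd/MM/yyyy HH:mm:ss".
PATTERNS = ["%d/%m/%Y %H:%M:%S"]


def split_time(raw: str) -> str:
    """mutate split on "." followed by %{[time][0]}: keep the text before the first "."."""
    return raw.split(".")[0]


def parse_datetime(value: str) -> datetime:
    """Try each pattern in order, then ISO 8601, and return the instant in UTC."""
    for pattern in PATTERNS:
        try:
            local = datetime.strptime(value, pattern).replace(tzinfo=LOCAL_TZ)
            return local.astimezone(timezone.utc)
        except ValueError:
            continue  # this pattern did not match; try the next one
    # Stand-in for the ISO8601 matcher. Raises ValueError if nothing matches,
    # where Logstash would instead tag the event with _dateparsefailure.
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=LOCAL_TZ)
    return parsed.astimezone(timezone.utc)


datetime_field = split_time("3/6/2020 10:11:12.13+08:08")
print(datetime_field)                              # 3/6/2020 10:11:12
print(parse_datetime(datetime_field).isoformat())  # 2020-06-03T02:11:12+00:00
```

Note that with `dd/MM/yyyy` the value 3/6/2020 is read as 3 June 2020, not 6 March; if your source writes month first, the pattern has to be `MM/dd/yyyy` instead.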