点云项目学习一:PCL+opencv环境配置与Kinect获得深度信息

点云项目学习一:PCL+opencv环境配置与Kinect获得深度信息

- 环境配置

- 获得深度信息

- 运行结果

- 预留任务

目前第一篇博客,记录一下创新项目的学习进程。本项目基于Kinect2.0采集室内场景深度信息,在Pointnet框架中进行学习,实现识别的效果。

环境配置

我们的环境配置是:win10+vs2019+opencv+PCL1.10.1+Kinect v2

包含目录:

我直接把opencv+Kinect+PCL都贴上来了,读者请按照自己的地址。

C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\inc

D:\PCL 1.10.1\3rdParty\VTK\include\vtk-8.2

D:\PCL 1.10.1\3rdParty\Qhull\include

C:\Program Files\OpenNI2\Include

D:\PCL 1.10.1\3rdParty\FLANN\include

D:\PCL 1.10.1\3rdParty\Eigen\eigen3

D:\PCL 1.10.1\3rdParty\Boost\include\boost-1_72

D:\PCL 1.10.1\include\pcl-1.10

库目录:

C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\Lib\x64

D:\PCL 1.10.1\lib

D:\PCL 1.10.1\3rdParty\VTK\lib

D:\PCL 1.10.1\3rdParty\Qhull\lib

C:\Program Files\OpenNI2\Lib

D:\PCL 1.10.1\3rdParty\FLANN\lib

D:\PCL 1.10.1\3rdParty\Boost\lib

附加依赖项:

kinect20.lib

opencv_world412d.lib

PCL的就不列了,主要分得清release和debug就好了,太多了,贴一个其他博主写的教程:

vs2019+PCL配置

另:opencv的包含目录和库目录我按照一位暴力博主的方式永久配置了,每次新建项目就不需要重新导入了。

opencv永久配置

获得深度信息

我也是一边查csdn和github找各路博主搜来的代码,终于跑成功了几个:

#include "kinect.h"

#include 代码来源

下面开始分析代码:

Eigen是一个高层次的C ++库,有效支持线性代数,矩阵和矢量运算,数值分析及其相关的算法。我们主要用它的MatrixXd

如:Matrix3d:表示元素类型为double大小为3*3的矩阵变量

#include "kinect.h"

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

using namespace cv;

using namespace std;

头文件没啥好说的

// 获取Kinect设备,没有就返回failed

IKinectSensor* m_pKinectSensor;//定义类指针

HRESULT hr;

hr = GetDefaultKinectSensor(&m_pKinectSensor);//获取感应器

if (FAILED(hr))

{

return hr;

}

Kinect文档

IKinectSensor就是一个类,代表你的传感器设备,有一些库函数。

HRESULT为函数返回值(一种数据类型)

GetDefaultKinectSensor()这个函数用来获取预设的感应器,括号里填自己的预设名字就可以,然后就可以设置传感器的状态。

eg:

GetDefaultKinectSensor(&IKinectSensor); //获取感应器

IKinectSensor->Open(); //打开感应器

IMultiSourceFrameReader* m_pMultiFrameReader = NULL;

//表示多源框架的阅读器

if (m_pKinectSensor)

{

hr = m_pKinectSensor->Open();

if (SUCCEEDED(hr))

{

// 获取多数据源到读取器

hr = m_pKinectSensor->OpenMultiSourceFrameReader(

FrameSourceTypes::FrameSourceTypes_Color |

FrameSourceTypes::FrameSourceTypes_Infrared |

FrameSourceTypes::FrameSourceTypes_Depth,

&m_pMultiFrameReader);

//打开一个新的流阅读器,获取颜色,红外帧,深度

}

}

获取了一些数据

if (!m_pKinectSensor || FAILED(hr))//再来一次判断罢了

{

return E_FAIL;

}

// 三个数据帧及引用

IDepthFrameReference* m_pDepthFrameReference = NULL;//对实际深度框架的引用

IColorFrameReference* m_pColorFrameReference = NULL;

IInfraredFrameReference* m_pInfraredFrameReference = NULL;

IDepthFrame* m_pDepthFrame = NULL;//表示一个帧,其中每个像素代表该像素看到的最近物体的距离。

IInfraredFrame* m_pInfraredFrame = NULL;

IColorFrame* m_pColorFrame = NULL;

// 三个图片格式

Mat i_rgb(1080, 1920, CV_8UC4); //注意:这里必须为4通道的图,Kinect的数据只能以Bgra格式传出

Mat i_depth(424, 512, CV_8UC1);

Mat i_Infrared(424, 512, CV_8UC1);

Mat i_ir(424, 512, CV_16UC1);

Mat i_depthToRgb(424, 512, CV_8UC4);

//16位无符号整型

UINT16* depthData = new UINT16[424 * 512];

UINT16* InfraredData = new UINT16[424 * 512];

CameraSpacePoint* m_pCameraCoordinates = new CameraSpacePoint[512 * 424];//相机空间中的3D位置

ColorSpacePoint* m_pColorCoordinates = new ColorSpacePoint[512 * 424];//色彩空间中的2D位置

IMultiSourceFrame* m_pMultiFrame = nullptr;//表示KinectSensor中的多源框架

定义了一些东西。

while (true)

{

// 获取新的一个多源数据帧

hr = m_pMultiFrameReader->AcquireLatestFrame(&m_pMultiFrame);

if (FAILED(hr) || !m_pMultiFrame)

{

continue;

}

// 从多源数据帧中分离出彩色数据,深度数据和红外数据

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_ColorFrameReference(&m_pColorFrameReference);

if (SUCCEEDED(hr))

hr = m_pColorFrameReference->AcquireFrame(&m_pColorFrame);

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_DepthFrameReference(&m_pDepthFrameReference);

if (SUCCEEDED(hr))

hr = m_pDepthFrameReference->AcquireFrame(&m_pDepthFrame);

if (SUCCEEDED(hr))

hr = m_pMultiFrame->get_InfraredFrameReference(&m_pInfraredFrameReference);

if (SUCCEEDED(hr))

hr = m_pInfraredFrameReference->AcquireFrame(&m_pInfraredFrame);

// color拷贝到图片中

UINT nColorBufferSize = 1920 * 1080 * 4;

if (SUCCEEDED(hr))

hr = m_pColorFrame->CopyConvertedFrameDataToArray(nColorBufferSize, reinterpret_cast<BYTE*>(i_rgb.data), ColorImageFormat::ColorImageFormat_Bgra);

if (SUCCEEDED(hr))

{

hr = m_pInfraredFrame->CopyFrameDataToArray(424 * 512, InfraredData);

for (int i = 0; i < 512 * 424; i++)

{

// 0-255深度图,为了显示明显,只取深度数据的低8位

BYTE intensity = static_cast<BYTE>(InfraredData[i] % 256);

reinterpret_cast<BYTE*>(i_Infrared.data)[i] = intensity;

}

}

// depth拷贝到图片中

if (SUCCEEDED(hr))

{

hr = m_pDepthFrame->CopyFrameDataToArray(424 * 512, depthData);

for (int i = 0; i < 512 * 424; i++)

{

// 0-255深度图,为了显示明显,只取深度数据的低8位

BYTE intensity = static_cast<BYTE>(depthData[i] % 256);

reinterpret_cast<BYTE*>(i_depth.data)[i] = intensity;

}

ICoordinateMapper* m_pCoordinateMapper = NULL;

hr = m_pKinectSensor->get_CoordinateMapper(&m_pCoordinateMapper);

HRESULT hr = m_pCoordinateMapper->MapDepthFrameToColorSpace(512 * 424, depthData, 512 * 424, m_pColorCoordinates);

if (SUCCEEDED(hr))

{

for (int i = 0; i < 424 * 512; i++)

{

ColorSpacePoint p = m_pColorCoordinates[i];

if (p.X != -std::numeric_limits<float>::infinity() && p.Y != -std::numeric_limits<float>::infinity())

{

int colorX = static_cast<int>(p.X + 0.5f);

int colorY = static_cast<int>(p.Y + 0.5f);

if ((colorX >= 0 && colorX < 1920) && (colorY >= 0 && colorY < 1080))

{

i_depthToRgb.data[i * 4] = i_rgb.data[(colorY * 1920 + colorX) * 4];

i_depthToRgb.data[i * 4 + 1] = i_rgb.data[(colorY * 1920 + colorX) * 4 + 1];

i_depthToRgb.data[i * 4 + 2] = i_rgb.data[(colorY * 1920 + colorX) * 4 + 2];

i_depthToRgb.data[i * 4 + 3] = i_rgb.data[(colorY * 1920 + colorX) * 4 + 3];

}

}

}

}

imshow("rgb2depth", i_depthToRgb);

if (waitKey(1) == VK_ESCAPE)

break;

// 显示

/*imshow("rgb", i_rgb);

if (waitKey(1) == VK_ESCAPE)

break;*/

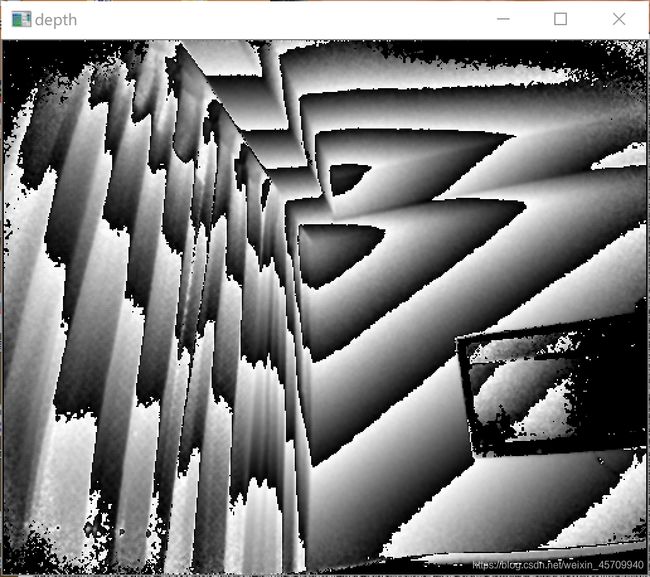

imshow("depth", i_depth);

if (waitKey(1) == VK_ESCAPE)

break;

imshow("Infrared", i_Infrared);

if (waitKey(1) == VK_ESCAPE)

break;

if (SUCCEEDED(hr))

{

HRESULT hr = m_pCoordinateMapper->MapDepthFrameToCameraSpace(512 * 424, depthData, 512 * 424, m_pCameraCoordinates);

}

if (SUCCEEDED(hr))

{

if (waitKey(100) == VK_SPACE)

{

SYSTEMTIME st;

GetLocalTime(&st);

char output_file[32];

char output_RGB[32];

char output_depth[32];

char output_ir[32];

sprintf_s(output_file, "%4d-%2d-%2d-%2d-%2d-%2d.txt", st.wYear, st.wMonth, st.wDay, st.wHour, st.wMinute, st.wSecond);

sprintf_s(output_RGB, "%4d-%2d-%2d-%2d-%2d-%2d-rgb.png", st.wYear, st.wMonth, st.wDay, st.wHour, st.wMinute, st.wSecond);

sprintf_s(output_depth, "%4d-%2d-%2d-%2d-%2d-%2d-depth.png", st.wYear, st.wMonth, st.wDay, st.wHour, st.wMinute, st.wSecond);

sprintf_s(output_ir, "%4d-%2d-%2d-%2d-%2d-%2d-ir.png", st.wYear, st.wMonth, st.wDay, st.wHour, st.wMinute, st.wSecond);

imwrite(output_RGB, i_rgb);

imwrite(output_depth, i_depth);

imwrite(output_ir, i_Infrared);

FILE* file = fopen(output_file, "w");

for (int i = 0; i < 512 * 424; i++)

{

CameraSpacePoint p = m_pCameraCoordinates[i];

if (p.X != -std::numeric_limits<float>::infinity() && p.Y != -std::numeric_limits<float>::infinity() && p.Z != -std::numeric_limits<float>::infinity())

{

float cameraX = static_cast<float>(p.X);

float cameraY = static_cast<float>(p.Y);

float cameraZ = static_cast<float>(p.Z);

if (file)

{

float b = i_depthToRgb.data[i * 4 + 0];

float g = i_depthToRgb.data[i * 4 + 1];

float r = i_depthToRgb.data[i * 4 + 2];

fprintf(file, "%.4f %.4f %.4f %.4f %.4f %.4f\n", cameraX, cameraY, cameraZ, r, g, b);

}

//cout << "x: " << cameraX << "y: " << cameraY << "z: " << cameraZ << endl;

//GLubyte *rgb = new GLubyte();

//rgb[2] = i_depthToRgb.data[i * 4 + 0];

//rgb[1] = i_depthToRgb.data[i * 4 + 1];

//rgb[0] = i_depthToRgb.data[i * 4 + 2];

//// 显示点

//glColor3ubv(rgb);

//glVertex3f(cameraX, -cameraY, cameraZ);

}

}

fclose(file);

cout << "文件保存成功" << endl;

}

}

}

// 释放资源

SafeRelease(m_pColorFrame);

SafeRelease(m_pDepthFrame);

SafeRelease(m_pInfraredFrame);

SafeRelease(m_pColorFrameReference);

SafeRelease(m_pDepthFrameReference);

SafeRelease(m_pInfraredFrameReference);

SafeRelease(m_pMultiFrame);

}

不停刷新显示

cv::destroyAllWindows();

m_pKinectSensor->Close();

std::system("pause");

return 0;

结束。

运行结果

预留任务

深度信息保存为pcd格式。

欢迎留言。