PCL+kinect2.0实现点云数据的获取及储存

说明

最近也是开始了PCL的学习之路,希望在与大家交流的过程中可以从小白一步步成长。这篇代码也是受一位学长和网上一些相关文章的启发写出来的,下面我会给出源码和一些容易掉进去的坑,希望有志同道合的朋友可以多多指正。那位学长也是很厉害的,大家可以去他的主页看看有没有自己一直想解决却解决不了的问题的方法。他的主页

点云显示+储存 代码:

#include"kinect2_grabber.h"

//---------------------------------

#include

#include

#include

#include

#include

typedef pcl::PointXYZRGBA PointType;

int main()

{

int c = 0;

int total = 0;//文件名

//pcl::PointCloud cloud_filtered;// 按范围过滤后的点云

boost::shared_ptr m_viewer;

//显示窗口初始化

m_viewer.reset(new pcl::visualization::PCLVisualizer("viewer", false));

m_viewer->setBackgroundColor(0, 0, 0);//设置背景颜色

m_viewer->initCameraParameters();

//m_viewer->addCoordinateSystem();//添加红绿蓝坐标轴

m_viewer->createInteractor();

// 获取Kinect设备

// Point Cloud

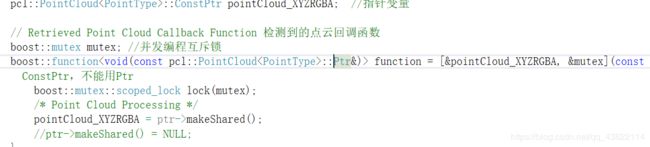

pcl::PointCloud::ConstPtr pointCloud_XYZRGBA; //指针变量

// Retrieved Point Cloud Callback Function 检测到的点云回调函数

boost::mutex mutex; //并发编程互斥锁

boost::function::ConstPtr&)> function = [&pointCloud_XYZRGBA, &mutex](const pcl::PointCloud::ConstPtr& ptr) { //只能用ConstPtr,不能用Ptr

boost::mutex::scoped_lock lock(mutex);

/* Point Cloud Processing */

pointCloud_XYZRGBA = ptr->makeShared();

//ptr->makeShared() = NULL;

};

boost::shared_ptr grabberForKinect = boost::shared_ptr(new pcl::Kinect2Grabber);// Kinect2Grabber

boost::signals2::connection connection = grabberForKinect->registerCallback(function); // Register Callback Function

grabberForKinect->start(); //Start Grabber

while (!m_viewer->wasStopped()) {

// Update Viewer

c++;

m_viewer->spinOnce(1); //调用交互程序并更新屏幕一次。

boost::mutex::scoped_try_lock lock(mutex);

//cloud_filtered = filterByScope(pointCloud_XYZRGBA, Z_nearby, Z_faraway, X_left, X_right, Y_bottom, Y_top);//截取对应范围图像

if (lock.owns_lock() && pointCloud_XYZRGBA) {

//cloud_filtered = filterByScope(pointCloud_XYZRGBA, Z_nearby, Z_faraway, X_left, X_right, Y_bottom, Y_top);

//5 显示 Point Cloud

if (!m_viewer->updatePointCloud(pointCloud_XYZRGBA->makeShared(), "cloud")) {//pointCloud_XYZRGBA是指针类型,不能用( . )运算符

m_viewer->addPointCloud(pointCloud_XYZRGBA->makeShared(), "cloud");

}

if (c % 10 == 0) {//每隔一段时间保存一次图片

//存储图片。

char name[100];

sprintf(name, "D:\\%d.pcd", total++);

pcl::io::savePCDFileASCII(name, *pointCloud_XYZRGBA);//以.pcd的格式保存点云数据到磁盘

}

}

}//while

}

关于代码,其中已经给注释了,还有几个比较容易出问题的地方。

一、

这里阴影部分的内容,一定要是 ConstPtr (参照第一篇源码) 否则就会出现下列的错误

二、

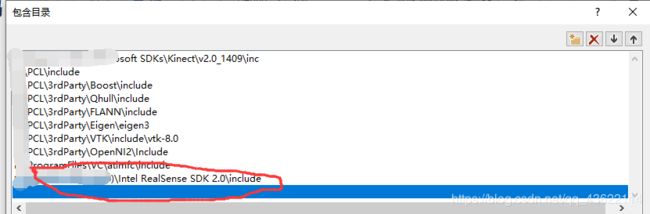

调试——>项目属性——>VC++ 目录——>包含目录 ,里不要出现这样一项,我就是因为配置的问题,搞了好几天,脑袋都大了,最后是这里有问题。如果带上这一项的话,会出现无法解析的外部符号。

另外可能有的朋友没有kinect2_grabber.h这个头文件的源码,下面给出:

#pragma once

#pragma once

// Kinect2Grabber is pcl::Grabber to retrieve the point cloud data from Kinect v2 using Kinect for Windows SDK 2.x.

// This source code is licensed under the MIT license. Please see the License in License.txt.

#ifndef KINECT2_GRABBER

#define KINECT2_GRABBER

#define NOMINMAX

#include

#include

#include

#include

#include

#include

namespace pcl

{

struct pcl::PointXYZ;

struct pcl::PointXYZI;

struct pcl::PointXYZRGB;

struct pcl::PointXYZRGBA;

template class pcl::PointCloud;

template

inline void SafeRelease(Interface *& IRelease)

{

if (IRelease != NULL) {

IRelease->Release();

IRelease = NULL;

}

}

class Kinect2Grabber : public pcl::Grabber

{

public:

Kinect2Grabber();

virtual ~Kinect2Grabber() throw ();

virtual void start();

virtual void stop();

virtual bool isRunning() const;

virtual std::string getName() const;

virtual float getFramesPerSecond() const;

typedef void (signal_Kinect2_PointXYZ)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZI)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZRGB)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZRGBA)(const boost::shared_ptr>&);

protected:

boost::signals2::signal* signal_PointXYZ;

boost::signals2::signal* signal_PointXYZI;

boost::signals2::signal* signal_PointXYZRGB;

boost::signals2::signal* signal_PointXYZRGBA;

pcl::PointCloud::Ptr convertDepthToPointXYZ(UINT16* depthBuffer);

pcl::PointCloud::Ptr convertInfraredDepthToPointXYZI(UINT16* infraredBuffer, UINT16* depthBuffer);

pcl::PointCloud::Ptr convertRGBDepthToPointXYZRGB(RGBQUAD* colorBuffer, UINT16* depthBuffer);

pcl::PointCloud::Ptr convertRGBADepthToPointXYZRGBA(RGBQUAD* colorBuffer, UINT16* depthBuffer);

boost::thread thread;

mutable boost::mutex mutex;

void threadFunction();

bool quit;

bool running;

HRESULT result;

IKinectSensor* sensor;

ICoordinateMapper* mapper;

IColorFrameSource* colorSource;

IColorFrameReader* colorReader;

IDepthFrameSource* depthSource;

IDepthFrameReader* depthReader;

IInfraredFrameSource* infraredSource;

IInfraredFrameReader* infraredReader;

int colorWidth;

int colorHeight;

std::vector colorBuffer;

int depthWidth;

int depthHeight;

std::vector depthBuffer;

int infraredWidth;

int infraredHeight;

std::vector infraredBuffer;

};

pcl::Kinect2Grabber::Kinect2Grabber()

: sensor(nullptr)

, mapper(nullptr)

, colorSource(nullptr)

, colorReader(nullptr)

, depthSource(nullptr)

, depthReader(nullptr)

, infraredSource(nullptr)

, infraredReader(nullptr)

, result(S_OK)

, colorWidth(1920)

, colorHeight(1080)

, colorBuffer()

, depthWidth(512)

, depthHeight(424)

, depthBuffer()

, infraredWidth(512)

, infraredHeight(424)

, infraredBuffer()

, running(false)

, quit(false)

, signal_PointXYZ(nullptr)

, signal_PointXYZI(nullptr)

, signal_PointXYZRGB(nullptr)

, signal_PointXYZRGBA(nullptr)

{

// Create Sensor Instance

result = GetDefaultKinectSensor(&sensor);

if (FAILED(result)) {

throw std::exception("Exception : GetDefaultKinectSensor()");

}

// Open Sensor

result = sensor->Open();

if (FAILED(result)) {

throw std::exception("Exception : IKinectSensor::Open()");

}

// Retrieved Coordinate Mapper

result = sensor->get_CoordinateMapper(&mapper);

if (FAILED(result)) {

throw std::exception("Exception : IKinectSensor::get_CoordinateMapper()");

}

// Retrieved Color Frame Source

result = sensor->get_ColorFrameSource(&colorSource);

if (FAILED(result)) {

throw std::exception("Exception : IKinectSensor::get_ColorFrameSource()");

}

// Retrieved Depth Frame Source

result = sensor->get_DepthFrameSource(&depthSource);

if (FAILED(result)) {

throw std::exception("Exception : IKinectSensor::get_DepthFrameSource()");

}

// Retrieved Infrared Frame Source

result = sensor->get_InfraredFrameSource(&infraredSource);

if (FAILED(result)) {

throw std::exception("Exception : IKinectSensor::get_InfraredFrameSource()");

}

// Retrieved Color Frame Size

IFrameDescription* colorDescription;

result = colorSource->get_FrameDescription(&colorDescription);

if (FAILED(result)) {

throw std::exception("Exception : IColorFrameSource::get_FrameDescription()");

}

result = colorDescription->get_Width(&colorWidth); // 1920

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = colorDescription->get_Height(&colorHeight); // 1080

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(colorDescription);

// To Reserve Color Frame Buffer

colorBuffer.resize(colorWidth * colorHeight);

// Retrieved Depth Frame Size

IFrameDescription* depthDescription;

result = depthSource->get_FrameDescription(&depthDescription);

if (FAILED(result)) {

throw std::exception("Exception : IDepthFrameSource::get_FrameDescription()");

}

result = depthDescription->get_Width(&depthWidth); // 512

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = depthDescription->get_Height(&depthHeight); // 424

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(depthDescription);

// To Reserve Depth Frame Buffer

depthBuffer.resize(depthWidth * depthHeight);

// Retrieved Infrared Frame Size

IFrameDescription* infraredDescription;

result = infraredSource->get_FrameDescription(&infraredDescription);

if (FAILED(result)) {

throw std::exception("Exception : IInfraredFrameSource::get_FrameDescription()");

}

result = infraredDescription->get_Width(&infraredWidth); // 512

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = infraredDescription->get_Height(&infraredHeight); // 424

if (FAILED(result)) {

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(infraredDescription);

// To Reserve Infrared Frame Buffer

infraredBuffer.resize(infraredWidth * infraredHeight);

signal_PointXYZ = createSignal();

signal_PointXYZI = createSignal();

signal_PointXYZRGB = createSignal();

signal_PointXYZRGBA = createSignal();

}

pcl::Kinect2Grabber::~Kinect2Grabber() throw()

{

stop();

disconnect_all_slots();

disconnect_all_slots();

disconnect_all_slots();

disconnect_all_slots();

thread.join();

// End Processing

if (sensor) {

sensor->Close();

}

SafeRelease(sensor);

SafeRelease(mapper);

SafeRelease(colorSource);

SafeRelease(colorReader);

SafeRelease(depthSource);

SafeRelease(depthReader);

SafeRelease(infraredSource);

SafeRelease(infraredReader);

}

void pcl::Kinect2Grabber::start()

{

// Open Color Frame Reader

result = colorSource->OpenReader(&colorReader);

if (FAILED(result)) {

throw std::exception("Exception : IColorFrameSource::OpenReader()");

}

// Open Depth Frame Reader

result = depthSource->OpenReader(&depthReader);

if (FAILED(result)) {

throw std::exception("Exception : IDepthFrameSource::OpenReader()");

}

// Open Infrared Frame Reader

result = infraredSource->OpenReader(&infraredReader);

if (FAILED(result)) {

throw std::exception("Exception : IInfraredFrameSource::OpenReader()");

}

running = true;

thread = boost::thread(&Kinect2Grabber::threadFunction, this);

}

void pcl::Kinect2Grabber::stop()

{

boost::unique_lock lock(mutex);

quit = true;

running = false;

lock.unlock();

}

bool pcl::Kinect2Grabber::isRunning() const

{

boost::unique_lock lock(mutex);

return running;

lock.unlock();

}

std::string pcl::Kinect2Grabber::getName() const

{

return std::string("Kinect2Grabber");

}

float pcl::Kinect2Grabber::getFramesPerSecond() const

{

return 30.0f;

}

void pcl::Kinect2Grabber::threadFunction()

{

while (!quit) {

boost::unique_lock lock(mutex);

// Acquire Latest Color Frame

IColorFrame* colorFrame = nullptr;

result = colorReader->AcquireLatestFrame(&colorFrame);

if (SUCCEEDED(result)) {

// Retrieved Color Data

result = colorFrame->CopyConvertedFrameDataToArray(colorBuffer.size() * sizeof(RGBQUAD), reinterpret_cast(&colorBuffer[0]), ColorImageFormat::ColorImageFormat_Bgra);

if (FAILED(result)) {

throw std::exception("Exception : IColorFrame::CopyConvertedFrameDataToArray()");

}

}

SafeRelease(colorFrame);

// Acquire Latest Depth Frame

IDepthFrame* depthFrame = nullptr;

result = depthReader->AcquireLatestFrame(&depthFrame);

if (SUCCEEDED(result)) {

// Retrieved Depth Data

result = depthFrame->CopyFrameDataToArray(depthBuffer.size(), &depthBuffer[0]);

if (FAILED(result)) {

throw std::exception("Exception : IDepthFrame::CopyFrameDataToArray()");

}

}

SafeRelease(depthFrame);

// Acquire Latest Infrared Frame

IInfraredFrame* infraredFrame = nullptr;

result = infraredReader->AcquireLatestFrame(&infraredFrame);

if (SUCCEEDED(result)) {

// Retrieved Infrared Data

result = infraredFrame->CopyFrameDataToArray(infraredBuffer.size(), &infraredBuffer[0]);

if (FAILED(result)) {

throw std::exception("Exception : IInfraredFrame::CopyFrameDataToArray()");

}

}

SafeRelease(infraredFrame);

lock.unlock();

if (signal_PointXYZ->num_slots() > 0) {

signal_PointXYZ->operator()(convertDepthToPointXYZ(&depthBuffer[0]));

}

if (signal_PointXYZI->num_slots() > 0) {

signal_PointXYZI->operator()(convertInfraredDepthToPointXYZI(&infraredBuffer[0], &depthBuffer[0]));

}

if (signal_PointXYZRGB->num_slots() > 0) {

signal_PointXYZRGB->operator()(convertRGBDepthToPointXYZRGB(&colorBuffer[0], &depthBuffer[0]));

}

if (signal_PointXYZRGBA->num_slots() > 0) {

signal_PointXYZRGBA->operator()(convertRGBADepthToPointXYZRGBA(&colorBuffer[0], &depthBuffer[0]));

}

}

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertDepthToPointXYZ(UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZ* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++) {

for (int x = 0; x < depthWidth; x++, pt++) {

pcl::PointXYZ point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertInfraredDepthToPointXYZI(UINT16* infraredBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZI* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++) {

for (int x = 0; x < depthWidth; x++, pt++) {

pcl::PointXYZI point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Setting PointCloud Intensity

point.intensity = static_cast(infraredBuffer[y * depthWidth + x]);

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertRGBDepthToPointXYZRGB(RGBQUAD* colorBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZRGB* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++) {

for (int x = 0; x < depthWidth; x++, pt++) {

pcl::PointXYZRGB point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Color Space, and Setting PointCloud RGB

ColorSpacePoint colorSpacePoint = { 0.0f, 0.0f };

mapper->MapDepthPointToColorSpace(depthSpacePoint, depth, &colorSpacePoint);

int colorX = static_cast(std::floor(colorSpacePoint.X + 0.5f));

int colorY = static_cast(std::floor(colorSpacePoint.Y + 0.5f));

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)) {

RGBQUAD color = colorBuffer[colorY * colorWidth + colorX];

point.b = color.rgbBlue;

point.g = color.rgbGreen;

point.r = color.rgbRed;

}

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)) {

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

}

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertRGBADepthToPointXYZRGBA(RGBQUAD* colorBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZRGBA* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++) {

for (int x = 0; x < depthWidth; x++, pt++) {

pcl::PointXYZRGBA point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Color Space, and Setting PointCloud RGBA

ColorSpacePoint colorSpacePoint = { 0.0f, 0.0f };

mapper->MapDepthPointToColorSpace(depthSpacePoint, depth, &colorSpacePoint);

int colorX = static_cast(std::floor(colorSpacePoint.X + 0.5f));

int colorY = static_cast(std::floor(colorSpacePoint.Y + 0.5f));

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)) {

RGBQUAD color = colorBuffer[colorY * colorWidth + colorX];

point.b = color.rgbBlue;

point.g = color.rgbGreen;

point.r = color.rgbRed;

point.a = color.rgbReserved;

}

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)) {

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

}

*pt = point;

}

}

return cloud;

}

}

#endif KINECT2_GRABBER

最后希望大家有问题多多留言,我们共同进步。

转载注明出处,方便有问题的朋友交流,谢谢配合!