Dubbo整合Seata实现AT模式分布式事务

Seata

Seata 是一款开源的分布式事务解决方案,致力于提供高性能和简单易用的分布式事务服务。Seata 将为用户提供了 AT、TCC、SAGA 和 XA 事务模式,为用户打造一站式的分布式解决方案。

Seata的事务模式

Seata针对不同的业务场景提供了四种不同的事务模式,具体如下

- AT模式: AT 模式的一阶段、二阶段提交和回滚(借助undo_log表来实现)均由 Seata 框架自动生成,用户只需编写“业务SQL”,便能轻松接入分布式事务,AT 模式是一种对业务无任何侵入的分布式事务解决方案。

- TTC模式: 相对于 AT 模式,TCC 模式对业务代码有一定的侵入性,但是 TCC 模式无 AT 模式的全局行锁,TCC 性能会比 AT模式高很多。( 适用于核心系统等对性能有很高要求的场景。)

- SAGA模式:Sage 是长事务解决方案,事务驱动,使用那种存在流程审核的业务场景,如: 金融行业,需要层层审核。

- XA模式: XA模式是分布式强一致性的解决方案,但性能低而使用较少。

AT模式

AT模式,分为两个阶段

- 一阶段:业务数据和回滚日志记录在同一个本地事务中提交,释放本地锁和连接资源

- 二阶段:提交异步化 ( 或者事务执行失败,回滚通过一阶段的回滚日志进行反向补偿)

一阶段:本地事务提交

在一阶段,Seata 会拦截“业务 SQL”,首先解析 SQL 语义,找到“业务 SQL”要更新的业务数据,在业务数据被更新前,将其保存成“before image”,然后执行“业务 SQL”更新业务数据,在业务数据更新之后,再将其保存成“after image”,最后生成行锁。以上操作全部在一个数据库事务内完成,这样保证了一阶段操作的原子性。

二阶段:执行成功,进行分布式事务提交

业务 SQL”在一阶段已经提交至数据库, 所以 Seata 框架只需将一阶段保存的快照数据和行锁删掉,完成数据清理即可。

二阶段:执行失败,进行业务回滚

首先对比“数据库当前业务数据”和 “after image”,避免发生业务脏写(类似于CAS操作),完成校验后用“before image”还原业务数据,并且删除中间数据。

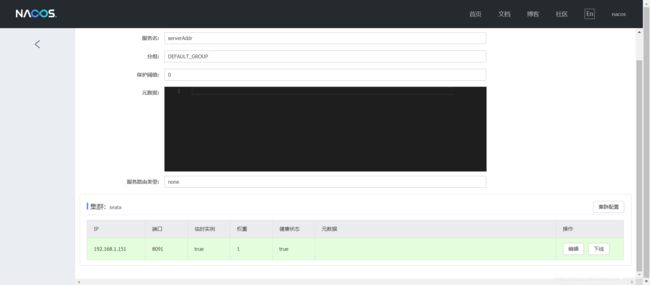

Seata Server安装

第一步:下载安装服务端,并解压到指定位置

unzip seata-server-1.1.0.zip

seata的目录结构:

- bin:存放各系统的启动脚本

- conf:存放seata server启动时所需要配置信息、数据库模式下所需要的建表语句

- lib:运行seata server所需要的的依赖包

第二步:配置seata

seata的配置文件(conf目录下)

- file.conf: 该文件用于配置存储方式、透传事务信息的NIO等信息,默认对应registry.conf中file配置方式

- registry.conf:seata server核心配置文件,可以通过该文件配置服务注册方式、配置读取方式。

注册方式目前支持file、nacos、eureka、redis、zk、consul、etcd3、sofa等方式,默认为file,对应读取file.conf内的注册方式信息。

读取配置信息的方式支持file、nacos、apollo、zk、consul、etcd3等方式,默认为file,对应读取file.conf文件内的配置。

修改registry.conf

- 注册中心使用Nacos

- 配置中心使用file进行配置

# 注册中心配置,用于TC,TM,RM的相互服务发现

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

consul {

cluster = "seata"

serverAddr = "127.0.0.1:8848"

}

}

# 配置中心配置,用于读取TC的相关配置

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "file"

file {

name = "file.conf"

}

}

修改file.conf,配置中心配置,用于读取TC的相关配置

store {

## store mode: file?.b

mode = "file"

## file store property

file {

## store location dir

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

maxBranchSessionSize = 16384

# globe session size , if exceeded throws exceptions

maxGlobalSessionSize = 512

# file buffer size , if exceeded allocate new buffer

fileWriteBufferCacheSize = 16384

# when recover batch read size

sessionReloadReadSize = 100

# async, sync

flushDiskMode = async

}

## database store property

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://127.0.0.1:3306/seata"

registry.confuser = "mysql"

password = "mysql"

minConn = 1

maxConn = 10

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

}

}

第三步:创建seata数据库、以及所需的三张表

- global_table: 存储全局事务session数据的表

- branch_table:存储分支事务session数据的表

- lockTable:存储分布式锁数据的表

-- -------------------------------- The script used when storeMode is 'db' --------------------------------

-- the table to store GlobalSession data

CREATE TABLE IF NOT EXISTS `global_table`

(

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`status` TINYINT NOT NULL,

`application_id` VARCHAR(32),

`transaction_service_group` VARCHAR(32),

`transaction_name` VARCHAR(128),

`timeout` INT,

`begin_time` BIGINT,

`application_data` VARCHAR(2000),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`xid`),

KEY `idx_gmt_modified_status` (`gmt_modified`, `status`),

KEY `idx_transaction_id` (`transaction_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8;

-- the table to store BranchSession data

CREATE TABLE IF NOT EXISTS `branch_table`

(

`branch_id` BIGINT NOT NULL,

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`resource_group_id` VARCHAR(32),

`resource_id` VARCHAR(256),

`branch_type` VARCHAR(8),

`status` TINYINT,

`client_id` VARCHAR(64),

`application_data` VARCHAR(2000),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`branch_id`),

KEY `idx_xid` (`xid`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8;

-- the table to store lock data

CREATE TABLE IF NOT EXISTS `lock_table`

(

`row_key` VARCHAR(128) NOT NULL,

`xid` VARCHAR(96),

`transaction_id` BIGINT,

`branch_id` BIGINT NOT NULL,

`resource_id` VARCHAR(256),

`table_name` VARCHAR(32),

`pk` VARCHAR(36),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`row_key`),

KEY `idx_branch_id` (`branch_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8;

第四步:启动seata server

sh seata-server.sh -p 8091 -h 0.0.0.0 -m file

Options:

--host, -h

The host to bind.

Default: 0.0.0.0

--port, -p

The port to listen.

Default: 8091

--storeMode, -m log store mode : file、db

Default: file

--help

补充:

- 外网访问:如果需要外网访问 需要将0.0.0.0转成外网IP

- 后台启动: nohup sh seata-server.sh -p 8091 -h 127.0.0.1 -m file >catalina.out 2>&1 &

Dubbo整合Seata实现AT模式

注入依赖

<!--seata-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-seata</artifactId>

<version>2.1.0.RELEASE</version>

<exclusions>

<exclusion>

<artifactId>seata-all</artifactId>

<groupId>io.seata</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>1.1.0</version>

</dependency>

application.properties 配置文件

#mysql

spring.datasource.type=com.alibaba.druid.pool.DruidDataSource

spring.datasource.driverClassName=com.mysql.cj.jdbc.Driver

spring.datasource.url=jdbc:mysql://127.0.0.1:3306/dubbodemo

spring.datasource.username=mysql

spring.datasource.password=mysql

spring.cloud.alibaba.seata.tx-service-group=springcloud-alibaba-producer-test

resoures目录下新建 file.conf ,配置如下

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

# the client batch send request enable

enableClientBatchSendRequest = false

#thread factory for netty

threadFactory {

bossThreadPrefix = "NettyBoss"

workerThreadPrefix = "NettyServerNIOWorker"

serverExecutorThreadPrefix = "NettyServerBizHandler"

shareBossWorker = false

clientSelectorThreadPrefix = "NettyClientSelector"

clientSelectorThreadSize = 1

clientWorkerThreadPrefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

bossThreadSize = 1

#auto default pin or 8

workerThreadSize = "default"

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

# service configuration, only used in client side

service {

#transaction service group mapping

### springcloud-alibaba-demo-test 对应的是application.properties中配置的

### seata对应 seata-server中配置的集群名称clutser

vgroupMapping.springcloud-alibaba-producer-test= "seata"

seata.grouplist = "127.0.0.1:8091"

#degrade, current not support

enableDegrade = false

#disable seata

disableGlobalTransaction = false

}

#client transaction configuration, only used in client side

client {

rm {

asyncCommitBufferLimit = 10000

lock {

retryInterval = 10

retryTimes = 30

retryPolicyBranchRollbackOnConflict = true

}

reportRetryCount = 5

tableMetaCheckEnable = false

reportSuccessEnable = false

sqlParserType = druid

}

tm {

commitRetryCount = 5

rollbackRetryCount = 5

}

undo {

dataValidation = true

logSerialization = "jackson"

logTable = "undo_log"

}

log {

exceptionRate = 100

}

}

## transaction log store, only used in server side

store {

## store mode: file、db

mode = "file"

## file store property

file {

## store location dir

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

maxBranchSessionSize = 16384

# globe session size , if exceeded throws exceptions

maxGlobalSessionSize = 512

# file buffer size , if exceeded allocate new buffer

fileWriteBufferCacheSize = 16384

# when recover batch read size

sessionReloadReadSize = 100

# async, sync

flushDiskMode = async

}

## database store property

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://127.0.0.1:3306/seata"

user = "mysql"

password = "mysql"

minConn = 1

maxConn = 10

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

}

}

## server configuration, only used in server side

server {

recovery {

#schedule committing retry period in milliseconds

committingRetryPeriod = 1000

#schedule asyn committing retry period in milliseconds

asynCommittingRetryPeriod = 1000

#schedule rollbacking retry period in milliseconds

rollbackingRetryPeriod = 1000

#schedule timeout retry period in milliseconds

timeoutRetryPeriod = 1000

}

undo {

logSaveDays = 7

#schedule delete expired undo_log in milliseconds

logDeletePeriod = 86400000

}

#unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent

maxCommitRetryTimeout = "-1"

maxRollbackRetryTimeout = "-1"

rollbackRetryTimeoutUnlockEnable = false

}

## metrics configuration, only used in server side

metrics {

enabled = false

registryType = "compact"

# multi exporters use comma divided

exporterList = "prometheus"

exporterPrometheusPort = 9898

}

resoures目录下新建registry.conf 配置如下:

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "127.0.0.1:8848"

namespace = ""

cluster = "seata"

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

application = "default"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = "0"

}

zk {

cluster = "default"

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

consul {

cluster = "default"

serverAddr = "127.0.0.1:8500"

}

etcd3 {

cluster = "default"

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

application = "default"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

cluster = "default"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "file"

nacos {

serverAddr = "localhost"

namespace = ""

group = "SEATA_GROUP"

}

consul {

serverAddr = "127.0.0.1:8500"

}

apollo {

app.id = "seata-server"

apollo.meta = "http://192.168.1.204:8801"

namespace = "application"

}

zk {

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}

DataSourceProxyConfig数据源加载

- SEATA是基于数据源拦截来实现的分布式事务,

需要排除掉SpringBoot默认自动注入的DataSourceAutoConfigurationBean,自定义配置数据源。

在启动类上添加:@SpringBootApplication(exclude = DataSourceAutoConfiguration.class) ,并添加以下配置类

@Configuration

public class DataSourceProxyConfig {

@Bean

@ConfigurationProperties(prefix = "spring.datasource")

public DataSource druidDataSource(){

return new DruidDataSource();

}

@Bean

public DataSourceProxy dataSourceProxy(DataSource dataSource) {

return new DataSourceProxy(dataSource);

}

@Bean

public SqlSessionFactory sqlSessionFactoryBean(DataSourceProxy dataSourceProxy) throws Exception {

SqlSessionFactoryBean sqlSessionFactoryBean = new SqlSessionFactoryBean();

sqlSessionFactoryBean.setDataSource(dataSourceProxy);

sqlSessionFactoryBean.setMapperLocations(new PathMatchingResourcePatternResolver()

.getResources("classpath*:/mapper/*Mapper.xml"));

sqlSessionFactoryBean.setTransactionFactory(new SpringManagedTransactionFactory());

return sqlSessionFactoryBean.getObject();

}

@Bean

public GlobalTransactionScanner globalTransactionScanner(){

return new GlobalTransactionScanner("springcloud-alibaba-consumer", "springcloud-alibaba-comsumer-test");

}

}

创建业务表和undo-log表

CREATE TABLE IF NOT EXISTS `undo_log`

(

`id` BIGINT(20) NOT NULL AUTO_INCREMENT COMMENT 'increment id',

`branch_id` BIGINT(20) NOT NULL COMMENT 'branch transaction id',

`xid` VARCHAR(100) NOT NULL COMMENT 'global transaction id',

`context` VARCHAR(128) NOT NULL COMMENT 'undo_log context,such as serialization',

`rollback_info` LONGBLOB NOT NULL COMMENT 'rollback info',

`log_status` INT(11) NOT NULL COMMENT '0:normal status,1:defense status',

`log_created` DATETIME NOT NULL COMMENT 'create datetime',

`log_modified` DATETIME NOT NULL COMMENT 'modify datetime',

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`, `branch_id`)

) ENGINE = InnoDB

AUTO_INCREMENT = 1

DEFAULT CHARSET = utf8 COMMENT ='AT transaction mode undo table';

# 业务数据表

CREATE TABLE `sys_user` (

`id` varchar(36) NOT NULL,

`name` varchar(100) NOT NULL,

`msg` varchar(500) NOT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO `sys_user` (`id`, `name`, `msg`) VALUES ('1', '小王', '初始化数据');

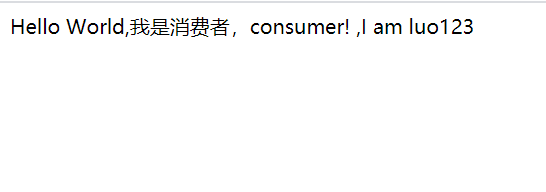

核心业务方法

@Override

@GlobalTransactional

public String ceshi(String input) {

UserPojo userPojo = userPojoMapper.selectByPrimaryKey("1");

userPojo.setName("luo123");

userPojoMapper.updateByPrimaryKey(userPojo);

try {

// 方便数据库看数据,暂停20秒

Thread.sleep(20 * 1000);

} catch (InterruptedException e) {

e.printStackTrace();

return "";

}

return "Hello World,"+input+"! ,I am "+ userPojo.getName();

}

该项目源码:可在我的github中获取