Python Neural Network - MNIST Handwritten Digit Recognition

MNIST Handwritten Digit Recognition

- Function Description
- Python Source Code
- Run Results

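Function Description

Train a neural network on the MNIST handwritten-digit dataset and use it for inference (recognizing digits). The dataset in CSV format is available here: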

http://pjreddie.com/projects/mnist-in-csv/

http://www.pjreddie.com/media/files/mnist_train.csv

http://www.pjreddie.com/media/files/mnist_test.csv
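In these CSV files each line is one image. The first value is the label (0-9), and the remaining 784 values are the pixel intensities (0-255) of the 28x28 image, row by row. The sketch below turns one line into the network's input and target vectors, using the same scaling as the training code. The function name `parse_record` and the synthetic record are hypothetical and only illustrate the format.

```python
import numpy


def parse_record(line, output_nodes=10):
    """Hypothetical helper: split one MNIST-CSV line into (label, inputs, targets)."""
    all_values = line.strip().split(',')
    label = int(all_values[0])
    # scale pixels 0..255 into 0.01..1.00 so that no input is exactly zero
    inputs = numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
    # one-hot style target that uses 0.01/0.99 instead of 0/1
    targets = numpy.zeros(output_nodes) + 0.01
    targets[label] = 0.99
    return label, inputs, targets


# synthetic record (not real MNIST data): label 7, first pixel 255, the other 783 pixels 0
record = "7,255" + ",0" * 783 + "\n"
label, inputs, targets = parse_record(record)
print(label, inputs.shape, targets.argmax())  # 7 (784,) 7
```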

Python Source Code

#!/usr/bin/env python
# coding: utf-8

# In[1]:

import numpy
import scipy.special  # provides the sigmoid function, called expit()
import matplotlib.pyplot  # plotting library

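# In[2]:

# Create the neural network class. It needs at least 3 functions:
# initialisation -- set the number of input, hidden and output nodes, and the learning rate
# train -- refine the weights after learning from the given training samples
# query -- given an input, return the answer from the output nodes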

# neural network class definition
class neuralNetwork:

    # initialise the neural network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        # set number of nodes in each input, hidden, output layer
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        # learning rate
        self.lr = learningrate

        # link weight matrices, wih and who
        # weights inside the arrays are w_i_j, where the link is from node i to node j in the next layer
        # w11 w21
        # w12 w22 etc
        # Initialise the weights to random numbers in [-0.5, 0.5). Do not swap rows and columns:
        # wih is (hidden x input), who is (output x hidden)
        self.wih = numpy.random.rand(self.hnodes, self.inodes) - 0.5
        self.who = numpy.random.rand(self.onodes, self.hnodes) - 0.5

        # Optional: initialise the weights from a normal distribution centred on 0.0
        # self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        # self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))

        # The activation function is the sigmoid: it takes x as input and returns expit(x)
        self.activation_function = lambda x: scipy.special.expit(x)

    # train the neural network
    def train(self, inputs_list, targets_list):
        # convert inputs list to 2d array (column vectors)
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        # output layer error is the (target - actual)
        output_errors = targets - final_outputs
        # Key step: back-propagate the error. The hidden layer error is the
        # output_errors, split by weights, recombined at hidden nodes
        hidden_errors = numpy.dot(self.who.T, output_errors)

        # Weight update: W += lr * (error * sigmoid'(x)) . (previous layer outputs)^T,
        # where sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x)).
        # Here * is element-wise multiplication and dot is the matrix product.
        # update the weights for the links between the hidden and output layers
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        # update the weights for the links between the input and hidden layers
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

    # query the neural network
    def query(self, inputs_list):
        # convert inputs_list to a 2d array (column vector) for the calculation
        inputs = numpy.array(inputs_list, ndmin=2).T

        # matrix multiplication: signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # activation
        hidden_outputs = self.activation_function(hidden_inputs)

        # matrix multiplication: signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # activation
        final_outputs = self.activation_function(final_inputs)

        return final_outputs

# In[3]:

# number of input, hidden and output nodes
input_nodes = 784  # 28*28=784
hidden_nodes = 100
output_nodes = 10

# learning rate
learning_rate = 0.3

# create instance of neural network
n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# In[4]:

# Read the training set file (in Jupyter, first upload it via File > Open > Upload)
with open("mnist_train_500.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()  # read all records into a list; reading one line at a time also works

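# In[5]:

# train the neural network
for record in training_data_list:
    all_values = record.split(',')
    # image_array = numpy.asarray(all_values[1:], dtype=float).reshape((28, 28))
    # matplotlib.pyplot.imshow(image_array, cmap='Greys', interpolation='none')

    # Scale the pixel values (0-255) into the range 0.01-1.00.
    # 0.01 is the lowest value because zero-valued inputs artificially stop the
    # weight updates (a weight's update is proportional to its input signal).
    # Inputs may reach 1.0, but outputs may not.
    # (numpy.asfarray was removed in NumPy 2.0; asarray(..., dtype=float) is equivalent)
    inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01

    # Build the target outputs, using 0.01 and 0.99 instead of 0 and 1 to avoid
    # saturating the network (the sigmoid cannot output exactly 0 or 1)
    targets = numpy.zeros(output_nodes) + 0.01  # the target output has 10 values
    targets[int(all_values[0])] = 0.99

    n.train(inputs, targets)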

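# In[6]:

# test the neural network
with open("mnist_test.csv", 'r') as test_data_file:
    test_data_list = test_data_file.readlines()  # read all records into a list; reading one line at a time also works

# take the first test record; its first value is the label
all_values = test_data_list[0].split(',')
print(all_values[0])

# show the record as a 28x28 image
image_array = numpy.asarray(all_values[1:], dtype=float).reshape((28, 28))
matplotlib.pyplot.imshow(image_array, cmap='Greys', interpolation='none')

# query the network with the scaled pixel values (the notebook displays the returned array)
n.query((numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01)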

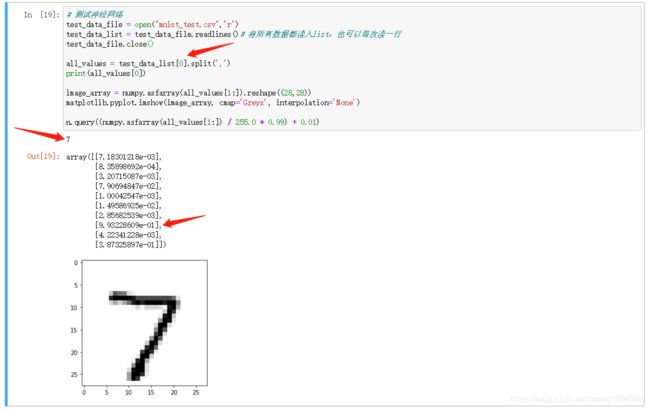

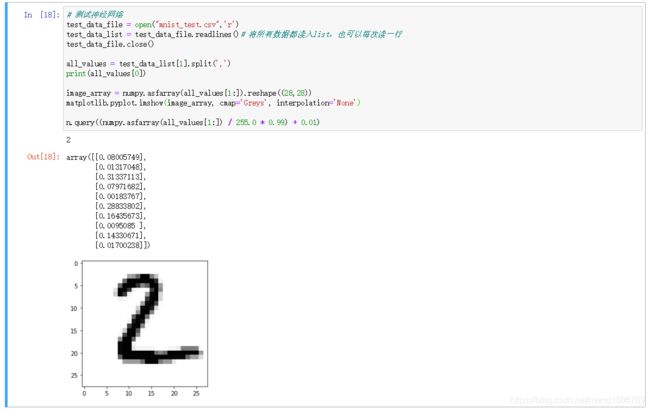
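The weight updates in `train()` can be written as matrix equations. The symbols here are this sketch's own notation, not the original's. I is the input column vector, O_h and O_o are `hidden_outputs` and `final_outputs`, T is `targets`, alpha is `lr`, W_ih and W_ho are `wih` and `who`, sigma is the sigmoid, and a circle denotes element-wise multiplication. The sigmoid derivative sigma(x)(1 - sigma(x)) gives the O(1 - O) factors.

```latex
\begin{aligned}
O_h &= \sigma(W_{ih} I), \qquad O_o = \sigma(W_{ho} O_h) \\
E_o &= T - O_o, \qquad E_h = W_{ho}^{\top} E_o \\
W_{ho} &\leftarrow W_{ho} + \alpha \bigl(E_o \circ O_o \circ (1 - O_o)\bigr) O_h^{\top} \\
W_{ih} &\leftarrow W_{ih} + \alpha \bigl(E_h \circ O_h \circ (1 - O_h)\bigr) I^{\top}
\end{aligned}
```

The output-layer update is gradient descent on the squared error (1/2)||T - O_o||^2. The hidden error E_h is computed from the raw output errors E_o. Exact back-propagation of that loss would instead propagate W_ho^T (E_o ∘ O_o ∘ (1 - O_o)), so the code uses a simplified form.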

Run Results

To test a different record, change the index into the test_data_list list (test_data_list[0] in the code above).
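To avoid editing the index by hand, you can wrap the test step in a small function that takes the index as a parameter. The sketch below is one way to do this. `check_record` and `fake_query` are hypothetical names. `fake_query` only stands in for `n.query` so the snippet runs on its own; with the trained network you would pass `n.query` and `test_data_list`.

```python
import numpy


def check_record(query, test_data_list, index):
    """Hypothetical helper: query the network on test record `index`.

    `query` is any callable that takes the scaled 784-value input (e.g. n.query).
    Returns (correct_label, predicted_digit, outputs).
    """
    all_values = test_data_list[index].strip().split(',')
    correct_label = int(all_values[0])
    inputs = numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
    outputs = query(inputs)
    # the index of the largest output is the recognised digit
    predicted_digit = int(numpy.argmax(outputs))
    return correct_label, predicted_digit, outputs


# stand-in for n.query (hypothetical): always gives digit 3 the highest output
def fake_query(inputs):
    outputs = numpy.full((10, 1), 0.01)
    outputs[3] = 0.9
    return outputs


records = ["3" + ",0" * 784 + "\n", "5" + ",0" * 784 + "\n"]
print(check_record(fake_query, records, 0)[:2])  # (3, 3)
print(check_record(fake_query, records, 1)[:2])  # (5, 3)
```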

The query returns an array of 10 output values, and the index of the largest value is the recognized digit.
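Applying the same "largest output" rule to every test record gives an accuracy estimate instead of a single check. This is a minimal sketch, assuming the test CSV lines are already in a non-empty list such as `test_data_list`. `evaluate` and `toy_query` are hypothetical names, and `toy_query` only stands in for `n.query` so the snippet runs by itself. With the trained network you would call `evaluate(n.query, test_data_list)`.

```python
import numpy


def evaluate(query, data_list):
    """Hypothetical helper: fraction of CSV records whose largest output matches the label."""
    scorecard = []
    for record in data_list:
        all_values = record.strip().split(',')
        correct_label = int(all_values[0])
        inputs = numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
        outputs = query(inputs)
        # recognised digit = index of the largest output value
        label = int(numpy.argmax(outputs))
        scorecard.append(1 if label == correct_label else 0)
    return sum(scorecard) / len(scorecard)


# stand-in for n.query (hypothetical): answers 1 if the first pixel is bright, else 0
def toy_query(inputs):
    outputs = numpy.full(10, 0.01)
    outputs[1 if inputs[0] > 0.5 else 0] = 0.99
    return outputs


toy_data = ["1,255" + ",0" * 783, "0" + ",0" * 784, "7" + ",0" * 784]
print(evaluate(toy_query, toy_data))  # 2 of 3 records correct: 0.6666666666666666
```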