AlexNet模型代码整理

1. 网络模型

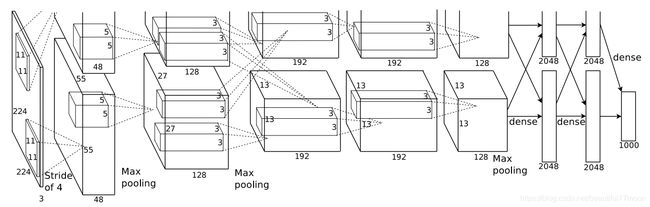

(论文是用两块gpu并行训练的,所以图中的模型是上下两部分的,第一层卷积核的个数是48+48=96)

2. 模型参数

3. 模型代码

import torch.nn as nn

import torch

class AlexNet(nn.Module):

def __init__(self, num_classes=1000, init_weights=False):

super(AlexNet, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=(1,2)), # input[3, 224, 224] output[48, 55, 55]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[48, 27, 27]

nn.Conv2d(96, 256, kernel_size=5, padding=2), # output[128, 27, 27]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 13, 13]

nn.Conv2d(256, 384, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(384, 384, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(384, 256, kernel_size=3, padding=1), # output[128, 13, 13]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 6, 6]

)

self.classifier = nn.Sequential(

nn.Dropout(p=0.5),

nn.Linear(256 * 6 * 6, 2048),

nn.ReLU(inplace=True),

nn.Dropout(p=0.5),

nn.Linear(2048, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, num_classes),

)

if init_weights: # pytorch中对卷积和全连接层自动进行kaiming初始化

self._initialize_weights()

def forward(self, x):

x = self.features(x) # 卷积层提取特征

x = torch.flatten(x, start_dim=1) # pytorch中tensor通常的排列顺序:[batch,channel,height,width]

x = self.classifier(x) # 全连接层分类

return x

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01) # 正态分布

nn.init.constant_(m.bias, 0)

4. 实验源码