讲解: 一份数据分析报告 #既包含代码又包含数据分析结果的PDF形式

- Markdown写法 :说明

- code import pandas as pd

快捷切换 esc+m 变为Markdown/esc+m 变为code

- 图形化呈现 :结果

练习1 这是一份用户消费行为的分析报告(数据来源于网络,用户在一家CD网站上的消费)

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

plt.style.use('ggplot')

columns = ['user_id','order_dt','order_products','order_amount']

df = pd.read_table('CDNOW_master.txt',names = columns,sep = '\s+',parse_dates= True)

- user_id 用户名

- order_dt 购买日期

- order_products 购买产品数

- order_amount 购买金额

df.head()

|

user_id |

order_dt |

order_products |

order_amount |

| 0 |

1 |

19970101 |

1 |

11.77 |

| 1 |

2 |

19970112 |

1 |

12.00 |

| 2 |

2 |

19970112 |

5 |

77.00 |

| 3 |

3 |

19970102 |

2 |

20.76 |

| 4 |

3 |

19970330 |

2 |

20.76 |

df.info()

RangeIndex: 69659 entries, 0 to 69658

Data columns (total 4 columns):

user_id 69659 non-null int64

order_dt 69659 non-null int64

order_products 69659 non-null int64

order_amount 69659 non-null float64

dtypes: float64(1), int64(3)

memory usage: 2.1 MB

需要对order_dt进行清理

df.describe()

|

user_id |

order_dt |

order_products |

order_amount |

| count |

69659.000000 |

6.965900e+04 |

69659.000000 |

69659.000000 |

| mean |

11470.854592 |

1.997228e+07 |

2.410040 |

35.893648 |

| std |

6819.904848 |

3.837735e+03 |

2.333924 |

36.281942 |

| min |

1.000000 |

1.997010e+07 |

1.000000 |

0.000000 |

| 25% |

5506.000000 |

1.997022e+07 |

1.000000 |

14.490000 |

| 50% |

11410.000000 |

1.997042e+07 |

2.000000 |

25.980000 |

| 75% |

17273.000000 |

1.997111e+07 |

3.000000 |

43.700000 |

| max |

23570.000000 |

1.998063e+07 |

99.000000 |

1286.010000 |

- 大部分订单只消费了少量商品(平均2.4),有一定极值干扰

- 用户的消费金额比较稳定,平均消费35元,中位数在35,有一定极值干扰

df['order_dt'] = pd.to_datetime(df.order_dt,format = "%Y%m%d")

df['month'] = df.order_dt.values.astype('datetime64[M]')

df

|

user_id |

order_dt |

order_products |

order_amount |

month |

| 0 |

1 |

1997-01-01 |

1 |

11.77 |

1997-01-01 |

| 1 |

2 |

1997-01-12 |

1 |

12.00 |

1997-01-01 |

| 2 |

2 |

1997-01-12 |

5 |

77.00 |

1997-01-01 |

| 3 |

3 |

1997-01-02 |

2 |

20.76 |

1997-01-01 |

| 4 |

3 |

1997-03-30 |

2 |

20.76 |

1997-03-01 |

| 5 |

3 |

1997-04-02 |

2 |

19.54 |

1997-04-01 |

| 6 |

3 |

1997-11-15 |

5 |

57.45 |

1997-11-01 |

| 7 |

3 |

1997-11-25 |

4 |

20.96 |

1997-11-01 |

| 8 |

3 |

1998-05-28 |

1 |

16.99 |

1998-05-01 |

| 9 |

4 |

1997-01-01 |

2 |

29.33 |

1997-01-01 |

| 10 |

4 |

1997-01-18 |

2 |

29.73 |

1997-01-01 |

| 11 |

4 |

1997-08-02 |

1 |

14.96 |

1997-08-01 |

| 12 |

4 |

1997-12-12 |

2 |

26.48 |

1997-12-01 |

| 13 |

5 |

1997-01-01 |

2 |

29.33 |

1997-01-01 |

| 14 |

5 |

1997-01-14 |

1 |

13.97 |

1997-01-01 |

| 15 |

5 |

1997-02-04 |

3 |

38.90 |

1997-02-01 |

| 16 |

5 |

1997-04-11 |

3 |

45.55 |

1997-04-01 |

| 17 |

5 |

1997-05-31 |

3 |

38.71 |

1997-05-01 |

| 18 |

5 |

1997-06-16 |

2 |

26.14 |

1997-06-01 |

| 19 |

5 |

1997-07-22 |

2 |

28.14 |

1997-07-01 |

| 20 |

5 |

1997-09-15 |

3 |

40.47 |

1997-09-01 |

| 21 |

5 |

1997-12-08 |

4 |

46.46 |

1997-12-01 |

| 22 |

5 |

1997-12-12 |

3 |

40.47 |

1997-12-01 |

| 23 |

5 |

1998-01-03 |

3 |

37.47 |

1998-01-01 |

| 24 |

6 |

1997-01-01 |

1 |

20.99 |

1997-01-01 |

| 25 |

7 |

1997-01-01 |

2 |

28.74 |

1997-01-01 |

| 26 |

7 |

1997-10-11 |

7 |

97.43 |

1997-10-01 |

| 27 |

7 |

1998-03-22 |

9 |

138.50 |

1998-03-01 |

| 28 |

8 |

1997-01-01 |

1 |

9.77 |

1997-01-01 |

| 29 |

8 |

1997-02-13 |

1 |

13.97 |

1997-02-01 |

| ... |

... |

... |

... |

... |

... |

| 69629 |

23556 |

1997-09-27 |

3 |

31.47 |

1997-09-01 |

| 69630 |

23556 |

1998-01-03 |

2 |

28.98 |

1998-01-01 |

| 69631 |

23556 |

1998-06-07 |

2 |

28.98 |

1998-06-01 |

| 69632 |

23557 |

1997-03-25 |

1 |

14.37 |

1997-03-01 |

| 69633 |

23558 |

1997-03-25 |

2 |

28.13 |

1997-03-01 |

| 69634 |

23558 |

1997-05-18 |

3 |

45.51 |

1997-05-01 |

| 69635 |

23558 |

1997-06-24 |

2 |

23.74 |

1997-06-01 |

| 69636 |

23558 |

1998-02-25 |

4 |

48.22 |

1998-02-01 |

| 69637 |

23559 |

1997-03-25 |

2 |

23.54 |

1997-03-01 |

| 69638 |

23559 |

1997-05-18 |

3 |

35.31 |

1997-05-01 |

| 69639 |

23559 |

1997-06-27 |

3 |

52.80 |

1997-06-01 |

| 69640 |

23560 |

1997-03-25 |

1 |

18.36 |

1997-03-01 |

| 69641 |

23561 |

1997-03-25 |

2 |

30.92 |

1997-03-01 |

| 69642 |

23561 |

1998-01-28 |

1 |

15.49 |

1998-01-01 |

| 69643 |

23561 |

1998-05-29 |

3 |

37.05 |

1998-05-01 |

| 69644 |

23562 |

1997-03-25 |

2 |

29.33 |

1997-03-01 |

| 69645 |

23563 |

1997-03-25 |

1 |

10.77 |

1997-03-01 |

| 69646 |

23563 |

1997-10-04 |

2 |

47.98 |

1997-10-01 |

| 69647 |

23564 |

1997-03-25 |

1 |

11.77 |

1997-03-01 |

| 69648 |

23564 |

1997-05-21 |

1 |

11.77 |

1997-05-01 |

| 69649 |

23564 |

1997-11-30 |

3 |

46.47 |

1997-11-01 |

| 69650 |

23565 |

1997-03-25 |

1 |

11.77 |

1997-03-01 |

| 69651 |

23566 |

1997-03-25 |

2 |

36.00 |

1997-03-01 |

| 69652 |

23567 |

1997-03-25 |

1 |

20.97 |

1997-03-01 |

| 69653 |

23568 |

1997-03-25 |

1 |

22.97 |

1997-03-01 |

| 69654 |

23568 |

1997-04-05 |

4 |

83.74 |

1997-04-01 |

| 69655 |

23568 |

1997-04-22 |

1 |

14.99 |

1997-04-01 |

| 69656 |

23569 |

1997-03-25 |

2 |

25.74 |

1997-03-01 |

| 69657 |

23570 |

1997-03-25 |

3 |

51.12 |

1997-03-01 |

| 69658 |

23570 |

1997-03-26 |

2 |

42.96 |

1997-03-01 |

69659 rows × 5 columns

1.进行用户消费趋势的分析(按月)

- 每月的消费总金额

- 每月的消费次数

- 每月的产品购买量

- 每月的消费人数

grouped_month = df.groupby('month')

order_month_amount = grouped_month.order_amount.sum()

order_month_amount.head()

month

1997-01-01 299060.17

1997-02-01 379590.03

1997-03-01 393155.27

1997-04-01 142824.49

1997-05-01 107933.30

Name: order_amount, dtype: float64

import matplotlib.pyplot as plt

%matplotlib inline

plt.style.use('ggplot')

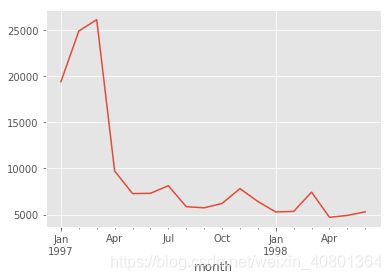

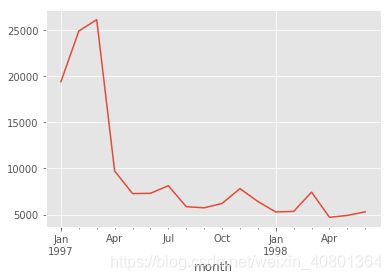

order_month_amount.plot()

由上图可知,消费金额在前三个月达到高峰,后续消费较为稳定,有轻微下降趋势

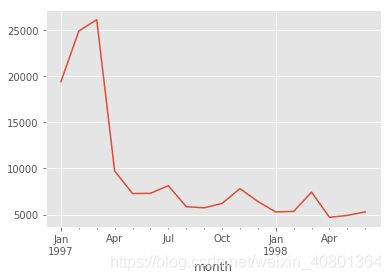

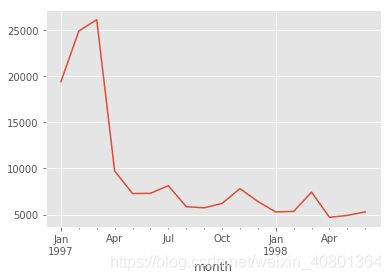

grouped_month.user_id.count().plot()

[外链图片转存失败(img-jCtrxOWZ-1567614745665)(output_16_1.png)]

前三个月消费订单数在10000笔左右,后续月份的平均消费人数则在2500人

grouped_month.order_products.sum().plot()

df.groupby('month').user_id.apply(lambda x :len(x.drop_duplicates())).plot()

df.groupby(['month','user_id']).count().reset_index()

df.groupby('month').user_id.count().plot()

每月的消费人数低于每月的消费次数,但差异不大;

前三个月每月的消费人数在8000-10000之间,后续月份,平均消费人数在2000人不到

df.pivot_table(index = 'month',

values = ['order_products','order_amount','user_id'],

aggfunc = {'order_products':'sum',

'order_amount':'sum',

'user_id':'count'}).head()

|

order_amount |

order_products |

user_id |

| month |

|

|

|

| 1997-01-01 |

299060.17 |

19416 |

8928 |

| 1997-02-01 |

379590.03 |

24921 |

11272 |

| 1997-03-01 |

393155.27 |

26159 |

11598 |

| 1997-04-01 |

142824.49 |

9729 |

3781 |

| 1997-05-01 |

107933.30 |

7275 |

2895 |

问题:

- 每月用户的平均消费金额趋势

- 每月用户平均消费次数的趋势

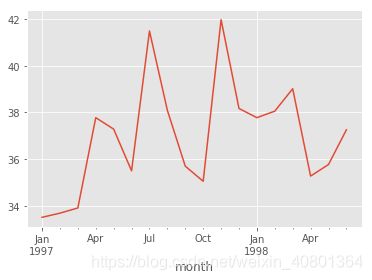

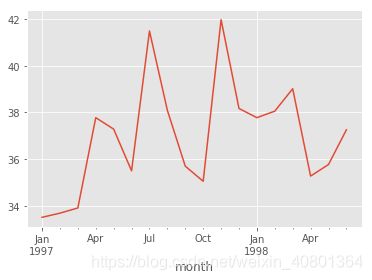

df.groupby('month').order_amount.mean().plot()

2.用户个体消费分析

- 用户消费金额,消费次数的描述统计

- 用户消费金额和消费次数的散点图

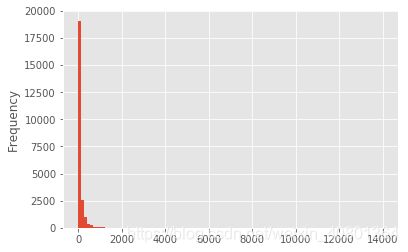

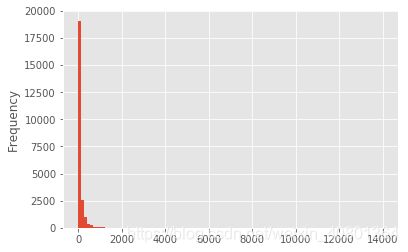

- 用户消费金额的分布图

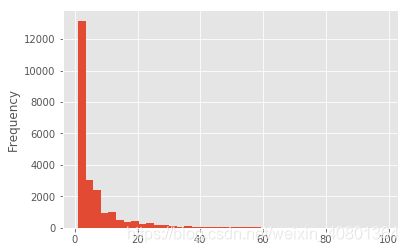

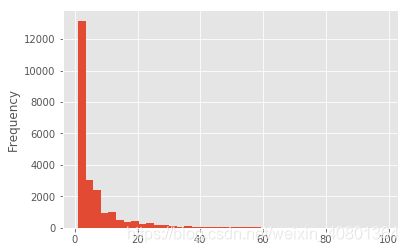

- 用户消费次数的分布图(二八分布)

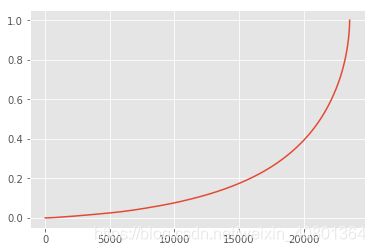

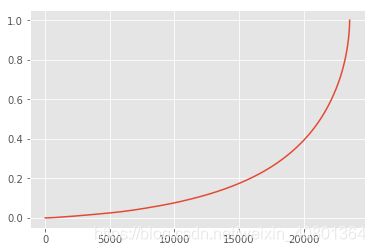

- 用户累计消费金额占比(百分之多少的用户占百分之多少的消费额),头部用户的多寡,实现运营策略,制定方向

grouped_user = df.groupby('user_id')

grouped_user.sum().describe()

|

order_products |

order_amount |

| count |

23570.000000 |

23570.000000 |

| mean |

7.122656 |

106.080426 |

| std |

16.983531 |

240.925195 |

| min |

1.000000 |

0.000000 |

| 25% |

1.000000 |

19.970000 |

| 50% |

3.000000 |

43.395000 |

| 75% |

7.000000 |

106.475000 |

| max |

1033.000000 |

13990.930000 |

- 用户平均购买了7张CD,但是中位值只有3,说明小部分用户购买了大量的CD

- 用户平均消费106元,中位数有43,判断同上,有极值干扰(一个人买了很多)

- 基本上符合一个2-8分布

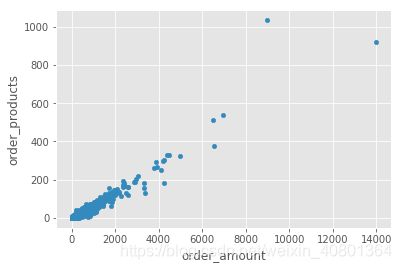

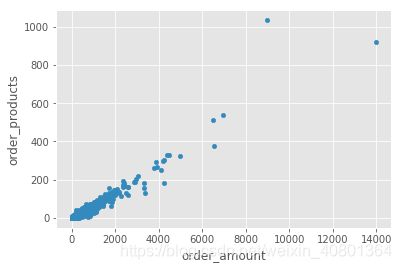

grouped_user.sum().plot.scatter(x = 'order_amount',y = 'order_products')

grouped_user.sum().query('order_amount <4000').plot.scatter(x = 'order_amount',y = 'order_products')

- 散点图对极值比较敏感,在开始时可以进行过滤,query(‘order_amount <4000’)

- 函数时有顺序的,plot.scatter

- CD 数量和客单价有近似正比的关系,可以说明95%的CD的客单价都是差不多的,单一的产品,可能存在极少的珍藏版CD;

- 如果店家里有多种品类,如CD机,音乐唱片等,可能呈现扇形的扩散状态

- 散点图揭示的是一种规律

grouped_user.sum().order_amount.plot.hist(bins = 100)

从直方图中,用户的消费金额,绝大多数呈现集中趋势,小部分异常值干扰判断,可以用**过滤操作**排除异常

grouped_user.sum().query('order_products<100').order_products.plot.hist(bins = 40)

- 使用切比雪夫过滤掉异常值,计算95%的数据分布情况,去掉5个均值以外的结果

user_cumsum = grouped_user.sum().sort_values('order_amount').apply(lambda x:x.cumsum()/x.sum())

user_cumsum

user_cumsum.reset_index().order_amount.plot()

按用户消费金额进行升序排列,有图可知50%的用户仅贡献了15%的消费额度,而排名前5000的用户就贡献了60%的消费额(如果运营这60%的用户就可能是效益更高)

3.用户的消费行为

- 用户第一次消费(收购)–和渠道相关,客单价比较高,用户留存率比较低行业,首购的用户从渠道来,可以拓展运营方式和业务

- 用户最后一次消费–与用户的流失息息相关

- 新老客消费比

- 用户分层

- 用户购买周期(按订单)

- 用户生命周期(按第一次&最后一次消费)

(1) 用户第一次消费

grouped_user.min().order_dt.value_counts().plot()

- 用户第一次购买分布,集中在前三个月

- 其中,在2月11日至2月15日有一次剧烈的波动(渠道或奖励机制发生了变化)

(2) 用户最后一次消费

grouped_user.max().order_dt.value_counts().plot()

- 用户最后一次购买的分布比第一次分布广

- 大部分最后一次购买,集中在前三个月,说明有很多用户购买了一次就不再进行购买(在真实的场景中,可能是逐渐的缓慢累加)

- 随着时间递增,最后一次购买数也在递增,消费呈现流失上升的状况(运营的用户忠诚度下降)

(3)新老客消费比

user_life = grouped_user.order_dt.agg(['min','max'])

user_life.head()

|

min |

max |

| user_id |

|

|

| 1 |

1997-01-01 |

1997-01-01 |

| 2 |

1997-01-12 |

1997-01-12 |

| 3 |

1997-01-02 |

1998-05-28 |

| 4 |

1997-01-01 |

1997-12-12 |

| 5 |

1997-01-01 |

1998-01-03 |

(user_life['min'] == user_life['max']).value_counts()

True 12054

False 11516

dtype: int64

(4)用户分层 -RFM(用户消费额,用户消费次数,用户最近一次消费)-象限法

①RFM模型分析

rfm = df.pivot_table(index = 'user_id',

values = ['order_products','order_amount','order_dt'],

aggfunc = {'order_dt':'max',

'order_amount':'sum',

'order_products':'sum'})

rfm.head()

|

order_amount |

order_dt |

order_products |

| user_id |

|

|

|

| 1 |

11.77 |

1997-01-01 |

1 |

| 2 |

89.00 |

1997-01-12 |

6 |

| 3 |

156.46 |

1998-05-28 |

16 |

| 4 |

100.50 |

1997-12-12 |

7 |

| 5 |

385.61 |

1998-01-03 |

29 |

rfm['R'] = - (rfm.order_dt-rfm.order_dt.max())/np.timedelta64(1,'D')

rfm.rename(columns = {'order_products':'F','order_amount':'M'},inplace = True)

rfm.head()

|

M |

order_dt |

F |

R |

| user_id |

|

|

|

|

| 1 |

11.77 |

1997-01-01 |

1 |

545.0 |

| 2 |

89.00 |

1997-01-12 |

6 |

534.0 |

| 3 |

156.46 |

1998-05-28 |

16 |

33.0 |

| 4 |

100.50 |

1997-12-12 |

7 |

200.0 |

| 5 |

385.61 |

1998-01-03 |

29 |

178.0 |

def rfm_func(x):

level = x.apply(lambda x:'1'if x>= 0 else '0')

label = level.R +level.F + level.M

d = {'111':'重要价值客户',

'011':'重要保持客户',

'101':'重要挽留客户',

'001':'重要发展客户',

'110':'一般价值客户',

'010':'一般保持客户',

'100':'一般挽留客户',

'000':'一般发展客户',

}

result = d[label]

return result

rfm['label'] = rfm[['R','F','M']].apply(lambda x:x-x.mean()).apply(rfm_func,axis =1)

rfm.head()

|

M |

order_dt |

F |

R |

label |

| user_id |

|

|

|

|

|

| 1 |

11.77 |

1997-01-01 |

1 |

545.0 |

一般挽留客户 |

| 2 |

89.00 |

1997-01-12 |

6 |

534.0 |

一般挽留客户 |

| 3 |

156.46 |

1998-05-28 |

16 |

33.0 |

重要保持客户 |

| 4 |

100.50 |

1997-12-12 |

7 |

200.0 |

一般发展客户 |

| 5 |

385.61 |

1998-01-03 |

29 |

178.0 |

重要保持客户 |

rfm.groupby('label').sum()

|

M |

F |

R |

| label |

|

|

|

| 一般价值客户 |

7181.28 |

650 |

36295.0 |

| 一般保持客户 |

19937.45 |

1712 |

29448.0 |

| 一般发展客户 |

196971.23 |

13977 |

591108.0 |

| 一般挽留客户 |

438291.81 |

29346 |

6951815.0 |

| 重要价值客户 |

167080.83 |

11121 |

358363.0 |

| 重要保持客户 |

1592039.62 |

107789 |

517267.0 |

| 重要发展客户 |

45785.01 |

2023 |

56636.0 |

| 重要挽留客户 |

33028.40 |

1263 |

114482.0 |

从RFM分层可知,大部分用户为重要保持用户,这是由于极值的影响,所有RFM的划分标准应该为业务为准

- 尽量用小部分的用户覆盖大部分的额度

- 不要为了数据好看划分等级

rfm.loc[rfm.label == '重要价值客户','color'] = 'k'

rfm.loc[rfm.label == '重要保持客户','color'] = 'r'

rfm.loc[rfm.label == '重要发展客户','color'] = 'gold'

rfm.loc[rfm.label == '重要挽留客户','color'] = 'y'

rfm.loc[rfm.label == '一般保持客户','color'] = 'g'

rfm.loc[rfm.label == '一般价值客户','color'] = 'b'

rfm.loc[rfm.label == '一般发展客户','color'] = 'c'

rfm.loc[rfm.label == '一般挽留客户','color'] = 'm'

rfm.plot.scatter('F','R',c = rfm.color)

rfm.head()

|

M |

order_dt |

F |

R |

label |

color |

| user_id |

|

|

|

|

|

|

| 1 |

11.77 |

1997-01-01 |

1 |

545.0 |

一般挽留客户 |

m |

| 2 |

89.00 |

1997-01-12 |

6 |

534.0 |

一般挽留客户 |

m |

| 3 |

156.46 |

1998-05-28 |

16 |

33.0 |

重要保持客户 |

r |

| 4 |

100.50 |

1997-12-12 |

7 |

200.0 |

一般发展客户 |

c |

| 5 |

385.61 |

1998-01-03 |

29 |

178.0 |

重要保持客户 |

r |

② 用户分层 -用户生命周期相关(新客/老客…)

- 新用户:第一次消费

- 活跃用户:一直持续消费

- 回流:之前没有消费,有一段时间没消费,现在又消费了(时间间隔不定)

- 流失/不活跃:有一短时间没消费,或者上月消费这月不消费

pivoted_counts = df.pivot_table(index = 'user_id',

columns = 'month',

values = 'order_dt',

aggfunc = 'count').fillna(0)

pivoted_counts.head()

| month |

1997-01-01 00:00:00 |

1997-02-01 00:00:00 |

1997-03-01 00:00:00 |

1997-04-01 00:00:00 |

1997-05-01 00:00:00 |

1997-06-01 00:00:00 |

1997-07-01 00:00:00 |

1997-08-01 00:00:00 |

1997-09-01 00:00:00 |

1997-10-01 00:00:00 |

1997-11-01 00:00:00 |

1997-12-01 00:00:00 |

1998-01-01 00:00:00 |

1998-02-01 00:00:00 |

1998-03-01 00:00:00 |

1998-04-01 00:00:00 |

1998-05-01 00:00:00 |

1998-06-01 00:00:00 |

| user_id |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 2 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 3 |

1.0 |

0.0 |

1.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

| 4 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 5 |

2.0 |

1.0 |

0.0 |

1.0 |

1.0 |

1.0 |

1.0 |

0.0 |

1.0 |

0.0 |

0.0 |

2.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

df_purchase = pivoted_counts.applymap(lambda x: 1 if x >0 else 0)

df_purchase.tail()

| month |

1997-01-01 00:00:00 |

1997-02-01 00:00:00 |

1997-03-01 00:00:00 |

1997-04-01 00:00:00 |

1997-05-01 00:00:00 |

1997-06-01 00:00:00 |

1997-07-01 00:00:00 |

1997-08-01 00:00:00 |

1997-09-01 00:00:00 |

1997-10-01 00:00:00 |

1997-11-01 00:00:00 |

1997-12-01 00:00:00 |

1998-01-01 00:00:00 |

1998-02-01 00:00:00 |

1998-03-01 00:00:00 |

1998-04-01 00:00:00 |

1998-05-01 00:00:00 |

1998-06-01 00:00:00 |

| user_id |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 23566 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 23567 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 23568 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 23569 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 23570 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

def active_status(data):

status = []

for i in range(18):

if data[i] == 0:

if len(status) > 0 :

if status[i-1] == 'unreg':

status.append('unreg')

else:

status.append('unactive')

else:

status.append('unreg')

else:

if len(status) == 0:

status.append('new')

else:

if status[i-1] == 'unactive':

status.append('return')

elif status[i-1] == 'unreg':

status.append('new')

else:

status.append('active')

return status

代码逻辑

① 若本月没有消费

- 若之前是未注册,则依旧未未注册

- 若之前有消费,则为流失/补活跃

- 其他情况,为未注册

② 若本月有消费

- 若是第一次消费,则为新用户

- 如果之前有过消费,则上个月为不活跃,则为回流

- 如果上个月为未注册,则为新用户

- 除此之外,为活跃

purchase_stats = df_purchase.apply(active_status,axis=1)

purchase_stats.head(5)

purchase_stats_ct = purchase_stats.replace('unreg',np.NaN).apply(lambda x:pd.value_counts(x))

purchase_stats_ct.fillna(0).T.head()

purchase_stats_ct.fillna(0).T.plot.area()

purchase_stats_ct.fillna(0).T.apply(lambda x:x/x.sum(),axis =1)

由表可知,每月的用户消费状态变化

- 活跃用户,持续消费的用户,对应消费运营的质量

- 回流用户,之前不消费本月才消费,对应的使唤回运营

- 不活跃用户,对应流失

(5)用户购买周期(按订单)

order_diff = grouped_user.apply(lambda x:x.order_dt -x.order_dt.shift())

order_diff

user_id

1 0 NaT

2 1 NaT

2 0 days

3 3 NaT

4 87 days

5 3 days

6 227 days

7 10 days

8 184 days

4 9 NaT

10 17 days

11 196 days

12 132 days

5 13 NaT

14 13 days

15 21 days

16 66 days

17 50 days

18 16 days

19 36 days

20 55 days

21 84 days

22 4 days

23 22 days

6 24 NaT

7 25 NaT

26 283 days

27 162 days

8 28 NaT

29 43 days

...

23556 69629 63 days

69630 98 days

69631 155 days

23557 69632 NaT

23558 69633 NaT

69634 54 days

69635 37 days

69636 246 days

23559 69637 NaT

69638 54 days

69639 40 days

23560 69640 NaT

23561 69641 NaT

69642 309 days

69643 121 days

23562 69644 NaT

23563 69645 NaT

69646 193 days

23564 69647 NaT

69648 57 days

69649 193 days

23565 69650 NaT

23566 69651 NaT

23567 69652 NaT

23568 69653 NaT

69654 11 days

69655 17 days

23569 69656 NaT

23570 69657 NaT

69658 1 days

Name: order_dt, Length: 69659, dtype: timedelta64[ns]

df.order_dt.shift().head()

0 NaT

1 1997-01-01

2 1997-01-12

3 1997-01-12

4 1997-01-02

Name: order_dt, dtype: datetime64[ns]

df.order_dt.head()

0 1997-01-01

1 1997-01-12

2 1997-01-12

3 1997-01-02

4 1997-03-30

Name: order_dt, dtype: datetime64[ns]

order_diff.describe()

count 46089

mean 68 days 23:22:13.567662

std 91 days 00:47:33.924168

min 0 days 00:00:00

25% 10 days 00:00:00

50% 31 days 00:00:00

75% 89 days 00:00:00

max 533 days 00:00:00

Name: order_dt, dtype: object

(order_diff/np.timedelta64(1,'D')).hist(bins =20)

user_life = grouped_user.order_dt.agg(['min','max'])

(user_life['max'] - user_life['min']).describe()

count 23570

mean 134 days 20:55:36.987696

std 180 days 13:46:43.039788

min 0 days 00:00:00

25% 0 days 00:00:00

50% 0 days 00:00:00

75% 294 days 00:00:00

max 544 days 00:00:00

dtype: object

((user_life['max'] - user_life['min'])/np.timedelta64(1,'D')).hist(bins =20)

- 用户的生命周期受只购买一次的用户影响比较厉害(可以排除)

- 用户均消费134天,中位数仅0天

u_1 = ((user_life['max'] - user_life['min']).reset_index()[0]/np.timedelta64(1,'D'))

u_1[u_1>0].hist(bins = 20)

(6)复购率和回购率(核心电商相关指标)

pivoted_counts.head()

| month |

1997-01-01 00:00:00 |

1997-02-01 00:00:00 |

1997-03-01 00:00:00 |

1997-04-01 00:00:00 |

1997-05-01 00:00:00 |

1997-06-01 00:00:00 |

1997-07-01 00:00:00 |

1997-08-01 00:00:00 |

1997-09-01 00:00:00 |

1997-10-01 00:00:00 |

1997-11-01 00:00:00 |

1997-12-01 00:00:00 |

1998-01-01 00:00:00 |

1998-02-01 00:00:00 |

1998-03-01 00:00:00 |

1998-04-01 00:00:00 |

1998-05-01 00:00:00 |

1998-06-01 00:00:00 |

| user_id |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 2 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 3 |

1.0 |

0.0 |

1.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

| 4 |

2.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

0.0 |

0.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

| 5 |

2.0 |

1.0 |

0.0 |

1.0 |

1.0 |

1.0 |

1.0 |

0.0 |

1.0 |

0.0 |

0.0 |

2.0 |

1.0 |

0.0 |

0.0 |

0.0 |

0.0 |

0.0 |

purchase_r = pivoted_counts.applymap(lambda x: 1 if x>1 else np.NaN if x==0 else 0)

purchase_r.head()

| month |

1997-01-01 00:00:00 |

1997-02-01 00:00:00 |

1997-03-01 00:00:00 |

1997-04-01 00:00:00 |

1997-05-01 00:00:00 |

1997-06-01 00:00:00 |

1997-07-01 00:00:00 |

1997-08-01 00:00:00 |

1997-09-01 00:00:00 |

1997-10-01 00:00:00 |

1997-11-01 00:00:00 |

1997-12-01 00:00:00 |

1998-01-01 00:00:00 |

1998-02-01 00:00:00 |

1998-03-01 00:00:00 |

1998-04-01 00:00:00 |

1998-05-01 00:00:00 |

1998-06-01 00:00:00 |

| user_id |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

0.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

| 2 |

1.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

| 3 |

0.0 |

NaN |

0.0 |

0.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

1.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

0.0 |

NaN |

| 4 |

1.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

0.0 |

NaN |

NaN |

NaN |

0.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

NaN |

| 5 |

1.0 |

0.0 |

NaN |

0.0 |

0.0 |

0.0 |

0.0 |

NaN |

0.0 |

NaN |

NaN |

1.0 |

0.0 |

NaN |

NaN |

NaN |

NaN |

NaN |

applymap和apply的差别:

- apply 用在dataframe上,用于对row或者column进行计算;

- applymap 用于dataframe上,是元素级别的操作;

- map (其实是python自带的)用于series上,是元素级别的操作。

链接: https://www.cnblogs.com/cymwill/p/7577369.html

- 这个地方是由于使用if条件语句而使用applymap?

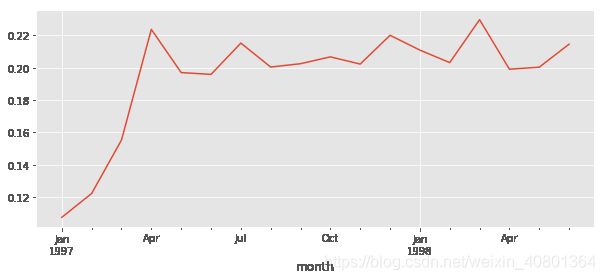

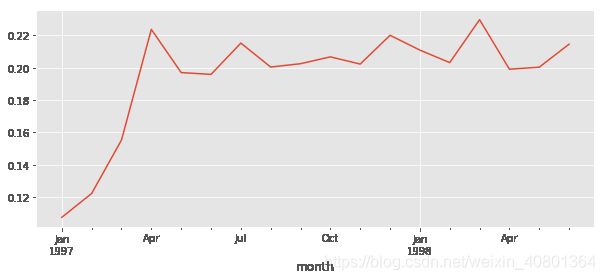

(purchase_r.sum()/purchase_r.count()).plot(figsize =(10,4))

复购率稳定在20%左右,前三个月因为有大量新用户涌入,而这批用户只购买了一次,所有导致复购率降低

df_purchase.head()

| month |

1997-01-01 00:00:00 |

1997-02-01 00:00:00 |

1997-03-01 00:00:00 |

1997-04-01 00:00:00 |

1997-05-01 00:00:00 |

1997-06-01 00:00:00 |

1997-07-01 00:00:00 |

1997-08-01 00:00:00 |

1997-09-01 00:00:00 |

1997-10-01 00:00:00 |

1997-11-01 00:00:00 |

1997-12-01 00:00:00 |

1998-01-01 00:00:00 |

1998-02-01 00:00:00 |

1998-03-01 00:00:00 |

1998-04-01 00:00:00 |

1998-05-01 00:00:00 |

1998-06-01 00:00:00 |

| user_id |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 2 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| 3 |

1 |

0 |

1 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

| 4 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

| 5 |

1 |

1 |

0 |

1 |

1 |

1 |

1 |

0 |

1 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

0 |

0 |

def purchase_back(data):

status = []

for i in range(17):

if data[i] == 1:

if data[i+1] ==1:

status.append(1)

if data[i+1] ==0:

status.append(0)

else:

status.append(np.NaN)

status.append(np.NaN)

return status

import pandas as pd

import numpy as np

purchase_b = df_purchase.apply(purchase_back,axis =1)

purchase_b.head(5)

user_id

1 [0, nan, nan, nan, nan, nan, nan, nan, nan, na...

2 [0, nan, nan, nan, nan, nan, nan, nan, nan, na...

3 [0, nan, 1, 0, nan, nan, nan, nan, nan, nan, 0...

4 [0, nan, nan, nan, nan, nan, nan, 0, nan, nan,...

5 [1, 0, nan, 1, 1, 1, 0, nan, 0, nan, nan, 1, 0...

dtype: object

(purchase_b.sum()/purchase_b.count()).plot(figsize =(10,4))