Android FFmpeg in Practice (Part 1): Decoding MP4 Video to YUV with CMake (Demo Source Included)

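This post walks through an example of using FFmpeg on Android: decoding an MP4 video into a raw YUV file. If you have not yet set up a JNI development environment with CMake or integrated FFmpeg into Android Studio, please first read "Integrating a JNI Development Environment with CMake on Android" and "Integrating the FFmpeg Audio/Video Framework into Android Studio via JNI (CMake)". I will keep posting about Android FFmpeg, OpenGL and OpenCV on this blog, so if you are interested, feel free to follow me on CSDN.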

Now let's implement the video decoding feature.

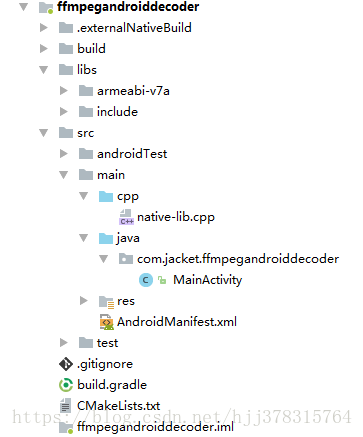

The project structure is as follows:

The Java code lives in MainActivity and the C++ code in native-lib.cpp. native-lib.cpp calls functions from the FFmpeg libraries to decode the video.
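MainActivity's `native` methods are bound to the C++ functions in native-lib.cpp by name. Under JNI's short-name convention the exported symbol is built as follows:

1. Start with `Java_`.
2. Append the fully qualified class name, with `.` replaced by `_`.
3. Append another `_`, then the method name.

A literal `_` inside any part is escaped as `_1`. The sketch below uses a hypothetical helper, `jni_symbol_name`, to derive those names. It only covers ASCII names and non-overloaded methods.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: escapes one part of a JNI short name.
// Package separators ('.' or '/') become '_', and a literal '_' becomes "_1".
// Non-ASCII characters (which JNI writes as "_0xxxx") are not handled here.
std::string jni_escape(const std::string &part) {
    std::string out;
    for (char c : part) {
        if (c == '.' || c == '/') out += '_';
        else if (c == '_') out += "_1";
        else out += c;
    }
    return out;
}

// Builds the symbol the JVM looks up for a non-overloaded native method:
// "Java_" + escaped fully qualified class name + "_" + escaped method name.
std::string jni_symbol_name(const std::string &qualified_class, const std::string &method) {
    assert(!qualified_class.empty() && !method.empty());
    return "Java_" + jni_escape(qualified_class) + "_" + jni_escape(method);
}
```

For `decode` in `com.jacket.ffmpegandroiddecoder.MainActivity` this yields `Java_com_jacket_ffmpegandroiddecoder_MainActivity_decode`. That is why a mismatch matters: if you rename the package but not the C++ function, `System.loadLibrary` still succeeds, and the first call to the native method throws `UnsatisfiedLinkError`.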

The code of native-lib.cpp is as follows:

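```cpp
#include <jni.h>
#include <cstdarg>
#include <cstdio>
#include <cstring>
#include <ctime>
#include <android/log.h>

#define LOG_TAG "native-lib"
#define LOGE(...) __android_log_print(ANDROID_LOG_ERROR, LOG_TAG, __VA_ARGS__)
#define LOGI(...) __android_log_print(ANDROID_LOG_INFO, LOG_TAG, __VA_ARGS__)

extern "C" {
// FFmpeg is a C library, so its headers need C linkage
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"

// Appends formatted text to buf without overflowing it. Unlike
// sprintf(buf, "%s...", buf, ...), source and destination never overlap.
static void append_info(char *buf, size_t cap, const char *fmt, ...) {
    size_t len = strlen(buf);
    if (len + 1 >= cap) return;
    va_list args;
    va_start(args, fmt);
    vsnprintf(buf + len, cap - len, fmt, args);
    va_end(args);
}

// Converts one decoded frame to YUV420P, appends it to fp and logs its picture type
static void write_yuv_frame(SwsContext *sws, AVCodecContext *ctx, AVFrame *src,
                            AVFrame *dst, FILE *fp, int frame_cnt) {
    sws_scale(sws, (const uint8_t *const *) src->data, src->linesize, 0, ctx->height,
              dst->data, dst->linesize);
    // dst was set up with alignment 1, so each plane is tightly packed
    int y_size = ctx->width * ctx->height;
    int uv_size = ((ctx->width + 1) / 2) * ((ctx->height + 1) / 2);
    fwrite(dst->data[0], 1, y_size, fp);   // Y
    fwrite(dst->data[1], 1, uv_size, fp);  // U
    fwrite(dst->data[2], 1, uv_size, fp);  // V

    const char *pictype_str;
    switch (src->pict_type) {
        case AV_PICTURE_TYPE_I: pictype_str = "I"; break;
        case AV_PICTURE_TYPE_P: pictype_str = "P"; break;
        case AV_PICTURE_TYPE_B: pictype_str = "B"; break;
        default: pictype_str = "Other"; break;
    }
    LOGI("Frame Index: %5d. Type:%s", frame_cnt, pictype_str);
}

JNIEXPORT jint JNICALL
Java_com_jacket_ffmpegandroiddecoder_MainActivity_decode(
        JNIEnv *env, jobject /* this */, jstring input_jstr, jstring output_jstr) {
    AVFormatContext *pFormatCtx = NULL;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame, *pFrameYUV;
    AVPacket *packet;
    unsigned char *out_buffer;
    struct SwsContext *img_convert_ctx;
    FILE *fp_yuv;
    int videoindex = -1, ret, got_picture, frame_cnt;
    clock_t time_start, time_finish;
    double time_duration;
    char input_str[500] = {0}, output_str[500] = {0}, info[1000] = {0};

    // Copy the Java strings into C buffers, then release the JNI copies
    const char *in_utf = env->GetStringUTFChars(input_jstr, NULL);
    const char *out_utf = env->GetStringUTFChars(output_jstr, NULL);
    snprintf(input_str, sizeof(input_str), "%s", in_utf);
    snprintf(output_str, sizeof(output_str), "%s", out_utf);
    env->ReleaseStringUTFChars(input_jstr, in_utf);
    env->ReleaseStringUTFChars(output_jstr, out_utf);

    av_register_all();  // needed on FFmpeg 3.x (deprecated since FFmpeg 4.0)
    if (avformat_open_input(&pFormatCtx, input_str, NULL, NULL) != 0) {  // open the file
        LOGE("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {  // probe the streams
        LOGE("Couldn't find stream information.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }
    // Find the first video stream
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = (int) i;
            break;
        }
    if (videoindex == -1) {
        LOGE("Couldn't find a video stream.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }
    // FFmpeg 3.x style: use the codec context embedded in the stream
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);  // find the decoder
    if (pCodec == NULL) {
        LOGE("Couldn't find Codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {  // open the decoder
        LOGE("Couldn't open codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    // Back pFrameYUV with one YUV420P buffer, alignment 1 (no row padding)
    out_buffer = (unsigned char *) av_malloc(av_image_get_buffer_size(
            AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer,
                         AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);
    packet = av_packet_alloc();
    // Converts from the decoder's pixel format to YUV420P at the same size
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P,
                                     SWS_BICUBIC, NULL, NULL, NULL);

    append_info(info, sizeof(info), "[Input ]%s\n", input_str);
    append_info(info, sizeof(info), "[Output ]%s\n", output_str);
    append_info(info, sizeof(info), "[Format ]%s\n", pFormatCtx->iformat->name);
    append_info(info, sizeof(info), "[Codec ]%s\n", pCodecCtx->codec->name);
    append_info(info, sizeof(info), "[Resolution]%dx%d\n", pCodecCtx->width, pCodecCtx->height);

    fp_yuv = fopen(output_str, "wb");
    if (fp_yuv == NULL) {
        LOGE("Cannot open output file.\n");
        return -1;
    }

    frame_cnt = 0;
    time_start = clock();
    while (av_read_frame(pFormatCtx, packet) >= 0) {  // read one compressed packet
        if (packet->stream_index == videoindex) {
            // decode one compressed packet
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("Decode Error.\n");
                return -1;
            }
            if (got_picture) {
                write_yuv_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
                frame_cnt++;
            }
        }
        av_packet_unref(packet);  // release the packet's data before reading the next one
    }

    // Flush the decoder. When the av_read_frame() loop ends, the decoder may
    // still hold a few frames. Calling avcodec_decode_video2() with an empty
    // packet (data == NULL, size == 0) returns them one by one without
    // feeding any new data to the decoder.
    packet->data = NULL;
    packet->size = 0;
    while (1) {
        ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
        if (ret < 0 || !got_picture)
            break;
        write_yuv_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
        frame_cnt++;
    }

    time_finish = clock();
    // clock() counts processor time in ticks; convert to milliseconds
    time_duration = (double) (time_finish - time_start) * 1000.0 / CLOCKS_PER_SEC;
    append_info(info, sizeof(info), "[Time ]%fms\n", time_duration);
    append_info(info, sizeof(info), "[Count ]%d\n", frame_cnt);
    LOGI("%s", info);

    sws_freeContext(img_convert_ctx);
    fclose(fp_yuv);
    av_packet_free(&packet);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    return 0;
}

JNIEXPORT jstring JNICALL
Java_com_jacket_ffmpegandroiddecoder_MainActivity_avcodecinfo(JNIEnv *env, jobject /* this */) {
    char info[40000] = {0};
    av_register_all();
    // Walk the list of all codecs compiled into this FFmpeg build
    AVCodec *c_temp = av_codec_next(NULL);
    while (c_temp != NULL) {
        append_info(info, sizeof(info), "%s", av_codec_is_decoder(c_temp) ? "decode:" : "encode:");
        switch (c_temp->type) {
            case AVMEDIA_TYPE_VIDEO:
                append_info(info, sizeof(info), "(video):");
                break;
            case AVMEDIA_TYPE_AUDIO:
                append_info(info, sizeof(info), "(audio):");
                break;
            default:
                append_info(info, sizeof(info), "(other):");
                break;
        }
        append_info(info, sizeof(info), "[%10s]\n", c_temp->name);
        c_temp = av_codec_next(c_temp);
    }
    return env->NewStringUTF(info);
}

}  // extern "C"
```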
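The flush loop at the end of decode() is easy to leave out, so here is a toy model of why it matters. It is not FFmpeg code. `ToyDecoder` is a hypothetical stand-in for a decoder that holds back up to `delay` frames, the way real H.264 decoders buffer frames to reorder B-frames. It releases those frames only when more input arrives or when it receives an empty "flush" packet.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Toy model, NOT FFmpeg: a hypothetical decoder that keeps up to `delay`
// frames buffered and only releases the oldest one once more than `delay`
// are waiting. A null packet means "flush": release buffered frames one by one.
class ToyDecoder {
public:
    explicit ToyDecoder(std::size_t delay) : delay_(delay) {}

    // Mirrors avcodec_decode_video2()'s got_picture flag: returns true and
    // sets `frame` only when a frame is output for this call.
    bool decode(const int *packet, int &frame) {
        if (packet != nullptr) pending_.push_back(*packet);
        const bool flushing = (packet == nullptr);
        if (pending_.empty() || (!flushing && pending_.size() <= delay_))
            return false;
        frame = pending_.front();
        pending_.pop_front();
        return true;
    }

    std::size_t buffered() const { return pending_.size(); }

private:
    std::size_t delay_;
    std::deque<int> pending_;
};

// Same two phases as decode() in native-lib.cpp: a read/decode loop,
// then (optionally) a flush loop that drains whatever is still buffered.
std::vector<int> decode_all(const std::vector<int> &packets, std::size_t delay, bool flush) {
    ToyDecoder dec(delay);
    std::vector<int> frames;
    int frame = 0;
    for (int p : packets)
        if (dec.decode(&p, frame)) frames.push_back(frame);
    if (flush) {
        while (dec.decode(nullptr, frame)) frames.push_back(frame);
        assert(dec.buffered() == 0);  // draining leaves nothing behind
    }
    return frames;
}
```

With five packets and `delay = 2`, `decode_all(packets, 2, false)` returns only three frames. Passing `flush = true` recovers the last two, which is the same gap the `while (1)` flush loop closes in native-lib.cpp.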

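native-lib.cpp includes the FFmpeg headers and calls FFmpeg's C functions to process the video in four steps:

1. Demux the container into compressed packets.
2. Decode each video packet into a raw frame.
3. Convert the frame to YUV420P.
4. Write the result to a YUV file at the given output path.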
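The output is a headerless YUV420P file. Each frame is stored as the full-resolution Y plane, followed by the U and V planes, each subsampled by 2 horizontally and vertically.

The sketch below uses hypothetical names, `Yuv420pLayout` and `pack_yuv420p`. It computes the plane sizes and copies AVFrame-style planes (`data[3]`, `linesize[3]`) into that packed layout row by row. The row-by-row copy matters whenever `linesize` is larger than the visible width, which is often the case for frames coming straight out of a decoder. A single `fwrite` of `width*height` bytes is only correct for a buffer set up with alignment 1, as pFrameYUV is here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Plane sizes of one YUV420P frame. Chroma is subsampled by 2 in both
// directions, rounded up so odd widths/heights are still fully covered.
struct Yuv420pLayout {
    int width, height;
    int chroma_width() const { return (width + 1) / 2; }
    int chroma_height() const { return (height + 1) / 2; }
    std::size_t y_size() const { return (std::size_t) width * height; }
    std::size_t chroma_size() const { return (std::size_t) chroma_width() * chroma_height(); }
    std::size_t frame_size() const { return y_size() + 2 * chroma_size(); }
};

// Copies `rows` rows of `row_bytes` bytes from a plane whose rows start
// `linesize` bytes apart, dropping any per-row padding.
void append_plane(std::vector<uint8_t> &out, const uint8_t *plane,
                  int linesize, int row_bytes, int rows) {
    assert(linesize >= row_bytes);
    for (int r = 0; r < rows; r++) {
        const uint8_t *row = plane + (std::size_t) r * linesize;
        out.insert(out.end(), row, row + row_bytes);
    }
}

// Packs one planar frame into the tightly packed Y, U, V order of a .yuv file.
std::vector<uint8_t> pack_yuv420p(const Yuv420pLayout &l,
                                  const uint8_t *const data[3], const int linesize[3]) {
    std::vector<uint8_t> out;
    out.reserve(l.frame_size());
    append_plane(out, data[0], linesize[0], l.width, l.height);
    append_plane(out, data[1], linesize[1], l.chroma_width(), l.chroma_height());
    append_plane(out, data[2], linesize[2], l.chroma_width(), l.chroma_height());
    return out;
}
```

For example, a 1280x720 frame packs into 921,600 bytes of Y plus 2 x 230,400 bytes of chroma, i.e. 1,382,400 bytes.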

The code of MainActivity is as follows:

```java
package com.jacket.ffmpegandroiddecoder;

import android.os.Bundle;
import android.os.Environment;
import android.support.v7.app.AppCompatActivity;
import android.util.Log;
import android.view.View;
import android.widget.Button;
import android.widget.EditText;
import android.widget.TextView;

public class MainActivity extends AppCompatActivity implements View.OnClickListener {

    // Used to load the 'native-lib' library on application startup.
    static {
        System.loadLibrary("native-lib");
    }

    private Button codec;
    private TextView tv_info;
    private EditText editText1, editText2;
    private Button btnDecoder;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        init();
    }

    private void init() {
        codec = (Button) findViewById(R.id.btn_codec);
        tv_info = (TextView) findViewById(R.id.tv_info);
        editText1 = (EditText) findViewById(R.id.editText1);
        editText2 = (EditText) findViewById(R.id.editText2);
        btnDecoder = (Button) findViewById(R.id.button);
        codec.setOnClickListener(this);
        btnDecoder.setOnClickListener(this);
    }

    @Override
    public void onClick(View view) {
        switch (view.getId()) {
            case R.id.btn_codec:
                // List the codecs compiled into the FFmpeg build
                tv_info.setText(avcodecinfo());
                break;
            case R.id.button:
                startDecode();
                break;
            default:
                break;
        }
    }

    private void startDecode() {
        // Input and output files live in <external storage>/ych/videotemp,
        // e.g. /sdcard/ych/videotemp/2018-03-23_1521792056455_camera.mp4.
        // Accessing them needs the storage permissions (granted at runtime on Android 6.0+).
        String folderurl = Environment.getExternalStorageDirectory().getPath() + "/ych/videotemp";
        final String inputurl = folderurl + "/" + editText1.getText().toString();
        final String outputurl = folderurl + "/" + editText2.getText().toString();
        Log.d("MainActivity", "inputurl: " + inputurl);
        Log.d("MainActivity", "outputurl: " + outputurl);
        // Decoding a whole video takes a while; run it off the UI thread to avoid an ANR
        new Thread(new Runnable() {
            @Override
            public void run() {
                final int ret = decode(inputurl, outputurl);
                runOnUiThread(new Runnable() {
                    @Override
                    public void run() {
                        tv_info.setText(ret == 0 ? "Decode finished: " + outputurl
                                                 : "Decode failed, see logcat");
                    }
                });
            }
        }).start();
    }

    public native int decode(String inputurl, String outputurl);

    public native String avcodecinfo();
}
```

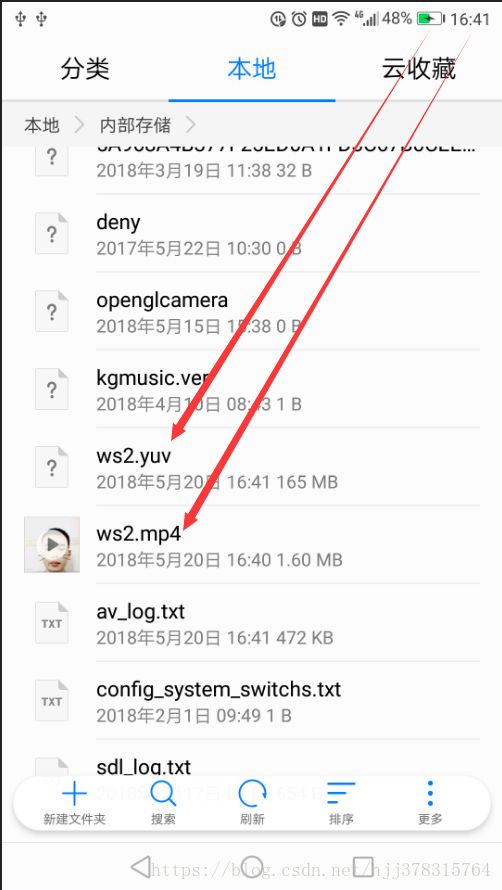

Copy the video ws2.mp4 to the phone's SD card, then build and run the app to decode it into the corresponding YUV file.
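Because the YUV file has no header, its size must be an exact multiple of one frame: `width*height + 2*ceil(width/2)*ceil(height/2)` bytes. A quick sanity check is to divide the file size by that frame size and compare the result with the number of `Frame Index` lines that decode() logged. The helper below, `yuv420p_frame_count`, is a hypothetical name for that check.

```cpp
#include <cassert>

// Number of complete YUV420P frames in a raw .yuv file of `file_size` bytes,
// or -1 if the size is not a whole number of frames (e.g. the wrong
// resolution was assumed, or the write was cut short).
long long yuv420p_frame_count(long long file_size, int width, int height) {
    assert(width > 0 && height > 0);
    long long chroma = (long long) ((width + 1) / 2) * ((height + 1) / 2);
    long long frame = (long long) width * height + 2 * chroma;
    return file_size % frame == 0 ? file_size / frame : -1;
}
```

For a 1280x720 video, one frame is 1,382,400 bytes, so a 13,824,000-byte file holds exactly 10 frames. Any player that opens raw YUV needs the same resolution and pixel format (yuv420p) to display the file correctly.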

The result is as follows:

The source code for this post is on GitHub. If you find it useful, please give it a star. Thanks!