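Setting the cookie: there are two ways to set a cookie value manually. One is to add the cookie to the request headers; the other is to set it through a cookiejar. This article takes the first approach and adds the cookie to the request headers.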
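For comparison, the cookiejar route needs the cookie as name/value pairs rather than one raw string. Below is a minimal sketch, assuming the cookie was copied from the browser as a single `name=value; name2=value2` string. The helper name `cookie_header_to_dict` and the sample values are made up for illustration.

```python
def cookie_header_to_dict(raw_cookie):
    """Split a raw Cookie header string into a {name: value} dict."""
    cookies = {}
    for part in raw_cookie.split(';'):
        name, sep, value = part.strip().partition('=')
        # Skip empty fragments and fragments that contain no '='
        if sep and name:
            cookies[name] = value
    return cookies


# Made-up sample value, not a real Zhihu cookie
sample = 'session_id=abc123; theme=dark; token=a=b'
cookie_dict = cookie_header_to_dict(sample)
print(cookie_dict)
```

With requests, a dict like this can be loaded into a session's jar with `sess.cookies.update(cookie_dict)`, or turned into a standalone jar with `requests.utils.cookiejar_from_dict(cookie_dict)`.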

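1-Build the request headers

cookie = 'paste your Cookie header value here'  # placeholder: copy the Cookie string from a logged-in browser session
header = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
    'cookie': cookie
}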

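2-Create a session object and store the cookie in it, so the cookie does not have to be written again for later requests

sess = requests.Session()
sess.headers.update(header)  # merged into the session defaults and sent with every request made through sess
url = 'https://www.zhihu.com/hot'
r = sess.get(url)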
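To see that headers stored on a session really are attached to each request, the request can be prepared without being sent. This is a small sketch that needs no network access; the `demo-agent/1.0` value is a made-up placeholder, not the user agent used in this article.

```python
import requests

sess = requests.Session()
# update() merges into the defaults instead of replacing them
sess.headers.update({'user-agent': 'demo-agent/1.0'})

# Build the request and merge in the session settings, but do not send it
req = requests.Request('GET', 'https://www.zhihu.com/hot')
prepared = sess.prepare_request(req)

print(prepared.headers['User-Agent'])
print('Accept-Encoding' in prepared.headers)
```

Header lookup is case-insensitive, so `'user-agent'` and `'User-Agent'` refer to the same entry.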

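3-Next, extract the fields we need from the Zhihu hot list: the title, the heat value, the URL, and the image

selector = etree.HTML(r.text)
eles = selector.cssselect('div.HotList-list>section')  # cssselect() needs the cssselect package installed
for index, ele in enumerate(eles):
    title = ele.xpath('./div[@class="HotItem-content"]/a/h2/text()')[0]
    url = ele.xpath('./div[@class="HotItem-content"]/a/@href')[0]
    hot = ele.xpath('./div[@class="HotItem-content"]/div/text()')[0]
    crawled_time = datetime.now()
    jpgUrl = ele.xpath('./a[@class="HotItem-img"]/img')
    if jpgUrl:
        img = jpgUrl[0].get('src')
        res = requests.get(img)
        # decode so the file stores plain base64 text rather than a b'...' literal
        imgbase64code = base64.b64encode(res.content).decode('ascii')
    else:
        # no image for this entry: reset both values so nothing carries over from the previous entry
        img = ''
        imgbase64code = ''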
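Two details in the extraction step are easy to trip over: indexing `[0]` on an empty XPath result raises `IndexError`, and calling `str()` on the bytes returned by `base64.b64encode` yields a `b'...'` literal rather than plain text. Here is a minimal sketch of guarding against both, using only the standard library. `first_or_default` and `image_to_base64_text` are hypothetical helper names, and the byte string stands in for `res.content`.

```python
import base64


def first_or_default(results, default=''):
    """Return the first item of a list-like XPath result, or default if it is empty."""
    return results[0] if results else default


def image_to_base64_text(image_bytes):
    """Encode raw image bytes as a plain ASCII base64 string."""
    return base64.b64encode(image_bytes).decode('ascii')


# Stand-in values: a list like the one ele.xpath(...) returns, and fake image bytes
title = first_or_default(['Some title'])
missing = first_or_default([], default='N/A')
encoded = image_to_base64_text(b'\x89PNG fake bytes')

print(title, missing, encoded)

# Decoding the text gives the original bytes back
assert base64.b64decode(encoded) == b'\x89PNG fake bytes'
```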

4-Save the extracted fields to a local folder. If the folder does not exist yet, create it with os.mkdir (run this once, before the loop)

if not os.path.exists(r'd:/知乎新闻'):
    os.mkdir(r'd:/知乎新闻')
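An alternative to the exists-then-mkdir check is `os.makedirs` with `exist_ok=True`, which also creates missing parent folders and does nothing if the folder is already there. The sketch below runs against a temporary directory instead of `d:/知乎新闻` so that it can be tried on any system; the folder name `zhihu_news` is an assumption for the demo.

```python
import os
import tempfile

# Work inside a throwaway directory rather than a fixed drive path
base_dir = tempfile.mkdtemp()
out_dir = os.path.join(base_dir, 'zhihu_news')

os.makedirs(out_dir, exist_ok=True)  # creates the folder
os.makedirs(out_dir, exist_ok=True)  # second call is a no-op and raises nothing

print(os.path.isdir(out_dir))
```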

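5-Write the fields scraped from Zhihu to a file (this runs inside the for loop from step 3, once per entry)

with open(r'd:/知乎新闻/%d.txt' % (index + 1), 'w', encoding='utf-8') as f:
    f.write('知乎标题title:' + title + '\n')
    f.write('知乎热搜URL:' + url + '\n')
    f.write('知乎热搜热度HOT:' + hot + '\n')
    f.write('知乎热搜爬取时间:' + str(crawled_time) + '\n')
    f.write('知乎图片base64编码:' + str(imgbase64code) + '\n')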
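On Windows, `open()` without an explicit `encoding` falls back to the system's locale encoding, which may not be able to represent every character in a title. The following sketch writes one record as UTF-8 and reads it back. The function name `save_record`, the field labels, and the sample record are illustrative, not scraped data.

```python
import os
import tempfile


def save_record(folder, index, record):
    """Write one hot-list entry to <folder>/<index>.txt as UTF-8 and return the path."""
    path = os.path.join(folder, '%d.txt' % index)
    with open(path, 'w', encoding='utf-8') as f:
        for label, value in record.items():
            f.write('%s: %s\n' % (label, value))
    return path


# Illustrative record; real values would come from the loop in step 3
record = {'title': '示例标题', 'url': 'https://example.com/question/1', 'hot': '100'}
folder = tempfile.mkdtemp()
path = save_record(folder, 1, record)

# Read the file back with the same encoding
with open(path, encoding='utf-8') as f:
    print(f.read())
```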

6-The complete code is as follows

import requests
from lxml import etree
import cssselect  # not called directly, but lxml's .cssselect() requires this package
import base64
from datetime import datetime
import os

if not os.path.exists(r'd:/知乎新闻'):
    os.mkdir(r'd:/知乎新闻')

cookie = 'paste your Cookie header value here'  # placeholder: copy the Cookie string from a logged-in browser session
header = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
    'cookie': cookie
}

sess = requests.Session()
sess.headers.update(header)  # merged into the session defaults and sent with every request made through sess
url = 'https://www.zhihu.com/hot'
r = sess.get(url)

selector = etree.HTML(r.text)
eles = selector.cssselect('div.HotList-list>section')
for index, ele in enumerate(eles):
    title = ele.xpath('./div[@class="HotItem-content"]/a/h2/text()')[0]
    url = ele.xpath('./div[@class="HotItem-content"]/a/@href')[0]
    hot = ele.xpath('./div[@class="HotItem-content"]/div/text()')[0]
    crawled_time = datetime.now()
    jpgUrl = ele.xpath('./a[@class="HotItem-img"]/img')
    if jpgUrl:
        img = jpgUrl[0].get('src')
        res = requests.get(img)
        # decode so the file stores plain base64 text rather than a b'...' literal
        imgbase64code = base64.b64encode(res.content).decode('ascii')
    else:
        # no image for this entry: reset both values so nothing carries over from the previous entry
        img = ''
        imgbase64code = ''

    # one txt file per entry, numbered from 1
    with open(r'd:/知乎新闻/%d.txt' % (index + 1), 'w', encoding='utf-8') as f:
        f.write('知乎标题title:' + title + '\n')
        f.write('知乎热搜URL:' + url + '\n')
        f.write('知乎热搜热度HOT:' + hot + '\n')
        f.write('知乎热搜爬取时间:' + str(crawled_time) + '\n')
        f.write('知乎图片base64编码:' + str(imgbase64code) + '\n')

print("爬取知乎新闻热搜全部完成")

7-Full demo of the result

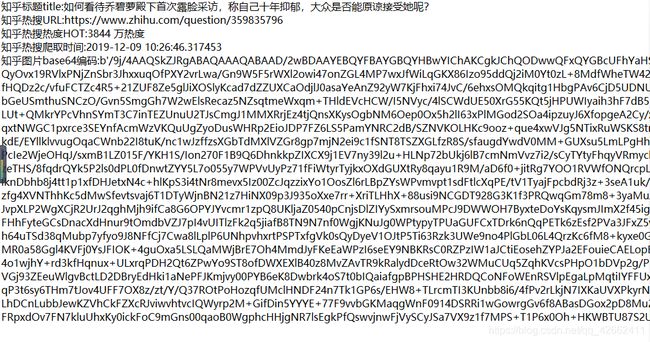

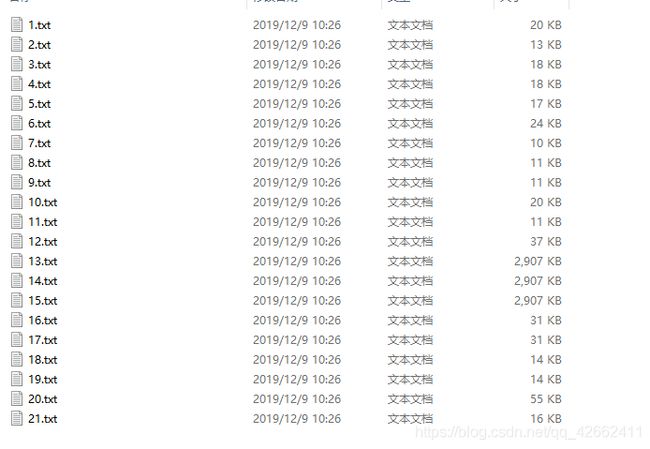

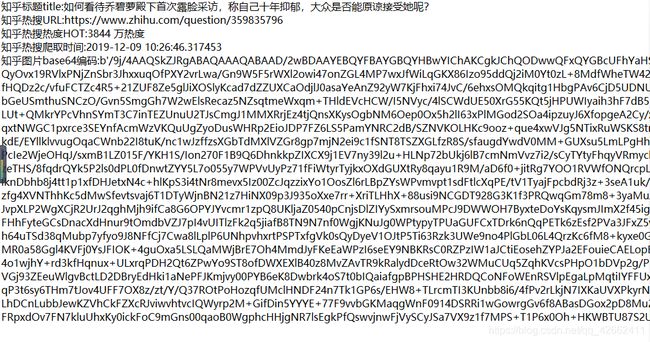

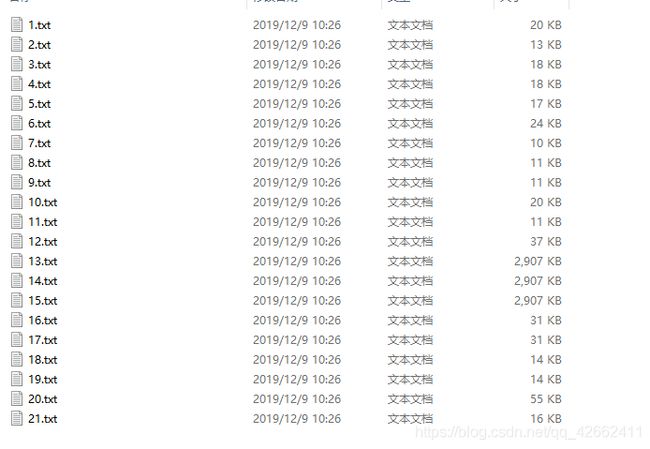

The 知乎新闻 folder will contain 50 txt files. Each one holds the fields written in step 5, as shown in the screenshots.