Image Text Segmentation

Pixel-Based Recognition of Text in Images

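Mature OCR technology for recognizing text in images already exists, but it is still worth learning some of the basics of how it is implemented.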

OCR

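OCR (optical character recognition) is the process in which an electronic device (for example a scanner or a digital camera) examines characters printed on paper and then uses character recognition methods to translate their shapes into computer text. In other words, a text document is scanned, the resulting image file is analyzed and processed, and the text and layout information are extracted. How to correct errors, or use auxiliary information to improve recognition accuracy, is the most important topic in OCR. Other practical concerns include user-friendliness, product stability, ease of use, and feasibility.

OCR is now used for text recognition in many fields. So how can a photographed image be segmented to obtain the individual characters in it? Below, Python is used to segment such an image: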

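Required packages:

import os
import cv2
import numpy as np

The os module is Python's module for operating-system level programming; it can work with files and directories.

cv2 is the Python module of the OpenCV library; it provides a wide range of image-processing functions and routines.

NumPy is an open-source numerical computing extension for Python. It can be used to store and process large matrices.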

Reading the image:

image_color = cv2.imread("origin.png")

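Binarization

# Parameters of adaptiveThreshold:
# 1. The source image (8-bit grayscale)
# 2. The maximum value, assigned to the pixels that satisfy the condition
# 3. The adaptive method; with cv2.ADAPTIVE_THRESH_GAUSSIAN_C the threshold is a weighted sum of the neighborhood pixels, with a Gaussian window as the weights
# 4. The threshold type: only cv2.THRESH_BINARY or cv2.THRESH_BINARY_INV
# 5. Block size: the size of the (square) neighborhood
# 6. The constant C: the threshold is the mean or weighted mean minus C (with C = 0 the threshold is simply the neighborhood mean or weighted mean)
image = cv2.cvtColor(image_color, cv2.COLOR_BGR2GRAY)
adaptive_threshold = cv2.adaptiveThreshold(image, 255,
                                           cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                           cv2.THRESH_BINARY_INV, 11, 2)

After binarization, the gray values of the image pixels are easier to work with.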
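To make the roles of the block size and the constant C more concrete, here is a minimal NumPy-only sketch of inverse adaptive thresholding. It is an illustration under stated assumptions, not OpenCV's implementation: it uses the plain neighborhood mean rather than the Gaussian-weighted one, it pads the border by repeating edge pixels, and the function name `adaptive_mean_threshold_inv` is hypothetical.

```python
import numpy as np


def adaptive_mean_threshold_inv(gray, max_value=255, block_size=3, c=2):
    """Inverse binary adaptive threshold based on the plain neighborhood mean."""
    if block_size % 2 != 1 or block_size < 3:
        raise ValueError("block_size must be an odd number >= 3")
    pad = block_size // 2
    # Assumption: border pixels are handled by repeating the edge values
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    out = np.zeros(gray.shape, dtype=np.uint8)
    for y in range(gray.shape[0]):
        for x in range(gray.shape[1]):
            window = padded[y:y + block_size, x:x + block_size]
            # Local threshold: neighborhood mean minus the constant C
            threshold = window.mean() - c
            # Inverse binary: pixels at or below the threshold become max_value
            if gray[y, x] <= threshold:
                out[y, x] = max_value
    return out


# A white 5x5 "page" with a single dark pixel in the middle
page = np.full((5, 5), 255, dtype=np.uint8)
page[2, 2] = 0
binary = adaptive_mean_threshold_inv(page)
```

In this toy image only the dark pixel ends up as foreground (255) in `binary`; the white pixels around it stay 0 because they lie above their local threshold. This is why the inverse mode is convenient here: ink becomes the non-zero value that the later sums count.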

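Determine the bounding boxes from the pixel sums in the horizontal and vertical directions together with the spacing thresholds: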

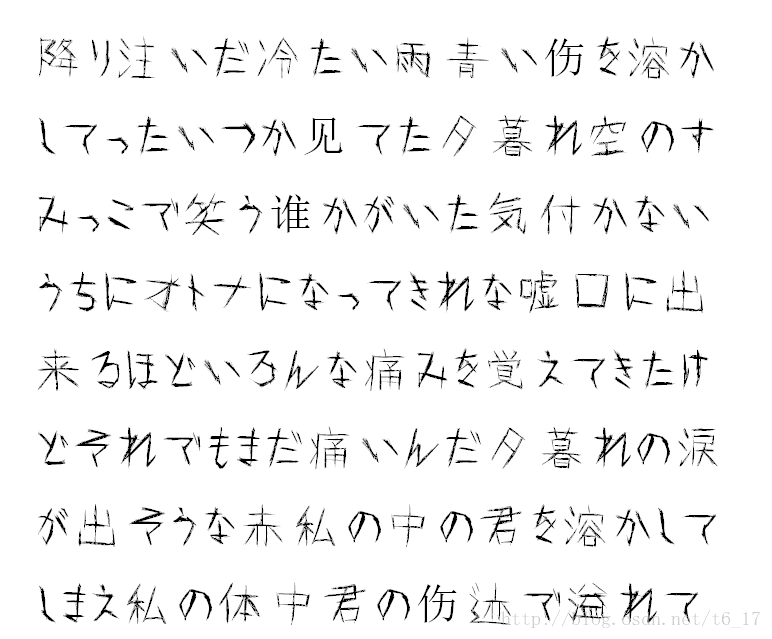
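The projection idea can be sketched on a tiny synthetic binary image. The array below is made up for illustration; in the article the input would be the output of `cv2.adaptiveThreshold`.

```python
import numpy as np

# Synthetic binary image: 0 is background, 255 is ink
binary = np.zeros((8, 10), dtype=np.uint8)
binary[1:3, 2:8] = 255   # first "text line"
binary[5:7, 1:4] = 255   # second "text line"

# Horizontal projection: one sum per row; rows containing ink have a large sum
row_sums = np.sum(binary, axis=1)
text_rows = np.flatnonzero(row_sums > 0)

# Vertical projection restricted to the first text line: one sum per column
first_line = binary[1:3, :]
col_sums = np.sum(first_line, axis=0)
text_cols = np.flatnonzero(col_sums > 0)
```

Rows 1, 2, 5 and 6 have a non-zero sum, so they form two text lines. Within the first line, columns 2 to 7 contain ink. Consecutive runs of such rows and columns give the top, bottom, left and right edges of each box.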

After segmentation:

Extract each character:
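Once the row ranges (text lines) and the column ranges within each line are known, extracting a character comes down to array slicing. The following is a minimal sketch with made-up ranges; `crop_boxes` is a hypothetical helper, and it leaves out the resizing and saving that the complete code performs with OpenCV.

```python
import numpy as np


def crop_boxes(image, row_ranges, col_ranges_per_row):
    """Crop one sub-image per (row range, column range) pair."""
    crops = []
    for (y0, y1), col_ranges in zip(row_ranges, col_ranges_per_row):
        for x0, x1 in col_ranges:
            # NumPy images are indexed [row, column], that is [y, x]
            crops.append(image[y0:y1, x0:x1])
    return crops


# Made-up 6x8 image and ranges, for illustration only
image = np.arange(6 * 8, dtype=np.uint8).reshape(6, 8)
row_ranges = [(0, 2), (3, 6)]                       # two text lines
col_ranges_per_row = [[(0, 3), (4, 8)], [(1, 5)]]   # characters per line
crops = crop_boxes(image, row_ranges, col_ranges_per_row)
shapes = [c.shape for c in crops]
```

Note that the slices are views into the original array, so a crop should be copied before it is modified in place.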

Complete code

import os

import cv2
import numpy as np

base_dir = "./origin/"   # folder containing the input images
dst_dir = "./result/"    # folder for the extracted character images
min_val = 10             # a projection sum above this value counts as ink
min_range = 30           # minimum length in pixels of a text line or character
count = 0                # running index used to name the output files


def extract_peek(array_vals, minimun_val, minimun_range):
    """Return (start, end) index pairs of the runs where array_vals exceeds minimun_val."""
    start_i = None
    end_i = None
    peek_ranges = []
    for i, val in enumerate(array_vals):
        if val > minimun_val and start_i is None:
            # A new run begins
            start_i = i
        elif val > minimun_val and start_i is not None:
            # Still inside a run
            pass
        elif val <= minimun_val and start_i is not None:
            # A gap: close the run only if it is long enough; a shorter run
            # stays open, so narrow gaps do not split a character
            if i - start_i >= minimun_range:
                end_i = i
                print(end_i - start_i)
                peek_ranges.append((start_i, end_i))
                start_i = None
                end_i = None
        elif val <= minimun_val and start_i is None:
            # Background outside any run
            pass
        else:
            raise ValueError("cannot parse this case...")
    return peek_ranges


def cutImage(img, peek_ranges, vertical_peek_ranges2d):
    """Crop every character box from img, resize it to 150x150 and save it in dst_dir."""
    global count
    for i, peek_range in enumerate(peek_ranges):
        for vertical_range in vertical_peek_ranges2d[i]:
            x = vertical_range[0]
            y = peek_range[0]
            count += 1
            img1 = img[y:peek_range[1], x:vertical_range[1]]
            new_shape = (150, 150)
            img1 = cv2.resize(img1, new_shape)
            cv2.imwrite(os.path.join(dst_dir, str(count) + ".png"), img1)


os.makedirs(dst_dir, exist_ok=True)
for fileName in os.listdir(base_dir):
    img = cv2.imread(os.path.join(base_dir, fileName))
    if img is None:
        # Skip files that OpenCV cannot read as images
        continue
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    adaptive_threshold = cv2.adaptiveThreshold(
        gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)

    # Sum each row to find the text lines
    horizontal_sum = np.sum(adaptive_threshold, axis=1)
    peek_ranges = extract_peek(horizontal_sum, min_val, min_range)

    # Draw the detected text lines on a copy (for visual inspection only)
    line_seg_adaptive_threshold = np.copy(adaptive_threshold)
    for i, peek_range in enumerate(peek_ranges):
        x = 0
        y = peek_range[0]
        w = line_seg_adaptive_threshold.shape[1]
        h = peek_range[1] - y
        pt1 = (x, y)
        pt2 = (x + w, y + h)
        cv2.rectangle(line_seg_adaptive_threshold, pt1, pt2, 255)

    # Within each text line, sum each column to find the characters
    vertical_peek_ranges2d = []
    for peek_range in peek_ranges:
        start_y = peek_range[0]
        end_y = peek_range[1]
        line_img = adaptive_threshold[start_y:end_y, :]
        vertical_sum = np.sum(line_img, axis=0)
        vertical_peek_ranges = extract_peek(
            vertical_sum, min_val, min_range)
        vertical_peek_ranges2d.append(vertical_peek_ranges)

    cutImage(img, peek_ranges, vertical_peek_ranges2d)
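The effect of the two thresholds passed to `extract_peek` can be explored on a small made-up projection profile. The sketch below is a vectorized alternative written for illustration, not the code used above: `find_runs` is a hypothetical helper, it discards runs shorter than `min_range` instead of keeping them open, and it also closes a run that reaches the end of the array.

```python
import numpy as np


def find_runs(values, min_val, min_range):
    """Return (start, end) pairs where values > min_val for at least min_range samples."""
    above = np.asarray(values) > min_val
    # Pad with False so every run has both a rising and a falling edge
    padded = np.concatenate(([False], above, [False]))
    edges = np.diff(padded.astype(np.int8))
    starts = np.flatnonzero(edges == 1)    # first index inside each run
    ends = np.flatnonzero(edges == -1)     # first index after each run
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_range]


# Made-up projection profile: three runs of lengths 3, 1 and 4
profile = [0, 0, 50, 60, 70, 0, 0, 90, 0, 80, 80, 80, 80]
runs = find_runs(profile, min_val=10, min_range=3)
```

Here `runs` contains the two long runs, `(2, 5)` and `(9, 13)`, while the single-sample run at index 7 is dropped as noise. The end index is exclusive, so each pair can be used directly as a slice.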