GStreamer study notes: capturing the audio being played with gst-launch and sending it over UDP

Suppose there are two virtual machines, A and B. A is playing music and B wants to capture what A is playing. The steps are as follows:

(1) A plays the music:

gst-launch-1.0 filesrc location=xxxx.wav ! wavparse ! autoaudiosink

(2) A captures the audio that PulseAudio is currently playing and sends it over UDP:

gst-launch-1.0 -v rtpbin name=rtpbin latency=100 pulsesrc device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! queue2 ! audioconvert ! avenc_aac ! rtpmp4apay pt=96 ! queue2 ! rtpbin.send_rtp_sink_1 rtpbin.send_rtp_src_1 ! udpsink host=127.0.0.1 port=5002 async=false

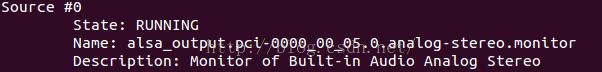

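Notes: 1. The value after device is the name of the PulseAudio monitor source. It can be looked up with the pactl list command, as in the screenshot above.

2. The IP address after host must be B's IP address. The command above uses 127.0.0.1, which only reaches a receiver running on the same machine.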
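To make the two notes concrete, here is a minimal sketch of how the monitor name and B's address slot into the sender pipeline description. The function name build_sender_pipeline is hypothetical and not part of GStreamer; it only formats the same string that is typed after gst-launch-1.0 in step (2).

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper (not a GStreamer API): writes the sender pipeline
 * description from step (2) into buf, with the given monitor device,
 * receiver host and UDP port filled in.
 * Returns 0 on success, -1 if an argument is empty, the port is outside
 * 1..65535, or buf is too small. */
int build_sender_pipeline(char *buf, size_t size,
                          const char *device, const char *host, int port)
{
    assert(buf != NULL && device != NULL && host != NULL);

    if (strlen(device) == 0 || strlen(host) == 0 || port < 1 || port > 65535)
        return -1;

    int n = snprintf(buf, size,
        "rtpbin name=rtpbin latency=100 "
        "pulsesrc device=%s ! queue2 ! audioconvert ! avenc_aac ! "
        "rtpmp4apay pt=96 ! queue2 ! rtpbin.send_rtp_sink_1 "
        "rtpbin.send_rtp_src_1 ! udpsink host=%s port=%d async=false",
        device, host, port);

    /* snprintf returns the length it wanted to write; a value >= size
     * means the description was truncated. */
    return (n < 0 || (size_t)n >= size) ? -1 : 0;
}
```

In a program linked against gstreamer-1.0, a string built this way could be handed to gst_parse_launch() instead of creating and linking every element by hand.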

The same sender written in C:

#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <getopt.h>
#include <gst/gst.h>

/* Defaults used when the matching command-line option is not given. */
#define HOST_IP "172.26.178.103"
#define PORT 8888
#define DEVICE "alsa_output.pci-0000_00_05.0.analog-stereo.monitor"

/* Equivalent command line:
gst-launch-1.0 -v rtpbin name=rtpbin latency=100 pulsesrc \
device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! queue ! \
audioconvert ! avenc_aac ! rtpmp4apay pt=96 ! queue ! \
rtpbin.send_rtp_sink_0 rtpbin.send_rtp_src_0 ! udpsink host=127.0.0.1 port=8888 async=false
*/

/* Build:
gcc -Wall music_server.c -o music_server $(pkg-config --cflags --libs gstreamer-1.0)
*/

int main(int argc, char *argv[])
{
    /* Every option takes exactly one argument, so argc must be 1, 3, 5 or 7. */
    if (argc != 1 && argc != 3 && argc != 5 && argc != 7) {
        printf("invalid args, usage: ./music_server [-d device_name] [-i hostip] [-p port_number]\n");
        return -1;
    }

    const char *short_options = "d:i:p:";

    /* Long options return <short letter> + 'l' so that each one can share
     * a case label with its short form in the switch below. */
    static struct option long_options[] = {
        {"device", required_argument, NULL, 'd' + 'l'},
        {"host",   required_argument, NULL, 'i' + 'l'},
        {"port",   required_argument, NULL, 'p' + 'l'},
        {0, 0, 0, 0}
    };

    int opt = 0;
    char device[256] = {'\0'};
    char host[30] = {'\0'};
    int port = 0;

    while ((opt = getopt_long(argc, argv, short_options, long_options, NULL)) != -1) {
        switch (opt) {
        case 'd':
        case 'd' + 'l':
            if (optarg) {
                /* snprintf truncates instead of overflowing device[]. */
                snprintf(device, sizeof(device), "%s", optarg);
                printf("device name = %s\n", device);
            } else {
                printf("device name optarg is null\n");
            }
            break;
        case 'i':
        case 'i' + 'l':
            if (optarg) {
                /* snprintf truncates instead of overflowing host[]. */
                snprintf(host, sizeof(host), "%s", optarg);
                printf("hostIp = %s\n", host);
            } else {
                printf("host ip optarg is null\n");
            }
            break;
        case 'p':
        case 'p' + 'l':
            if (optarg) {
                /* atoi returns 0 for non-numeric input; 0 later selects PORT. */
                port = atoi(optarg);
                printf("portNum = %s\n", optarg);
            } else {
                printf("port number optarg is null\n");
            }
            break;
        default:
            break;
        }
    }

    GstElement *audioSource, *queue0, *audioConvert, *aacEnc;
    GstElement *rtpMp4apay, *queue1, *rtpBin, *udpSink;
    GstElement *pipeline;
    GMainLoop *loop;
    GstPad *srcpad, *sinkpad;

    gst_init(&argc, &argv);

    pipeline = gst_pipeline_new(NULL);
    g_assert(pipeline);

    /* Capture from the PulseAudio monitor; use DEVICE when -d was not given. */
    audioSource = gst_element_factory_make("pulsesrc", "pulseaudio_source");
    g_assert(audioSource);
    if (device[0] == '\0') {
        g_object_set(G_OBJECT(audioSource), "device", DEVICE, NULL);
        printf("pulseaudio device name: %s\n", DEVICE);
    } else {
        g_object_set(G_OBJECT(audioSource), "device", device, NULL);
        printf("pulseaudio device name: %s\n", device);
    }

    queue0 = gst_element_factory_make("queue", "queue_0");
    g_assert(queue0);

    audioConvert = gst_element_factory_make("audioconvert", "audio_convert");
    g_assert(audioConvert);

    aacEnc = gst_element_factory_make("avenc_aac", "aac_enc");
    g_assert(aacEnc);
    /* Enable the next line if avenc_aac reports "Codec is experimental". */
    /* g_object_set(G_OBJECT(aacEnc), "compliance", -2, NULL); */

    /* Payload the AAC stream as RTP with dynamic payload type 96. */
    rtpMp4apay = gst_element_factory_make("rtpmp4apay", "rtp_Mp4_apay");
    g_assert(rtpMp4apay);
    g_object_set(G_OBJECT(rtpMp4apay), "pt", 96, NULL);

    queue1 = gst_element_factory_make("queue", "queue_1");
    g_assert(queue1);

    rtpBin = gst_element_factory_make("rtpbin", "rtp_bin");
    g_assert(rtpBin);
    g_object_set(G_OBJECT(rtpBin), "latency", 100, NULL);

    udpSink = gst_element_factory_make("udpsink", "udp_sink");
    g_assert(udpSink);

    /* Use the command-line values when given, otherwise the compiled-in defaults. */
    const char *sinkHost = (host[0] != '\0') ? host : HOST_IP;
    int sinkPort = (port != 0) ? port : PORT;

    g_object_set(G_OBJECT(udpSink), "port", sinkPort, "host", sinkHost, "async", FALSE, NULL);
    printf("host ip = %s, port = %d\n", sinkHost, sinkPort);

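    gst_bin_add_many(GST_BIN(pipeline), audioSource, queue0, audioConvert, aacEnc, rtpMp4apay, queue1, rtpBin, udpSink, NULL);

    /* Static part of the chain: source through the second queue. */
    if (!gst_element_link_many(audioSource, queue0, audioConvert, aacEnc, rtpMp4apay, queue1, NULL)) {
        g_error("Failed to link the capture and encoding elements\n");
        return -1;
    }

    /* rtpbin creates send_rtp_sink_0 on request (on GStreamer 1.20 and later,
     * gst_element_request_pad_simple() is the non-deprecated equivalent). */
    sinkpad = gst_element_get_request_pad(rtpBin, "send_rtp_sink_0");
    srcpad = gst_element_get_static_pad(queue1, "src");
    if (gst_pad_link(srcpad, sinkpad) != GST_PAD_LINK_OK)
        g_error("Failed to link audio payloader to rtpbin");
    gst_object_unref(srcpad);
    gst_object_unref(sinkpad);

    /* send_rtp_src_0 exists once the matching sink pad has been requested. */
    srcpad = gst_element_get_static_pad(rtpBin, "send_rtp_src_0");
    sinkpad = gst_element_get_static_pad(udpSink, "sink");
    if (gst_pad_link(srcpad, sinkpad) != GST_PAD_LINK_OK)
        g_error("Failed to link rtpbin to udpsink");
    gst_object_unref(srcpad);
    gst_object_unref(sinkpad);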

    g_print("starting sender pipeline\n");
    gst_element_set_state(pipeline, GST_STATE_PLAYING);

    /* Block here while the pipeline streams. */
    loop = g_main_loop_new(NULL, FALSE);
    g_main_loop_run(loop);

    g_print("stopping sender pipeline\n");
    gst_element_set_state(pipeline, GST_STATE_NULL);

    /* Release the pipeline and the main loop. */
    gst_object_unref(pipeline);
    g_main_loop_unref(loop);

    return 0;
}
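The program above converts the -p argument with atoi, which yields 0 for non-numeric text and does not check the range. As a possible refinement, the sketch below validates the port with strtol and falls back to a default. The name parse_port is hypothetical; it is not something the original program defines.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>

/* Hypothetical helper: returns the port parsed from text, or fallback when
 * text is not a whole decimal number in the range 1..65535. */
int parse_port(const char *text, int fallback)
{
    assert(text != NULL);

    char *end = NULL;
    errno = 0;
    long value = strtol(text, &end, 10);

    /* Reject overflow, empty input and trailing garbage such as "80x". */
    if (errno != 0 || end == text || *end != '\0')
        return fallback;

    /* Reject values that are not valid UDP port numbers. */
    if (value < 1 || value > 65535)
        return fallback;

    return (int)value;
}
```

With such a helper, the 'p' case could read port = parse_port(optarg, PORT), so a mistyped port ends up as the default rather than as an arbitrary number.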

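(3) B receives the data and stores it in the file xxxx.pcm. The caps string can be copied from the -v output of the sender in step (2):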

gst-launch-1.0 -v rtpbin name=rtpbin latency=100 udpsrc caps="application/x-rtp,media=(string)audio,clock-rate=(int)44100,encoding-name=(string)MP4A-LATM,cpresent=(string)0,config=(string)40002420adca00,ssrc=(uint)3235970720,payload=(int)96,clock-base=(uint)2595934541,seqnum-base=(uint)37499" port=5002 ! rtpbin.recv_rtp_sink_0 rtpbin. ! rtpmp4adepay ! queue ! avdec_aac ! filesink location=xxxx.pcm
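Several fields in the caps string above (payload, ssrc, seqnum-base, clock-base) describe values carried in the fixed header of each RTP packet that arrives on the UDP port. The following is a rough sketch of decoding that 12-byte header as laid out in RFC 3550. The struct and function names are made up for illustration, and this is not how rtpbin is implemented.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Selected fields of the 12-byte fixed RTP header (RFC 3550, section 5.1). */
struct rtp_header {
    uint8_t  version;       /* always 2 for RTP */
    uint8_t  payload_type;  /* "payload" in the caps, 96 here */
    uint16_t sequence;      /* starts at "seqnum-base" */
    uint32_t timestamp;     /* starts at "clock-base" */
    uint32_t ssrc;          /* "ssrc" in the caps */
};

/* Hypothetical helper: decodes the fixed header from one UDP payload.
 * Returns 0 on success, -1 if the packet is too short or not RTP version 2. */
int parse_rtp_header(const uint8_t *pkt, size_t len, struct rtp_header *out)
{
    assert(pkt != NULL && out != NULL);

    if (len < 12)
        return -1;

    out->version = (uint8_t)(pkt[0] >> 6);
    if (out->version != 2)
        return -1;

    /* The top bit of byte 1 is the marker bit; the low 7 bits are the type. */
    out->payload_type = (uint8_t)(pkt[1] & 0x7F);

    /* All multi-byte fields are in network byte order (big-endian). */
    out->sequence  = (uint16_t)((pkt[2] << 8) | pkt[3]);
    out->timestamp = ((uint32_t)pkt[4] << 24) | ((uint32_t)pkt[5] << 16) |
                     ((uint32_t)pkt[6] << 8)  |  (uint32_t)pkt[7];
    out->ssrc      = ((uint32_t)pkt[8] << 24) | ((uint32_t)pkt[9] << 16) |
                     ((uint32_t)pkt[10] << 8) |  (uint32_t)pkt[11];
    return 0;
}
```

A quick way to use it is to receive one datagram on the port with a plain UDP socket and check that payload_type is 96 before starting the GStreamer receiver.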

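(4) B plays the xxxx.pcm file:

aplay -r 44100 -f s16_le -c 2 xxxx.pcm

The aplay arguments must describe the samples that avdec_aac actually wrote. If your decoder version outputs a different sample format, adding audioconvert ! audio/x-raw,format=S16LE before filesink in step (3) is one way to make them match.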
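A .pcm file has no header, so the rate, sample format and channel count passed to aplay are all the player knows about the data. As a small worked example of the arithmetic they imply, the sketch below computes the byte rate and the playing time of such a file. Both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes consumed per second of raw interleaved PCM. */
uint32_t pcm_bytes_per_second(uint32_t rate, uint32_t channels,
                              uint32_t bytes_per_sample)
{
    assert(rate > 0 && channels > 0 && bytes_per_sample > 0);
    return rate * channels * bytes_per_sample;
}

/* Playing time in seconds of a headerless PCM file of file_bytes bytes. */
double pcm_duration_seconds(uint64_t file_bytes, uint32_t rate,
                            uint32_t channels, uint32_t bytes_per_sample)
{
    return (double)file_bytes /
           (double)pcm_bytes_per_second(rate, channels, bytes_per_sample);
}
```

For the settings used here (44100 Hz, 2 channels, 2 bytes per s16_le sample) the rate is 176400 bytes per second, so a capture that plays for a clearly wrong length is a hint that one of the three arguments does not match the file.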

Supplement: capturing data directly from PulseAudio and saving it to a file

(1) Capture the raw data and save it as a PCM file

gst-launch-1.0 pulsesrc device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! filesink location=xxx.pcm

(2) Encode the captured audio to an AAC file

gst-launch-1.0 pulsesrc device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! "audio/x-raw,layout=(string)interleaved,rate=(int)44100,channels=(int)2" ! avenc_aac ! avmux_adts ! filesink location=1.aac

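Running this may fail with the error: ERROR: from element /GstPipeline:pipeline0/avenc_aac:avenc_aac0: Codec is experimental, but settings don't allow encoders to produce output of experimental quality

The fix is to add compliance=-2 after avenc_aac (that is, avenc_aac compliance=-2), which allows the experimental encoder to be used.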

(3) Play the captured AAC file

gst-launch-1.0 filesrc location=./1.aac ! aacparse ! avdec_aac ! autoaudiosink
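Unlike the raw .pcm file, the file written through avmux_adts carries the sample rate and channel configuration in the ADTS header of every frame, which is why this playback command needs no format arguments. The sketch below reads those fields from one 7-byte ADTS header, following the standard ADTS bit layout. The names are hypothetical and this is only an illustration, not the code aacparse uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct adts_info {
    int sample_rate;     /* Hz, 0 if the frequency index is reserved */
    int channel_config;  /* values 1..6 equal the channel count */
    int frame_length;    /* bytes in this frame, header included */
};

/* Hypothetical helper: decodes selected fields of an ADTS frame header.
 * Returns 0 on success, -1 if fewer than 7 bytes are available or the
 * 12-bit syncword (all ones) is missing. */
int parse_adts_header(const uint8_t *p, size_t len, struct adts_info *out)
{
    /* Sampling frequency table indexed by the 4-bit frequency index. */
    static const int rates[16] = {
        96000, 88200, 64000, 48000, 44100, 32000, 24000, 22050,
        16000, 12000, 11025, 8000, 7350, 0, 0, 0
    };

    assert(p != NULL && out != NULL);

    if (len < 7)
        return -1;
    if (p[0] != 0xFF || (p[1] & 0xF0) != 0xF0)
        return -1;

    /* Byte 2: profile (2 bits), frequency index (4), private bit (1),
     * then the top bit of the 3-bit channel configuration. */
    out->sample_rate = rates[(p[2] >> 2) & 0x0F];
    out->channel_config = ((p[2] & 0x01) << 2) | (p[3] >> 6);

    /* The 13-bit frame length spans the low 2 bits of byte 3, all of
     * byte 4 and the top 3 bits of byte 5. */
    out->frame_length = ((p[3] & 0x03) << 11) | (p[4] << 3) | (p[5] >> 5);
    return 0;
}
```

Reading the first 7 bytes of 1.aac and passing them to this function would be one way to confirm that the capture was encoded as 44100 Hz stereo.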

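(4) Mux audio and video into a TS stream. The first two commands below use GStreamer 0.10 syntax (gst-launch, video/x-raw-yuv, ffenc_aac); the last one is a GStreamer 1.0 variant.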

gst-launch -e mpegtsmux name="mux" ! filesink location=mux.ts videotestsrc ! video/x-raw-yuv, framerate=25/1, width=640, height=360 ! \
x264enc ! mux. filesrc location=./1.aac ! aacparse ! mux.

gst-launch -e mpegtsmux name="mux" ! filesink location=mux.ts videotestsrc ! video/x-raw-yuv, framerate=25/1, width=640, height=360 ! \
x264enc ! mux. pulsesrc device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! ffenc_aac ! aacparse ! mux.

gst-launch-1.0 -e avmux_mpegts name="mux" ! filesink location=mux.ts videotestsrc pattern=ball ! "video/x-raw,framerate=60/1,width=720,height=480" ! \
avenc_mpeg2video ! mux. pulsesrc device=alsa_output.pci-0000_00_05.0.analog-stereo.monitor ! avenc_aac compliance=-2 ! aacparse ! mux.