Synthesizing Piano Music from a Score

1 Principles and Method of Piano Music Synthesis

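The timbre of an instrument is determined mainly by its harmonic structure. Once the fundamental frequency of each note is known, synthesizing piano music therefore means reproducing the piano's harmonic characteristics. In this experiment we first analyze the frequency-domain and time-domain envelopes of several recorded piano notes. This gives the ratio of each harmonic to the fundamental and the pattern by which the sound intensity decays over time. We then combine these characteristics with the known fundamental frequencies of the melody to synthesize piano music.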

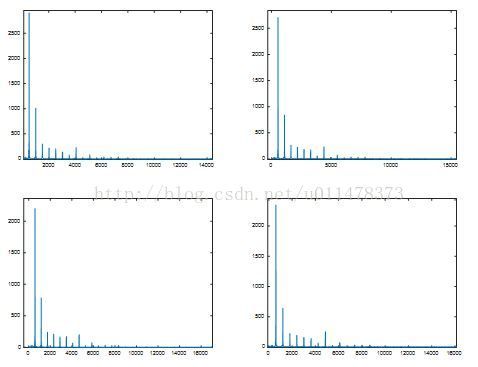

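We analyzed the spectra of four piano notes, Do, Re, Mi and Fa. For each of the four recorded notes we took the FFT and then its magnitude, which gives the four plots below: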

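For each note we computed the ratio of each of the 2nd to 15th harmonics to the fundamental. These ratios were then averaged over the notes, and the average is taken as the frequency-domain envelope of a piano note. The resulting ratios of harmonics 1 to 15 to the fundamental are: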

[1,0.340,0.102,0.085,0.070,0.065,0.028,0.085,0.011,0.030,0.010,0.014,0.012,0.013,0.004]
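The report does not include its analysis code, so the following is only a sketch of the analysis described above. It takes the FFT magnitude of a recorded note and reads off the peak near each multiple of a known fundamental `f0`. The ratios are then averaged over several notes. The names `harmonic_ratios` and `average_ratios`, and the 10 Hz search band around each harmonic, are assumptions for illustration.

```python
import numpy as np


def harmonic_ratios(x, fs, f0, num_harmonics=15, search_hz=10.0):
    """Magnitude of harmonics 1..num_harmonics of note x, relative to the fundamental.

    Assumes f0 (Hz) is known; each harmonic's magnitude is taken as the largest
    FFT magnitude within +/- search_hz of k * f0.
    """
    spectrum = np.abs(np.fft.rfft(x))            # FFT, then take the magnitude
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)  # frequency (Hz) of each FFT bin
    peaks = []
    for k in range(1, num_harmonics + 1):
        band = np.abs(freqs - k * f0) <= search_hz
        peaks.append(spectrum[band].max() if band.any() else 0.0)
    peaks = np.array(peaks)
    return peaks / peaks[0]  # normalize so the fundamental is 1


def average_ratios(notes, fs, f0s, num_harmonics=15):
    """Average the per-note harmonic ratios over several recorded notes."""
    return np.mean(
        [harmonic_ratios(x, fs, f0, num_harmonics) for x, f0 in zip(notes, f0s)],
        axis=0,
    )
```

Assume the four recordings are C4 to F4, held in hypothetical arrays `do`, `re`, `mi`, `fa`. Under that assumption, the call would be `average_ratios([do, re, mi, fa], fs, [261.63, 293.665, 329.628, 349.228])`.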

For the mapping between the notes of the score and their fundamental frequencies, see this table on Baidu Wenku: http://wenku.baidu.com/link?url=Comd7xeOv_4fFKFTyirgfMYWd_-xh26-DNc7KLliNLVMTtObrkZOf2IFp31hY8p95T0TGBXsx-_iYilwvXT81j0ODifO4Ki9tiidO4rPvPy
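The linked table is not reproduced here. The commented-out test list in the code runs from 261.63 Hz for C4 up to 659.255 Hz for E5, and those values match twelve-tone equal temperament with A4 = 440 Hz. Assuming the table uses the same tuning, the fundamental of any note can be computed directly. The helper name `midi_to_freq` and the use of MIDI note numbers are illustrative choices, not taken from the report.

```python
def midi_to_freq(midi_note, a4=440.0):
    """Equal-temperament fundamental frequency (Hz) of a MIDI note number (A4 = 69)."""
    return a4 * 2.0 ** ((midi_note - 69) / 12.0)


# C major scale from middle C (C4 = MIDI 60): Do Re Mi Fa Sol La Si Do
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]
scale_hz = [round(midi_to_freq(m), 3) for m in C_MAJOR]
# scale_hz == [261.626, 293.665, 329.628, 349.228, 391.995, 440.0, 493.883, 523.251]
```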

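Next, analyzing the time-domain envelope of the piano notes, that is, their decay characteristics, gives the envelope shown below: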
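The report does not say how the envelope was measured. One common approach, sketched here as an assumption, splits the recording into short frames and takes the peak absolute amplitude of each frame. The result is normalized so that the loudest frame is 1. The function name `amplitude_envelope` and the 10 ms frame length (80 samples at 8000 Hz) are hypothetical choices.

```python
import numpy as np


def amplitude_envelope(x, frame_len=80):
    """Frame-wise peak of |x|, normalized so the loudest frame equals 1.

    frame_len=80 samples is 10 ms at 8000 Hz (an assumed value); trailing
    samples that do not fill a whole frame are ignored.
    """
    x = np.asarray(x, dtype=float)
    n_frames = len(x) // frame_len
    frames = np.abs(x[: n_frames * frame_len]).reshape(n_frames, frame_len)
    env = frames.max(axis=1)  # peak amplitude in each frame
    return env / env.max()
```

For a real recording, plotting `amplitude_envelope(note)` against `np.arange(len(env)) * frame_len / fs` shows the attack and the decay on a time axis in seconds.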

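With the frequency-domain and time-domain characteristics of the piano timbre in hand, the simulated piano music can be synthesized. The inputs are the fundamental frequency and duration of the note in each time segment, obtained from the time-frequency analysis in Section 2.8. Each note is synthesized in two steps. First, 15 sinusoids are generated at the fundamental and its harmonics, with amplitudes set by the harmonic-to-fundamental ratios, and summed. Second, the sum is attenuated in the time domain by the decay envelope over the note's duration.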
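The per-note procedure just described can be sketched as follows. This is a minimal sketch rather than the listing in Section 3. `piano_note` is a hypothetical name, and the exponential envelope in the usage lines is a stand-in for the measured decay curve. One deliberate difference is that harmonics at or above the Nyquist frequency fs/2 (4000 Hz at 8000 Hz sampling) are skipped, because they would otherwise alias to lower frequencies. With 15 harmonics this affects any fundamental above about 267 Hz.

```python
import numpy as np

FS = 8000  # sampling frequency (Hz)

# Ratios of harmonics 1..15 to the fundamental, from the analysis above
AMP = [1, 0.340, 0.102, 0.085, 0.070, 0.065, 0.028, 0.085,
       0.011, 0.030, 0.010, 0.014, 0.012, 0.013, 0.004]


def piano_note(f0, duration, envelope, amp=1.0, fs=FS):
    """Sum the harmonics of f0 weighted by AMP, then shape the sum with envelope.

    envelope: per-sample gain array with at least round(duration * fs) samples.
    """
    n = int(round(duration * fs))
    t = np.arange(n) / fs
    note = np.zeros(n)
    for k, ratio in enumerate(AMP, start=1):
        if k * f0 >= fs / 2:  # this and all higher harmonics would alias
            break
        note += amp * ratio * np.cos(2 * np.pi * k * f0 * t)
    return note * envelope[:n]


# Usage: an assumed exponential decay over 1 s as a stand-in envelope
envelope = np.exp(-3.0 * np.arange(FS) / FS)
do = piano_note(261.63, 0.5, envelope)  # C4, half a second, all 15 harmonics
la = piano_note(440.0, 0.5, envelope)   # A4: only harmonics 1..9 lie below 4000 Hz
```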

2 Experimental Results

The frequency-domain and time-domain characteristics of the simulated piano are shown below:
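In equation form, the code in Section 3 models these characteristics as follows. The sampling rate is 8000 Hz, so n = 8000 samples is 1 s. The symbol a[n] is the time-domain attenuation envelope and r_k is the k-th ratio in the harmonic list above. f_0 is the note's fundamental, and A is its amplitude (the per-window amplitude divided by 50 in the code). The pieces join almost continuously; for example, the attack reaches 0.995 at n = 199.

```latex
a[n] =
\begin{cases}
0.005\,n, & 0 \le n < 200 \quad \text{(attack, 25 ms)}\\
1 - 0.001\,(n-200), & 200 \le n < 800 \quad \text{(attenuate)}\\
0.4 - 7.8\times10^{-5}\,(n-800), & 800 \le n < 4000 \quad \text{(maintain)}\\
0.15 - 7.8\times10^{-6}\,(n-4000), & 4000 \le n < 8000
\end{cases}
\qquad
s[n] = a[n]\sum_{k=1}^{15} A\,r_k \cos\!\left(2\pi k f_0 \frac{n}{f_s}\right),\quad f_s = 8000\ \text{Hz}
```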

The waveform of the final synthesized piano music is shown below:

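The result can be heard in the output file pianomusic.wav. Admittedly, the synthesized sound differs from a real piano. The error comes mainly from the limited number of harmonics and the limited precision of their amplitude ratios. In addition, the melody of this piano music differs from that of the given guitar music, mainly because the note extraction is not precise enough.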

3 Code (Python)

```python
# TO DO: reform it into piano
#-----------------------------------------
# Variables produced by earlier steps of the report are assumed to exist here:
#   wave_data        int16 samples of the given guitar recording, one row per channel
#   windowsize       samples per time quantum (0.125 s at 8000 Hz, i.e. 1000)
#   notestime, notestime1, notestime2           duration of each note, in time quanta
#   nomalizedbasicfreq, basicfreq1, basicfreq2  fundamental frequency (Hz) of each time quantum
#   ampli, ampli1, ampli2                       amplitude of each time quantum
import wave

import matplotlib.pyplot as plt
import numpy as np

FS = 8000  # sampling frequency (Hz)


def gen_sin(amp, f, fs, tau):
    """Return tau seconds of amp*cos(2*pi*f*t), sampled at fs Hz."""
    nT = np.arange(int(round(tau * fs))) / fs
    return amp * np.cos(2 * np.pi * f * nT)


# model the harmonic feature in frequency domain
# (amplitude of harmonics 1..15 relative to the fundamental)
Amp = [1, 0.340, 0.102, 0.085, 0.070, 0.065, 0.028, 0.085, 0.011, 0.030, 0.010, 0.014, 0.012, 0.013, 0.004]
numharmonic = len(Amp)

pianomusic = np.zeros(len(wave_data[0]))

# model the piano note attenuation feature in the time domain (8000 samples = 1 s)
n = np.arange(8000)
attenuation = np.zeros(8000)
# the attack stage
attenuation[0:200] = n[0:200] * 0.005
# the attenuate stage
attenuation[200:800] = 1 - (n[200:800] - 200) * 0.001
# the maintain stage
attenuation[800:4000] = 0.4 - (n[800:4000] - 800) * 0.000078
attenuation[4000:8000] = 0.15 - (n[4000:8000] - 4000) * 0.0000078


def add_voice(music, notestime, basicfreq, ampli, gain):
    """Synthesize one voice note by note and add it into music in place."""
    startpoint = 0  # start of the current note, in time quanta
    for w in range(len(notestime)):
        length = windowsize * notestime[w]  # get the length according to the time of the note
        # get the note by adding each harmonic, scaled relative to the fundamental
        pianonote = np.zeros(length)
        for i in range(numharmonic):
            pianonote += gen_sin(ampli[startpoint] / gain * Amp[i],
                                 basicfreq[startpoint] * (i + 1), FS, 0.125 * notestime[w])
        # attenuate the note with the time domain feature
        # (the envelope has 8000 samples, so a note may last at most 1 s)
        start = startpoint * windowsize
        music[start:start + length] += pianonote * attenuation[:length]
        startpoint += notestime[w]  # record the start point of the next note


# compose each note in each time quantum
#nomalizedbasicfreq=[261.63,261.63,261.63,261.63,293.665,293.665,293.665,293.665,329.628,329.628,329.628,329.628,349.228,349.228,349.228,349.228,391.995,391.995,391.995,391.995,440,440,440,440,493.883,493.883,493.883,493.883,523.251,523.251,523.251,523.251,587.33,587.33,587.33,587.33,659.255,659.255,659.255,659.255]
#notestime=[4,4,4,4,4,4,4,4,4,4]
add_voice(pianomusic, notestime, nomalizedbasicfreq, ampli, 50)
add_voice(pianomusic, notestime1, basicfreq1, ampli1, 50)
# third voice (gain 100): not mixed into the output
# add_voice(pianomusic, notestime2, basicfreq2, ampli2, 100)

# convert to 16-bit PCM, clipping instead of letting large values wrap around
pcm = np.clip(np.round(pianomusic), -32768, 32767).astype(np.int16)

# get a wave file
# get the channel, sampling width and sampling frequency information
# see details in 2.9 of my report
with wave.open("pianomusic.wav", "wb") as f:
    f.setnchannels(1)
    f.setsampwidth(2)
    f.setframerate(FS)
    f.writeframes(pcm.tobytes())  # put the data into the wave file

print("STEP 9: see the figures and listen to the pianomusic.wav file")

plt.figure()
plt.subplot(211)
plt.plot(Amp)
plt.title(r'frequency domain harmonic feature')
plt.subplot(212)
plt.plot(attenuation)
plt.title(r'time domain attenuation feature')

plt.figure()
plt.plot(pianomusic)
plt.title(r'STEP 9:reform it into piano')
plt.show()
```
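The listing above relies on variables from earlier steps of the report. A small self-contained sketch of just the final step may therefore be easier to try out. It converts a floating-point signal to 16-bit PCM, clipping out-of-range values rather than letting them wrap. It then writes a mono 8000 Hz WAV file with Python's standard `wave` module. The function name `write_wav` is hypothetical.

```python
import wave

import numpy as np


def write_wav(path_or_file, signal, fs=8000):
    """Write a float signal as mono 16-bit PCM, clipping to the int16 range.

    path_or_file may be a file name or a binary file-like object.
    """
    pcm = np.clip(np.round(signal), -32768, 32767).astype("<i2")  # little-endian int16
    with wave.open(path_or_file, "wb") as f:
        f.setnchannels(1)    # mono
        f.setsampwidth(2)    # 2 bytes = 16 bits per sample
        f.setframerate(fs)   # samples per second
        f.writeframes(pcm.tobytes())
```

For example, `write_wav("pianomusic.wav", pianomusic)` writes the synthesized signal to disk. Explicit clipping matters because the summed harmonics can exceed the int16 range when several voices overlap.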