Building a Zookeeper Cluster and a Kafka Cluster with docker-compose

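1. Install docker-compose

This guide does not download from the official release link, because that download can be very slow. Check the official releases page for the current version and substitute it into the command below:

https://github.com/docker/compose/releases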

sudo curl -L "https://get.daocloud.io/docker/compose/releases/download/1.25.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

# Verify the installation
docker-compose --version

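Preparation:

# Create two directories, one for each docker-compose.yml file, to keep things organized
cd /usr/local
mkdir docker
cd docker
mkdir zookeeper
mkdir kafka

Sometimes only Zookeeper is needed and Kafka is not, for example when Zookeeper serves as the registry for Dubbo or Spring Cloud. For that reason this guide uses two separate docker-compose.yml files, so each cluster can be installed and started on its own.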

2. Build the Zookeeper cluster

cd /usr/local/docker/zookeeper
vim docker-compose.yml

Contents of docker-compose.yml:

version: '3.3'
services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181

Reference:

Zookeeper on Docker Hub: https://hub.docker.com/_/zookeeper
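The three ZOO_SERVERS values in the file follow one pattern: each entry reads server.<id>=<host>:2888:3888;2181, and every node lists itself as 0.0.0.0 while referring to the others by hostname. The sketch below only illustrates that pattern so it is easier to extend to more nodes. The class name ZooServers and the method build are hypothetical helpers, and the zoo<id> hostname scheme is taken from the compose file above.

```java
class ZooServers {

    // Builds the ZOO_SERVERS value for node `myId` in an ensemble of `count` nodes.
    // The node's own entry uses 0.0.0.0; all other entries use the hostname zoo<id>.
    static String build(int myId, int count) {
        StringBuilder sb = new StringBuilder();
        for (int id = 1; id <= count; id++) {
            if (id > 1) {
                sb.append(' ');
            }
            String host = (id == myId) ? "0.0.0.0" : "zoo" + id;
            sb.append("server.").append(id).append('=').append(host).append(":2888:3888;2181");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Print the value for each node of a three-node ensemble
        for (int id = 1; id <= 3; id++) {
            System.out.println("zoo" + id + ": " + build(id, 3));
        }
    }
}
```

Running main prints one line per node, which can be compared against the environment sections of zoo1, zoo2 and zoo3.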

Next:

# After saving and exiting with :wq
cd /usr/local/docker/zookeeper # make sure you are in the directory that contains docker-compose.yml

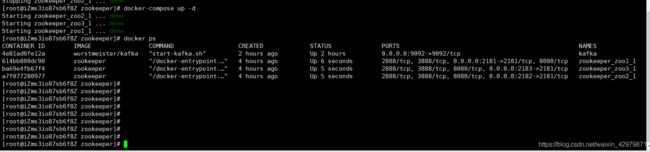

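docker-compose up -d
# Wait for the images to be pulled and the containers to start
docker ps # check container status

# Reference commands
docker-compose ps # show the status of the cluster containers
docker-compose stop # stop the cluster containers
docker-compose restart # restart the cluster containers

If the output matches the figure, Zookeeper has been installed and started successfully. You can probe the ports with a port scanner if you like; the full test is left until Kafka has been installed.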
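If no port scanner is at hand, a few lines of Java can do the same check. This is a minimal sketch, assuming the host IP 192.168.0.1 used later in this guide and the host ports 2181 to 2183 mapped above; PortCheck and isOpen are hypothetical names. An open port only shows that something is listening there, not that the Zookeeper ensemble has formed a quorum.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    static boolean isOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, host unreachable, or timed out
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed host IP; pass your own as the first argument
        String host = args.length > 0 ? args[0] : "192.168.0.1";
        for (int port : new int[] {2181, 2182, 2183}) {
            System.out.println(host + ":" + port + " open=" + isOpen(host, port, 1000));
        }
    }
}
```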

3. Build the Kafka cluster

Make sure the Zookeeper environment from the previous section is up and running.

cd /usr/local/docker/kafka
vim docker-compose.yml

Contents of docker-compose.yml:

version: '2'
services:
  kafka1:
    image: wurstmeister/kafka
    ports:
      - "9092:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.0.1 # change to your host IP
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.1:9092 # change to your host IP
      KAFKA_ZOOKEEPER_CONNECT: 192.168.0.1:2181,192.168.0.1:2182,192.168.0.1:2183 # host IP and ports of the Zookeeper nodes installed above
      KAFKA_ADVERTISED_PORT: 9092
    container_name: kafka1
  kafka2:
    image: wurstmeister/kafka
    ports:
      - "9093:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.0.1 # change to your host IP
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.1:9093 # change to your host IP
      KAFKA_ZOOKEEPER_CONNECT: 192.168.0.1:2181,192.168.0.1:2182,192.168.0.1:2183 # host IP and ports of the Zookeeper nodes installed above
      KAFKA_ADVERTISED_PORT: 9093
    container_name: kafka2
  kafka-manager:
    image: sheepkiller/kafka-manager # open-source web UI for managing Kafka clusters
    environment:
      ZK_HOSTS: 192.168.0.1 # change to your host IP
    ports:
      - "9000:9000" # exposed port

kafka-manager is optional; if you do not need it, remove that service from the file.
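The per-broker values in this file are all derived from two inputs: the host IP and the host port each container is mapped to. The sketch below shows that derivation, which may help when adding a third broker. KafkaEnv and its two methods are hypothetical helpers, not part of any Kafka or Docker tooling, and the connection string is written as host:port pairs separated by commas.

```java
import java.util.StringJoiner;

class KafkaEnv {

    // Joins hostIp:port pairs with commas, e.g. for KAFKA_ZOOKEEPER_CONNECT.
    static String zookeeperConnect(String hostIp, int... ports) {
        StringJoiner joiner = new StringJoiner(",");
        for (int port : ports) {
            joiner.add(hostIp + ":" + port);
        }
        return joiner.toString();
    }

    // Builds the KAFKA_ADVERTISED_LISTENERS value for a broker published on hostPort.
    static String advertisedListener(String hostIp, int hostPort) {
        return "PLAINTEXT://" + hostIp + ":" + hostPort;
    }

    public static void main(String[] args) {
        String hostIp = "192.168.0.1"; // assumed host IP, as in the compose file
        System.out.println("KAFKA_ZOOKEEPER_CONNECT: " + zookeeperConnect(hostIp, 2181, 2182, 2183));
        for (int hostPort : new int[] {9092, 9093}) {
            System.out.println("KAFKA_ADVERTISED_LISTENERS: " + advertisedListener(hostIp, hostPort));
        }
    }
}
```

The advertised address is what clients outside Docker are told to connect to, which is why it uses the host port (9093 for kafka2) rather than the container port 9092.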

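Next:

# After saving and exiting with :wq
cd /usr/local/docker/kafka # make sure you are in the directory that contains docker-compose.yml
docker-compose up -d
# Wait for the images to be pulled and the containers to start
docker ps # check container status

# Reference commands
docker-compose ps # show the status of the cluster containers
docker-compose stop # stop the cluster containers
docker-compose restart # restart the cluster containers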

4. Test with Java code

(1) Add the dependencies to pom.xml

<!-- https://mvnrepository.com/artifact/org.apache.kafka/kafka -->
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.12</artifactId>
    <version>2.1.1</version>
</dependency>

<!-- https://mvnrepository.com/artifact/com.alibaba/fastjson -->
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.62</version>
</dependency>

<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <version>1.18.10</version>
</dependency>

(2) Create the POJO, the Consumer, and the Producer

User class

import lombok.Data;

@Data
public class User {
    private String id;
    private String name;
}

Producer

import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

import com.alibaba.fastjson.JSON;

public class CollectKafkaProducer {

    // The Kafka producer instance
    private final KafkaProducer<String, String> producer;

    // The topic this producer publishes to
    private final String topic;

    // Build the configuration (Properties) and create the KafkaProducer
    public CollectKafkaProducer(String topic) {
        Properties props = new Properties();
        // Broker address
        props.put("bootstrap.servers", "192.168.0.1:9092");
        // client.id for this producer
        props.put("client.id", "demo-producer-test");

        // Other optional settings:
        // props.put("batch.size", 16384);       // 16 KB: a batch is sent once it reaches this size
        // props.put("linger.ms", 10);           // 10 ms: wait up to this long for a batch to fill
        // props.put("buffer.memory", 33554432); // 32 MB: buffer for records waiting to be sent

        // Kafka serializer settings
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        // Create the KafkaProducer and store the topic
        this.producer = new KafkaProducer<>(props);
        this.topic = topic;
    }

    // Send a message, either synchronously or asynchronously
    public void send(Object message, boolean syncSend) throws InterruptedException {
        try {
            if (syncSend) {
                // Synchronous send: get() blocks until the broker acknowledges the record
                producer.send(new ProducerRecord<>(topic, JSON.toJSONString(message))).get();
            } else {
                // Asynchronous send: the callback is invoked when the send completes
                producer.send(new ProducerRecord<>(topic, JSON.toJSONString(message)),
                        new Callback() {
                            @Override
                            public void onCompletion(RecordMetadata recordMetadata, Exception e) {
                                if (e != null) {
                                    System.err.println("Unable to write to Kafka in CollectKafkaProducer [" + topic + "] exception: " + e);
                                }
                            }
                        });
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    // Close the producer
    public void close() {
        producer.close();
    }

    // Test entry point
    public static void main(String[] args) throws InterruptedException {
        String topic = "topic1";
        CollectKafkaProducer collectKafkaProducer = new CollectKafkaProducer(topic);
        for (int i = 0; i < 10; i++) {
            User user = new User();
            user.setId(i + "");
            user.setName("Zhang San");
            collectKafkaProducer.send(user, true);
        }
        // Flush any pending records and release resources before exiting
        collectKafkaProducer.close();
    }
}

Consumer

import java.time.Duration;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.clients.consumer.OffsetAndMetadata;
import org.apache.kafka.common.TopicPartition;

import lombok.extern.slf4j.Slf4j;

@Slf4j
public class CollectKafkaConsumer {

    // The Kafka consumer instance
    private final KafkaConsumer<String, String> consumer;

    // The topic to consume
    private final String topic;

    // Initialize the consumer
    public CollectKafkaConsumer(String topic) {
        Properties props = new Properties();
        // Broker address. KafkaConsumer talks to the brokers directly,
        // so no zookeeper.connect setting is needed here.
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.0.1:9092");
        // Consumer group
        props.put(ConsumerConfig.GROUP_ID_CONFIG, "demo-group-id");
        // Auto commit; usually set to false in production so that offsets are committed manually
        props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        // Auto-commit interval (only relevant when auto commit is enabled)
        // props.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000");
        // Where to start when there is no committed offset: latest or earliest
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        // Session timeout
        props.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "30000");
        // Kafka deserializer settings
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");

        // Create the consumer and store the topic
        consumer = new KafkaConsumer<>(props);
        this.topic = topic;
        // Subscribe to the topic
        consumer.subscribe(Collections.singletonList(topic));
    }

    // Poll for messages in a loop and consume them, committing offsets manually
    private void receive(KafkaConsumer<String, String> consumer) {
        while (true) {
            // Fetch a batch of records (poll timeout: 1 second)
            ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
            // For each partition in the batch, read the topic name, partition number and record count
            for (TopicPartition partition : records.partitions()) {
                List<ConsumerRecord<String, String>> partitionRecords = records.records(partition);
                String topic = partition.topic();
                int size = partitionRecords.size();
                log.info("topic: {}, partition: {}, record count: {}", topic, partition.partition(), size);
                // Process the records of each partition in turn
                for (int i = 0; i < size; i++) {
                    System.err.println("-----> value: " + partitionRecords.get(i).value());
                    // The committed offset is the offset of the next record to read
                    long offset = partitionRecords.get(i).offset() + 1;
                    // consumer.commitSync(); // this form works out the partitions and offsets by itself
                    // This form commits the partition and offset explicitly
                    consumer.commitSync(Collections.singletonMap(partition,
                            new OffsetAndMetadata(offset)));
                    log.info("commit succeeded, topic: {}, committed offset: {}", topic, offset);
                }
            }
        }
    }

    // Test entry point
    public static void main(String[] args) {
        String topic = "topic1";
        CollectKafkaConsumer collectKafkaConsumer = new CollectKafkaConsumer(topic);
        collectKafkaConsumer.receive(collectKafkaConsumer.consumer);
    }
}
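The consumer above commits the record's offset plus one, because a committed offset in Kafka names the next record to read rather than the last one processed. The sketch below illustrates the difference with a plain in-memory list standing in for a partition; it does not use the Kafka client at all. OffsetCommitDemo and resumeFrom are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class OffsetCommitDemo {

    // Returns the records a restarted consumer would read, given the committed offset.
    // The list index plays the role of the record offset within one partition.
    static List<String> resumeFrom(List<String> log, long committed) {
        return new ArrayList<>(log.subList((int) committed, log.size()));
    }

    public static void main(String[] args) {
        List<String> log = Arrays.asList("m0", "m1", "m2", "m3");
        long lastProcessed = 1; // m0 and m1 have already been handled

        // Committing lastProcessed + 1 resumes at the first unprocessed record
        System.out.println(resumeFrom(log, lastProcessed + 1)); // [m2, m3]

        // Committing lastProcessed itself would deliver m1 a second time
        System.out.println(resumeFrom(log, lastProcessed)); // [m1, m2, m3]
    }
}
```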

Start the Producer first, then the Consumer.

If the messages are consumed successfully, the whole setup is working.