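CS231n Assignment (4): Classification with a Fully Connected Neural Network

1. Assignment Description

This post covers the CS231n assignment on classifying the CIFAR-10 dataset with a two-layer neural network.

The source code has been uploaded, and readers who need it are welcome to download it from https://download.csdn.net/download/wjp_ctt/10766477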

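2. Background

For details on fully connected neural networks, see my previous post, "CS231n Neural Networks", which covers building a network, computing gradients, regularization, and other basics.

Our program uses dropout for regularization and Adam for adaptive learning rates.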
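The layer code in this post is cut off partway through its dropout line, so the following is only a minimal sketch of inverted dropout as it is commonly written, not the author's exact code. The function names, the `rng` argument, and the reading of `p` as the probability of keeping a unit are all assumptions made for illustration.

```python
import numpy as np


def dropout_forward(x, p, training, rng=None):
    """Inverted dropout (hypothetical helper).

    p is assumed to be the probability of KEEPING a unit.
    Returns the output and the mask needed for the backward pass.
    """
    if not training:
        # At test time activations pass through unchanged.
        return x, np.ones_like(x)
    rng = np.random.default_rng() if rng is None else rng
    # Each entry is 0 (dropped) or 1/p (kept and rescaled).
    mask = (rng.random(x.shape) < p) / p
    return x * mask, mask


def dropout_backward(dout, mask):
    # Gradients flow only through the units that were kept.
    return dout * mask


x = np.ones((4, 5))
out, mask = dropout_forward(x, p=0.5, training=True, rng=np.random.default_rng(0))
dx = dropout_backward(np.ones_like(out), mask)
```

Rescaling by 1/p during training keeps the expected activation unchanged, which is why no extra scaling is needed at test time.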

3. Source Code

First we build a single layer of the neural network, stored in layer.py.

# -*- coding: utf-8 -*-
"""
Created on Sun Nov 4 12:52:25 2018

@author: wjp_ctt
"""

import numpy as np


# One layer of the neural network
class LAYER(object):

    def __init__(self, input_dim, output_dim, data):
        """
        Initialize one layer of the neural network
        ---------------------------------------------------
        input_dim  : input dimension
        output_dim : output dimension
        data       : input data
        """
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.input = data
        self.output = np.zeros([data.shape[0], output_dim])
        self.u1 = np.zeros_like(self.output)  # dropout mask
        self.w = 0.01*np.random.rand(self.input_dim, self.output_dim)
        self.b = 0.01 * np.ones(output_dim)
        self.dw = np.zeros_like(self.w)
        self.db = np.zeros_like(self.b)
        self.dsdwh = []  # gradient of the loss w.r.t. this layer's pre-activation
        # first- and second-moment estimates used by Adam
        self.dw_m = np.zeros_like(self.w)
        self.dw_v = np.zeros_like(self.w)
        self.db_m = np.zeros_like(self.b)
        self.db_v = np.zeros_like(self.b)

    def forward(self, training_mode, p):
        temp = np.dot(self.input, self.w)
        temp += self.b
        temp[temp<=0]=0  # activation function: ReLU
        # apply dropout in training mode
        if training_mode:
            self.u1=(np.random.rand(*temp.shape)

Stacking layers of this kind gives a multi-layer neural network. The following code is stored in nn.py.

# -*- coding: utf-8 -*-
"""
Created on Sun Nov 4 14:48:40 2018

@author: wjp_ctt
"""

import numpy as np
import layer
from matplotlib import pylab as plt


# The neural network
class NN(object):

    def __init__(self, num_layers, layer_size, data, labels, learning_rate, p, batch_size, iterations, k, beta1, beta2):
        """
        Initialize the neural network
        num_layers    : number of layers in the network
        layer_size    : size of each layer
        data          : data the network processes, i.e. the network input
        labels        : labels of the input data
        learning_rate : learning rate
        p             : dropout probability
        batch_size    : size of a batch during training
        iterations    : number of training iterations
        k             : learning-rate decay parameter
        beta1         : coefficient of the momentum (first-moment) term in Adam
        beta2         : coefficient of the squared-gradient (second-moment) term in Adam
        """
        self.num_layers = num_layers
        self.layer_size = layer_size
        self.data = data
        self.labels = labels
        self.learning_rate = learning_rate
        self.p = p
        self.batch_size = batch_size
        self.iterations = iterations
        self.k = k
        self.beta1 = beta1
        self.beta2 = beta2
        self.layers = []
        self.socre = []
        self.loss = 0
        self.gradient = []

    # Build each layer of the network

    def add_layer(self):
        for i in range(self.num_layers):
            if i == 0:
                layer_temp=layer.LAYER(self.layer_size[i][0], self.layer_size[i][1], self.data)
                self.layers.append(layer_temp)
            else:
                # run the previous layer once so its output can size this layer's input
                self.layers[i-1].forward(False, self.p)
                input_data=self.layers[i-1].output
                layer_temp=layer.LAYER(self.layer_size[i][0], self.layer_size[i][1], input_data)
                self.layers.append(layer_temp)

    # Build the softmax layer
    def add_softmax_layer(self):
        self.socre=self.layers[-1].output
        self.socre=np.exp(self.socre)
        # divide each row by its sum so that every row is a probability distribution
        self.socre=self.socre/np.tile(np.sum(self.socre,axis=1), (self.layers[-1].output_dim,1)).T

    # Sample a batch for training
    def sample_training_data(self, data, labels):
        batch_index= np.random.randint(0, data.shape[0], self.batch_size)
        batch=data[batch_index]
        batch_labels=labels[batch_index]
        return batch, batch_labels

    # Forward pass

    def forward(self, training_mode):
        for i in range(self.num_layers):
            if i == 0:
                self.layers[i].input = self.data.copy()
            else:
                self.layers[i].input = self.layers[i-1].output.copy()  # the previous layer's output is this layer's input
            if i == self.num_layers-1:
                self.layers[i].forward(False, self.p)  # no dropout on the last layer
            else:
                self.layers[i].forward(training_mode, self.p)
        self.add_softmax_layer()

    # Compute the loss
    def compute_loss(self):
        loss=np.zeros(self.data.shape[0])
        for i in range(self.data.shape[0]):
            # cross-entropy: negative log-probability of the correct class
            loss[i] = -np.log(self.socre[i, self.labels[i]])
        self.loss = np.mean(loss)

    # Backpropagation

    def bp(self):
        for i in range(self.num_layers, 0, -1):
            if i == self.num_layers:
                # gradient of the loss w.r.t. the softmax input: probabilities minus one-hot labels
                dsdo = self.socre.copy()
                for j in range(self.data.shape[0]):
                    dsdo[j, self.labels[j]]-=1
            else:
                # propagate the gradient back through the next layer's weights
                dsdo = np.dot(self.layers[i].dsdwh, self.layers[i].w.T)
            if i != self.num_layers:
                dsdo *= self.layers[i-1].u1  # apply the dropout mask u1
            # ReLU derivative, evaluated at the same pre-activation as forward (bias included)
            dodwh = np.dot(self.layers[i-1].input, self.layers[i-1].w) + self.layers[i-1].b
            dodwh[np.where(dodwh<=0)] = 0
            dodwh[np.where(dodwh>0) ] = 1
            self.layers[i-1].dsdwh = dsdo * dodwh
            self.layers[i-1].db = np.mean(self.layers[i-1].dsdwh, axis = 0)
            self.layers[i-1].dw = np.dot(self.layers[i-1].input.T, self.layers[i-1].dsdwh)/self.data.shape[0]

    # Update the network weights with Adam

    def Adam(self, t):
        for i in range(self.num_layers):
            # update w
            self.layers[i].dw_m = self.beta1*self.layers[i].dw_m +(1-self.beta1)*self.layers[i].dw
            mt = self.layers[i].dw_m/(1-self.beta1**(t+1))  # bias-corrected first moment
            self.layers[i].dw_v = self.beta2*self.layers[i].dw_v +(1-self.beta2)*(self.layers[i].dw**2)
            vt = self.layers[i].dw_v / (1-self.beta2**(t+1))  # bias-corrected second moment
            # note: i is the layer index here, so this factor differs per layer and does not change with t
            alpha = self.learning_rate*np.exp(-(i+1)*self.k)
            self.layers[i].w += - alpha * mt/(np.sqrt(vt)+1e-8)
            # update b
            self.layers[i].db_m = self.beta1*self.layers[i].db_m +(1-self.beta1)*self.layers[i].db
            mt = self.layers[i].db_m/(1-self.beta1**(t+1))
            self.layers[i].db_v = self.beta2*self.layers[i].db_v +(1-self.beta2)*(self.layers[i].db**2)
            vt = self.layers[i].db_v / (1-self.beta2**(t+1))
            self.layers[i].b += - alpha * mt/(np.sqrt(vt)+1e-8)

    # Training

    def training(self, training_data, training_labels):
        total_loss=[]
        for i in range(self.iterations):
            batch, batch_labels = self.sample_training_data(training_data, training_labels)
            self.data = batch
            self.labels = batch_labels
            self.forward(True)
            self.compute_loss()
            self.bp()
            self.Adam(i)
            if np.mod(i,10)==0:
                total_loss.append(self.loss)
            if np.mod(i, 1000)==0:
                print('Steps ',i,' finished. Loss is ', self.loss,' \n')

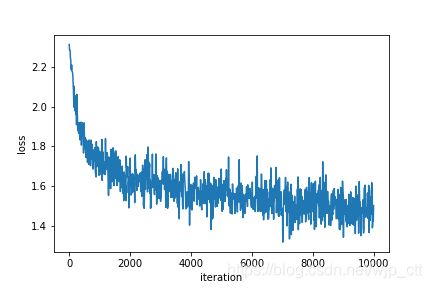

        # plot the loss recorded every 10 iterations and save the figure
        plt.figure(0)
        plt.plot(range(0, self.iterations, 10), total_loss)
        plt.xlabel('iteration')
        plt.ylabel('loss')
        plt.savefig('Loss')

    # Testing
    def testing(self, testing_data, testing_labels):
        self.data= testing_data
        self.labels=testing_labels
        self.forward(False)
        result=np.argmax(self.socre,axis=1)
        correct=np.where(result==testing_labels)
        correct_num=np.size(correct[0])
        accuracy=correct_num/testing_data.shape[0]
        return accuracy
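The softmax, loss, and output-gradient steps in the class above loop over samples and exponentiate the raw scores directly. As a minimal sketch of the same computation in vectorized form, the hypothetical helper below (its name and signature are not part of the original code) subtracts each row's maximum before calling `np.exp`, a standard way to avoid overflow. Unlike `bp` above, it divides the gradient by the batch size immediately instead of in the later weight-gradient step.

```python
import numpy as np


def softmax_cross_entropy(scores, labels):
    """Mean cross-entropy loss and its gradient w.r.t. the scores.

    scores : (N, C) array of class scores
    labels : length-N sequence of integer class indices
    """
    labels = np.asarray(labels)
    n = scores.shape[0]
    # Subtracting the row maximum leaves the softmax unchanged
    # but keeps np.exp from overflowing on large scores.
    shifted = scores - scores.max(axis=1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    # Negative log-probability of the correct class, averaged over the batch.
    loss = -np.mean(np.log(probs[np.arange(n), labels]))
    # Gradient: probabilities minus one-hot labels, averaged over the batch.
    dscores = probs.copy()
    dscores[np.arange(n), labels] -= 1.0
    return loss, dscores / n


loss, dscores = softmax_cross_entropy(np.zeros((3, 4)), [0, 1, 2])
```

With all-zero scores every class gets probability 1/4, so the loss in this usage example equals log(4), which is a handy sanity check for a freshly initialized network with 4 classes (log(10) for CIFAR-10).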

Since we use the network to process CIFAR-10 data, we also need code that reads CIFAR-10. The following code is stored in load_cifar10.py.

# -*- coding: utf-8 -*-
"""
Created on Mon Nov 5 17:14:15 2018

@author: wjp_ctt
"""

import pickle
import random

import numpy as np


# Read one CIFAR-10 batch file
def unpickle(file):
    with open(file, 'rb') as fo:
        data_dict = pickle.load(fo, encoding='bytes')
    return data_dict


def sample_training_data(data, labels, num):
    batch_index= np.random.randint(0, data.shape[0], num)
    batch=data[batch_index].T
    batch_labels=labels[batch_index]
    return batch, batch_labels


def get_validation_set(k_fold, num_validation, training_data):
    num_training=np.size(training_data, 0)
    # draw distinct sample indices and arrange them as one column per fold
    validation_set=random.sample(range(0,num_training),k_fold*num_validation)
    validation_set=np.reshape(validation_set,[num_validation, k_fold])
    return validation_set

# Preprocess the data (standardize each feature; the bias-column step is commented out)
def preprocessing(data):
    mean=np.mean(data,axis=0)
    std=np.std(data,axis=0)
    data=np.subtract(data,mean)
    data=np.divide(data, std)
    # data=np.hstack([data, np.ones((data.shape[0],1))])
    return data

def get_data():
    # Build the training set
    training_data=np.zeros([50000,3072],dtype=np.uint8)
    training_filenames=np.zeros([50000],dtype=list)
    training_labels=np.zeros([50000],dtype=int)  # np.int was removed from recent NumPy releases
    for i in range(0,5):
        file_name='cifar-10-python/cifar-10-batches-py/data_batch_'+str(i+1)
        temp=unpickle(file_name)
        training_data[i*10000+0:i*10000+10000,:]=temp.get(b'data')
        training_filenames[i*10000+0:i*10000+10000]=temp.get(b'filenames')
        training_labels[i*10000+0:i*10000+10000]=temp.get(b'labels')
    print('Training data loaded: 50000 samples from 10 categories!\n')

    # Build the test set
    testing_data=np.zeros([10000,3072],dtype=np.uint8)
    testing_filenames=np.zeros([10000],dtype=list)
    testing_labels=np.zeros([10000],dtype=int)
    file_name='cifar-10-python/cifar-10-batches-py/test_batch'
    temp=unpickle(file_name)
    testing_data=temp.get(b'data')
    testing_filenames=temp.get(b'filenames')
    testing_labels=temp.get(b'labels')
    print('Testing data loaded: 10000 samples from 10 categories!\n')

    # Preprocessing
    training_data=preprocessing(training_data)
    testing_data=preprocessing(testing_data)
    return training_data, training_labels, testing_data, testing_labels
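The loader above standardizes the training and test sets separately, each with its own mean and standard deviation. A common alternative, sketched below under stated assumptions, is to compute the statistics on the training set only and reuse them for the test set. The function names and the small `eps` guard against zero variance are hypothetical and not part of the original code, and the random arrays merely stand in for image data.

```python
import numpy as np


def fit_standardizer(train, eps=1e-8):
    """Per-feature mean and standard deviation of the training data."""
    mean = train.mean(axis=0)
    std = train.std(axis=0) + eps  # eps avoids division by zero for constant features
    return mean, std


def apply_standardizer(data, mean, std):
    """Standardize any split with statistics fitted on the training split."""
    return (data - mean) / std


# Synthetic stand-ins for the flattened image arrays.
rng = np.random.default_rng(0)
train = rng.normal(3.0, 2.0, size=(200, 4))
test = rng.normal(3.0, 2.0, size=(50, 4))

mean, std = fit_standardizer(train)
train_z = apply_standardizer(train, mean, std)
test_z = apply_standardizer(test, mean, std)
```

Reusing the training statistics means a test sample is transformed the same way no matter which other samples it is evaluated alongside.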

Finally, all that remains is demo.py, which reads the data, builds the network, and runs training and testing.

# -*- coding: utf-8 -*-
"""
Created on Sun Nov 4 16:46:09 2018

@author: wjp_ctt
"""

import nn
import load_cifar10

# Read the data and set the network parameters
training_data , training_labels, testing_data, testing_labels = load_cifar10.get_data()
num_layers =2
layer_size =[[3072,100],[100,10]]
learning_rate =1e-4
p =0.5
batch =256
iterations =10000
k =0.01
beta1 =0.9
beta2 =0.999

# Build the network
my_nn = nn.NN(num_layers, layer_size, training_data, training_labels, learning_rate, p, batch, iterations, k, beta1, beta2)
my_nn.add_layer()
my_nn.add_softmax_layer()

# Train the network
my_nn.training(training_data, training_labels)

# Test
accuracy=my_nn.testing(testing_data, testing_labels)
print("testing accuracy is ", accuracy)
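The beta1 and beta2 values set in demo.py are the decay rates of Adam's two running moment estimates. To make their role concrete, here is a minimal standalone sketch of one Adam step with bias correction, applied to a toy quadratic. The `adam_step` helper, its default arguments, and the toy problem are assumptions for illustration and not the author's implementation.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (hypothetical helper); t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad       # running mean of the gradient
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of the squared gradient
    m_hat = m / (1 - beta1 ** t)             # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


# Toy usage: minimize f(x) = x^2, whose gradient is 2x.
x = np.array([5.0])
m = np.zeros_like(x)
v = np.zeros_like(x)
for t in range(1, 2001):
    x, m, v = adam_step(x, 2 * x, m, v, t, lr=0.01)
```

On the very first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly `lr` regardless of the gradient's magnitude.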

4. Program Output

Training data loaded: 50000 samples from 10 categories!

Testing data loaded: 10000 samples from 10 categories!

Steps 0 finished. Loss is 2.3143959116805086

Steps 1000 finished. Loss is 1.6558507311506676

Steps 2000 finished. Loss is 1.7406557371666806

Steps 3000 finished. Loss is 1.5221941630138875

Steps 4000 finished. Loss is 1.486093197932207

Steps 5000 finished. Loss is 1.552217635152904

Steps 6000 finished. Loss is 1.5143278145965866

Steps 7000 finished. Loss is 1.486140799958154

Steps 8000 finished. Loss is 1.521612594954726

Steps 9000 finished. Loss is 1.3407518195092742

testing accuracy is 0.5075

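5. Discussion of Results

The final accuracy is 50.75%, a large improvement over the 38.8% obtained earlier with a linear classifier combined with softmax. The result is still unsatisfying, though, because the raw image is still flattened into a single vector, which ignores the spatial relationships between pixels. This is where the convolutional neural networks we use later can do better.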