Deploying a Kubernetes Cluster (Version 1.18.6)

Deploying a Kubernetes 1.18.6 Cluster with kubeadm

I. Environment Overview

| Hostname | IP address | Role | OS |
|---|---|---|---|
| k8s-master | 192.168.182.150 | k8s-master | CentOS 7.6 |
| k8s-node-1 | 192.168.182.160 | k8s-node | CentOS 7.6 |
| k8s-node-2 | 192.168.182.170 | k8s-node | CentOS 7.6 |

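- Note: the official documentation recommends at least 2 CPU cores and 2 GB of RAM per machine. Also make sure the MAC address and product_uuid are unique on every host (check them with the commands below).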

ip link

cat /sys/class/dmi/id/product_uuid
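Once the values have been collected from all three hosts, a small filter can flag collisions. The following is only a sketch: find_duplicates is a hypothetical helper name, and it assumes the input has one "host value" pair per line (gathered by hand or over ssh).

```shell
# find_duplicates (hypothetical helper): reads "host value" pairs on stdin and
# prints every value that is reported by more than one host.
find_duplicates() {
  awk '{ count[$2]++; hosts[$2] = hosts[$2] " " $1 }
       END { for (v in count) if (count[v] > 1) print v ":" hosts[v] }'
}

# Example input: two hosts share the same (made-up) product_uuid.
printf '%s\n' 'k8s-master AAAA' 'k8s-node-1 BBBB' 'k8s-node-2 AAAA' | find_duplicates
```

Empty output means every value is unique. The same filter works for MAC addresses if the second column holds the MAC instead.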

II. Environment Configuration

- Run the following commands on all three hosts.

1. Configure the Aliyun yum mirror (optional)

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

rm -rf /var/cache/yum && yum makecache && yum -y update && yum -y autoremove

# Note: if your network connection is slow, you can skip the update

2. Install dependencies

yum install -y epel-release conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

3. Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

4. Disable SELinux

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

5. Disable swap

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
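After these two commands it is worth confirming that no swap device is still active, since kubelet refuses to start with swap enabled by default. A minimal sketch, assuming the standard /proc/swaps layout (one header line followed by one line per active device); swap_entries is a hypothetical helper name.

```shell
# swap_entries (hypothetical helper): prints how many active swap devices are
# listed in a /proc/swaps-style file. The first line is the column header.
swap_entries() {
  local file="${1:-/proc/swaps}"
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$file"
}

# Usage on a node (expects 0 after swapoff -a):
#   swap_entries
```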

6. Load kernel modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

modprobe -- br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

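- modprobe ip_vs: LVS, layer-4 load balancing
- modprobe ip_vs_rr: round-robin scheduling
- modprobe ip_vs_wrr: weighted round-robin scheduling
- modprobe ip_vs_sh: source-hashing scheduling
- modprobe nf_conntrack_ipv4: connection tracking
- modprobe br_netfilter: lets iptables filter and port-forward packets that traverse a bridge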
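To confirm that the modules listed above are actually loaded, the module list can be compared against the expected names. This is a sketch under assumptions: missing_modules is a hypothetical helper, and the list file is expected to have the module name in its first column, as /proc/modules and lsmod output do.

```shell
# missing_modules (hypothetical helper): prints each requested module that does
# not appear in the first column of the given module list file.
missing_modules() {
  local list="$1" m
  shift
  for m in "$@"; do
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$list" || echo "$m"
  done
}

# Usage on a node (prints nothing when everything is loaded):
#   missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter
```

On kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so that name would be reported as missing there; the CentOS 7.6 stock kernel still ships it separately.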

7. Set kernel parameters

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

sysctl -p /etc/sysctl.d/k8s.conf

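- vm.overcommit_memory selects the kernel's memory overcommit policy and takes one of three values: 0, 1, or 2.
- overcommit_memory=0: heuristic mode. The kernel checks whether enough memory appears to be available; if so the allocation is allowed, otherwise it fails and the error is returned to the application.
- overcommit_memory=1: the kernel always allows the allocation, regardless of the current memory state.
- overcommit_memory=2: the kernel does not overcommit. Total committed memory is capped at swap plus a configurable percentage (vm.overcommit_ratio) of physical RAM, and allocations beyond that limit fail.
- net.bridge.bridge-nf-call-iptables: passes bridged traffic through the iptables rules
- net.ipv4.tcp_tw_recycle: fast recycling of TIME_WAIT sockets (0 turns it off)
- vm.swappiness: 0 tells the kernel to avoid swapping as far as possible
- vm.panic_on_oom: behavior on out-of-memory; 0 means do not panic and let the OOM killer act
- fs.inotify.max_user_watches: maximum number of inotify watches per user
- fs.file-max: maximum number of open files system-wide
- fs.nr_open: maximum number of open files per process
- net.ipv6.conf.all.disable_ipv6: disables IPv6
- net.netfilter.nf_conntrack_max: maximum number of tracked connections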
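One way to check that the settings above really took effect is to compare the conf file against the live values under /proc/sys. The sketch below is illustrative only: check_sysctl_file is a hypothetical helper, it assumes plain key=value lines without spaces, and it maps a key to its /proc/sys path by replacing dots with slashes (which is not valid for keys containing interface names with dots).

```shell
# check_sysctl_file (hypothetical helper): compares key=value lines in a sysctl
# conf file with the live values under a proc tree and reports differences.
#   $1: conf file, $2: root of the sysctl tree (defaults to /proc/sys)
check_sysctl_file() {
  local conf="$1" root="${2:-/proc/sys}" key want have rc=0
  while IFS='=' read -r key want; do
    case "$key" in ''|'#'*) continue ;; esac      # skip blanks and comments
    have=$(cat "$root/$(printf '%s' "$key" | tr . /)" 2>/dev/null)
    if [ "$have" != "$want" ]; then
      echo "MISMATCH $key: want=$want have=${have:-unset}"
      rc=1
    fi
  done < "$conf"
  return $rc
}

# Usage on a node:
#   check_sysctl_file /etc/sysctl.d/k8s.conf
```

A key reported as unset usually means the owning module (for example br_netfilter or nf_conntrack) was not loaded when sysctl -p ran.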

8. Install Docker

1. Remove old versions first

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

2. Install dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

3. Add the package repository (Aliyun)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

4. Enable the test repositories (optional)

yum-config-manager --enable docker-ce-edge

yum-config-manager --enable docker-ce-test

5. Install

yum makecache fast

yum list docker-ce --showduplicates | sort -r

yum -y install docker-ce-18.09.9-3.el7

6. Start

systemctl start docker

7. Enable at boot

systemctl enable docker

- Configuring the Aliyun registry mirror for Docker is recommended.

- kubeadm also recommends systemd as the cgroup driver, so daemon.json has to be edited as well.

sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://bk6kzfqm.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

systemctl daemon-reload

systemctl restart docker
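A quick way to confirm that the cgroup driver setting made it into the file is a plain text check. This is a rough sketch: uses_systemd_cgroup is a hypothetical helper, and it only inspects the config file, not the running daemon.

```shell
# uses_systemd_cgroup (hypothetical helper): succeeds when the given
# daemon.json requests the systemd cgroup driver via exec-opts.
uses_systemd_cgroup() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -q 'native\.cgroupdriver=systemd' "$file"
}

# Usage on a node:
#   uses_systemd_cgroup && echo "systemd cgroup driver configured"
```

To see what the running daemon actually uses after the restart, docker info --format '{{.CgroupDriver}}' should print systemd.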

9. Install kubeadm and kubelet

1. Configure the package repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Rebuild the yum cache; answer y if prompted to import the GPG key

yum makecache fast

2. Install (pin the version so it matches the v1.18.6 cluster)

yum install -y kubelet-1.18.6 kubeadm-1.18.6 kubectl-1.18.6

systemctl enable --now kubelet

3. Configure command completion

# Install the bash completion package

yum install bash-completion -y

# Set up completion for kubectl and kubeadm; it takes effect at the next login

kubectl completion bash > /etc/bash_completion.d/kubectl

kubeadm completion bash > /etc/bash_completion.d/kubeadm

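10. Pull the required images

- Because of network restrictions in mainland China, the Kubernetes images have to be pulled from mirror sites or from images that users have pushed to Docker Hub.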

kubeadm config images list --kubernetes-version v1.18.6

W0803 07:13:18.584538 11055 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.18.6

k8s.gcr.io/kube-controller-manager:v1.18.6

k8s.gcr.io/kube-scheduler:v1.18.6

k8s.gcr.io/kube-proxy:v1.18.6

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.7

1. Pull the images

- The Aliyun mirror has not yet been updated to v1.18.5, so the images are pulled from Docker Hub instead. The latest version currently synced to Aliyun is v1.18.3.

vim get-k8s-images.sh

#!/bin/bash

# Script For Quick Pull K8S Docker Images

KUBE_VERSION=v1.18.6

PAUSE_VERSION=3.2

CORE_DNS_VERSION=1.6.7

ETCD_VERSION=3.4.3-0

# pull kubernetes images from hub.docker.com

docker pull kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker pull kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker pull kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker pull kubeimage/kube-scheduler-amd64:$KUBE_VERSION

# pull aliyuncs mirror docker images

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION

# retag to k8s.gcr.io prefix

docker tag kubeimage/kube-proxy-amd64:$KUBE_VERSION k8s.gcr.io/kube-proxy:$KUBE_VERSION

docker tag kubeimage/kube-controller-manager-amd64:$KUBE_VERSION k8s.gcr.io/kube-controller-manager:$KUBE_VERSION

docker tag kubeimage/kube-apiserver-amd64:$KUBE_VERSION k8s.gcr.io/kube-apiserver:$KUBE_VERSION

docker tag kubeimage/kube-scheduler-amd64:$KUBE_VERSION k8s.gcr.io/kube-scheduler:$KUBE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION k8s.gcr.io/coredns:$CORE_DNS_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION

# untag origin tag, the images won't be delete.

docker rmi kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-scheduler-amd64:$KUBE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION
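The script above repeats the same pull, tag, and remove steps for every image. As a sketch of a more compact layout, the steps could be generated from a list of source and target names. retag_commands is a hypothetical helper; it only prints the docker commands so they can be reviewed before being piped to a shell.

```shell
# retag_commands (hypothetical helper): reads "source-image target-image"
# pairs on stdin and prints the docker commands that pull the source,
# retag it under the target name, and drop the original tag.
retag_commands() {
  local src dst
  while read -r src dst; do
    [ -n "$src" ] || continue
    echo "docker pull $src"
    echo "docker tag $src $dst"
    echo "docker rmi $src"
  done
}

# Example with one image from the script above; append "| sh" to execute.
KUBE_VERSION=v1.18.6
echo "kubeimage/kube-proxy-amd64:$KUBE_VERSION k8s.gcr.io/kube-proxy:$KUBE_VERSION" | retag_commands
```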

2. Export the images on the master

If your network is poor, you can import my image bundle manually instead:

Link: https://pan.baidu.com/s/1XmjnDy5ZkLcYmz3_-hqeMw

Access code: vknk

docker save $(docker images | grep -v REPOSITORY | awk 'BEGIN{OFS=":";ORS=" "}{print $1,$2}') -o k8s-1.18.6-images.tar

3. Import the images on the worker nodes

docker image load -i k8s-1.18.6-images.tar

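III. Initialize the Cluster

- Unless stated otherwise, run the following commands on k8s-master.

1. Initialize the cluster with kubeadm init (remember to change the apiserver address to this host's IP)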

kubeadm init --kubernetes-version=v1.18.6 --apiserver-advertise-address=192.168.182.150 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

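- --kubernetes-version=v1.18.6: pins the control-plane version so that kubeadm starts from the images downloaded earlier

- --pod-network-cidr=10.244.0.0/16: the IP range for Pods. Pod-to-Pod networking here uses flannel, whose manifest expects 10.244.0.0/16.

- --service-cidr=10.1.0.0/16: the IP range for Services

- On success, kubeadm prints a join command similar to the one below. Do not run it yet; just record it.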

kubeadm join 192.168.120.128:6443 --token duz8m8.njvafly3p2jrshfx --discovery-token-ca-cert-hash sha256:60e15ba0f562a9f29124914a1540bd284e021a37ebdbcea128f4e257e25002db
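The Pod CIDR, the Service CIDR, and the host network should not overlap with one another. The following sketch checks two IPv4 CIDRs for overlap using shell arithmetic; the function names are hypothetical, and it assumes well-formed a.b.c.d/n input and a shell with 64-bit arithmetic (bash on CentOS 7 qualifies).

```shell
# ip_to_int: converts a dotted IPv4 address to its integer value.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range: prints "first last" (as integers) for an a.b.c.d/n block.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}" base size start
  base=$(ip_to_int "$ip")
  size=$(( 1 << (32 - bits) ))
  start=$(( base / size * size ))
  echo "$start $(( start + size - 1 ))"
}

# cidrs_overlap: succeeds when the two CIDR blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Check the ranges used in this guide.
if cidrs_overlap 10.244.0.0/16 10.1.0.0/16; then
  echo "pod and service CIDRs overlap"
else
  echo "pod and service CIDRs are disjoint"
fi
```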

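2. Configure kubectl for the users who need it

# Point kubectl at the admin kubeconfig through an environment variable

export KUBECONFIG=/etc/kubernetes/admin.conf

# kubectl reads the kubeconfig at $HOME/.kube/config automatically on every run (default behavior)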

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

3. Cluster networking (choose one of the options)

1. Install the flannel network

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

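# Note: make sure the network address in the manifest matches the one used at cluster init, and check that the images can be pulled

2. Install the Pod network (using the Qiniu mirror)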

curl -o kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

sed -i "s/quay.io\/coreos\/flannel/quay-mirror.qiniu.com\/coreos\/flannel/g" kube-flannel.yml

kubectl apply -f kube-flannel.yml

rm -f kube-flannel.yml

- Use the command below to confirm that all Pods reach the Running state; this can take quite a while.

kubectl get pod --all-namespaces -o wide

3. Install the calico network (recommended)

wget https://docs.projectcalico.org/v3.15/manifests/calico.yaml

vim calico.yaml

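## Search for the IP address 192 (around line 3580); this should be the commented-out CALICO_IPV4POOL_CIDR value. Set it to the Pod CIDR used at init, remove the comment markers, then save and exit

kubectl apply -f calico.yaml ## apply the manifest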

4. Add worker nodes to the Kubernetes cluster

- On k8s-node-1 and k8s-node-2, run the join command that kubeadm init printed earlier on k8s-master

kubeadm join 192.168.182.150:6443 --token 1larur.w0l80fztn9lb5r8j \
    --discovery-token-ca-cert-hash sha256:01fee86a181f25894dc20991ad832b5f09fb4bda29f9b8a19a6c3c9ced638f75

- Note: if you did not record the join command, you can regenerate it as follows

kubeadm token create --print-join-command --ttl=0
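When the join command is copied between terminals, a truncated token is a common cause of join failures. kubeadm bootstrap tokens have the form of 6 lowercase alphanumeric characters, a dot, then 16 more. A minimal format check, with valid_bootstrap_token as a hypothetical helper name:

```shell
# valid_bootstrap_token (hypothetical helper): succeeds when the argument
# matches the kubeadm bootstrap token format [a-z0-9]{6}.[a-z0-9]{16}.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

if valid_bootstrap_token "1larur.w0l80fztn9lb5r8j"; then
  echo "token format looks valid"
fi
```

This only validates the shape of the token; whether it still exists on the master can be checked with kubeadm token list.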


- Check the status of the nodes in the cluster; it may take a while before they become Ready

kubectl get nodes

5. Enable ipvs mode in kube-proxy

kubectl get configmap kube-proxy -n kube-system -o yaml > kube-proxy-configmap.yaml

sed -i 's/mode: ""/mode: "ipvs"/' kube-proxy-configmap.yaml

kubectl apply -f kube-proxy-configmap.yaml

rm -f kube-proxy-configmap.yaml

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'
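The sed substitution above silently does nothing if the ConfigMap does not contain mode: "" (for example when a mode was already set). A sketch of a variant that reports whether the edit took effect; enable_ipvs_mode is a hypothetical helper operating on the exported YAML file.

```shell
# enable_ipvs_mode (hypothetical helper): rewrites mode: "" to mode: "ipvs" in
# the given file and succeeds only if the file then contains the ipvs setting.
enable_ipvs_mode() {
  local file="$1"
  sed 's/mode: ""/mode: "ipvs"/' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  grep -q 'mode: "ipvs"' "$file"
}

# Usage with the file exported above:
#   enable_ipvs_mode kube-proxy-configmap.yaml || echo "mode not updated, edit by hand"
```

After the kube-proxy Pods have restarted, ipvsadm -Ln (installed earlier) should list virtual servers for the cluster Services.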

IV. Deploy kubernetes-dashboard

1. Deploy the Dashboard on the master

[root@k8s-master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-74c9747c46-cp4dk 1/1 Running 0 143m 10.244.235.193 k8s-master

kube-system calico-node-6xmxh 1/1 Running 0 143m 192.168.152.145 k8s-master

kube-system calico-node-8mbwq 1/1 Running 0 131m 192.168.152.146 k8s-node1

kube-system calico-node-sqtgq 1/1 Running 0 131m 192.168.152.147 k8s-node2

kube-system coredns-6955765f44-n8d8v 1/1 Running 0 3h44m 10.244.235.195 k8s-master

kube-system coredns-6955765f44-p4kd4 1/1 Running 0 3h44m 10.244.235.194 k8s-master

kube-system etcd-k8s-master 1/1 Running 0 3h44m 192.168.152.145 k8s-master

kube-system kube-apiserver-k8s-master 1/1 Running 0 3h44m 192.168.152.145 k8s-master

kube-system kube-controller-manager-k8s-master 1/1 Running 0 3h44m 192.168.152.145 k8s-master

kube-system kube-flannel-ds-amd64-2d88c 1/1 Running 0 131m 192.168.152.147 k8s-node2

kube-system kube-flannel-ds-amd64-69ftl 1/1 Running 0 3h6m 192.168.152.145 k8s-master

kube-system kube-flannel-ds-amd64-9ct9d 1/1 Running 0 131m 192.168.152.146 k8s-node1

kube-system kube-proxy-7p56k 1/1 Running 0 122m 192.168.152.146 k8s-node1

kube-system kube-proxy-mfbs8 1/1 Running 0 122m 192.168.152.145 k8s-master

kube-system kube-proxy-skt9w 1/1 Running 0 122m 192.168.152.147 k8s-node2

kube-system kube-scheduler-k8s-master 1/1 Running 0 3h44m 192.168.152.145 k8s-master

kube-system metrics-server-cfc97f444-ptpqv 1/1 Running 0 7m10s 10.244.36.75 k8s-node1

kubernetes-dashboard dashboard-metrics-scraper-76585494d8-2z5dq 1/1 Running 0 20m 10.244.36.73 k8s-node1

kubernetes-dashboard kubernetes-dashboard-6b86b44f87-ctwth 1/1 Running 0 20m 10.244.169.134 k8s-node2

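2. Download and edit the Dashboard manifest (run on the master)

- Following the official installation instructions, run on the master:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml ## if the download fails, copy the file below directly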

cat > recommended.yaml<<-EOF

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: kubernetes-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: kubernetes-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: kubernetes-metrics-scraper
    spec:
      containers:
        - name: kubernetes-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.0
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

EOF

- Edit recommended.yaml (vi recommended.yaml):

---
# Add a port for direct access
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30008 # added
  selector:
    k8s-app: kubernetes-dashboard
---
# Many browsers reject the auto-generated certificate, so we create our own; comment out the kubernetes-dashboard-certs Secret declaration
#apiVersion: v1
#kind: Secret
#metadata:
#  labels:
#    k8s-app: kubernetes-dashboard
#  name: kubernetes-dashboard-certs
#  namespace: kubernetes-dashboard
#type: Opaque
---
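If you pick a nodePort other than 30008, it has to fall inside the API server's NodePort range, which is 30000-32767 unless --service-node-port-range was changed. A small sketch of that check, with valid_nodeport as a hypothetical helper and the default range assumed:

```shell
# valid_nodeport (hypothetical helper): succeeds when the argument is an
# integer inside the default NodePort range 30000-32767.
valid_nodeport() {
  case "$1" in ''|*[!0-9]*) return 1 ;; esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

if valid_nodeport 30008; then
  echo "30008 is inside the default NodePort range"
fi
```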

3. Create the certificate

mkdir dashboard-certs

cd dashboard-certs/

# Create the namespace

kubectl create namespace kubernetes-dashboard

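## To delete the namespace: kubectl delete namespace kubernetes-dashboard

# Create the private key file

openssl genrsa -out dashboard.key 2048

# Create the certificate signing request

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

# Self-sign the certificate (the validity period is set here; -days has no effect on a plain signing request)

openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# Create the kubernetes-dashboard-certs object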

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard
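As an alternative to the separate key, request, and signing commands, openssl can produce the key and a self-signed certificate in one call. This is a sketch rather than the method used in this guide: make_dashboard_cert is a hypothetical wrapper, and the default validity of 3650 days is an arbitrary choice.

```shell
# make_dashboard_cert (hypothetical helper): writes dashboard.key and a
# self-signed dashboard.crt into the given directory in a single openssl call.
#   $1: output directory, $2: validity in days (default 3650)
make_dashboard_cert() {
  local dir="$1" days="${2:-3650}"
  mkdir -p "$dir" &&
  openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$dir/dashboard.key" -out "$dir/dashboard.crt" \
    -days "$days" -subj '/CN=dashboard-cert' 2>/dev/null
}

# Usage:
#   make_dashboard_cert dashboard-certs
#   openssl x509 -in dashboard-certs/dashboard.crt -noout -enddate
```

The resulting files have the same names as in the steps above, so the kubectl create secret command works unchanged.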

4. Create a dashboard administrator

1. Create the account

vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

# After saving and exiting, run

kubectl create -f dashboard-admin.yaml

2. Grant permissions to the account

vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kubernetes-dashboard

# After saving and exiting, run

kubectl create -f dashboard-admin-bind-cluster-role.yaml

5. Install the Dashboard

# Install

kubectl create -f ~/recommended.yaml

# Check the result

kubectl get pods -A -o wide

[root@k8s-master ~]# kubectl get service -n kubernetes-dashboard -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

dashboard-metrics-scraper ClusterIP 10.1.186.219 <none> 8000/TCP 19m k8s-app=dashboard-metrics-scraper

kubernetes-dashboard NodePort 10.1.60.1 <none> 443:30008/TCP 19m k8s-app=kubernetes-dashboard

6. View and copy the user token

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-9mqvw

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 762b0839-9ba3-4442-b123-e2c2b37a1088

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Ik9VVVQ1YkdpeDA1N1U0OUc4X0RZM2ppUndsNUdUNTRuOU1jZ0RuSUcxd00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tOW1xdnciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNzYyYjA4MzktOWJhMy00NDQyLWIxMjMtZTJjMmIzN2ExMDg4Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.W5DLW4dYX5u33Lg97BYb33eIWL5gTFT5xyZ5uqcPun4ChpMY7lrGA2GuxPhdfWbju7DaFMr7eacgnWoAOQzr_rgrCWCWnT7xmEWpvChbi7VVpyEGrVVqxXVRYIWMrpP5s-TYD8doEjeoxrFDwo4CWX7zv834vhkjnharY5ZBZYEKAw06Eg7d-HFsq8ZTAkeg8wXtuRd_OHvPddAuxmZCnf3Y3yLh6Ak7n3OkWKBupY7pRVUnzDBT2Nk7vv0YrAFm6f6x2Wg-WeE7Wbgwt7cOBMo2fJixfdmo0GDdwv0stCk4kuz-8wXpGtR2nGzEWX7AY5snT9AEabYvrOLIYRy7sQ

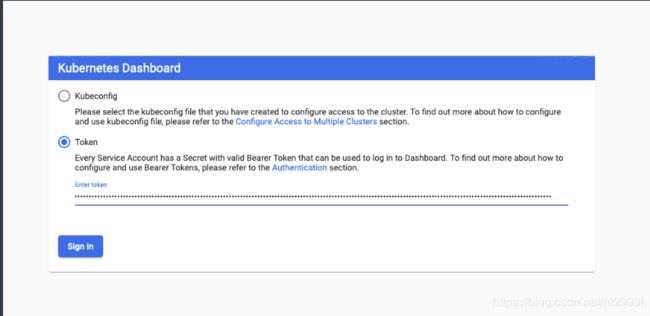
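The token shown by describe secret is a JSON Web Token, so its middle segment can be decoded to confirm which service account it belongs to before pasting it into the login form. A sketch, assuming GNU base64 is available; jwt_payload is a hypothetical helper and it does not verify the signature.

```shell
# jwt_payload (hypothetical helper): prints the decoded JSON payload (second
# dot-separated segment) of a JWT. The signature is not checked.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing '=' padding; restore it before decoding
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage: jwt_payload "<token copied from the output above>"
```

For a token created as described here, the payload should name the dashboard-admin service account in the kubernetes-dashboard namespace.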

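7. Access

- Open https://192.168.182.150:30008, choose Token login, and paste the token you just copied

# If the browser reports a certificate error, delete the resources step by step back to the certificate-creation step and redo it from there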

kubectl delete -f ~/recommended.yaml

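8. Install the metrics-server add-on

(An important component: it supplies the resource metrics that scaling in the cluster depends on.)

1. Brief introduction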

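- heapster has been replaced by metrics-server. To use Kubernetes autoscaling you first need an add-on that collects resource metrics (CPU, memory, ...); the collected values are compared with the targets configured for autoscaling and the number of Pods is adjusted automatically. Earlier Kubernetes versions used heapster for this; after version 1.13 was released heapster was dropped, and metrics-server is the officially recommended replacement.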
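The comparison between collected metrics and configured targets can be illustrated with the replica formula from the Horizontal Pod Autoscaler documentation: desired = ceil(current replicas x current metric / target metric). The sketch below implements just that formula with integer arithmetic; desired_replicas is a hypothetical helper, and the real autoscaler additionally applies a tolerance and min/max replica bounds that are ignored here.

```shell
# desired_replicas (hypothetical helper): ceil(current * metric / target)
#   $1: current replica count
#   $2: current metric value (e.g. average CPU in millicores)
#   $3: target metric value (same unit)
desired_replicas() {
  local current="$1" metric="$2" target="$3"
  echo $(( (current * metric + target - 1) / target ))
}

# 2 replicas averaging 200m CPU against a 100m target
desired_replicas 2 200 100
```

The example call prints 4: the load per Pod is twice the target, so the replica count doubles.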

2. Download the YAML files

Download metrics-server-master.zip; note that the files may differ between versions

Link: https://pan.baidu.com/s/18PsL5wlgmfh1Ci6ZIPk5Dw

Access code: p6na

[root@k8s-master ~]# unzip metrics-server-master.zip ## unpack the uploaded add-on archive

[root@k8s-master ~]# cd metrics-server-master/deploy/1.8+

[root@k8s-master 1.8+]# ll

total 28
-rw-r--r-- 1 root root 384 Apr 28 09:46 aggregated-metrics-reader.yaml
-rw-r--r-- 1 root root 308 Apr 28 09:46 auth-delegator.yaml
-rw-r--r-- 1 root root 329 Apr 28 09:46 auth-reader.yaml
-rw-r--r-- 1 root root 298 Apr 28 09:46 metrics-apiservice.yaml
-rw-r--r-- 1 root root 815 Apr 28 09:46 metrics-server-deployment.yaml
-rw-r--r-- 1 root root 291 Apr 28 09:46 metrics-server-service.yaml
-rw-r--r-- 1 root root 502 Apr 28 09:46 resource-reader.yaml

3. Edit the manifest

vim metrics-server-deployment.yaml ## Note: edit the file in place; only an excerpt is shown here, so do not paste it over the whole file

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6 # changed: image download location
        args: # add the following
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-insecure-tls
          - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp

4. Apply the manifests and check the result

# Install

[root@k8s-master 1.8+]# kubectl create -f .

# Check the result after 1-2 minutes

[root@k8s-master 1.8+]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 256m 12% 2002Mi 52%

k8s-node1 103m 5% 1334Mi 34%

k8s-node2 144m 7% 1321Mi 34%
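Output in this format is easy to post-process, for example to list nodes whose memory usage exceeds a threshold. A minimal sketch, assuming the five-column layout shown above; nodes_over_memory is a hypothetical helper that reads the kubectl top nodes output on stdin.

```shell
# nodes_over_memory (hypothetical helper): reads `kubectl top nodes` output on
# stdin and prints the names of nodes whose MEMORY% exceeds the given limit.
nodes_over_memory() {
  awk -v limit="$1" 'NR > 1 { pct = $5; sub(/%/, "", pct); if (pct + 0 > limit) print $1 }'
}

# Sample taken from the output above; on a live cluster use:
#   kubectl top nodes | nodes_over_memory 50
printf '%s\n' \
  'NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%' \
  'k8s-master 256m 12% 2002Mi 52%' \
  'k8s-node1 103m 5% 1334Mi 34%' \
  'k8s-node2 144m 7% 1321Mi 34%' | nodes_over_memory 50
```

With the sample data only k8s-master is printed, since it is the only node above 50%.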

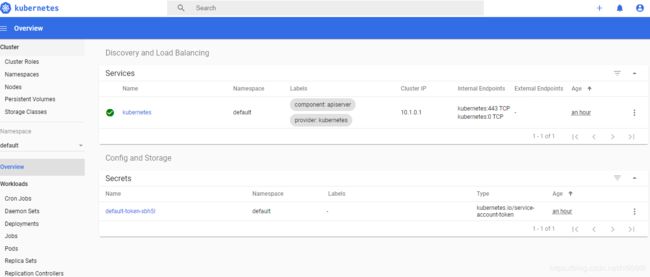

再回到dashboard界面可以看到CPU和内存使用情况了:

9. Export the credentials

[root@k8s-master01 dashboard]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-9d8x7

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 58c5cfc1-64fb-42b8-ac33-aab1743dc4e2

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InpZWlh1OTZndm5TTVgtUVRUc2ZnUnE1bHh6TjM2cXdxaGNjcTdyMWI5RHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tOWQ4eDciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNThjNWNmYzEtNjRmYi00MmI4LWFjMzMtYWFiMTc0M2RjNGUyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.bDWVmPocpKlFpLHLs0X0Axc51FT1ZjioVP8CTL_7_-1Acxpaxi4H54-KMbtKwNjM6ogYRN9jMYWS0zmIVF1NpouEnUFYPNOb05x1jPAOgFyWrfEJRuRXZgFLdy3s79JHfpg-0ZEySDAZIRBYHtvbHLC1eIBZBAm_sTPhQmYg_UxCSWklC1i6ZYEOJ6Q-8HqqCbBNEH31BTv8m59P420g2M3U9Y6IuhN1vPffesB7Y8XMK8qRqgrINGaF_i0z0ascInBQabS0X2TL30eBQfV1Fzl0s1VspTnpYUO2Uq3A1jbZpEl1PJsPzy0BvwpO4PP6aRg8EM3oqCSUvfRu9cQwDQ

[root@k8s-master01 dashboard]# vim /root/.kube/config # add the token entry

- name: admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQxekNDQXIrZ0F3SUJBZ0lVTFFhcXpaaitVc0tRU1BiWVlMRmxDWnhDZVBNd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lURUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEV6QVJCZ05WQkFNVENtdDFZbVZ5CmJtVjBaWE13SUJjTk1qQXdOREU1TURVeE1UQXdXaGdQTWpBM01EQTBNRGN3TlRFeE1EQmFNR2N4Q3pBSkJnTlYKQkFZVEFrTk9NUkV3RHdZRFZRUUlFd2hJWVc1bldtaHZkVEVMTUFrR0ExVUVCeE1DV0ZNeEZ6QVZCZ05WQkFvVApEbk41YzNSbGJUcHRZWE4wWlhKek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweERqQU1CZ05WQkFNVEJXRmtiV2x1Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBeG1MWWxNQXFEeGVreXljWWlvQXUKU2p5VzhiUCtxTzF5bUhDWHVxSjQ3UW9Vd0lSVEFZdVAyTklQeFBza04xL3ZUeDBlTjFteURTRjdYd3dvTjR5cApacFpvRjNaVnV1NFNGcTNyTUFXT1d4VU93REZNZFZaSkJBSGFjZkdMemdOS01FZzRDVDhkUmZBUGxrYVdxNkROCmJKV3JYYW41WGRDUnE2NlpTdU9lNXZXTWhENzNhZ3UzWnBVZWtHQmpqTEdjNElTL2c2VzVvci9LeDdBa0JuVW0KSlE3M2IyWUl3QnI5S1ZxTUFUNnkyRlhsRFBpaWN1S0RFK2tGNm9leG04QTljZ1pKaDloOFZpS0trdnV3bVh5cwpNREtIUzJEektFaTNHeDVPUzdZR1ZoNFJGTGp0VXJuc1h4TVBtYWttRFV1NkZGSkJsWlpkUTRGN2pmSU9idldmCjlRSURBUUFCbzM4d2ZUQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwT0JCWUVGS1pCcWpKRldWejZoV1l1ZkZGdApHaGJnQ05MU01COEdBMVVkSXdRWU1CYUFGQWJLKzBqanh6YUp3R1lGYWtpWVJjZzZENkpmTUEwR0NTcUdTSWIzCkRRRUJDd1VBQTRJQkFRQ05Ra3pueDBlSDU3R2NKZTF5WUJqNkY4YmVzM2VQNGRWcUtqQVZzSkh6S3dRWnpnUjIKcnVpMmdZYTZjdWNMNGRWVllHb05mRzRvdWI0ekJDTUIzZkRyN2FPRFhpcGcrdWx3OFpRZGRaN3RIYnZRTlIyMApTTHhnWnlFYU9MSFdmRVNYNFVJZk1mL3pDaGZ0Yzdhb1NpcUNhMGo2NmY2S3VVUnl6SSsxMThqYnpqK1gwb1d1ClVmdVV3dk5xWHR5ZjlyUTVWQW40bjhiU25nZDBGOXgzNFlyeUNMQ0REOWdBaWR3SDlVM3I3eVVGQ1Rkbm9leEgKSTgyYjRLdHZzT2NGMk5Dd21WZDFBWDNJSEFmMENRMEZSQ21YWjF3aFNxd1lFeVAxTStMMEcxN29CTmU5cmttMwo4U0NyWjczaWtiN0k1NXlVOWRrMjdXbVByb1hXMjAvcXhHeDYKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBeG1MWWxNQXFEeGVreXljWWlvQXVTanlXOGJQK3FPMXltSENYdXFKNDdRb1V3SVJUCkFZdVAyTklQeFBza04xL3ZUeDBlTjFteURTRjdYd3dvTjR5cFpwWm9GM1pWdXU0U0ZxM3JNQVdPV3hVT3dERk0KZFZaSkJBSGFjZkdMemdOS01FZzRDVDhkUmZBUGxrYVdxNkROYkpXclhhbjVYZENScTY2WlN1T2U1dldNaEQ3MwphZ3UzWnBVZWtHQmpqTEdjNElTL2c2VzVvci9LeDdBa0JuVW1KUTczYjJZSXdCcjlLVnFNQVQ2eTJGWGxEUGlpCmN1S0RFK2tGNm9leG04QTljZ1pKaDloOFZpS0trdnV3bVh5c01ES0hTMkR6S0VpM0d4NU9TN1lHVmg0UkZManQKVXJuc1h4TVBtYWttRFV1NkZGSkJsWlpkUTRGN2pmSU9idldmOVFJREFRQUJBb0lCQVFDdkRPRld3QWxjcjl3MQpkaFh0Z0JWWVpBWTgyRHBKRE53bExwUnpscEZsZDVQQUhBS3lSbGR6VmtlYjVJNmNYZ1pucEtYWTZVaDIxYWhxCndldHF1Szl4V2g0WE5jK0gxaklYMlBiQnRPVmI4VVRHeWJsUmdBV0ZoNjBkQmFuNjZtUTRIa0Z6eDBFcFNSNDMKMTZselg3eGpwOTFDRkkxNC9tVExQSkQreDhLYXYxcDVPU1BYQkxhdzR6V1JycmFVSnFrVUtZcmRJUVlkNC9XQQpLNVp3WGpRdklpZzlGclArb2Fnb1kyelFzODFXMmlVd1pXanhkQnV0dXZiQW5mVEc0ZkQvUjc3MnNzUU44dkFvCldDUGpTcTlLckJZQzJYaWd5L2JkSHFFT3lpSmxUQVpaazZLQXlBN0ExbCs5WDFSOWxyUTFPTkpOS1k5WWRybTIKajFudW1WSXhBb0dCQU5sS3B4MW9tQVBQK0RaOGNMdjkwZDlIWm1tTDJZYkppUUtNdEwrUTJLKzdxZHNwemtOaQorb1J2R0NOR0R1U3JZbDZwWjFDMk0xajkxNXJwbWFrZmJmV2NDRWtKenlVRjhSMzUyb2haMUdYeWQzcmkxMWxqCndpcnlmcHl2QnF1SWlKYWR4Rk1UdGRoTmFuRTNFeURrSVJ0UW03YXcyZHppUnNobHkxVXFGMEYvQW9HQkFPbTYKQjFvbnplb2pmS0hjNnNpa0hpTVdDTnhXK2htc1I4akMxSjVtTDFob3NhbmRwMGN3ekJVR05hTDBHTFNjbFRJbwo4WmNNeWdXZU1XbmowTFA3R0syVUwranlyK01xVnFkMk1PRndLanpDOHNXQzhTUEovcC96ZWZkL2ZSUE1PamJyCm8rMExvblUrcXFjTGw1K1JXQ2dJNlA1dFo2VGR5eTlWekFYVUV2Q0xBb0dBQjJndURpaVVsZnl1MzF5YWt5M3gKeTRTcGp3dC9YTUxkOHNKTkh3S1hBRmFMVWJjNUdyN3kvelN5US9HTmJHb1RMbHJqOUxKaFNiVk5kakJrVm9tRgp2QXVYbExYSzQ5NHgrKzJhYjI5d2VCRXQxWGlLRXJmOTFHenp0KytYY0oxMDJuMkNSYnEwUmkxTlpaS1ZDbGY4CmNPdnNndXZBWVhFdExJT2J6TWxraFkwQ2dZRUEyUnFmOGJLL3B4bkhqMkx5QStYT3lMQ1RFbmtJWUFpVHRYeWsKbTI0MzFGdUxqRW9FTkRDem9XUGZOcnFlcUVZNm9CbEFNQnNGSFNyUW81ZW1LVWk0cDZQYXpQdUJQZlg2QUJ2ZApVOHNvc01BMVdocERmQWNKcWZJei9SNURSTHlUNXFnRDRSREptemJXdGN3aXoybm5CV2toWkJTa0RaU29SQlBpCkxCZk9iL2tDZ1lFQXk1ZS9MaXgzSzVvdHh
GRC8xVVV0cGc2dEJqMksxVkg5bDJJWFBidmFKMjZQYnZWYkEwQTUKM0Z5UmZnSTlZTTc3T3QxbTY0ZlRTV21YdTJKU0JpM3FFQ2xic3FRT2taZXZ1V2VsSVY5WnhWblc5NVMzMHVuUwp0ZEk3ZDVTUm1OSUpWK0l1Mk9IRGxkYXN4TzJmcVFoTnVxSFRiVStvNW42ZCtUUlpXVTdpN0drPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

token: JSUzI1NiIsImtpZCI6Ikg5dThGMmc0c1ZBOTVkajVjMGRlb2poZjJMaExDSFp1T1NJWTdobkYtWmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTRsYzkyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiNjc2MGRkZi1kN2FhLTRlZjctYWZkOS05YzA0ZThlMWE5NTQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.XCA6-Wo7q8tJY8td1PRGkruvuNOmtHenjzyToRq5fJjGmWjdLspMDRvDul7YjMeY5eNuhcMG1cJgnyTZZW4gypIiVK1cAtvNR-U4oS0Vv8PqknZdc5-U1ftjIUeayH33tPCAgj-rui31CTwg26s0Z0B312XHF6tLOZZYxkavd1zYVt7DJaJcJpVsC1yaagoLBTjrfpV42N2s49QxnXMaQwYJGy2vowbLcxekdOV2h-7Hv63DxqBRoFYNx_DawN2m3JFfIyQMP7lwENXvNK76wnY2boO8asbIS92V4poLnc9v0r4gtV80dFp3558_XYBWhnZq-_klFHsfxJ0Opt_iEA

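# Mind the format: indent token with 4 spaces so that it lines up with the keys above, and put one space before the long token value

[root@k8s-master01 dashboard]# cp /root/.kube/config /data/dashboard/k8s-dashboard.kubeconfig # create the directory first if it does not exist

[root@k8s-master01 dashboard]# sz k8s-dashboard.kubeconfig # transfer the file to your workstation and log in with it; the command depends on your terminal tool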