UFLDL Exercise: Convolution and Pooling

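This section covers the two core operations of a CNN: convolution and pooling. I won't repeat what the two operations are for or how they work. Unfortunately, the tutorial does not explain backpropagation for CNNs. The idea is the same as for an ordinary multilayer neural network, but implementing it for a CNN still takes a newcomer some time to work out.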

STEP 2: Implement and test convolution and pooling

cnnConvolve.m

function convolvedFeatures = cnnConvolve(patchDim, numFeatures, images, W, b, ZCAWhite, meanPatch)

%cnnConvolve Returns the convolution of the features given by W and b with

%the given images

%

% Parameters:

% patchDim - patch (feature) dimension

% numFeatures - number of features

% images - large images to convolve with, matrix in the form

% images(r, c, channel, image number)

% W, b - W, b for features from the sparse autoencoder

% ZCAWhite, meanPatch - ZCAWhitening and meanPatch matrices used for

% preprocessing

%

% Returns:

% convolvedFeatures - matrix of convolved features in the form

% convolvedFeatures(featureNum, imageNum, imageRow, imageCol)

numImages = size(images, 4);

imageDim = size(images, 1);

imageChannels = size(images, 3);

convolvedFeatures = zeros(numFeatures, numImages, imageDim - patchDim + 1, imageDim - patchDim + 1);

% Instructions:

% Convolve every feature with every large image here to produce the

% numFeatures x numImages x (imageDim - patchDim + 1) x (imageDim - patchDim + 1)

% matrix convolvedFeatures, such that

% convolvedFeatures(featureNum, imageNum, imageRow, imageCol) is the

% value of the convolved featureNum feature for the imageNum image over

% the region (imageRow, imageCol) to (imageRow + patchDim - 1, imageCol + patchDim - 1)

%

% Expected running times:

% Convolving with 100 images should take less than 3 minutes

% Convolving with 5000 images should take around an hour

% (So to save time when testing, you should convolve with fewer images, as

% described earlier)

% -------------------- YOUR CODE HERE --------------------

% Precompute the matrices that will be used during the convolution. Recall

% that you need to take into account the whitening and mean subtraction

% steps

% The formula given in the exercise yields the two variables below, which are used during the convolution

WT = W * ZCAWhite;

add = b - W * ZCAWhite * meanPatch;

% --------------------------------------------------------

convolvedFeatures = zeros(numFeatures, numImages, imageDim - patchDim + 1, imageDim - patchDim + 1);

for imageNum = 1:numImages

for featureNum = 1:numFeatures

% convolution of image with feature matrix for each channel

convolvedImage = zeros(imageDim - patchDim + 1, imageDim - patchDim + 1);

for channel = 1:imageChannels

% Obtain the feature (patchDim x patchDim) needed during the convolution

% ---- YOUR CODE HERE ----

% WT has size (numFeatures, patchDim*patchDim*imageChannels). Each row holds

% the per-channel weights back to back: the first patchDim*patchDim entries

% are the R-channel weights, the next patchDim*patchDim entries are the

% G-channel weights, and so on. Slice out the current channel and reshape it

% into a patchDim x patchDim feature.

feature = reshape(WT(featureNum, patchDim*patchDim*(channel-1)+1 : patchDim*patchDim*channel), patchDim, patchDim);

% ------------------------

% Flip the feature matrix because of the definition of convolution, as explained later

feature = flipud(fliplr(squeeze(feature)));

% Obtain the image

im = squeeze(images(:, :, channel, imageNum));

% Convolve "feature" with "im", adding the result to convolvedImage

% be sure to do a 'valid' convolution

% ---- YOUR CODE HERE ----

convolvedImage = convolvedImage + conv2(im, feature, 'valid'); % use MATLAB's conv2 with 'valid'; the per-channel results are summed to give the final convolution

% ------------------------

end

% Add the bias unit (already corrected for the mean subtraction)

% Then, apply the sigmoid function to get the hidden activation

% ---- YOUR CODE HERE ----

convolvedImage = sigmoid(convolvedImage + add(featureNum)); % add the bias, then apply the sigmoid

% ------------------------

% The convolved feature is the sum of the convolved values for all channels

convolvedFeatures(featureNum, imageNum, :, :) = convolvedImage;

end

end

end

function sigm = sigmoid(x)

sigm = 1 ./ (1 + exp(-x));

end
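
The two tricks used in cnnConvolve.m can be checked on toy data. The following is a minimal sketch in plain Python (not the exercise's MATLAB), under stated assumptions: the helpers `matvec`, `matmul`, `conv2_valid`, and `flip` are hypothetical stand-ins written for this illustration, and the 2x2 matrices are made-up values, not the STL-10 features. Part 1 checks that folding whitening and mean subtraction into `WT` and `add` gives the same activations as preprocessing the patch first. Part 2 checks that a true convolution with a flipped kernel equals the sliding dot product that the autoencoder weights expect.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    """Matrix-matrix product for matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def conv2_valid(im, k):
    """True 2-D convolution restricted to the 'valid' region (kernel is flipped)."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(im) - kh + 1, len(im[0]) - kw + 1
    return [[sum(im[r + i][c + j] * k[kh - 1 - i][kw - 1 - j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def flip(k):
    """Flip a kernel in both directions, like flipud(fliplr(k))."""
    return [row[::-1] for row in k[::-1]]

# Part 1: fold whitening and mean subtraction into the weights (toy values).
W = [[0.5, -1.0], [2.0, 0.25]]
zca = [[1.5, 0.2], [0.2, 0.8]]
mean_patch = [0.3, 0.7]
b = [0.1, -0.2]
x = [1.0, 2.0]

# Two-step path: preprocess the patch, then apply W and b.
whitened = matvec(zca, [xi - mi for xi, mi in zip(x, mean_patch)])
two_step = [sigmoid(a + bi) for a, bi in zip(matvec(W, whitened), b)]

# Folded path: WT = W * ZCAWhite, add = b - WT * meanPatch, applied to the raw patch.
WT = matmul(W, zca)
add = [bi - t for bi, t in zip(b, matvec(WT, mean_patch))]
folded = [sigmoid(a + ai) for a, ai in zip(matvec(WT, x), add)]

# Part 2: convolution with a flipped kernel equals the sliding dot product.
im = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k = [[1.0, 2.0], [3.0, 4.0]]
corr = [[sum(im[r + i][c + j] * k[i][j] for i in range(2) for j in range(2))
         for c in range(2)]
        for r in range(2)]
conv = conv2_valid(im, flip(k))
```

In this sketch `folded` matches `two_step` up to rounding, and `conv` matches `corr`, whereas `conv2_valid(im, k)` without the flip does not. That is why the feature is flipped before it is passed to conv2.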

cnnPool.m

function pooledFeatures = cnnPool(poolDim, convolvedFeatures)

%cnnPool Pools the given convolved features

%

% Parameters:

% poolDim - dimension of pooling region

% convolvedFeatures - convolved features to pool (as given by cnnConvolve)

% convolvedFeatures(featureNum, imageNum, imageRow, imageCol)

%

% Returns:

% pooledFeatures - matrix of pooled features in the form

% pooledFeatures(featureNum, imageNum, poolRow, poolCol)

%

numImages = size(convolvedFeatures, 2);

numFeatures = size(convolvedFeatures, 1);

convolvedDim = size(convolvedFeatures, 3);

pooledFeatures = zeros(numFeatures, numImages, floor(convolvedDim / poolDim), floor(convolvedDim / poolDim));

% -------------------- YOUR CODE HERE --------------------

% Instructions:

% Now pool the convolved features in regions of poolDim x poolDim,

% to obtain the

% numFeatures x numImages x (convolvedDim/poolDim) x (convolvedDim/poolDim)

% matrix pooledFeatures, such that

% pooledFeatures(featureNum, imageNum, poolRow, poolCol) is the

% value of the featureNum feature for the imageNum image pooled over the

% corresponding (poolRow, poolCol) pooling region

% (see http://ufldl/wiki/index.php/Pooling )

%

% Use mean pooling here.

% -------------------- YOUR CODE HERE --------------------

for imageNum = 1:numImages

for featureNum = 1:numFeatures

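temp = conv2(squeeze(convolvedFeatures(featureNum,imageNum,:,:)), ones(poolDim)/poolDim/poolDim, 'valid'); % convolving with a poolDim x poolDim kernel whose entries are all 1/poolDim^2 (e.g. 2x2 with every entry 1/4) computes the mean of every window, which mimics pooling; note the 'valid' option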

pooledFeatures(featureNum,imageNum,:,:) = temp(1:poolDim:end,1:poolDim:end); % the convolution has stride 1 but pooling has stride poolDim, so sampling the convolution result every poolDim entries gives the pooled features

end

end

end
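
The pooling trick above (average with a box kernel, then keep every poolDim-th entry) can be compared with a direct block mean. This is a minimal sketch in plain Python rather than MATLAB. The helper names are hypothetical and were written for this illustration, and the input is assumed to be square. The test matrix reproduces `reshape(1:64, 8, 8)`, which fills column by column.

```python
def conv2_valid(im, k):
    """True 2-D convolution restricted to the 'valid' region."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(im) - kh + 1, len(im[0]) - kw + 1
    return [[sum(im[r + i][c + j] * k[kh - 1 - i][kw - 1 - j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def mean_pool_via_conv(m, p):
    """Average every p x p window with a box kernel, then sample with stride p."""
    box = [[1.0 / (p * p)] * p for _ in range(p)]
    dense = conv2_valid(m, box)               # one mean per stride-1 window
    return [row[::p] for row in dense[::p]]   # keep the non-overlapping windows

def mean_pool_direct(m, p):
    """Mean over non-overlapping p x p blocks of a square matrix."""
    n = len(m) // p
    return [[sum(m[r * p + i][c * p + j] for i in range(p) for j in range(p)) / (p * p)
             for c in range(n)]
            for r in range(n)]

# Column-major 8x8 matrix holding 1..64, like reshape(1:64, 8, 8) in MATLAB.
test_matrix = [[float(r + 1 + 8 * c) for c in range(8)] for r in range(8)]

via_conv = mean_pool_via_conv(test_matrix, 4)
direct = mean_pool_direct(test_matrix, 4)
```

With a pool size of 4 the kernel entries (1/16) are exact in floating point, so both paths agree exactly. For pool sizes such as 19 the two paths may differ by rounding, so a tolerance-based comparison is the safer check. The convolution route also computes every stride-1 window and discards most of them, which is acceptable for an exercise of this size.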

checkConvolveAndPool.m

imageDim = 64; % image dimension

imageChannels = 3; % number of channels (rgb, so 3)

patchDim = 8; % patch dimension

numPatches = 50000; % number of patches

visibleSize = patchDim * patchDim * imageChannels; % number of input units

outputSize = visibleSize; % number of output units

hiddenSize = 400; % number of hidden units

epsilon = 0.1; % epsilon for ZCA whitening

poolDim = 19; % dimension of pooling region

optTheta = zeros(2*hiddenSize*visibleSize+hiddenSize+visibleSize, 1);

ZCAWhite = zeros(visibleSize, visibleSize);

meanPatch = zeros(visibleSize, 1);

load STL10Features.mat;

W = reshape(optTheta(1:visibleSize * hiddenSize), hiddenSize, visibleSize);

b = optTheta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);

load stlTrainSubset.mat;

convImages = trainImages(:, :, :, 1:8);

% NOTE: Implement cnnConvolve in cnnConvolve.m first!

convolvedFeatures = cnnConvolve(patchDim, hiddenSize, convImages, W, b, ZCAWhite, meanPatch);

%% STEP 2b: Checking your convolution

% To ensure that you have convolved the features correctly, we have

% provided some code to compare the results of your convolution with

% activations from the sparse autoencoder

% For 1000 random points

for i = 1:1000

featureNum = randi([1, hiddenSize]);

imageNum = randi([1, 8]);

imageRow = randi([1, imageDim - patchDim + 1]);

imageCol = randi([1, imageDim - patchDim + 1]);

patch = convImages(imageRow:imageRow + patchDim - 1, imageCol:imageCol + patchDim - 1, :, imageNum);

patch = patch(:);

patch = patch - meanPatch;

patch = ZCAWhite * patch;

features = feedForwardAutoencoder(optTheta, hiddenSize, visibleSize, patch);

if abs(features(featureNum, 1) - convolvedFeatures(featureNum, imageNum, imageRow, imageCol)) > 1e-9

fprintf('Convolved feature does not match activation from autoencoder\n');

fprintf('Feature Number : %d\n', featureNum);

fprintf('Image Number : %d\n', imageNum);

fprintf('Image Row : %d\n', imageRow);

fprintf('Image Column : %d\n', imageCol);

fprintf('Convolved feature : %0.5f\n', convolvedFeatures(featureNum, imageNum, imageRow, imageCol));

fprintf('Sparse AE feature : %0.5f\n', features(featureNum, 1));

error('Convolved feature does not match activation from autoencoder');

end

end

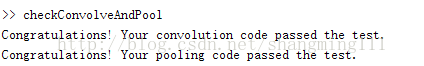

disp('Congratulations! Your convolution code passed the test.');

%% STEP 2c: Implement pooling

% Implement pooling in the function cnnPool in cnnPool.m

% NOTE: Implement cnnPool in cnnPool.m first!

pooledFeatures = cnnPool(poolDim, convolvedFeatures);

%% STEP 2d: Checking your pooling

% To ensure that you have implemented pooling, we will use your pooling

% function to pool over a test matrix and check the results.

testMatrix = reshape(1:64, 8, 8);

expectedMatrix = [mean(mean(testMatrix(1:4, 1:4))) mean(mean(testMatrix(1:4, 5:8))); ...

mean(mean(testMatrix(5:8, 1:4))) mean(mean(testMatrix(5:8, 5:8))); ];

testMatrix = reshape(testMatrix, 1, 1, 8, 8);

pooledFeatures = squeeze(cnnPool(4, testMatrix));

if ~isequal(pooledFeatures, expectedMatrix)

disp('Pooling incorrect');

disp('Expected');

disp(expectedMatrix);

disp('Got');

disp(pooledFeatures);

else

disp('Congratulations! Your pooling code passed the test.');

end
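
The sizes used in checkConvolveAndPool.m follow from two small formulas. This is a short sketch in plain Python rather than MATLAB, and the function names are hypothetical and only used for this illustration: a 'valid' convolution shrinks each side to imageDim - patchDim + 1, and pooling divides that by poolDim, rounding down.

```python
def conv_output_dim(image_dim, patch_dim):
    """Side length after a 'valid' convolution."""
    return image_dim - patch_dim + 1

def pooled_dim(convolved_dim, pool_dim):
    """Side length after non-overlapping pooling (floor division)."""
    return convolved_dim // pool_dim

# Values taken from checkConvolveAndPool.m.
image_dim, patch_dim, pool_dim, hidden_size = 64, 8, 19, 400

convolved_dim = conv_output_dim(image_dim, patch_dim)  # 64 - 8 + 1 = 57
pooled = pooled_dim(convolved_dim, pool_dim)           # 57 // 19 = 3
pooled_values_per_image = hidden_size * pooled * pooled
```

With these settings 57 is an exact multiple of 19, so each feature map is reduced to a 3 x 3 grid with no rows or columns left over.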