Detectron 保存faster rcnn 测试结果,类别 置信度 坐标

由于项目需要,要求获取每张图片中每个box的类别、置信度得分和bbox的坐标信息。

思路在inference阶段,每infer一张图片就新开一个txt文件,txt文件的每一行代表一个bbox得检测信息包括bbox的类别 置信度 和四个坐标值

需要修改两个文件

1,修改 detectron-master/detectron/utils/vis.py 文件

2,修改 detectron-master/tools/infer_simple.py 文件

对于detectron-master/detectron/utils/vis.py 文件主要修改vis_one_image()函数,添加了一个参数用于传入txt的文件名字。

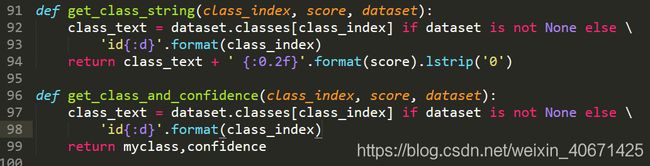

另外新添加一个函数get_class_and_confidence用于获取类别和置信度,该函数放在get_class_string杉树下面 如下图:

函数get_class_and_confidence在函数vis_one_image中调用获取类别和置信度得分,修改后的vis_one_image函数如下

def vis_one_image(

im, im_name, output_dir, boxes, segms=None, keypoints=None, thresh=0.9,

kp_thresh=2, dpi=200, box_alpha=0.0, dataset=None, show_class=False,

ext='pdf', out_when_no_box=False,tested_txt=None):

"""Visual debugging of detections."""

assert not (tested_txt==None),"please give the output full txt name"

print("save detect reselt in : %s",tested_txt)

save_to_txt = open(tested_txt,'w',encoding='utf-8')

if not os.path.exists(output_dir):

os.makedirs(output_dir)

if isinstance(boxes, list):

boxes, segms, keypoints, classes = convert_from_cls_format(

boxes, segms, keypoints)

if (boxes is None or boxes.shape[0] == 0 or max(boxes[:, 4]) < thresh) and not out_when_no_box:

return

dataset_keypoints, _ = keypoint_utils.get_keypoints()

if segms is not None and len(segms) > 0:

masks = mask_util.decode(segms)

color_list = colormap(rgb=True) / 255

kp_lines = kp_connections(dataset_keypoints)

cmap = plt.get_cmap('rainbow')

colors = [cmap(i) for i in np.linspace(0, 1, len(kp_lines) + 2)]

fig = plt.figure(frameon=False)

fig.set_size_inches(im.shape[1] / dpi, im.shape[0] / dpi)

ax = plt.Axes(fig, [0., 0., 1., 1.])

ax.axis('off')

fig.add_axes(ax)

ax.imshow(im)

if boxes is None:

sorted_inds = [] # avoid crash when 'boxes' is None

else:

# Display in largest to smallest order to reduce occlusion

areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])

sorted_inds = np.argsort(-areas)

mask_color_id = 0

for i in sorted_inds:

bbox = boxes[i, :4]

score = boxes[i, -1]

if score < thresh:

continue

# show box (off by default)

ax.add_patch(

plt.Rectangle((bbox[0], bbox[1]),

bbox[2] - bbox[0],

bbox[3] - bbox[1],

fill=False, edgecolor='g',

linewidth=0.5, alpha=box_alpha))

# print(classes[i],score,bbox)

mycls,confidence = get_class_and_confidence(classes[i], score, dataset)

write_linedata = "class:"+mycls+" "+"score:"+confidence+" "+"xmin:"+bbox[0]+" "+"ymin:"+bbox[1]+" "+"xmax:"+bbox[2]+" "+"ymax:"+bbox[3]

save_to_txt.write(write_linedata + '\n')

if show_class:

ax.text(

bbox[0], bbox[1] - 2,

get_class_string(classes[i], score, dataset),

fontsize=3,

family='serif',

bbox=dict(

facecolor='g', alpha=0.4, pad=0, edgecolor='none'),

color='white')

# show mask

if segms is not None and len(segms) > i:

img = np.ones(im.shape)

color_mask = color_list[mask_color_id % len(color_list), 0:3]

mask_color_id += 1

w_ratio = .4

for c in range(3):

color_mask[c] = color_mask[c] * (1 - w_ratio) + w_ratio

for c in range(3):

img[:, :, c] = color_mask[c]

e = masks[:, :, i]

_, contour, hier = cv2.findContours(

e.copy(), cv2.RETR_CCOMP, cv2.CHAIN_APPROX_NONE)

for c in contour:

polygon = Polygon(

c.reshape((-1, 2)),

fill=True, facecolor=color_mask,

edgecolor='w', linewidth=1.2,

alpha=0.5)

ax.add_patch(polygon)

# show keypoints

if keypoints is not None and len(keypoints) > i:

kps = keypoints[i]

plt.autoscale(False)

for l in range(len(kp_lines)):

i1 = kp_lines[l][0]

i2 = kp_lines[l][1]

if kps[2, i1] > kp_thresh and kps[2, i2] > kp_thresh:

x = [kps[0, i1], kps[0, i2]]

y = [kps[1, i1], kps[1, i2]]

line = plt.plot(x, y)

plt.setp(line, color=colors[l], linewidth=1.0, alpha=0.7)

if kps[2, i1] > kp_thresh:

plt.plot(

kps[0, i1], kps[1, i1], '.', color=colors[l],

markersize=3.0, alpha=0.7)

if kps[2, i2] > kp_thresh:

plt.plot(

kps[0, i2], kps[1, i2], '.', color=colors[l],

markersize=3.0, alpha=0.7)

# add mid shoulder / mid hip for better visualization

mid_shoulder = (

kps[:2, dataset_keypoints.index('right_shoulder')] +

kps[:2, dataset_keypoints.index('left_shoulder')]) / 2.0

sc_mid_shoulder = np.minimum(

kps[2, dataset_keypoints.index('right_shoulder')],

kps[2, dataset_keypoints.index('left_shoulder')])

mid_hip = (

kps[:2, dataset_keypoints.index('right_hip')] +

kps[:2, dataset_keypoints.index('left_hip')]) / 2.0

sc_mid_hip = np.minimum(

kps[2, dataset_keypoints.index('right_hip')],

kps[2, dataset_keypoints.index('left_hip')])

if (sc_mid_shoulder > kp_thresh and

kps[2, dataset_keypoints.index('nose')] > kp_thresh):

x = [mid_shoulder[0], kps[0, dataset_keypoints.index('nose')]]

y = [mid_shoulder[1], kps[1, dataset_keypoints.index('nose')]]

line = plt.plot(x, y)

plt.setp(

line, color=colors[len(kp_lines)], linewidth=1.0, alpha=0.7)

if sc_mid_shoulder > kp_thresh and sc_mid_hip > kp_thresh:

x = [mid_shoulder[0], mid_hip[0]]

y = [mid_shoulder[1], mid_hip[1]]

line = plt.plot(x, y)

plt.setp(

line, color=colors[len(kp_lines) + 1], linewidth=1.0,

alpha=0.7)

save_to_txt.close()

对于detectron-master/tools/infer_simple.py 文件,主要修改main函数,创建了一个文件夹(默认在detectron-master目录一下)output_txts用来存放每一张图片的txt。

修改后的main()函数

def main(args):

logger = logging.getLogger(__name__)

merge_cfg_from_file(args.cfg)

cfg.NUM_GPUS = 1

args.weights = cache_url(args.weights, cfg.DOWNLOAD_CACHE)

assert_and_infer_cfg(cache_urls=False)

assert not cfg.MODEL.RPN_ONLY, \

'RPN models are not supported'

assert not cfg.TEST.PRECOMPUTED_PROPOSALS, \

'Models that require precomputed proposals are not supported'

model = infer_engine.initialize_model_from_cfg(args.weights)

dummy_coco_dataset = dummy_datasets.get_coco_dataset()

if os.path.isdir(args.im_or_folder):

im_list = glob.iglob(args.im_or_folder + '/*.' + args.image_ext)

else:

im_list = [args.im_or_folder]

script_path = os.path.dirname(os.path.abspath(__file__))

txt_path = "{0}/../output_txts/".format(script_path)

if not os.path.exists():

os.makedirs(txt_path)

else:

print("path exists,it should be removed!!")

for i, im_name in enumerate(im_list):

out_name = os.path.join(

args.output_dir, '{}'.format(os.path.basename(im_name) + '.' + args.output_ext)

)

logger.info('Processing {} -> {}'.format(im_name, out_name))

im = cv2.imread(im_name)

timers = defaultdict(Timer)

t = time.time()

with c2_utils.NamedCudaScope(0):

cls_boxes, cls_segms, cls_keyps = infer_engine.im_detect_all(

model, im, None, timers=timers

)

print

logger.info('Inference time: {:.3f}s'.format(time.time() - t))

for k, v in timers.items():

logger.info(' | {}: {:.3f}s'.format(k, v.average_time))

if i == 0:

logger.info(

' \ Note: inference on the first image will be slower than the '

'rest (caches and auto-tuning need to warm up)'

)

#add save txt path

txt_name = im_name.split(".")[0] + '.txt'

save_txt_path = txt_path + txt_name

vis_utils.vis_one_image(

im[:, :, ::-1], # BGR -> RGB for visualization

im_name,

args.output_dir,

cls_boxes,

cls_segms,

cls_keyps,

dataset=dummy_coco_dataset,

box_alpha=0.3,

show_class=True,

thresh=args.thresh,

kp_thresh=args.kp_thresh,

ext=args.output_ext,

out_when_no_box=args.out_when_no_box,

tested_txt=save_txt_path

)