Scraping Sina Weibo search results with a Python crawler and saving them to a local Excel file with pandas

Goal

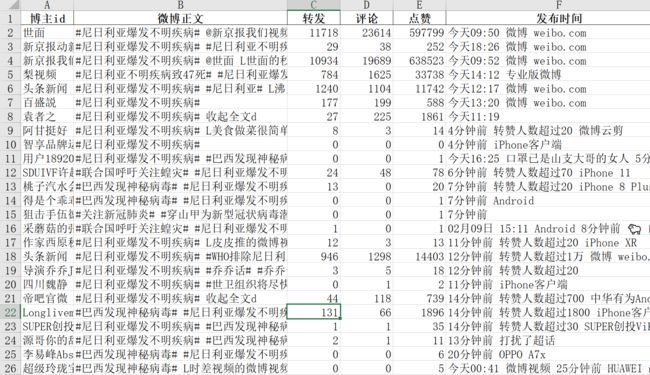

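Given any keyword, search Sina Weibo and collect, for each matching post, the poster's ID (their nickname), the post text, the numbers of reposts, comments, and likes, and the publication time.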

Result

Sending the request

The target site is https://s.weibo.com/

First, set up the request headers:

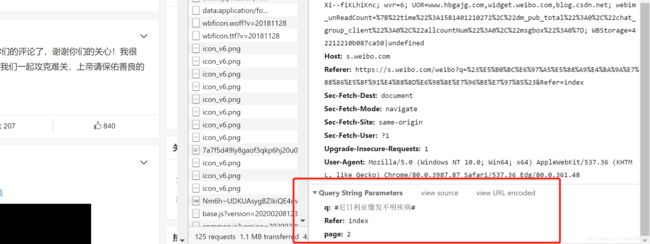

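In the browser's Network tab we can find the request's query-string parameters. Put all of them into the code, URL-encode them, and append them to the base URL to form the full address. Requesting that URL returns the HTML of the search-results page.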

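```python
from urllib.parse import urlencode

import requests

# headers is the request-header dict set up in the previous step
def get_Research(research_Words, page):
    # Query-string parameters copied from the Network tab
    params = {
        'q': research_Words,
        'Refer': 'index',
        'page': str(page),
    }
    url = 'https://s.weibo.com/weibo?' + urlencode(params)
    try:
        response = requests.get(url, headers=headers, timeout=10)
    except requests.RequestException:
        return None
    if response.status_code == 200:
        return response.text
    return None
```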
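To see what the encoded address looks like, here is a minimal sketch using only the standard library. `build_search_url` is a hypothetical helper that mirrors the parameter dict in `get_Research` above. `urlencode` percent-encodes the keyword as UTF-8, so the `#` signs and Chinese characters of a hashtag are safe inside the query string, and `parse_qs` recovers the original values.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_search_url(keyword, page):
    # Hypothetical helper mirroring the params dict in get_Research
    params = {'q': keyword, 'Refer': 'index', 'page': str(page)}
    return 'https://s.weibo.com/weibo?' + urlencode(params)


url = build_search_url('#尼日利亚爆发不明疾病#', 2)
print(url)
# Decoding the query string gives back the original parameter values
print(parse_qs(urlsplit(url).query))
```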

Extracting the information

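Inspecting the page shows that the repost, comment, and like counts sit inside the div with class="card-act", while the post content is in the div with class="card-feed"; both belong to the same div with class="card". So the code first locates each card, then finds the child nodes that hold each piece of information, stores their text in a dict, and returns the list of all these dicts.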
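The card-then-children lookup can be tried without any network request. The sketch below swaps in the standard library's `xml.etree.ElementTree` instead of pyquery and runs on a small, hypothetical, well-formed fragment modelled on the structure just described (real Weibo HTML is not well-formed XML, so this is only illustrative). It also shows why the whole `<p>` has to be read to get the post text: its `<a>` children hold only the links and hashtags.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup imitating the card / card-feed / card-act layout
SAMPLE = """
<body>
  <div class="card">
    <div class="card-feed">
      <a class="name" nick-name="user_a">user_a</a>
      <p node-type="feed_list_content">Hello <a>#topic#</a> world</p>
    </div>
    <div class="card-act">
      <ul><li>收藏</li><li>转发 5</li><li>评论 3</li><li>赞 10</li></ul>
    </div>
  </div>
</body>
"""


def extract_cards(markup):
    root = ET.fromstring(markup)
    results = []
    # Step 1: locate every <div class="card">
    for card in root.findall(".//div[@class='card']"):
        info = {}
        # Step 2: find the child nodes that hold each field
        name = card.find(".//a[@class='name']")
        info['博主id'] = name.get('nick-name') if name is not None else None
        content = card.find(".//p[@node-type='feed_list_content']")
        # itertext() yields the text of the <p>, its children, and their tails
        info['微博正文'] = ''.join(content.itertext()).strip() if content is not None else ''
        act = card.find(".//div[@class='card-act']")
        info['card_act_texts'] = [''.join(li.itertext()) for li in act.iter('li')] if act is not None else []
        results.append(info)
    return results


for record in extract_cards(SAMPLE):
    print(record)
```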

```python
import os
import re

from pyquery import PyQuery as pq

def get_Information(research_Words, page):
    res = []
    html = get_Research(research_Words, page)
    if html is None:  # request failed or returned a non-200 status
        return res
    doc = pq(html)

    # Save the raw HTML for debugging (current_Path is the script's directory)
    with open(os.path.join(current_Path, 'test.txt'), 'w', encoding='utf8') as f:
        f.write(html)

    # Each search result is a <div class="card">
    for li in doc("div[class='card']").items():
        temp_Info_Dict = {}

        ### Poster nickname
        temp_Info_Dict['博主id'] = li('.name').attr('nick-name')

        ### Post text: the expanded version if present, otherwise the short one.
        # Read the whole <p>; its <a> children are only links and hashtags.
        text = li("p[node-type='feed_list_content_full']").text()
        if text == '':
            text = li("p[node-type='feed_list_content']").text()
        temp_Info_Dict['微博正文'] = text

        ### Time & client/device: the links inside <p class="from">
        temp_Info_Dict['发布时间'] = li("p[class='from']>a").text()

        ### Reposts, comments, likes
        # Index 0 of the card-act items is skipped; 1, 2, 3 are repost, comment, like
        for i, item in enumerate(li('.card-act li').items()):
            digits = re.sub(r"\D", "", item.text())
            num = int(digits) if digits else 0
            if i == 1:
                temp_Info_Dict['转发'] = num
            elif i == 2:
                temp_Info_Dict['评论'] = num
            elif i == 3:
                temp_Info_Dict['点赞'] = num

        res.append(temp_Info_Dict)
    return res
```
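One caveat about stripping every non-digit with `re.sub(r"\D", "", ...)`: it assumes the button text holds a plain integer. If Weibo abbreviates large counts, for example as "1.2万" (万 = 10,000), stripping non-digits turns that into 12. The hypothetical helper below is a sketch that assumes this abbreviation format: it returns 0 for a label with no number (such as a bare "转发") and expands 万 and 亿 (10^8).

```python
import re

# Multipliers for Chinese count abbreviations (assumed display format)
UNITS = {'万': 10_000, '亿': 100_000_000}


def parse_count(label):
    """Turn button text such as '转发', '评论 35', or '赞 1.2万' into an int."""
    match = re.search(r'(\d+(?:\.\d+)?)\s*([万亿]?)', label)
    if match is None:
        return 0  # label without a number, e.g. a bare '转发'
    number, unit = match.groups()
    # An empty unit group means no abbreviation, so the multiplier is 1
    return round(float(number) * UNITS.get(unit, 1))


for text in ['转发', '评论 35', '赞 1.2万', '转发 3亿']:
    print(text, '->', parse_count(text))
```

It could replace the digit-stripping and `int` lines inside the counts loop.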

Saving to a local file

A few pandas calls are enough to save the list of dicts:

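```python
import os

import pandas as pd

## Export to Excel
def export_excel(export):
    pf = pd.DataFrame(list(export))
    # Fix the column order; reindex, unlike pf[order], does not raise
    # a KeyError when a column is missing from every record
    order = ['博主id', '微博正文', '转发', '评论', '点赞', '发布时间']
    pf = pf.reindex(columns=order)
    pf.fillna(' ', inplace=True)
    # Writing .xlsx needs openpyxl or XlsxWriter; the with-block saves and closes
    # the file (ExcelWriter.save() and to_excel(encoding=...) are gone in pandas 2.0)
    with pd.ExcelWriter(os.path.join(current_Path, 'name.xlsx')) as writer:
        pf.to_excel(writer, index=False)
```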
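A minimal sketch of the same step that can be checked without writing a file: `records_to_frame` and `save_excel` are hypothetical names. The frame is built from a list of dicts and the column order is enforced with `reindex`, which adds an empty column where selecting columns by a list would raise a `KeyError` (for example, if no card produced a like count). The record below is made up for illustration.

```python
import pandas as pd

COLUMNS = ['博主id', '微博正文', '转发', '评论', '点赞', '发布时间']


def records_to_frame(records):
    # Each dict becomes one row; keys missing from a dict become NaN
    frame = pd.DataFrame(records)
    # Enforce the column order; columns absent from all records are created as NaN
    frame = frame.reindex(columns=COLUMNS)
    # Replace NaN with empty strings so the Excel cells come out blank
    return frame.fillna('')


def save_excel(records, path):
    # Requires an Excel writer engine such as openpyxl or XlsxWriter
    records_to_frame(records).to_excel(path, index=False)


df = records_to_frame([
    {'博主id': 'user_a', '微博正文': 'hello', '转发': 5, '评论': 3, '发布时间': 'sample time'},
])
print(df)
```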

Running the crawler

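All that is left is to call the functions. In practice, though, only the first page of results could be scraped. I debugged it over and over and re-read the page structure several times without finding why page 2 and later came back empty, until I finally realized the cause: Weibo does not show page 2 onward unless you are logged in, and the program did not simulate a login.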

So, to scrape more pages in a simple way, you need to add a simulated login or send cookies that carry a logged-in session.

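```python
def main():
    lis = []
    # Without login only page 1 is available; with a logged-in cookie you could loop:
    # for i in range(1, 10):
    #     lis += get_Information('#尼日利亚爆发不明疾病#', i)
    # Search keyword: hashtag meaning "unknown disease outbreak in Nigeria"
    lis += get_Information('#尼日利亚爆发不明疾病#', 1)
    export_excel(lis)
```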
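A minimal sketch of the cookie approach, assuming you copy the `Cookie` request header from your browser's developer tools while logged in to Weibo; whether s.weibo.com accepts it depends on Weibo's current login checks. `make_session`, `search_urls`, and `fetch_pages` are hypothetical names. A `requests.Session` sends the same headers with every request, and a pause between pages keeps the request rate modest.

```python
import time
from urllib.parse import urlencode

import requests


def make_session(cookie):
    # The Cookie value is copied by hand from a logged-in browser (assumption)
    session = requests.Session()
    session.headers.update({
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
        'Cookie': cookie,
    })
    return session


def search_urls(keyword, pages):
    # One search-results URL per page, numbered from 1
    return ['https://s.weibo.com/weibo?' + urlencode({'q': keyword, 'Refer': 'index', 'page': page})
            for page in range(1, pages + 1)]


def fetch_pages(session, keyword, pages, delay=2.0):
    # Yields the HTML of each page; stops at the first non-200 response
    for url in search_urls(keyword, pages):
        response = session.get(url, timeout=10)
        if response.status_code != 200:
            break
        yield response.text
        time.sleep(delay)
```

Usage would look like `for html in fetch_pages(make_session(cookie), keyword, pages=3): ...`, with each page parsed the same way as in `get_Information`.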

Complete code

```python
import os
import re
from urllib.parse import urlencode

import pandas as pd
import requests
from pyquery import PyQuery as pq

# Directory containing this script; output files are written here
current_Path = os.path.dirname(os.path.abspath(__file__))

base_url = 'https://s.weibo.com/'

# requests fills in Host from the URL, so it is not set here
headers = {
    'Referer': 'https://weibo.com/zzk1996?is_all=1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36 Edg/80.0.361.48',
}

# Search
def get_Research(research_Words, page):
    params = {
        'q': research_Words,
        'Refer': 'index',
        'page': str(page),
    }
    url = base_url + 'weibo?' + urlencode(params)
    try:
        response = requests.get(url, headers=headers, timeout=10)
    except requests.RequestException:
        return None
    if response.status_code == 200:
        return response.text
    return None

# Parse one results page into a list of dicts
def get_Information(research_Words, page):
    res = []
    html = get_Research(research_Words, page)
    if html is None:
        return res
    doc = pq(html)

    # Keep a copy of the raw HTML for debugging
    with open(os.path.join(current_Path, 'test.txt'), 'w', encoding='utf8') as f:
        f.write(html)

    for li in doc("div[class='card']").items():
        temp_Info_Dict = {}
        # Poster nickname
        temp_Info_Dict['博主id'] = li('.name').attr('nick-name')
        # Post text: expanded version first, then the short one
        text = li("p[node-type='feed_list_content_full']").text()
        if text == '':
            text = li("p[node-type='feed_list_content']").text()
        temp_Info_Dict['微博正文'] = text
        # Time & client/device
        temp_Info_Dict['发布时间'] = li("p[class='from']>a").text()
        # Reposts, comments, likes (index 0 of card-act is skipped)
        for i, item in enumerate(li('.card-act li').items()):
            digits = re.sub(r"\D", "", item.text())
            num = int(digits) if digits else 0
            if i == 1:
                temp_Info_Dict['转发'] = num
            elif i == 2:
                temp_Info_Dict['评论'] = num
            elif i == 3:
                temp_Info_Dict['点赞'] = num
        res.append(temp_Info_Dict)
    return res

## Export to Excel
def export_excel(export):
    pf = pd.DataFrame(list(export))
    # Fixed column order; reindex tolerates columns missing from every record
    order = ['博主id', '微博正文', '转发', '评论', '点赞', '发布时间']
    pf = pf.reindex(columns=order)
    pf.fillna(' ', inplace=True)
    # Needs openpyxl or XlsxWriter; the with-block saves and closes the file
    with pd.ExcelWriter(os.path.join(current_Path, 'name.xlsx')) as writer:
        pf.to_excel(writer, index=False)

def main():
    lis = []
    # Without login only page 1 is available; with a logged-in cookie:
    # for i in range(1, 10):
    #     lis += get_Information('#尼日利亚爆发不明疾病#', i)
    lis += get_Information('#尼日利亚爆发不明疾病#', 1)
    export_excel(lis)

if __name__ == '__main__':
    main()
```