Monitoring a MongoDB cluster with mongodb-exporter + Prometheus + Grafana

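1 Create the MongoDB cluster

Create the MongoDB cluster directly from the YAML file below. In this environment only two MongoDB nodes are started. Data is stored on a hostPath volume, so first create the storage directory (hostPath is node-local, so the directory is needed on every node that will run a mongo Pod):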

[root@master ~]# mkdir -p /home/storage/mongo/db/

[root@master mongo]# cat mongo.yaml

apiVersion: v1
kind: Service
metadata:
  name: mongo
  labels:
    app: mongo
spec:
  ports:
  - name: mongo
    port: 27017
    targetPort: 27017
  clusterIP: None
  selector:
    app: mongo
---
apiVersion: v1
kind: Service
metadata:
  name: mongo-service
  labels:
    app: mongo
spec:
  ports:
  - name: mongo-http
    port: 27017
  selector:
    app: mongo
  type: NodePort
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: mongo
spec:
  selector:
    matchLabels:
      app: mongo
  serviceName: "mongo"
  replicas: 2
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: mongo
    spec:
      terminationGracePeriodSeconds: 10
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - mongo
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: mongo
        image: mongo:latest
        command:
        - mongod
        - "--bind_ip_all"
        - "--replSet"
        - rs0
        ports:
        - containerPort: 27017
        volumeMounts:
        - name: mongo-data
          mountPath: /data/db
      volumes:
      - name: mongo-data
        hostPath:
          path: /home/storage/mongo/db

[root@master mongo]# kubectl apply -f mongo.yaml

[root@master mongo]# kubectl get pod
NAME                              READY   STATUS    RESTARTS   AGE
details-v1-74f858558f-rckf4       2/2     Running   6          9d
mongo-0                           2/2     Running   2          22h
mongo-1                           2/2     Running   2          22h
productpage-v1-76589d9fdc-vp6h5   2/2     Running   6          9d
ratings-v1-7855f5bcb9-fd98w       2/2     Running   6          9d
reviews-v1-64bc5454b9-knhcs       2/2     Running   6          9d
reviews-v2-76c64d4bdf-wmsgq       2/2     Running   6          9d
reviews-v3-5545c7c78f-hsw5h       2/2     Running   6          9d

[root@master mongo]# kubectl get svc
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
details         ClusterIP   10.10.179.23    <none>        9080/TCP          9d
kubernetes      ClusterIP   10.10.0.1       <none>        443/TCP           14d
mongo           ClusterIP   None            <none>        27017/TCP         22h
mongo-service   NodePort    10.10.27.148    <none>        27017:31014/TCP   22h
productpage     ClusterIP   10.10.112.221   <none>        9080/TCP          9d
ratings         ClusterIP   10.10.183.94    <none>        9080/TCP          9d
reviews         ClusterIP   10.10.86.216    <none>        9080/TCP          9d
[root@master mongo]#

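As shown above, the mongo-0 and mongo-1 Pods are running and both Services exist, so the MongoDB cluster has been created.

2 Verify that the cluster was created

Use curl against the node IP and the NodePort of mongo-service to check that MongoDB is working: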

[root@master mongo]# curl http://192.168.122.7:31014

It looks like you are trying to access MongoDB over HTTP on the native driver port.

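If the message above appears, MongoDB has started successfully: mongod returns this text when it receives an HTTP request on its native driver port.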
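The same check can be scripted. The following is only a minimal Python sketch, assuming the node IP and NodePort shown in the curl command; the helper names `is_mongod_http_reply` and `probe` are made up for illustration.

```python
import http.client
import urllib.request

# Fragment of the text mongod sends back to an HTTP request on its driver port.
MONGOD_HTTP_HINT = "trying to access MongoDB over HTTP"


def is_mongod_http_reply(body: str) -> bool:
    """Return True if the body looks like mongod's reply to an HTTP request."""
    return MONGOD_HTTP_HINT in body


def probe(url: str, timeout: float = 5.0) -> bool:
    """Fetch the URL and check the reply; False on connection or protocol errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
    except (OSError, http.client.HTTPException):
        return False
    return is_mongod_http_reply(body)
```

Against the cluster above, `probe("http://192.168.122.7:31014")` would be expected to return `True`; the address has to be adjusted for any other environment.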

3 Prepare the mongodb-exporter image

Pull the mongodb-exporter image:

[root@master mongo]# docker pull noenv/mongo-exporter:latest

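4 Create the mongodb-exporter application, Service, ServiceAccount, and ServiceMonitor

Create a Deployment from the mongodb-exporter image. The author of this image set the default MongoDB URI address to 172.17.0.1, so the Deployment must override the URI with the real MongoDB address. Note also that the Deployment must be created in the monitoring namespace, which is the namespace used by kube-prometheus. How to deploy kube-prometheus will be covered in a later article. The YAML file is as follows: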

[root@master mongo]# cat mongo-exporter.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo-exporter
  namespace: monitoring
  labels:
    k8s-app: mongo-exporter
spec:
  selector:
    matchLabels:
      k8s-app: mongo-exporter
  template:
    metadata:
      labels:
        k8s-app: mongo-exporter
    spec:
      containers:
      - name: mongo-exporter
        image: noenv/mongo-exporter:latest
        args: ["--web.listen-address=:9104", "--mongodb.uri", "mongodb://192.168.122.7:31014"]
        ports:
        - containerPort: 9104
          name: http
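The `--mongodb.uri` argument above goes through a node IP and a NodePort. Because the `mongo` Service is headless (`clusterIP: None`) and backs a StatefulSet, each Pod also gets a stable in-cluster DNS name, which could be used instead. The Python sketch below only builds those names; it assumes the Pods live in the `default` namespace and that the cluster domain is `cluster.local`, neither of which the article states, and the function names are hypothetical. The `replicaSet` option is only meaningful once the replica set has actually been initiated, a step this article does not show.

```python
def statefulset_pod_hosts(name, service, namespace="default", replicas=2,
                          port=27017, cluster_domain="cluster.local"):
    """Build the stable DNS host:port of each StatefulSet Pod behind a headless Service.

    namespace and cluster_domain defaults are assumptions, not taken from the article.
    """
    return [
        f"{name}-{i}.{service}.{namespace}.svc.{cluster_domain}:{port}"
        for i in range(replicas)
    ]


def mongodb_uri(hosts, replica_set=None):
    """Join hosts into a mongodb:// URI, optionally naming the replica set."""
    uri = "mongodb://" + ",".join(hosts)
    if replica_set:
        uri += f"/?replicaSet={replica_set}"
    return uri
```

For example, `mongodb_uri(statefulset_pod_hosts("mongo", "mongo")[:1])` yields a URI that targets only `mongo-0` and does not depend on any node's address.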

Create the Deployment:

[root@master mongo]# kubectl apply -f mongo-exporter.yaml

deployment.apps/mongo-exporter created

[root@master mongo]# kubectl get pod -n monitoring
NAME                                  READY   STATUS    RESTARTS   AGE
alertmanager-main-0                   2/2     Running   12         14d
alertmanager-main-1                   2/2     Running   12         14d
alertmanager-main-2                   2/2     Running   12         14d
grafana-77978cbbdc-szzbd              1/1     Running   6          14d
kube-state-metrics-7f6d7b46b4-wnkn6   3/3     Running   18         14d
mongo-exporter-7557899dcc-xpzh5       1/1     Running   0          114s
node-exporter-55259                   2/2     Running   12         14d
node-exporter-rr2bf                   2/2     Running   2          29h
prometheus-adapter-68698bc948-65blv   1/1     Running   6          14d
prometheus-k8s-0                      3/3     Running   19         14d
prometheus-k8s-1                      3/3     Running   19         14d
prometheus-operator-6685db5c6-7dbvj   1/1     Running   6          14d
[root@master mongo]#

Create the Service:

[root@master mongo]# cat mongo-exporter-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: mongo-exporter
  name: mongo-exporter
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 9104
    nodePort: 30017
    targetPort: http
  selector:
    k8s-app: mongo-exporter

[root@master mongo]# kubectl create -f mongo-exporter-service.yaml

service/mongo-exporter created

[root@master mongo]# kubectl describe svc -n monitoring mongo-exporter
Name:                     mongo-exporter
Namespace:                monitoring
Labels:                   k8s-app=mongo-exporter
Annotations:              <none>
Selector:                 k8s-app=mongo-exporter
Type:                     NodePort
IP:                       10.10.84.94
Port:                     http  9104/TCP
TargetPort:               http/TCP
NodePort:                 http  30017/TCP
Endpoints:                10.124.72.5:9104
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
[root@master mongo]#
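With the Service exposed on NodePort 30017, the exporter's metrics page can be fetched directly to confirm that it is producing data before Prometheus scrapes it. The Python sketch below is one possible way to do that; it assumes the exporter serves the default `/metrics` path and that the node IP is the 192.168.122.7 used earlier, and the function names are made up for illustration.

```python
import urllib.request


def parse_metric_names(exposition_text):
    """Collect metric names from Prometheus text-format output."""
    names = set()
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        # A sample line is either `name{labels} value` or `name value`.
        names.add(line.split("{", 1)[0].split(None, 1)[0])
    return names


def fetch_metrics(url, timeout=5.0):
    """Download the raw metrics page from the exporter."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

A call such as `parse_metric_names(fetch_metrics("http://192.168.122.7:30017/metrics"))` returns the set of metric names the exporter currently exposes.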

Create the ServiceAccount:

[root@master mongo]# kubectl create serviceaccount -n monitoring mongo-exporter

serviceaccount/mongo-exporter created

Create the ServiceMonitor:

[root@master mongo]# cat mongo-exporter-serviceMonitor.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: mongo-exporter
  name: mongo-exporter
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: http
  jobLabel: k8s-app
  selector:
    matchLabels:
      k8s-app: mongo-exporter

[root@master mongo]# kubectl apply -f mongo-exporter-serviceMonitor.yaml

servicemonitor.monitoring.coreos.com/mongo-exporter created

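5 Check the Prometheus targets

Once all of the resources above have been created, look for the mongodb-exporter target on the Targets page in Prometheus.

At this point Prometheus is collecting performance data from the MongoDB cluster normally.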

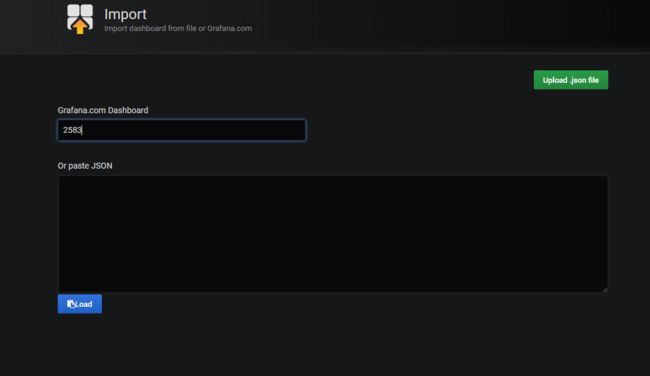

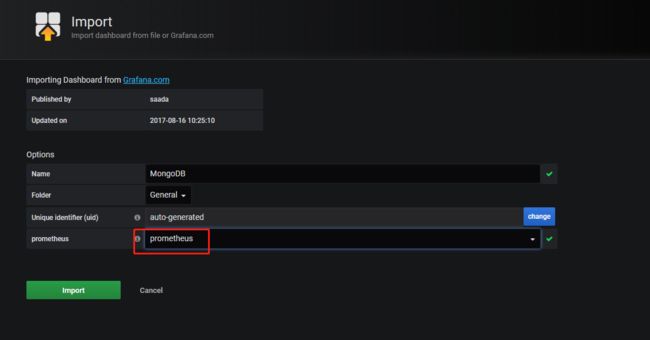
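The target state can also be read from the Prometheus HTTP API instead of the web UI. The sketch below is a hedged Python example: it assumes Prometheus is reachable at a URL you supply (for instance through a port-forward, which the article does not cover), and that the job name is `mongo-exporter`, which follows from `jobLabel: k8s-app` in the ServiceMonitor taking the value of the Service's `k8s-app` label. The function names are hypothetical.

```python
import json
import urllib.request


def targets_for_job(targets_response, job):
    """Return (scrapeUrl, health) for every active target of the given job."""
    active = targets_response.get("data", {}).get("activeTargets", [])
    return [
        (t.get("scrapeUrl"), t.get("health"))
        for t in active
        if t.get("labels", {}).get("job") == job
    ]


def fetch_targets(prometheus_url, timeout=5.0):
    """Call the Prometheus HTTP API endpoint /api/v1/targets and decode the JSON."""
    url = f"{prometheus_url}/api/v1/targets"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

A healthy setup would show each mongo-exporter target with health `up`; an empty list suggests the ServiceMonitor's label selector or port name does not match the Service.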

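6 Create the monitoring dashboard in Grafana

In the Grafana UI, select the official MongoDB dashboard template.

Click Import, and monitoring of MongoDB is in place.

Some panels show no data, for two reasons: 1. no data has been stored in MongoDB yet; 2. some of the metric names used in the prometheus-mongodb-exporter queries of this Grafana template do not exist in the exporter's output.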
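The second cause can be narrowed down by comparing the metric names a dashboard references with the names the exporter actually exposes. The following Python sketch is a rough heuristic, not a PromQL parser: it assumes the dashboard has been exported as JSON and loaded into a dict, that queries are stored under `expr` keys, and that the relevant metric names start with the prefix `mongodb_`. The function names are made up for illustration.

```python
import re


def dashboard_exprs(dashboard):
    """Yield every string stored under an "expr" key anywhere in the dashboard dict."""
    stack = [dashboard]
    while stack:
        node = stack.pop()
        if isinstance(node, dict):
            expr = node.get("expr")
            if isinstance(expr, str):
                yield expr
            stack.extend(node.values())
        elif isinstance(node, list):
            stack.extend(node)


def missing_metrics(dashboard, exposed_names, prefix="mongodb_"):
    """Return metric names referenced by the dashboard but absent from exposed_names.

    Only identifiers starting with `prefix` are considered (an assumption).
    """
    pattern = re.compile(r"\b" + re.escape(prefix) + r"[A-Za-z0-9_:]*")
    referenced = set()
    for expr in dashboard_exprs(dashboard):
        referenced.update(pattern.findall(expr))
    return sorted(referenced - set(exposed_names))
```

Here `exposed_names` can be any collection of metric names taken from the exporter's metrics page. Each name the function returns points to a panel query that would need to be rewritten to use a metric the exporter really provides.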