TensorFlow Study Notes: Time-Series Forecasting with DNNRegressor


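The DNNRegressor in TensorFlow's high-level API is very convenient to use, about as simple as sklearn: define the data format, call fit, then call predict. The best-known model for time-series forecasting is probably the LSTM, but when I used it recently I found that its predictions were not very good when data was missing or scarce. Inspired by a colleague, I tried a DNN instead. The results were decent, so I am recording the approach here for future reference.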

The implementation is in Python ("Life is short, I use Python").

Load the required modules

import os
import pandas as pd  # read CSV files
import numpy as np
import pytz  # time zone handling
import tensorflow as tf
from sklearn import preprocessing  # data standardization
from sklearn.model_selection import train_test_split

Load the data. To make this reusable, I wrapped it in a function.

While loading the data, the timestamp is broken down into year, month, day, hour, weekday, and so on.

def process_data(file_path):
    file_name = os.path.split(file_path)[1]
    time_name = 'time'
    data = pd.read_csv(file_path, header=None, names=[time_name, 'value'])
    data[time_name] = pd.to_datetime(data[time_name], unit='ms', utc=True)
    end_time = data.iloc[-1, 0]  # last timestamp (not used below)
    tz = pytz.timezone("Asia/Shanghai")
    data2 = data.set_index([time_name])
    data2 = data2.resample(rule='5min').mean()  # average into 5-minute bins
    data2 = data2.tz_convert(tz)
    data2 = data2.interpolate(method='time')  # fill empty bins by time-based interpolation
    data2['year'] = data2.index.year
    data2['month'] = data2.index.month
    data2['day'] = data2.index.day
    data2['hour'] = data2.index.hour
    data2['min'] = data2.index.minute / 60  # scale to the range [0, 1)
    data2['weekday'] = data2.index.weekday
    data2['work'] = data2.index.weekday < 5  # Monday to Friday
    data2['week'] = data2.index.week  # on pandas >= 2.0 use data2.index.isocalendar().week
    data2['work'] = data2['work'].astype(int)
    data2 = data2.reset_index().drop(columns='time')
    return data2  # the caller assigns the result, so the frame has to be returned

- Define the standardization and inverse-standardization functions

def rescale(s):
    """Standardize a Series; return the scaled values and the fitted scaler."""
    reshaped_s = s.values.reshape(-1, 1)
    scaler = preprocessing.StandardScaler().fit(reshaped_s)
    return pd.DataFrame(data=scaler.transform(reshaped_s)), scaler

def input_fn(df, label):
    """Input builder function."""
    # Creates a dictionary mapping from each continuous feature column name (k) to
    # the values of that column stored in a constant Tensor.
    continuous_cols = {k: tf.constant(df[k].values, shape=[df[k].size, 1]) for k in CONTINUOUS_COLUMNS}
    feature_cols = dict(continuous_cols)
    # Creates a dictionary mapping from each categorical feature column name (k)
    # to the values of that column stored in a tf.SparseTensor.
    categorical_cols = {
        k: tf.SparseTensor(
            indices=[[i, 0] for i in range(df[k].size)],
            values=df[k].values,
            dense_shape=[df[k].size, 1])
        for k in CATEGORICAL_COLUMNS}
    # Merges the two dictionaries into one.
    feature_cols.update(categorical_cols)
    # feature_cols=dict(categorical_cols)
    # Converts the label column into a constant Tensor.
    # label = tf.constant(df[LABEL_COLUMN].values, shape=[df[LABEL_COLUMN].size, 1])
    label = tf.constant(label.values, shape=[label.size, 1])
    # Returns the feature columns and the label.
    return feature_cols, label

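def scale(df):
    # Standardize each continuous feature (the per-feature scalers are not kept).
    for column in CONTINUOUS_COLUMNS:
        df[column], _ = rescale(df[column])
    # Categorical features are passed to TensorFlow as strings.
    for column in CATEGORICAL_COLUMNS:
        df[column] = df[column].apply(str)
    # Standardize the label and keep its scaler so the scaling can be undone later.
    df[LABEL_COLUMN], label_scaler = rescale(df[LABEL_COLUMN])
    df['label'] = df[LABEL_COLUMN]
    return df, label_scaler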
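
The heading above mentions inverse standardization, but only the forward direction appears in the code. Here is a minimal sketch of the round trip, assuming the returned `StandardScaler` is simply reused to undo the scaling. The helper name `inverse_rescale` and the sample values are hypothetical and are not part of the original code.

```python
import numpy as np
import pandas as pd
from sklearn import preprocessing


def rescale(s):
    """Standardize a Series; return the scaled values and the fitted scaler."""
    reshaped = s.values.reshape(-1, 1)
    scaler = preprocessing.StandardScaler().fit(reshaped)
    return pd.DataFrame(data=scaler.transform(reshaped)), scaler


def inverse_rescale(scaled, scaler):
    """Hypothetical helper: map standardized values back to the original units."""
    values = np.asarray(scaled, dtype=float).reshape(-1, 1)
    return scaler.inverse_transform(values).ravel()


# Synthetic values standing in for the 'value' column, purely for illustration.
raw = pd.Series([10.0, 20.0, 30.0, 40.0])
scaled, scaler = rescale(raw)

# Column 0 of the returned frame holds the standardized values.
restored = inverse_rescale(scaled[0], scaler)
```

The same call would apply to model predictions: pass the predicted (standardized) values together with `label_scaler` to get numbers in the original units.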

- Set the file path, read the data, and split it into training and test sets

file_path = "./cpudata/p0cesrpt2.csv"
df = process_data(file_path)
df2, label_scaler = scale(df.copy())
X_train, X_test, y_train, y_test = train_test_split(df2[FEATURE_COLUMNS], df2[LABEL_COLUMN], test_size=0.3)
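
Since missing data was the motivation for this approach, it may help to see what the resampling and interpolation inside `process_data` do to a gap. The sketch below uses a tiny synthetic series (the timestamps and values are made up) and passes the time zone as a string instead of a `pytz` object, which pandas also accepts.

```python
import numpy as np
import pandas as pd

# Synthetic millisecond timestamps with a gap: 00:00, 00:05, then 00:20 (UTC).
raw = pd.DataFrame({
    "time": [0, 300_000, 1_200_000],
    "value": [1.0, 2.0, 5.0],
})
raw["time"] = pd.to_datetime(raw["time"], unit="ms", utc=True)

series = raw.set_index("time")
# Average into 5-minute bins; the bins at 00:10 and 00:15 are empty and become NaN.
binned = series.resample(rule="5min").mean()
# Convert to local time so the calendar features reflect Shanghai time.
local = binned.tz_convert("Asia/Shanghai")
# Fill the empty bins linearly with respect to elapsed time.
filled = local.interpolate(method="time")
```

Interpolation only draws a straight line across the gap, so long outages would still be worth inspecting by hand before training.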

# Note: scale() and train_test_split above use these constants, so run this block first.
CATEGORICAL_COLUMNS = ['work', 'min']  # categorical fields
CONTINUOUS_COLUMNS = ['year', 'month', 'day', 'hour', 'weekday', 'week']  # continuous fields
FEATURE_COLUMNS = []
FEATURE_COLUMNS.extend(CATEGORICAL_COLUMNS)
FEATURE_COLUMNS.extend(CONTINUOUS_COLUMNS)
LABEL_COLUMN = 'value'

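# Build the feature columns (tf.contrib is available only in TensorFlow 1.x).
deep_columns = []
for column in CATEGORICAL_COLUMNS:
    # Hash each category string into one of 1000 buckets, then learn a 7-dimensional embedding.
    column = tf.contrib.layers.sparse_column_with_hash_bucket(
        column, hash_bucket_size=1000)
    deep_columns.append(tf.contrib.layers.embedding_column(column, dimension=7))
for column in CONTINUOUS_COLUMNS:
    column = tf.contrib.layers.real_valued_column(column)
    deep_columns.append(column)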
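
To make the categorical columns less of a black box, here is a conceptual NumPy sketch of what a hash-bucket column followed by an embedding column does: each string is hashed to a bucket index, and the index selects one row of a trainable table. This is an illustration only. `zlib.crc32` is a stand-in and is not the hash TensorFlow uses, and the table here is random rather than learned.

```python
import zlib

import numpy as np

HASH_BUCKET_SIZE = 1000  # same bucket count as in the code above
EMBEDDING_DIM = 7        # same embedding dimension as in the code above

rng = np.random.default_rng(0)
# One row per bucket; random here, learned during training in a real model.
embedding_table = rng.normal(size=(HASH_BUCKET_SIZE, EMBEDDING_DIM))


def bucket_id(value: str) -> int:
    """Map a string to a bucket index (crc32 is an assumption, not TensorFlow's hash)."""
    return zlib.crc32(value.encode("utf-8")) % HASH_BUCKET_SIZE


def embed(values):
    """Look up one dense vector per categorical value."""
    ids = [bucket_id(v) for v in values]
    return embedding_table[ids]


# The 'work' column after the int -> str conversion done in scale().
work_flags = ["0", "1", "1"]
vectors = embed(work_flags)
```

Equal strings always land in the same bucket and therefore share a vector. Different strings can collide, which is why the bucket count is usually chosen much larger than the number of distinct values.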

model_dir = "./model"  # where the model is saved
learning_rate = 0.001  # learning rate
# Optimizer
model_optimizer = tf.train.AdamOptimizer(
    learning_rate=learning_rate)
# Define the model
m = tf.contrib.learn.DNNRegressor(model_dir=model_dir,
                                  feature_columns=deep_columns,
                                  hidden_units=[32, 64],
                                  optimizer=model_optimizer)

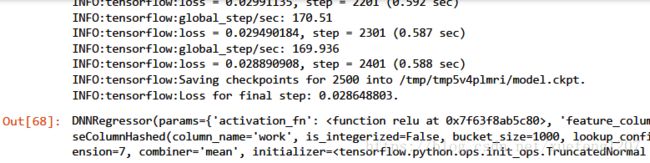
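
For a feel of how small this network is, the following NumPy sketch mirrors the shape implied by the configuration: two 7-dimensional embeddings plus six continuous inputs feed hidden layers of 32 and 64 units and a single output. It assumes fully connected layers with biases and ReLU on the hidden layers (the estimator's default, as far as I know). The weights are random, so this shows shapes and parameter counts, not the trained model.

```python
import numpy as np

# Input width: 2 categorical columns x 7-dim embeddings + 6 continuous columns.
INPUT_DIM = 2 * 7 + 6
LAYER_SIZES = [INPUT_DIM, 32, 64, 1]  # hidden_units=[32, 64], one regression output

rng = np.random.default_rng(0)
weights = [rng.normal(scale=0.1, size=(n_in, n_out))
           for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]
biases = [np.zeros(n_out) for n_out in LAYER_SIZES[1:]]


def forward(x):
    """Dense layers with ReLU on the hidden layers and a linear output."""
    h = x
    for w, b in zip(weights[:-1], biases[:-1]):
        h = np.maximum(h @ w + b, 0.0)
    return h @ weights[-1] + biases[-1]


batch = rng.normal(size=(4, INPUT_DIM))  # four made-up input rows
predictions = forward(batch)

# Dense-layer parameters only; the embedding tables are not counted.
n_params = sum(w.size + b.size for w, b in zip(weights, biases))
```

Under these assumptions the dense layers hold 672 + 2112 + 65 parameters, which is tiny compared with a typical LSTM and may be part of why it copes better with small datasets.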

- Train the model

m.fit(input_fn=lambda: input_fn(X_train, y_train),
      steps=2500)

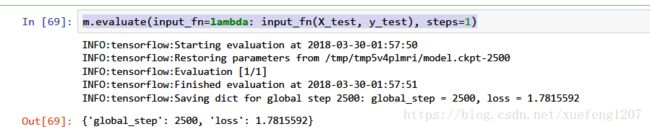

m.evaluate(input_fn=lambda: input_fn(X_test, y_test), steps=1)
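
Because the labels were standardized before training, the loss reported by `evaluate` is in standardized units. Assuming that loss is a mean squared error, a rough way to read it in the original units is to take the square root and multiply by the label's standard deviation. The loss value and sample data below are hypothetical and are not results from this post.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Synthetic target values standing in for the 'value' column.
values = np.array([10.0, 20.0, 30.0, 40.0]).reshape(-1, 1)
label_scaler = StandardScaler().fit(values)

# Hypothetical loss on standardized labels (assumed to be an MSE).
scaled_mse = 0.04


def rmse_in_original_units(mse, scaler):
    """Undo the division by the standard deviation applied during scaling."""
    return float(np.sqrt(mse) * scaler.scale_[0])


rmse = rmse_in_original_units(scaled_mse, label_scaler)
```

This works because standardization divides the label by a constant, so errors scale by that same constant; the mean shift cancels out in the difference between prediction and truth.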