Regression algorithms (linear regression via the normal equation, linear regression via gradient descent, ridge regression)

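Regression algorithms

- Linear regression

- Definition of linear regression

- Measuring the error of linear regression

- Methods for reducing the error of linear regression

- Method 1: the normal equation

- Method 2: gradient descent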

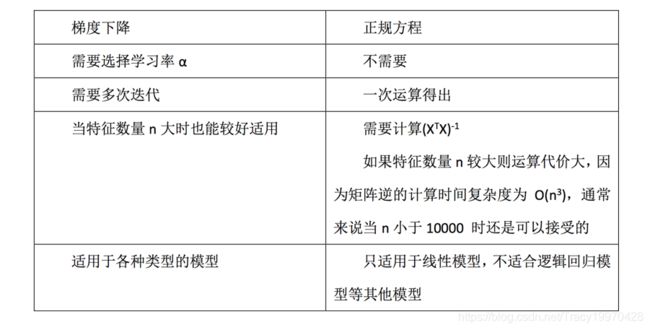

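- Comparison of the normal equation and gradient descent

- Evaluating regression performance

- Overfitting and underfitting

- Overfitting

- Underfitting

- Remedies

- Ridge regression

- The concept of ridge regression

- Implementing ridge regression in code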

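Linear regression

Definition of linear regression

Linear regression models the relationship between one or more independent variables and a dependent variable by regression analysis. Its defining feature is that the prediction is a linear combination of the regression coefficients (the model parameters). Depending on the number of independent variables, it is called simple (univariate) linear regression or multiple linear regression. The formulas are as follows: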

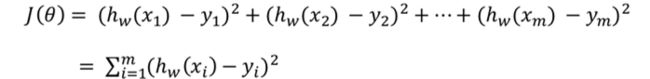

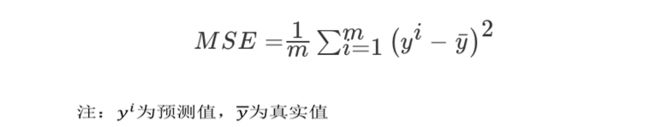
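As a minimal sketch of the definition above, the prediction of a linear model is a weighted sum of the feature values plus a bias. The data, the weights `w`, the bias `b`, and the helper name `predict` are all assumptions made for illustration; they do not come from the article's figures.

```python
import numpy as np

# Hypothetical data: 3 samples with 2 features each (values chosen for illustration only).
X = np.array([[1.0, 2.0],
              [2.0, 0.5],
              [3.0, 1.0]])
w = np.array([0.5, -1.0])   # assumed regression coefficients
b = 2.0                     # assumed bias (intercept)


def predict(X, w, b):
    """Return h(x) = w1*x1 + w2*x2 + ... + b for every row of X."""
    return X @ w + b


y_hat = predict(X, w, b)
print(y_hat)  # [0.5 2.5 2.5]
```

With a single feature this is simple linear regression; with several features, as here, it is multiple linear regression.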

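Measuring the error of linear regression

Methods for reducing the error of linear regression

Core idea: find the value of W that corresponds to the minimum loss.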

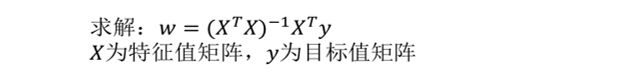
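To make the core idea concrete, the sketch below evaluates a loss for several candidate values of W and shows that one of them gives the smallest loss. It assumes the usual least-squares loss (the sum of squared residuals); the data and the helper name `squared_error_loss` are hypothetical.

```python
import numpy as np


def squared_error_loss(X, y, w):
    """Sum of squared residuals between the predictions X @ w and the targets y."""
    residuals = X @ w - y
    return float(residuals @ residuals)


# Hypothetical data generated by y = 2 * x, with no noise.
X = np.array([[1.0], [2.0], [3.0]])
y = np.array([2.0, 4.0, 6.0])

for candidate in (0.0, 1.0, 2.0, 3.0):
    loss = squared_error_loss(X, y, np.array([candidate]))
    print(f"w = {candidate}: loss = {loss}")
```

The loss is 56 at w = 0, 14 at w = 1 and w = 3, and 0 at w = 2, so w = 2 is the value the two methods below would try to find.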

Method 1: the normal equation

from sklearn.datasets import load_boston
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_squared_error


def mylinear():
    """
    Predict Boston house prices with LinearRegression.
    :return: None
    """
    # Load the data (load_boston was removed in scikit-learn 1.2, so an older version is required)
    lb = load_boston()
    # Split the data set
    x_train, x_test, y_train, y_test = train_test_split(lb.data, lb.target, test_size=0.25)
    # Standardize (features and target need two separate scaler instances because their dimensions differ)
    std_x = StandardScaler()
    x_train = std_x.fit_transform(x_train)
    x_test = std_x.transform(x_test)
    std_y = StandardScaler()
    y_train = std_y.fit_transform(y_train.reshape(-1, 1))
    y_test = std_y.transform(y_test.reshape(-1, 1))
    # Fit the estimator and predict house prices
    lr = LinearRegression()
    lr.fit(x_train, y_train)
    print(lr.coef_)
    predict_std = lr.predict(x_test)
    # Convert the predictions back to the original price scale
    y_predict = std_y.inverse_transform(predict_std.reshape(-1, 1))
    print("Linear regression predicted house prices:", y_predict)
    print("Linear regression mean squared error:", mean_squared_error(std_y.inverse_transform(y_test), y_predict))
    return None


if __name__ == '__main__':
    mylinear()

Output:

[[-0.07189689 0.11056253 0.00852308 0.06510871 -0.17156743 0.3417623

-0.0550457 -0.29720397 0.22697546 -0.21461555 -0.21549207 0.12524146

-0.323476 ]]

Linear regression predicted house prices: [[16.67298491]

[21.51430668]

[15.63161012]

[41.67971428]

[22.12070811]

[29.74143583]

[45.16135176]

[13.47566068]

[18.94535531]

[28.80047496]

[21.2140528 ]

[28.17246202]

[26.24308882]

[12.27271099]

[26.33784283]

[20.0184693 ]

[15.56019304]

[19.78458139]

[ 8.44834886]

[19.2649333 ]

[32.51179258]

[23.04744077]

[12.19437145]

[18.24760861]

[18.15170883]

[11.03283082]

[25.74066679]

[30.53326076]

[28.75518113]

[15.41794206]

[31.71846201]

[13.11025356]

[ 9.39360885]

[25.86065388]

[14.83219011]

[19.17522972]

[24.72453426]

[17.97900083]

[24.60920764]

[16.33075212]

[32.92539655]

[19.33175092]

[22.56207634]

[22.08126759]

[26.8019178 ]

[27.81518837]

[ 6.13935003]

[20.20341886]

[15.83163726]

[33.39822915]

[21.91187973]

[21.30148556]

[29.69154311]

[35.27221326]

[25.36056497]

[22.99531908]

[24.23264307]

[ 9.91553065]

[26.88413251]

[22.80506192]

[22.10942353]

[34.84690799]

[17.0764197 ]

[30.17811061]

[30.3602628 ]

[25.59228421]

[19.61567103]

[17.44520032]

[32.08421329]

[22.42346097]

[35.13647673]

[23.55930269]

[27.7629917 ]

[25.36087011]

[22.98636269]

[23.81183436]

[43.91295741]

[31.0158378 ]

[23.90163612]

[19.8617725 ]

[ 0.40462815]

[19.06456293]

[21.00950922]

[30.57172582]

[21.54336377]

[17.94269787]

[22.56265107]

[18.70495122]

[28.99876226]

[34.92273719]

[23.07659802]

[10.38802434]

[21.50174461]

[16.91550915]

[28.36196581]

[24.33000943]

[25.9625918 ]

[17.19987812]

[36.29645747]

[20.3181725 ]

[ 6.31130636]

[ 9.82912988]

[ 5.71437948]

[12.55375899]

[11.8760076 ]

[21.08312231]

[16.16794352]

[23.89854365]

[19.00454068]

[16.35134125]

[25.74128361]

[15.40079632]

[36.4936849 ]

[ 5.36164699]

[24.70578649]

[34.23378414]

[28.17193439]

[27.68687533]

[17.6316129 ]

[27.19337924]

[19.31946272]

[22.02621029]

[27.28518294]

[29.49658103]

[39.68007288]

[40.08295584]

[26.26031041]]

Linear regression mean squared error: 30.331638135174217

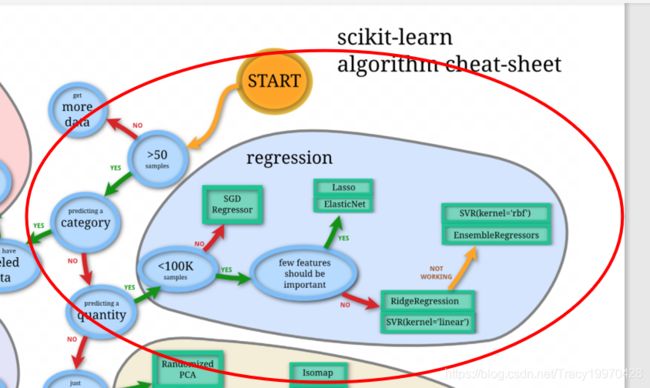

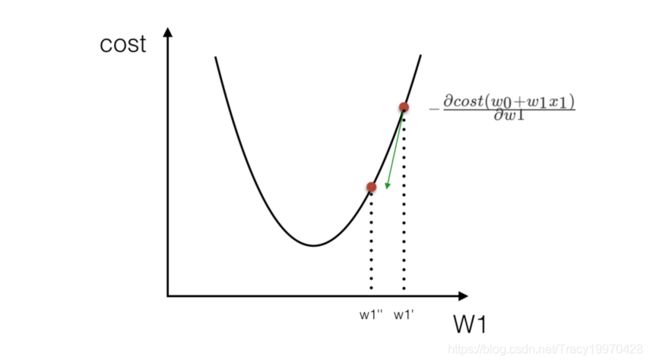
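The normal-equation approach of this section can also be sketched directly in NumPy, which makes the closed-form solution w = (XᵀX)⁻¹Xᵀy visible. This is a minimal sketch on synthetic data (an assumption, not the housing data used above), and it illustrates the formula only; it is not a description of how `LinearRegression` is implemented internally.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): y = 3*x1 - 2*x2 + 5 + small noise.
X = rng.normal(size=(200, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 5.0 + rng.normal(scale=0.01, size=200)

# Append a column of ones so that the intercept is learned as an extra weight.
X_b = np.hstack([X, np.ones((X.shape[0], 1))])

# Normal equation: solve (X^T X) w = X^T y.
# np.linalg.solve avoids forming the explicit matrix inverse.
w = np.linalg.solve(X_b.T @ X_b, X_b.T @ y)
print(w)  # close to [3, -2, 5]
```

The solution is obtained in a single step with no learning rate to tune, but solving the linear system becomes expensive when the number of features is large.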

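Method 2: gradient descent

- Concept: move along the direction in which the function decreases, updating W at every step, until the lowest point of the valley is reached. The size of each step can differ.

- Algorithm formula:

- Diagram

That is, starting from the cost-function value given by the randomly initialized weights, move in the descending direction with varying learning rates until the value of W that minimizes the cost function is found.

- Note: the data must be standardized first

- Use case: tasks with very large training sets

- Code example (predicting Boston house prices)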

from sklearn.datasets import load_boston
from sklearn.linear_model import SGDRegressor
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_squared_error


def mysgd():
    """
    Predict Boston house prices with SGDRegressor.
    :return: None
    """
    # Load the data (load_boston was removed in scikit-learn 1.2, so an older version is required)
    lb = load_boston()
    # Split the data set
    x_train, x_test, y_train, y_test = train_test_split(lb.data, lb.target, test_size=0.25)
    # Standardize (features and target need two separate scaler instances because their dimensions differ)
    std_x = StandardScaler()
    x_train = std_x.fit_transform(x_train)
    x_test = std_x.transform(x_test)
    std_y = StandardScaler()
    y_train = std_y.fit_transform(y_train.reshape(-1, 1))
    y_test = std_y.transform(y_test.reshape(-1, 1))
    # Fit the estimator and predict house prices
    sgd = SGDRegressor()
    # SGDRegressor expects a 1-D target, so flatten the column vector
    sgd.fit(x_train, y_train.ravel())
    print(sgd.coef_)
    predict_std = sgd.predict(x_test)
    # Convert the predictions back to the original price scale
    y_predict = std_y.inverse_transform(predict_std.reshape(-1, 1))
    print("Gradient descent predicted house prices:", y_predict)
    print("Gradient descent mean squared error:", mean_squared_error(std_y.inverse_transform(y_test), y_predict))
    return None


if __name__ == '__main__':
    mysgd()

Output:

[-0.09899378 0.05707105 -0.06552787 0.10473573 -0.10534929 0.36552032

-0.02953529 -0.21774241 0.10686514 -0.05112742 -0.19883048 0.07593193

-0.34252204]

Gradient descent predicted house prices: [[18.15533454]

[34.11869678]

[32.14174411]

[21.34420274]

[17.21388765]

[16.35700059]

[21.91035209]

[25.74225411]

[13.80938554]

[37.0260741 ]

[20.34283321]

[22.60332358]

[10.57004107]

[20.23572153]

[ 9.59137916]

[28.41603421]

[17.68100316]

[21.23897539]

[17.53442359]

[13.19849714]

[20.14575752]

[33.52831795]

[17.12473639]

[21.36697523]

[17.90262181]

[ 4.09907073]

[21.95366187]

[33.80847587]

[20.27105119]

[17.53696132]

[33.11813363]

[28.80634195]

[ 6.57209533]

[25.48550927]

[23.35904861]

[35.9924995 ]

[ 5.20083665]

[20.03161132]

[26.15215008]

[36.99552849]

[21.72707286]

[36.55176266]

[22.03371153]

[23.26822998]

[21.48258812]

[19.91669232]

[24.69897601]

[41.31930121]

[20.08257762]

[13.07880876]

[20.2117174 ]

[23.51263223]

[40.44256018]

[33.56951898]

[27.71772647]

[24.50848523]

[17.46991589]

[21.44298149]

[19.40351808]

[-4.20320864]

[24.41236848]

[22.70770552]

[18.32786776]

[21.20199655]

[29.35400254]

[17.98159301]

[23.30945554]

[19.72799619]

[17.12636849]

[17.75898183]

[23.46041889]

[13.44739229]

[22.7480483 ]

[17.2265867 ]

[26.99748033]

[21.60716127]

[14.71744082]

[20.73697436]

[32.00570023]

[17.61311475]

[20.89879453]

[18.10165056]

[24.57757874]

[35.80571785]

[23.92253462]

[25.27805868]

[40.2727596 ]

[13.42459155]

[19.19037907]

[32.87888685]

[ 6.42186031]

[19.53277955]

[ 8.98051468]

[26.30031149]

[20.90806747]

[15.90596611]

[16.28474562]

[20.14033052]

[30.55707971]

[17.70373075]

[15.45362061]

[ 5.7067405 ]

[21.30190112]

[32.76682679]

[38.25465606]

[27.28654404]

[15.74370192]

[20.45658597]

[28.26599518]

[12.94885544]

[35.28388653]

[26.48460038]

[21.49836632]

[20.56639879]

[28.386673 ]

[32.78291138]

[12.35907231]

[ 9.89626638]

[24.98827917]

[29.76318234]

[18.2809601 ]

[21.24515354]

[34.17683845]

[29.44239205]

[22.00955944]

[22.65328658]

[22.50688932]]

Gradient descent mean squared error: 24.97920841695512
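The update rule behind gradient descent can be sketched by hand to show what "stepping downhill" means. Note that this sketch uses plain batch gradient descent with a fixed learning rate, not the stochastic variant that `SGDRegressor` implements; the synthetic data, the learning rate of 0.1, and the 200 iterations are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): y = 3*x1 - 2*x2 + small noise.
X = rng.normal(size=(200, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.01, size=200)

# Standardize the features so that all of them are on the same scale.
X = (X - X.mean(axis=0)) / X.std(axis=0)
y = y - y.mean()            # centre the target so that no intercept is needed


def mse(w):
    """Mean squared error of the linear model with weights w."""
    return float(np.mean((X @ w - y) ** 2))


w = rng.normal(size=2)      # random initial weights
learning_rate = 0.1         # assumed step size
initial_loss = mse(w)

for _ in range(200):
    gradient = 2.0 / len(y) * X.T @ (X @ w - y)   # gradient of the MSE with respect to w
    w = w - learning_rate * gradient              # move against the gradient

final_loss = mse(w)
w_exact = np.linalg.lstsq(X, y, rcond=None)[0]    # least-squares reference solution
print(initial_loss, final_loss)
print(w, w_exact)
```

After enough iterations the weights agree with the least-squares solution, which is the same answer the normal equation would give, reached iteratively instead of in one step.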

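Comparison of the normal equation and gradient descent

Evaluating regression performance

- API: sklearn.metrics.mean_squared_error

- Function: mean_squared_error(y_true, y_pred)

Mean squared error regression loss

y_true: the true values

y_pred: the predicted values

return: a floating-point result

- Note: the true and predicted values must be the values before standardization, that is, in the original units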

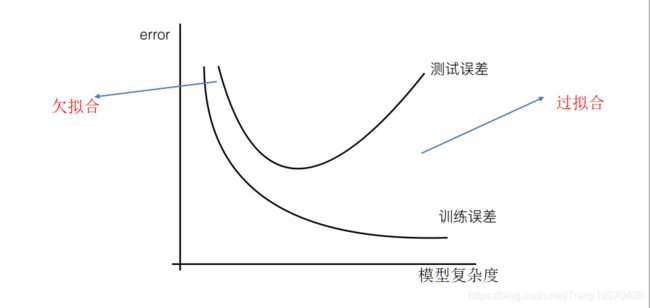
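The following sketch computes the mean squared error by hand and shows why the note above matters: the same predictions give a very different number when the error is measured on standardized values. The prices and the helper name `mean_squared_error_manual` are hypothetical.

```python
import numpy as np


def mean_squared_error_manual(y_true, y_pred):
    """Average of the squared differences between true and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


# Hypothetical house prices in their original units.
y_true = np.array([20.0, 30.0, 40.0])
y_pred = np.array([22.0, 29.0, 37.0])
mse_original = mean_squared_error_manual(y_true, y_pred)

# The same values after standardizing with the mean and standard deviation of y_true.
mean, std = y_true.mean(), y_true.std()
mse_standardized = mean_squared_error_manual((y_true - mean) / std, (y_pred - mean) / std)

print(mse_original)      # about 4.67, in squared price units
print(mse_standardized)  # about 0.07, no longer in price units
```

This is why the examples in this article apply `inverse_transform` to both the predictions and the test targets before calling `mean_squared_error`.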

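Overfitting and underfitting

Overfitting

A hypothesis fits the training data better than other hypotheses but fails to fit data outside the training set well. The hypothesis is then said to overfit. (The model is too complex.)

Underfitting

A hypothesis fails to fit the training data well and also fails to fit data outside the training set well. The hypothesis is then said to underfit. (The model is too simple.)

Remedies

- Underfitting:

- Cause of underfitting: too few features of the data have been learned

- Remedy for underfitting: increase the number of features

- Overfitting:

- Cause of overfitting: there are too many raw features, some of them noisy, and the model becomes overly complex because it tries to accommodate every training data point

- Remedies for overfitting: 1) feature selection, removing highly correlated features (hard to do); 2) cross-validation (every sample gets used for training), which only helps to diagnose whether the model is overfitting or underfitting and does not fix the root cause; 3) regularization (good to know)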
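The difference between the two failure modes can be illustrated by fitting polynomials of increasing degree to a small noisy sample and comparing the error on the training set with the error on held-out data. This is a minimal sketch on synthetic data; the target function, the noise level, and the degrees 1, 3, and 9 are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_data(n):
    """Noisy samples of sin(2*pi*x) on [0, 1] (synthetic, for illustration)."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = np.sin(2.0 * np.pi * x) + rng.normal(scale=0.2, size=n)
    return x, y


x_train, y_train = make_data(15)
x_test, y_test = make_data(200)


def mse(coeffs, x, y):
    """Mean squared error of the polynomial with the given coefficients."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


results = {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree {degree}: train MSE = {results[degree][0]:.4f}, test MSE = {results[degree][1]:.4f}")
```

The training error can only go down as the degree grows, because each higher-degree model contains the lower-degree ones. A model that is too simple typically shows a high error on both sets (underfitting), while a model that is too complex typically shows a low training error together with a noticeably higher test error (overfitting).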

Ridge regression

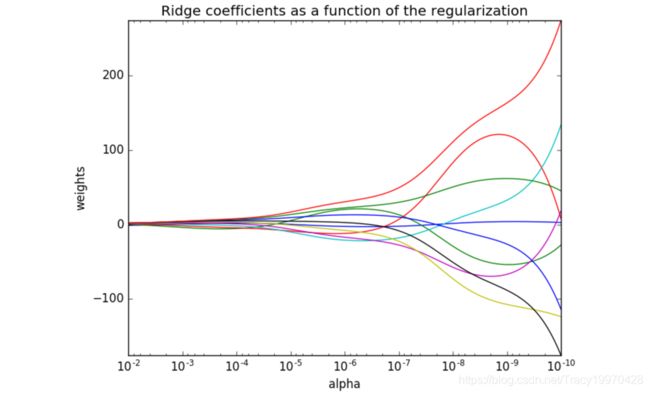

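The concept of ridge regression

Ridge regression is a regression algorithm that addresses overfitting in linear regression by means of regularization.

Purpose: reduce the influence of high-order features as much as possible

Effect: shrinks every element of W toward 0

Advantages: the estimated regression coefficients are more realistic and more reliable. In addition, the estimated parameters fluctuate within a narrower range and become more stable. This is of considerable practical value in studies where ill-conditioned data are common.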
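The shrinking effect described above can be seen in the closed-form ridge solution w = (XᵀX + αI)⁻¹Xᵀy, where α is the regularization strength. The sketch below is an illustration under stated assumptions: the data are synthetic, the model has no intercept, and the helper name `ridge_weights` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration) with two nearly identical features,
# which is the kind of ill-conditioned problem where ridge regression helps.
x1 = rng.normal(size=100)
x2 = x1 + rng.normal(scale=0.01, size=100)
X = np.column_stack([x1, x2])
y = x1 + x2 + rng.normal(scale=0.1, size=100)


def ridge_weights(X, y, alpha):
    """Closed-form ridge solution: w = (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


norms = []
for alpha in (0.0, 1.0, 10.0, 100.0):
    w = ridge_weights(X, y, alpha)
    norms.append(float(np.linalg.norm(w)))
    print(f"alpha = {alpha}: w = {w}, ||w|| = {norms[-1]:.4f}")
```

With alpha = 0 this reduces to the ordinary normal equation. As alpha grows, the length of the weight vector decreases, which is the "every element of W becomes small" behaviour described above.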

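Implementing ridge regression in code

- API: sklearn.linear_model.Ridge

- Function: sklearn.linear_model.Ridge(alpha=1.0)

Linear least squares with l2 regularization

alpha: regularization strength

coef_: regression coefficients

- How changes in the regularization strength affect the result

- Code example (predicting Boston house prices; see the note at the end of this article)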

from sklearn.datasets import load_boston
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_squared_error


def myridge():
    """
    Predict Boston house prices with Ridge.
    :return: None
    """
    # Load the data (load_boston was removed in scikit-learn 1.2, so an older version is required)
    lb = load_boston()
    # Split the data set
    x_train, x_test, y_train, y_test = train_test_split(lb.data, lb.target, test_size=0.25)
    # Standardize (features and target need two separate scaler instances because their dimensions differ)
    std_x = StandardScaler()
    x_train = std_x.fit_transform(x_train)
    x_test = std_x.transform(x_test)
    std_y = StandardScaler()
    y_train = std_y.fit_transform(y_train.reshape(-1, 1))
    y_test = std_y.transform(y_test.reshape(-1, 1))
    # Fit the estimator and predict house prices
    rd = Ridge()
    rd.fit(x_train, y_train)
    print(rd.coef_)
    predict_std = rd.predict(x_test)
    # Convert the predictions back to the original price scale
    y_predict = std_y.inverse_transform(predict_std.reshape(-1, 1))
    print("Ridge regression predicted house prices:", y_predict)
    print("Ridge regression mean squared error:", mean_squared_error(std_y.inverse_transform(y_test), y_predict))
    return None


if __name__ == '__main__':
    myridge()

Output:

[[-0.09712775 0.10193582 0.04583568 0.09351994 -0.18624549 0.31246168

-0.00936299 -0.30612679 0.31170519 -0.28632496 -0.18517174 0.13279692

-0.40581663]]

Ridge regression predicted house prices: [[37.00176928]

[13.26132682]

[23.54672912]

[22.71654214]

[25.67990408]

[19.54523528]

[20.67861965]

[32.5417979 ]

[22.99726104]

[28.36399662]

[40.68055885]

[21.15905508]

[16.67517075]

[14.19302252]

[17.38041852]

[31.24705479]

[13.50863022]

[27.34963008]

[41.25713632]

[20.70165982]

[23.24533533]

[39.85987694]

[32.83040663]

[13.04899638]

[14.0838789 ]

[18.06380925]

[19.6426615 ]

[27.21555235]

[17.67976907]

[34.45092919]

[26.22531561]

[-0.33650528]

[23.34381681]

[33.72670693]

[15.28809868]

[23.24733193]

[24.30973196]

[18.18241414]

[15.5708979 ]

[16.64774211]

[23.98182194]

[40.942158 ]

[26.93749796]

[29.60798076]

[22.77235029]

[20.29534231]

[28.39466337]

[19.64746016]

[ 2.4324661 ]

[26.53607902]

[21.28318569]

[16.89454292]

[13.02035884]

[27.05552907]

[18.76256831]

[25.48453803]

[22.98359125]

[34.12163382]

[-0.07566267]

[27.22672687]

[24.57249196]

[31.35506797]

[21.51520609]

[18.1874919 ]

[ 5.91854968]

[28.8187358 ]

[20.02153002]

[22.97045159]

[20.51448762]

[23.01560955]

[36.30354424]

[26.7269793 ]

[19.40240129]

[25.22398079]

[25.89558279]

[25.43732688]

[28.03109779]

[19.60742959]

[23.1766216 ]

[33.27108218]

[22.40006098]

[23.6421197 ]

[17.17184732]

[10.82001448]

[25.60073618]

[16.22722067]

[19.81550019]

[11.61445192]

[35.65601271]

[15.29808558]

[24.62870842]

[24.89710764]

[17.93088168]

[23.31675652]

[35.35558844]

[13.24745034]

[22.10891563]

[22.51795098]

[22.32261331]

[30.19595212]

[33.13758238]

[39.13321668]

[24.43840237]

[22.96093311]

[21.18458161]

[30.30673668]

[33.30111801]

[19.22585514]

[18.14130464]

[14.90996596]

[ 9.36230842]

[15.03863595]

[13.26064651]

[23.26088159]

[21.56648367]

[22.11404782]

[29.44668622]

[25.73617186]

[19.26855022]

[17.1962654 ]

[22.0283875 ]

[36.84887348]

[11.14088656]

[26.13613835]

[24.94894001]

[23.38184477]

[16.32009033]]

Ridge regression mean squared error: 27.751727283864966

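Note: to compare the performance of different regression algorithms, the training and test sets must be held fixed. In this article the three algorithms were each run on a different, randomly generated train/test split, so their errors are not directly comparable.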
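One way to hold the split fixed is to pass `random_state` to `train_test_split` and to fit every model on the same arrays. The sketch below is a suggestion, not the procedure used for the outputs above: it uses a synthetic data set from `make_regression` as a stand-in for the housing data, leaves the target unstandardized for brevity, and the seed values are arbitrary.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression, Ridge, SGDRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the housing data (an assumption for illustration).
X, y = make_regression(n_samples=500, n_features=13, noise=10.0, random_state=0)

# random_state fixes the split, so every model sees the same train and test sets.
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# Standardize the features using statistics from the training set only.
std_x = StandardScaler()
x_train = std_x.fit_transform(x_train)
x_test = std_x.transform(x_test)

models = {
    "LinearRegression": LinearRegression(),
    "SGDRegressor": SGDRegressor(random_state=0),
    "Ridge": Ridge(alpha=1.0),
}

scores = {}
for name, model in models.items():
    model.fit(x_train, y_train)
    scores[name] = mean_squared_error(y_test, model.predict(x_test))
    print(f"{name}: MSE = {scores[name]:.2f}")
```

Because all three models are trained and evaluated on identical data, differences between the printed errors reflect the algorithms and not the luck of the split.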