Maze环境以及DQN的实现

环境

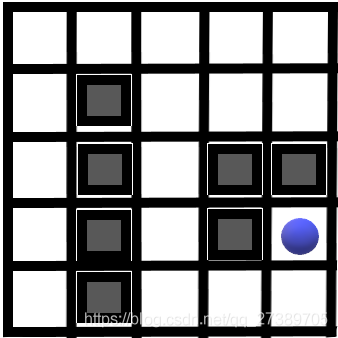

5*5的迷宫,其中(4,3)位置是出口,障碍物的位置分别为(1,1)、(1,2)、(1,3)、(1,4)、(3,2)、(3,3)、(4,2)

动作空间当然4个,上下左右

直接上代码~

import gym

import tensorflow as tf

import numpy as np

import time

import sys

if sys.version_info.major == 2:

import Tkinter as tk

else:

import tkinter as tk

class Maze(tk.Tk, object):

def __init__(self,state_x,state_y,frequency):

super(Maze, self).__init__()

self.action = [0, 1, 2, 3]

self.n_actions = len(self.action)

self.n_features = 2

self.state_min_x = 0

self.state_max_x = 4

self.state_min_y = 0

self.state_max_y = 4

self.frequency = frequency

self.block = np.zeros((self.state_max_x+1, self.state_max_y+1))

self.block[1][1] = 1

self.block[1][2] = 1

self.block[1][3] = 1

self.block[1][4] = 1

self.block[3][2] = 1

self.block[3][3] = 1

self.block[4][2] = 1

self.state_x=state_x

self.state_y=state_y

# 出口

self.out_x = 4

self.out_y = 3

def reset(self):

self.update()

self.state_x = np.random.randint(self.state_min_x, self.state_max_x)

self.state_y = np.random.randint(self.state_min_y, self.state_max_y)

if self.block[self.state_x][self.state_y] == 1:

self.reset()

if self.frequency !=0:

print("reset"+str(self.frequency))

self.frequency = 0

return np.array([self.state_x, self.state_y])

def step(self, action):

judge = 1

self.frequency+=1

if action == 0: # up

if self.state_y < self.state_max_y and self.block[self.state_x][self.state_y+1] == 0:

self.state_y += 1

else:

self.state_y = self.state_y

judge = 0

elif action == 1: # down

if self.state_y > self.state_min_y and self.block[self.state_x][self.state_y-1] == 0:

self.state_y -= 1

else:

self.state_y = self.state_y

judge = 0

elif action == 2: # right

if self.state_x < self.state_max_x and self.block[self.state_x+1][self.state_y] == 0:

self.state_x += 1

else:

self.state_x = self.state_x

judge = 0

elif action == 3: # left

if self.state_x > self.state_min_x and self.block[self.state_x-1][self.state_y] == 0:

self.state_x -= 1

else:

self.state_x = self.state_x

judge = 0

if judge == 0:

return np.array([self.state_x, self.state_y]), -1, self.is_done()

return np.array([self.state_x, self.state_y]), self.get_reward(), self.is_done()

def render(self):

return 0

def is_done(self):

if self.state_x == self.out_x and self.state_y == self.out_y:

print("finish")

return True

return False

def get_reward(self):

if self.state_x == self.out_x and self.state_y == self.out_y:

return 1

return 0

其中回馈值设置的好像有问题,收敛会很慢,但是逻辑应该不存在问题。

其中DQN的实现直接拷贝了Github上的代码

DQN_modified.py和RL_brain.py

执行的代码如下

from RL_brain import DeepQNetwork

from env_maze import Maze

def work():

step = 0

for _ in range(1000):

# initial observation

observation = env.reset()

while True:

# fresh env

env.render()

# RL choose action based on observation

action = RL.choose_action(observation)

# RL take action and get next observation and reward

observation_, reward, done = env.step(action)

RL.store_transition(observation, action, reward, observation_)

if (step > 200) and (step % 5 == 0):

RL.learn()

# swap observation

observation = observation_

# break while loop when end of this episode

if done:

break

step += 1

# end of game

print('game over')

env.destroy()

if __name__ == '__main__':

#导入Maze环境

env = Maze(0,0,0)

RL = DeepQNetwork(env.n_actions, env.n_features,

learning_rate=0.01,

reward_decay=0.9,

e_greedy=0.9,

replace_target_iter=200,

memory_size=2000,

# output_graph=True

)

env.after(100, work)

env.mainloop()

因为还不知道什么评价指标,所以我直接用frequency(到达终点尝试的次数)做了评价指标,速度简直慢的吓人

第一次到达终点用了 168次尝试,第二次直接用了224659次…天

finish

reset168

finish

reset224659

…

于是,我改了一下get_reward函数,相当于在最后这条路线上提前设置好。

def get_reward(self):

if self.state_x == self.out_x and self.state_y == self.out_y:

return 1

elif self.state_x == 4 and self.state_y == 4:

return 0.9

elif self.state_x == 3 and self.state_y == 4:

return 0.8

elif self.state_x == 2 and self.state_y == 4:

return 0.7

elif self.state_x == 2 and self.state_y == 3:

return 0.6

elif self.state_x == 2 and self.state_y == 2:

return 0.5

elif self.state_x == 2 and self.state_y == 1:

return 0.4

return 0

训练3000次后基本上10次左右就可以找到路线了,- -有点耍流氓,不过至少证明我这个实验没问题,就是收敛的有点慢

接着,如果刚开始的回馈值有问题呢?比如

def get_reward(self):

if self.state_x == self.out_x and self.state_y == self.out_y:

return 1

elif self.state_x == 4 and self.state_y == 4:

return 0.8

elif self.state_x == 3 and self.state_y == 4:

return 0.6

elif self.state_x == 2 and self.state_y == 4:

return 0.4

elif self.state_x == 2 and self.state_y == 3:

return 0.7

elif self.state_x == 2 and self.state_y == 2:

return 0.3

elif self.state_x == 2 and self.state_y == 1:

return 0.4

return 0

结果还是可以收敛,emmm 还是老老实实的学下基础内容吧