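Kubernetes (K8S) (4): Pod Lifecycle

Contents

- 1. How a Pod Manages Multiple Containers
- 2. Pod Lifecycle
  - 2.1 Init Containers
    - 1. Creating Init Containers
  - 2.2 Probes
    - 1. Liveness Probe Example
    - 2. Readiness Probe Examples

Official reference: https://kubernetes.io/zh/docs/concepts/workloads/pods/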

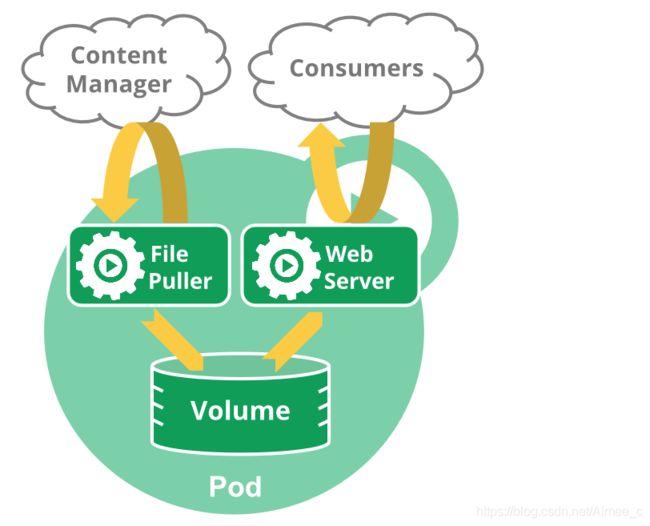

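1. How a Pod Manages Multiple Containers

A Pod (as in a pod of whales or a pea pod) is a group of one or more containers (such as Docker containers) with shared storage and network resources, plus a specification for how to run those containers. A Pod's contents are always co-located and co-scheduled, and they run in a shared context.

A Pod models an application-specific "logical host". It contains one or more application containers that are relatively tightly coupled. Before containers existed, running on the same physical or virtual machine meant running on the same logical host.

How a Pod manages multiple containers:

Pods are designed to support multiple cooperating processes (as containers) that form a cohesive unit of service. The containers in a Pod are automatically co-located and co-scheduled on the same physical or virtual machine in the cluster. They can share resources and dependencies, communicate with one another, and coordinate when and how they are terminated.

A Pod provides two kinds of shared resources for its containers: networking and storage.

Networking:

Each Pod is assigned a unique IP address. Every container in a Pod shares the network namespace, including the IP address and network ports. Containers inside a Pod can communicate with one another over localhost. When containers in a Pod communicate with entities outside the Pod, they must coordinate how they use the shared network resources (such as ports).

Storage:

A Pod can specify a set of shared storage volumes, which are directories containing data that the Pod's containers can access. All containers in the Pod can access the shared volumes, which lets them share data. Volumes also allow persistent data in a Pod to survive if one of its containers needs to be restarted.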
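Both kinds of sharing can be observed in a single Pod. The manifest below is a minimal sketch and is not part of the original walkthrough. The Pod name `shared-demo`, the volume name `shared-data`, and the images `nginx:1.19` and `busybox:1.28` are assumptions. The helper container writes a page into an `emptyDir` volume that the nginx container serves. It then fetches that page over `localhost`, which works only because both containers share the Pod's network namespace.

```yaml
# Hypothetical Pod "shared-demo": two containers that share one emptyDir
# volume and the Pod's network namespace.
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
spec:
  volumes:
  - name: shared-data            # scratch volume that lives as long as the Pod
    emptyDir: {}
  containers:
  - name: web
    image: nginx:1.19            # serves /usr/share/nginx/html on port 80
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html
  - name: helper
    image: busybox:1.28
    # Writes a page into the shared volume, then fetches it from the web
    # container over localhost (same network namespace, so no Pod IP needed).
    command:
    - sh
    - -c
    - |
      echo "hello from helper" > /data/index.html
      sleep 5
      wget -qO- http://localhost:80/
      sleep 3600
    volumeMounts:
    - name: shared-data
      mountPath: /data
```

After `kubectl apply -f`, `kubectl logs shared-demo -c helper` should print `hello from helper`, provided nginx is already listening when the 5-second sleep ends.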

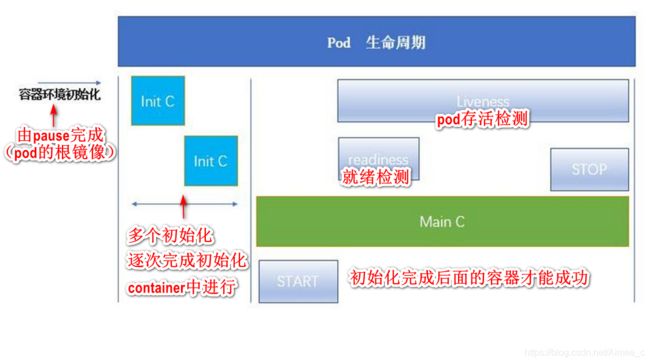

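2. Pod Lifecycle

2.1 Init Containers

Official reference: https://kubernetes.io/zh/docs/concepts/workloads/pods/init-containers/

A Pod can contain multiple containers in which its applications run. It can also have one or more init containers, which run before the application containers start.

Characteristics of init containers:

• They always run to completion.

• Init containers do not support readiness probes, because they must run to completion before the Pod can be ready.

• Each init container must complete successfully before the next one starts.

If one of a Pod's init containers fails, the kubelet keeps restarting it until it succeeds. However, if the Pod's restartPolicy is Never, the failed init container is not restarted and Kubernetes treats the whole Pod as failed.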
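A deliberately failing init container makes the restartPolicy behavior visible. This is a hypothetical sketch: the Pod name `init-fail-demo` and the `busybox:1.28` image are assumptions.

```yaml
# Hypothetical Pod "init-fail-demo": its only init container always exits 1.
apiVersion: v1
kind: Pod
metadata:
  name: init-fail-demo
spec:
  restartPolicy: Never           # switch to Always or OnFailure to see retries
  initContainers:
  - name: always-fails
    image: busybox:1.28
    command: ['sh', '-c', 'echo init failing; exit 1']
  containers:
  - name: app                    # never created, because initialization never succeeds
    image: busybox:1.28
    command: ['sh', '-c', 'echo never reached']
```

With `restartPolicy: Never`, `kubectl get pod init-fail-demo` should settle on a status such as `Init:Error`, and the app container never starts. With `Always` or `OnFailure`, the kubelet should instead keep retrying the init container, and the status should alternate between `Init:Error` and `Init:CrashLoopBackOff`.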

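What init containers can do

Because init containers use images that are separate from the app containers, they offer several advantages for startup-related code:

• Init containers can contain utilities or custom setup code that are not present in the app image.

• Init containers can run these utilities securely, so the utilities do not weaken the security of the app image.

• The people who build the app image and the people who deploy it can work independently, with no need to jointly build a single app image.

• Init containers can run with a different view of the filesystem than the app containers in the same Pod. As a result, they can be given access to Secrets that the app containers cannot access.

• Because init containers must run to completion before the app containers start, they provide a mechanism to block or delay app container startup until a set of preconditions is met. Once the preconditions are met, all app containers in the Pod start in parallel.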
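The first and last points can be combined: an init container prepares something the app needs and, because it must finish first, it also gates the app's startup. The sketch below uses assumed names (`init-config-demo`, `workdir`) and assumed images. In practice the init image would be one that carries tooling the app image lacks.

```yaml
# Hypothetical Pod "init-config-demo": the init container prepares content
# in a shared emptyDir volume before the app container is started.
apiVersion: v1
kind: Pod
metadata:
  name: init-config-demo
spec:
  volumes:
  - name: workdir
    emptyDir: {}
  initContainers:
  - name: prepare
    image: busybox:1.28
    # Must exit 0 before "app" is created; if it fails, "app" never starts.
    command: ['sh', '-c', 'echo "prepared by init container" > /work/index.html']
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  - name: app
    image: nginx:1.19
    volumeMounts:
    - name: workdir
      mountPath: /usr/share/nginx/html   # nginx serves the file written above
```

`kubectl exec init-config-demo -c app -- cat /usr/share/nginx/html/index.html` should print the line written by the init container.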

1.创建Init容器

示例一

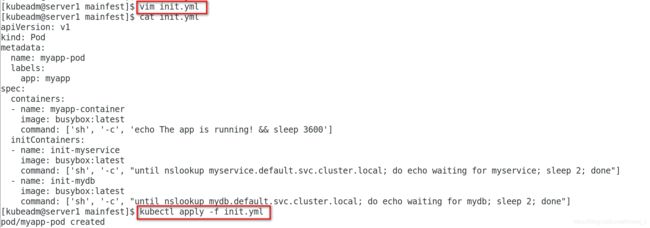

下面的例子定义了一个具有 2 个 Init 容器的简单 Pod。 第一个等待 myservice 启动,第二个等待 mydb 启动。 一旦这两个 Init容器 都启动完成,Pod 将启动spec区域中的应用容器。

```
[kubeadm@server1 mainfest]$ vim init.yml
[kubeadm@server1 mainfest]$ cat init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox:latest
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
  - name: init-myservice
    image: busybox:latest
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  - name: init-mydb
    image: busybox:latest
    command: ['sh', '-c', "until nslookup mydb.default.svc.cluster.local; do echo waiting for mydb; sleep 2; done"]
```

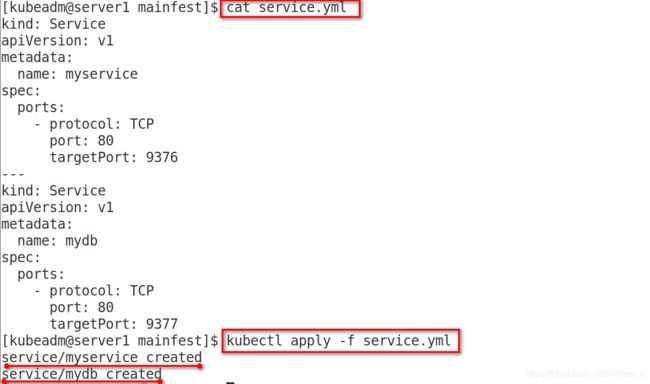

The following YAML file defines the two Services, mydb and myservice:

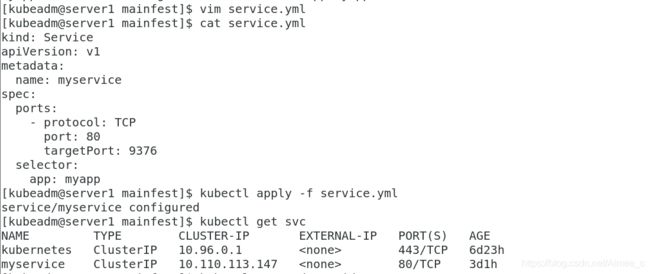

```
[kubeadm@server1 mainfest]$ vim service.yml
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
---
kind: Service
apiVersion: v1
metadata:
  name: mydb
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9377
```

一旦我们启动了 mydb 和 myservice 这两个 Service,我们能够看到 Init 容器完成,并且 myapp-pod 被创建,随后my-app的Pod转移进入 Running 状态

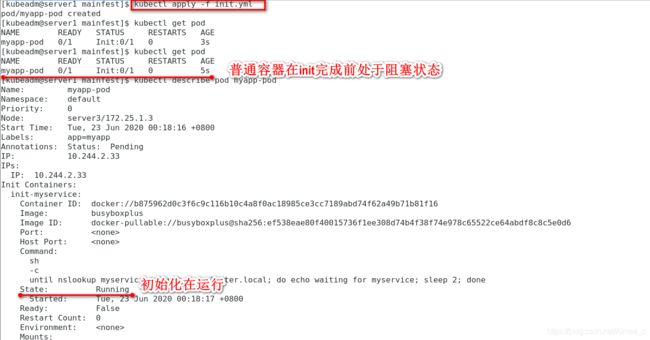

示例二

```
[kubeadm@server1 mainfest]$ cat init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: myapp:v1
  initContainers:
  - name: init-myservice
    image: busyboxplus
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
[kubeadm@server1 mainfest]$ kubectl apply -f init.yml
pod/myapp-pod created
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          3s
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          5s
[kubeadm@server1 mainfest]$ kubectl describe pod myapp-pod
Name:         myapp-pod
Namespace:    default
Priority:     0
Node:         server3/172.25.1.3
Start Time:   Tue, 23 Jun 2020 00:18:16 +0800
Labels:       app=myapp
Annotations:
Status:       Pending
IP:           10.244.2.33
IPs:
  IP:  10.244.2.33
Init Containers:
  init-myservice:
    Container ID:  docker://b875962d0c3f6c9c116b10c4a8f0ac18985ce3cc7189abd74f62a49b71b81f16
    Image:         busyboxplus
    Image ID:      docker-pullable://busyboxplus@sha256:ef538eae80f40015736f1ee308d74b4f38f74e978c65522ce64abdf8c8c5e0d6
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done
    State:          Running
      Started:      Tue, 23 Jun 2020 00:18:17 +0800
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-5qqxc (ro)
Containers:
  myapp-container:
    Container ID:
    Image:          myapp:v1
    Image ID:
    Port:           <none>
    Host Port:      <none>
    State:          Waiting
      Reason:       PodInitializing
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-5qqxc (ro)
Conditions:
  Type              Status
  Initialized       False
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  default-token-5qqxc:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-5qqxc
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  15s   default-scheduler  Successfully assigned default/myapp-pod to server3
  Normal  Pulling    14s   kubelet, server3   Pulling image "busyboxplus"
  Normal  Pulled     14s   kubelet, server3   Successfully pulled image "busyboxplus"
  Normal  Created    14s   kubelet, server3   Created container init-myservice
  Normal  Started    14s   kubelet, server3   Started container init-myservice
[kubeadm@server1 mainfest]$ vim service.yml
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
[kubeadm@server1 mainfest]$ kubectl apply -f service.yml
service/myservice created
[kubeadm@server1 mainfest]$ kubectl get pod -w
NAME        READY   STATUS            RESTARTS   AGE
myapp-pod   0/1     Init:0/1          0          5m11s
myapp-pod   0/1     PodInitializing   0          5m19s
myapp-pod   1/1     Running           0          5m20s
```

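2.2 Probes

Probes: a probe is a diagnostic that the kubelet performs periodically on a container by calling a handler implemented by the container. There are three types of handlers:

• ExecAction: executes a specified command inside the container. The diagnostic is considered successful if the command exits with status code 0.

• TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic is considered successful if the port is open.

• HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.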
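Each handler type corresponds directly to a field in a container's probe definition. In the hypothetical Pod below (the name and images are assumptions), the nginx container uses a TCP liveness check and an HTTP readiness check, and the busybox container uses a command-based liveness check.

```yaml
# Hypothetical Pod "probe-handlers-demo": one example of each handler type.
apiVersion: v1
kind: Pod
metadata:
  name: probe-handlers-demo
spec:
  containers:
  - name: web
    image: nginx:1.19
    livenessProbe:
      tcpSocket:                 # TCPSocketAction: passes if port 80 accepts a connection
        port: 80
      periodSeconds: 5
    readinessProbe:
      httpGet:                   # HTTPGetAction: passes on a status code from 200 to 399
        path: /
        port: 80
      periodSeconds: 5
  - name: worker
    image: busybox:1.28
    command: ['sh', '-c', 'touch /tmp/healthy && sleep 3600']
    livenessProbe:
      exec:                      # ExecAction: passes if the command exits with status 0
        command: ['cat', '/tmp/healthy']
      initialDelaySeconds: 5
      periodSeconds: 5
```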

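Each probe produces one of three results:

• Success: the container passed the diagnostic.

• Failure: the container failed the diagnostic.

• Unknown: the diagnostic itself failed, so no action is taken.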
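A single result does not change a container's state by itself. The kubelet counts consecutive results against thresholds. The sketch below uses a hypothetical Pod name and an assumed image, and spells out the timing and threshold fields. Every value except `initialDelaySeconds` is the Kubernetes default. The `kubectl describe` output in the liveness example later in this article prints the two thresholds as `#success=1 #failure=3`.

```yaml
# Hypothetical Pod "probe-thresholds-demo": probe timing and thresholds spelled out.
apiVersion: v1
kind: Pod
metadata:
  name: probe-thresholds-demo
spec:
  containers:
  - name: web
    image: nginx:1.19
    readinessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5     # wait 5 s after the container starts before the first probe
      periodSeconds: 10          # probe every 10 s
      timeoutSeconds: 1          # a probe that takes longer than 1 s counts as a failure
      successThreshold: 1        # 1 success after failing marks the container ready again
      failureThreshold: 3        # 3 consecutive failures mark it not ready
```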

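The kubelet can optionally run three kinds of probes on running containers and react to their results:

• livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy. If a container does not provide a liveness probe, the default state is Success.

• readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. Before the initial delay has elapsed, the readiness state defaults to Failure. If a container does not provide a readiness probe, the default state is Success.

• startupProbe: indicates whether the application in the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If a container does not provide a startup probe, the default state is Success.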
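For slow-starting applications, a startup probe can hold off the liveness probe until the app is up. This is a sketch under the following assumptions: the Pod name and image are hypothetical, and the cluster supports `startupProbe` (alpha in Kubernetes 1.16, beta and enabled by default from 1.18).

```yaml
# Hypothetical Pod "startup-probe-demo": liveness checks begin only after startup succeeds.
apiVersion: v1
kind: Pod
metadata:
  name: startup-probe-demo
spec:
  containers:
  - name: web
    image: nginx:1.19
    startupProbe:
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
      failureThreshold: 30       # allows up to 30 x 10 s = 300 s for startup
    livenessProbe:               # disabled until the startupProbe has succeeded once
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
```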

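When should you use liveness and readiness probes?

If the process in your container crashes on its own whenever it hits a problem or becomes unhealthy, you do not necessarily need a liveness probe. The kubelet automatically takes the correct action according to the Pod's restartPolicy.

If you want your container to be killed and restarted when a probe fails, specify a liveness probe and set restartPolicy to Always or OnFailure.

If you want to start sending traffic to a Pod only after a probe succeeds, specify a readiness probe. In this case the readiness probe might be the same as the liveness probe, but its presence in the spec means that the Pod starts without receiving any traffic and only begins receiving traffic once the probe succeeds.

If you want your container to be able to take itself down for maintenance, specify a readiness probe that checks a different endpoint from the liveness probe. Note that if you only want to drain requests when the Pod is deleted, you do not necessarily need a readiness probe. On deletion, the Pod automatically puts itself into an unready state whether or not a readiness probe exists, and it stays unready while it waits for its containers to stop.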
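The maintenance case can be sketched as a liveness probe that checks whether nginx is alive plus a separate readiness probe that checks for a flag file. The Pod name `maintenance-demo` and the flag path `/tmp/maintenance` are hypothetical choices and are not part of the original article.

```yaml
# Hypothetical Pod "maintenance-demo": liveness and readiness check different things.
apiVersion: v1
kind: Pod
metadata:
  name: maintenance-demo
  labels:
    app: maintenance-demo
spec:
  containers:
  - name: web
    image: nginx:1.19
    livenessProbe:               # "is nginx alive?" A failure restarts the container.
      tcpSocket:
        port: 80
      periodSeconds: 5
    readinessProbe:              # "should I get traffic?" A failure only removes the Pod from Service endpoints.
      exec:
        command: ['sh', '-c', 'test ! -f /tmp/maintenance']
      periodSeconds: 5
```

Running `kubectl exec maintenance-demo -- touch /tmp/maintenance` should change READY to `0/1` after three failed checks (about 15 s) while RESTARTS stays unchanged. Running `kubectl exec maintenance-demo -- rm /tmp/maintenance` should make the Pod ready again.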

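Restart policy

A PodSpec has a restartPolicy field whose possible values are Always, OnFailure, and Never. The default is Always. The policy applies to all containers in the Pod.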
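The sketch below can be used to try the three policies. The Pod name is hypothetical and the `busybox:1.28` image is an assumption.

```yaml
# Hypothetical Pod "restart-policy-demo": a container that always exits with code 1.
apiVersion: v1
kind: Pod
metadata:
  name: restart-policy-demo
spec:
  restartPolicy: OnFailure       # applies to every container in this Pod
  containers:
  - name: task
    image: busybox:1.28
    # Exits with code 1 after 5 s; change "exit 1" to "exit 0" to simulate success.
    command: ['sh', '-c', 'echo working; sleep 5; exit 1']
```

With `exit 1` and `OnFailure`, the kubelet should keep restarting the container with a growing back-off, and the Pod should eventually show `CrashLoopBackOff`. With `Never`, the Pod should stop in `Error`. With `exit 0`, both `OnFailure` and `Never` leave it `Completed`, while `Always` restarts it even after a successful exit.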

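Pod lifetime

In general, Pods do not disappear until someone destroys them, either a person or a controller.

It is recommended to create Pods through an appropriate controller rather than creating them directly. A standalone Pod cannot recover on its own after a machine failure, whereas a controller can replace it.

Three kinds of controllers are available:

• Use a Job for Pods that are expected to terminate, such as batch computations. Jobs are appropriate only for Pods whose restartPolicy is OnFailure or Never.

• Use a ReplicationController, ReplicaSet, or Deployment for Pods that are not expected to terminate, such as web servers. A ReplicationController is appropriate only for Pods whose restartPolicy is Always.

• Use a DaemonSet to run one Pod per machine for Pods that provide a machine-specific system service.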
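A Job and a DaemonSet can be sketched as follows. A Deployment appears in the readiness example later in this article. The object names and the `busybox:1.28` image are assumptions. The Job's Pod template sets `restartPolicy: Never`, as the rule above requires, while the DaemonSet uses the default `Always`. On a kubeadm cluster the control-plane node is usually tainted, so the DaemonSet will typically run only on worker nodes unless a toleration is added.

```yaml
# Hypothetical Job "batch-once": runs one Pod to completion.
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-once
spec:
  backoffLimit: 4                # give up after 4 retries
  template:
    spec:
      restartPolicy: Never       # Jobs only accept Never or OnFailure
      containers:
      - name: compute
        image: busybox:1.28
        command: ['sh', '-c', 'echo computing; sleep 10; echo done']
---
# Hypothetical DaemonSet "node-logger": one Pod on each schedulable node.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-logger
spec:
  selector:
    matchLabels:
      app: node-logger
  template:
    metadata:
      labels:
        app: node-logger         # must match spec.selector.matchLabels
    spec:
      containers:
      - name: logger
        image: busybox:1.28
        command: ['sh', '-c', 'while true; do echo "running on $NODE_NAME"; sleep 60; done']
        env:
        - name: NODE_NAME        # injected from the Pod's spec.nodeName
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
```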

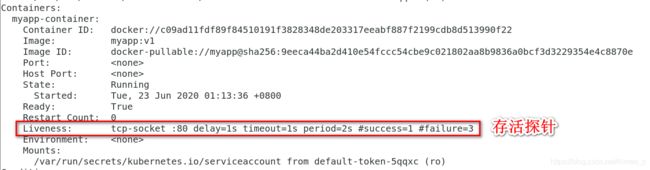

1. Liveness Probe Example

```
[kubeadm@server1 mainfest]$ vim init.yml
[kubeadm@server1 mainfest]$ cat init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  initContainers:
  - name: init-myservice
    image: busybox:1.28
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  containers:
  - name: myapp-container
    image: myapp:v1
    imagePullPolicy: IfNotPresent
    livenessProbe:
      tcpSocket:                 # healthy while port 80 accepts TCP connections
        port: 80
      initialDelaySeconds: 1     # first probe 1 s after the container starts
      periodSeconds: 2           # then probe every 2 s
      timeoutSeconds: 1          # a probe taking longer than 1 s counts as failed
```

```
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
[kubeadm@server1 mainfest]$ kubectl apply -f init.yml
pod/myapp-pod created
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          3m35s
[kubeadm@server1 mainfest]$ kubectl apply -f service.yml
service/myservice created
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          3m46s
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          3m54s
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          4m
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   0          4m13s
[kubeadm@server1 mainfest]$ kubectl describe pod myapp-pod
Name:         myapp-pod
Namespace:    default
Priority:     0
Node:         server3/172.25.1.3
Start Time:   Tue, 23 Jun 2020 01:09:33 +0800
Labels:       app=myapp
Annotations:
Status:       Running
IP:           10.244.2.34
IPs:
  IP:  10.244.2.34
Init Containers:
  init-myservice:
    Container ID:  docker://fc21c78635243d0e9dd380a4330707b21d4eb21d8bf42f33455d7f5c5822d312
    Image:         busybox:1.28
    Image ID:      docker-pullable://busybox@sha256:141c253bc4c3fd0a201d32dc1f493bcf3fff003b6df416dea4f41046e0f37d47
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Tue, 23 Jun 2020 01:09:51 +0800
      Finished:     Tue, 23 Jun 2020 01:13:36 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-5qqxc (ro)
Containers:
  myapp-container:
    Container ID:   docker://c09ad11fdf89f84510191f3828348de203317eeabf887f2199cdb8d513990f22
    Image:          myapp:v1
    Image ID:       docker-pullable://myapp@sha256:9eeca44ba2d410e54fccc54cbe9c021802aa8b9836a0bcf3d3229354e4c8870e
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Tue, 23 Jun 2020 01:13:36 +0800
    Ready:          True
    Restart Count:  0
    Liveness:       tcp-socket :80 delay=1s timeout=1s period=2s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-5qqxc (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-5qqxc:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-5qqxc
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  4m38s  default-scheduler  Successfully assigned default/myapp-pod to server3
  Normal  Pulling    4m37s  kubelet, server3   Pulling image "busybox:1.28"
  Normal  Pulled     4m20s  kubelet, server3   Successfully pulled image "busybox:1.28"
  Normal  Created    4m20s  kubelet, server3   Created container init-myservice
  Normal  Started    4m20s  kubelet, server3   Started container init-myservice
  Normal  Pulled     35s    kubelet, server3   Container image "myapp:v1" already present on machine
  Normal  Created    35s    kubelet, server3   Created container myapp-container
  Normal  Started    35s    kubelet, server3   Started container myapp-container
[kubeadm@server1 mainfest]$ kubectl exec -it myapp-pod -- sh
/ # nginx -s stop
2020/06/22 17:17:15 [notice] 12#12: signal process started
/ # command terminated with exit code 137
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   1          7m46s
[kubeadm@server1 mainfest]$ kubectl exec -it myapp-pod -- sh
/ # nginx -s stop
2020/06/22 17:17:29 [notice] 12#12: signal process started
/ # command terminated with exit code 137
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS             RESTARTS   AGE
myapp-pod   0/1     CrashLoopBackOff   1          8m4s
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   2          8m13s
```

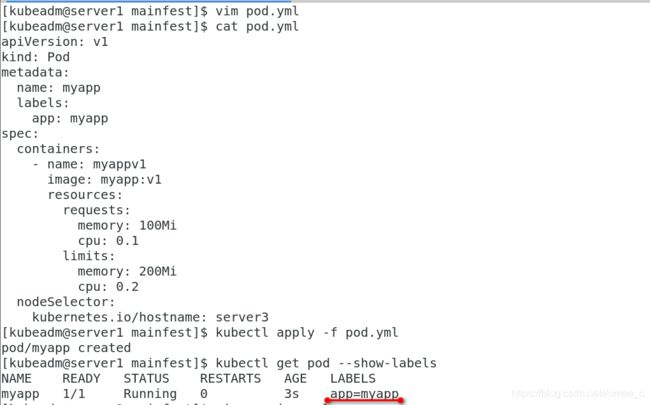

2. Readiness Probe Examples

Example 1:

```
[kubeadm@server1 mainfest]$ vim pod.yml
[kubeadm@server1 mainfest]$ cat pod.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp
  labels:
    app: myapp
spec:
  containers:
  - name: myappv1
    image: myapp:v1
    resources:
      requests:
        memory: 100Mi
        cpu: 0.1
      limits:
        memory: 200Mi
        cpu: 0.2
  nodeSelector:
    kubernetes.io/hostname: server3
[kubeadm@server1 mainfest]$ kubectl apply -f pod.yml
pod/myapp created
[kubeadm@server1 mainfest]$ kubectl get pod --show-labels
NAME    READY   STATUS    RESTARTS   AGE   LABELS
myapp   1/1     Running   0          3s    app=myapp
```

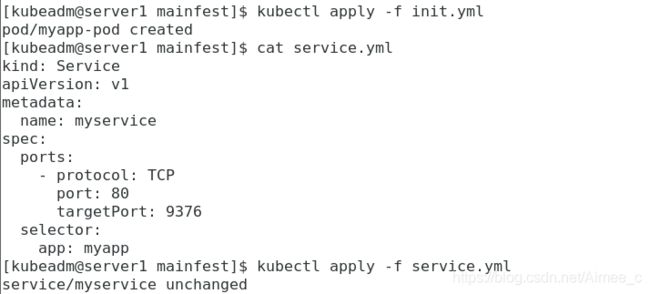

```
[kubeadm@server1 mainfest]$ vim service.yml
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
  selector:
    app: myapp
[kubeadm@server1 mainfest]$ kubectl apply -f service.yml
service/myservice configured
[kubeadm@server1 mainfest]$ kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP   6d23h
myservice    ClusterIP   10.110.113.147   <none>        80/TCP    3d1h
[kubeadm@server1 mainfest]$ kubectl get pod -o wide
NAME    READY   STATUS    RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
myapp   1/1     Running   0          80s   10.244.2.37   server3   <none>           <none>
[kubeadm@server1 mainfest]$ kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.110.113.147
Port:              <unset>  80/TCP
TargetPort:        9376/TCP
Endpoints:         10.244.2.37:9376
Session Affinity:  None
Events:            <none>
```

Example 2

```
[kubeadm@server1 mainfest]$ vim init.yml
[kubeadm@server1 mainfest]$ cat init.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  initContainers:
  - name: init-myservice
    image: busybox:1.28
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  containers:
  - name: myapp-container
    image: myapp:v1
    imagePullPolicy: IfNotPresent
    livenessProbe:
      tcpSocket:                 # restart the container if port 80 stops accepting connections
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 2
      timeoutSeconds: 1
    readinessProbe:
      httpGet:                   # only ready while GET /hostname.html returns 200-399
        path: /hostname.html
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 1
```

```
[kubeadm@server1 mainfest]$ kubectl apply -f init.yml
pod/myapp-pod created
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
  selector:
    app: myapp
[kubeadm@server1 mainfest]$ kubectl apply -f service.yml
service/myservice unchanged
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   0          32s
[kubeadm@server1 mainfest]$ kubectl exec -it myapp-pod -- sh
/ # cd /etc/nginx/conf.d/
/etc/nginx/conf.d # ls
default.conf
/etc/nginx/conf.d # vi default.conf
/etc/nginx/conf.d # nginx -s reload
2020/06/25 19:22:43 [notice] 14#14: signal process started
/etc/nginx/conf.d # ps ax
PID   USER     TIME  COMMAND
    1 root      0:00 nginx: master process nginx -g daemon off;
    7 root      0:00 sh
   15 nginx     0:00 nginx: worker process
   16 root      0:00 ps ax
/etc/nginx/conf.d #
[kubeadm@server1 mainfest]$ kubectl get pod
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   0/1     Running   0          4m11s
[kubeadm@server1 mainfest]$ kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.110.113.147
Port:              <unset>  80/TCP
TargetPort:        9376/TCP
Endpoints:
Session Affinity:  None
Events:            <none>
```

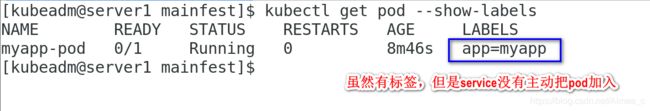

```
[kubeadm@server1 mainfest]$ kubectl get pod --show-labels
NAME        READY   STATUS    RESTARTS   AGE     LABELS
myapp-pod   0/1     Running   0          8m46s   app=myapp
[kubeadm@server1 mainfest]$ kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
myapp-pod   0/1     Running   0          10m   10.244.1.27   server2   <none>           <none>
[kubeadm@server1 mainfest]$ kubectl run demo --image=busyboxplus -it --restart=Never
If you don't see a command prompt, try pressing enter.
[ root@demo:/ ]$ curl 10.244.1.27
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.244.1.27/hostname.html
<html>
<head><title>404 Not Found</title></head>
<body bgcolor="white">
<center><h1>404 Not Found</h1></center>
<hr><center>nginx/1.12.2</center>
</body>
</html>
[ root@demo:/ ]$
[kubeadm@server1 mainfest]$
```

The readiness probe requests /hostname.html, which now returns 404. The probe therefore fails: the Pod keeps running but is reported as 0/1 ready, and its IP is removed from the Endpoints of myservice, even though the container still answers on /.

```
[kubeadm@server1 mainfest]$ cat service.yml
kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
[kubeadm@server1 mainfest]$ cat pod2.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  # Unique key of the Deployment instance
  name: deployment-example
spec:
  # 2 Pods should exist at all times.
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        # Apply this label to the Pods; in apps/v1 it must match
        # spec.selector.matchLabels above
        app: myapp
    spec:
      containers:
      - name: myapp
        # Run this image
        image: myapp:v2
```

```
[kubeadm@server1 mainfest]$ kubectl apply -f service.yml
service/myservice created
[kubeadm@server1 mainfest]$ vim pod2.yml
[kubeadm@server1 mainfest]$ kubectl apply -f pod2.yml
deployment.apps/deployment-example unchanged
[kubeadm@server1 mainfest]$ kubectl get pod
NAME                                  READY   STATUS    RESTARTS   AGE
deployment-example-67764dd8bd-rbl7v   1/1     Running   0          5m47s
deployment-example-67764dd8bd-shwvk   1/1     Running   0          5m47s
myapp-pod                             1/1     Running   0          2m35s
[kubeadm@server1 mainfest]$ kubectl describe svc myservice
Name:              myservice
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp
Type:              ClusterIP
IP:                10.110.155.170
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.28:80,10.244.2.41:80,10.244.2.43:80
Session Affinity:  None
Events:            <none>
[kubeadm@server1 mainfest]$ kubectl run demo --image=busyboxplus -it --restart=Never
If you don't see a command prompt, try pressing enter.
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
[ root@demo:/ ]$ curl 10.110.155.170
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
```

All three Pods carry the label app=myapp and are ready, so all three appear in the Service's Endpoints. The Service load-balances requests across them, returning v1 from myapp-pod and v2 from the two Deployment Pods.
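The Deployment in pod2.yml defines no probes, so its Pods join the Service endpoints as soon as their containers start. Below is a sketch of the same Deployment with the readiness probe from Example 2 copied into the Pod template. It assumes the `myapp:v2` image also serves `/hostname.html`. With this probe in place, any replica that fails the check should be kept out of rotation in the same way myapp-pod was.

```yaml
# Hypothetical variant of pod2.yml: readiness probe added to the Pod template.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp               # must match spec.selector.matchLabels
    spec:
      containers:
      - name: myapp
        image: myapp:v2
        readinessProbe:
          httpGet:               # same check as in readiness Example 2
            path: /hostname.html
            port: 80
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 1
```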