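Proxying gRPC with ingress-nginx

- Proxying the Dgraph gRPC service through an Ingress

- Setting up the Dgraph service

- Configuring the UI

- Connecting to Dgraph with the Java client

- Overview

- Generating the certificate and key

- Accessing Dgraph from Java

- Error examples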

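Proxying the Dgraph gRPC service through an Ingress

I have recently been working with the graph database Dgraph. I chose to bring the whole database up on Kubernetes and to proxy its gRPC port through ingress-nginx. Why gRPC? Because dgraph-io/dgraph4j, the Java client, speaks gRPC.

Setting up the Dgraph service

Use the YAML from the official documentation and bring the cluster up directly: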

# This highly available config creates 3 Dgraph Zeros, 3 Dgraph
# Alphas with 3 replicas, and 1 Ratel UI client. The Dgraph cluster
# will still be available to service requests even when one Zero
# and/or one Alpha are down.
#
# There are 3 services that can be used to expose outside the cluster as needed:
#   dgraph-zero-public - To load data using Live & Bulk Loaders
#   dgraph-alpha-public - To connect clients and for HTTP APIs
#   dgraph-ratel-public - For Dgraph UI
apiVersion: v1
kind: Namespace
metadata:
  name: dgraph
---
apiVersion: v1
kind: Service
metadata:
  name: dgraph-zero-public
  namespace: dgraph
  labels:
    app: dgraph-zero
    monitor: zero-dgraph-io
spec:
  type: ClusterIP
  ports:
  - port: 5080
    targetPort: 5080
    name: grpc-zero
  - port: 6080
    targetPort: 6080
    name: http-zero
  selector:
    app: dgraph-zero
---
apiVersion: v1
kind: Service
metadata:
  name: dgraph-alpha-public
  namespace: dgraph
  labels:
    app: dgraph-alpha
    monitor: alpha-dgraph-io
spec:
  type: NodePort
  ports:
  - port: 8080
    targetPort: 8080
    name: http-alpha
    nodePort: 32678
  - port: 9080
    targetPort: 9080
    name: grpc-alpha
  selector:
    app: dgraph-alpha
---
apiVersion: v1
kind: Service
metadata:
  name: dgraph-ratel-public
  namespace: dgraph
  labels:
    app: dgraph-ratel
spec:
  type: ClusterIP
  ports:
  - port: 8000
    targetPort: 8000
    name: http-ratel
  selector:
    app: dgraph-ratel
---
# This is a headless service which is necessary for discovery for a dgraph-zero StatefulSet.
# https://kubernetes.io/docs/tutorials/stateful-application/basic-stateful-set/#creating-a-statefulset
apiVersion: v1
kind: Service
metadata:
  name: dgraph-zero
  namespace: dgraph
  labels:
    app: dgraph-zero
spec:
  ports:
  - port: 5080
    targetPort: 5080
    name: grpc-zero
  clusterIP: None
  # We want all pods in the StatefulSet to have their addresses published for
  # the sake of the other Dgraph Zero pods even before they're ready, since they
  # have to be able to talk to each other in order to become ready.
  publishNotReadyAddresses: true
  selector:
    app: dgraph-zero
---
# This is a headless service which is necessary for discovery for a dgraph-alpha StatefulSet.
# https://kubernetes.io/docs/tutorials/stateful-application/basic-stateful-set/#creating-a-statefulset
apiVersion: v1
kind: Service
metadata:
  name: dgraph-alpha
  namespace: dgraph
  labels:
    app: dgraph-alpha
spec:
  ports:
  - port: 7080
    targetPort: 7080
    name: grpc-alpha-int
  clusterIP: None
  # We want all pods in the StatefulSet to have their addresses published for
  # the sake of the other Dgraph alpha pods even before they're ready, since they
  # have to be able to talk to each other in order to become ready.
  publishNotReadyAddresses: true
  selector:
    app: dgraph-alpha
---

# This StatefulSet runs 3 Dgraph Zero.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: dgraph-zero
  namespace: dgraph
spec:
  serviceName: "dgraph-zero"
  replicas: 3
  selector:
    matchLabels:
      app: dgraph-zero
  template:
    metadata:
      labels:
        app: dgraph-zero
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - dgraph-zero
              topologyKey: kubernetes.io/hostname
      containers:
      - name: zero
        image: dgraph/dgraph:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 5080
          name: grpc-zero
        - containerPort: 6080
          name: http-zero
        volumeMounts:
        - name: datadir
          mountPath: /dgraph
        env:
          - name: POD_NAMESPACE
            valueFrom:
              fieldRef:
                fieldPath: metadata.namespace
        command:
          - bash
          - "-c"
          - |
            set -ex
            [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
            ordinal=${BASH_REMATCH[1]}
            idx=$(($ordinal + 1))
            if [[ $ordinal -eq 0 ]]; then
              exec dgraph zero --my=$(hostname -f):5080 --idx $idx --replicas 3
            else
              exec dgraph zero --my=$(hostname -f):5080 --peer dgraph-zero-0.dgraph-zero.${POD_NAMESPACE}.svc.cluster.local:5080 --idx $idx --replicas 3
            fi
        livenessProbe:
          httpGet:
            path: /health
            port: 6080
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 5
          failureThreshold: 6
          successThreshold: 1
        readinessProbe:
          httpGet:
            path: /health
            port: 6080
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 5
          failureThreshold: 6
          successThreshold: 1
      terminationGracePeriodSeconds: 60
      volumes:
      - name: datadir
        persistentVolumeClaim:
          claimName: datadir
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.alpha.kubernetes.io/storage-class: ceph-sc
      spec:
        storageClassName: ceph-sc
        accessModes:
          - "ReadWriteOnce"
        resources:
          requests:
            storage: 5Gi
---

# This StatefulSet runs 3 replicas of Dgraph Alpha.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: dgraph-alpha
  namespace: dgraph
spec:
  serviceName: "dgraph-alpha"
  replicas: 3
  selector:
    matchLabels:
      app: dgraph-alpha
  template:
    metadata:
      labels:
        app: dgraph-alpha
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - dgraph-alpha
              topologyKey: kubernetes.io/hostname
      # Initializing the Alphas:
      #
      # You may want to initialize the Alphas with data before starting, e.g.
      # with data from the Dgraph Bulk Loader: https://dgraph.io/docs/deploy/#bulk-loader.
      # You can accomplish this by uncommenting this initContainers config. This
      # starts a container with the same /dgraph volume used by Alpha and runs
      # before Alpha starts.
      #
      # You can copy your local p directory to the pod's /dgraph/p directory
      # with this command:
      #
      #    kubectl cp path/to/p dgraph-alpha-0:/dgraph/ -c init-alpha
      #    (repeat for each alpha pod)
      #
      # When you're finished initializing each Alpha data directory, you can signal
      # it to terminate successfully by creating a /dgraph/doneinit file:
      #
      #    kubectl exec dgraph-alpha-0 -c init-alpha -- touch /dgraph/doneinit
      #
      # Note that pod restarts cause re-execution of Init Containers. Since
      # /dgraph is persisted across pod restarts, the Init Container will exit
      # automatically when /dgraph/doneinit is present and proceed with starting
      # the Alpha process.
      #
      # Tip: StatefulSet pods can start in parallel by configuring
      # .spec.podManagementPolicy to Parallel:
      #
      #    https://kubernetes.io/docs/concepts/workloads/controllers/statefulset/#deployment-and-scaling-guarantees
      #
      # initContainers:
      #   - name: init-alpha
      #     image: dgraph/dgraph:latest
      #     command:
      #       - bash
      #       - "-c"
      #       - |
      #         trap "exit" SIGINT SIGTERM
      #         echo "Write to /dgraph/doneinit when ready."
      #         until [ -f /dgraph/doneinit ]; do sleep 2; done
      #     volumeMounts:
      #       - name: datadir
      #         mountPath: /dgraph
      containers:
      - name: alpha
        image: dgraph/dgraph:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 7080
          name: grpc-alpha-int
        - containerPort: 8080
          name: http-alpha
        - containerPort: 9080
          name: grpc-alpha
        volumeMounts:
        - name: datadir
          mountPath: /dgraph
        env:
          # This should be the same namespace as the dgraph-zero
          # StatefulSet to resolve a Dgraph Zero's DNS name for
          # Alpha's --zero flag.
          - name: POD_NAMESPACE
            valueFrom:
              fieldRef:
                fieldPath: metadata.namespace
        # dgraph versions earlier than v1.2.3 and v20.03.0 can only support one zero:
        #  `dgraph alpha --zero dgraph-zero-0.dgraph-zero.${POD_NAMESPACE}.svc.cluster.local:5080`
        # dgraph-alpha versions greater than or equal to v1.2.3 or v20.03.1 can support
        #  a comma-separated list of zeros. The value below supports 3 zeros
        #  (set according to number of replicas)
        command:
          - bash
          - "-c"
          - |
            set -ex
            dgraph alpha --my=$(hostname -f):7080 --whitelist 10.2.0.0/16,192.168.0.0/16 --lru_mb 2048 --zero dgraph-zero-0.dgraph-zero.${POD_NAMESPACE}.svc.cluster.local:5080,dgraph-zero-1.dgraph-zero.${POD_NAMESPACE}.svc.cluster.local:5080,dgraph-zero-2.dgraph-zero.${POD_NAMESPACE}.svc.cluster.local:5080
        livenessProbe:
          httpGet:
            path: /health?live=1
            port: 8080
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 5
          failureThreshold: 6
          successThreshold: 1
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 5
          failureThreshold: 6
          successThreshold: 1
      terminationGracePeriodSeconds: 600
      volumes:
      - name: datadir
        persistentVolumeClaim:
          claimName: datadir
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.alpha.kubernetes.io/storage-class: ceph-sc
      spec:
        storageClassName: ceph-sc
        accessModes:
          - "ReadWriteOnce"
        resources:
          requests:
            storage: 5Gi
---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dgraph-ratel
  namespace: dgraph
  labels:
    app: dgraph-ratel
spec:
  selector:
    matchLabels:
      app: dgraph-ratel
  template:
    metadata:
      labels:
        app: dgraph-ratel
    spec:
      containers:
      - name: ratel
        image: dgraph/dgraph:latest
        ports:
        - containerPort: 8000
        command:
          - dgraph-ratel

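Once everything is up, you will see the following:

zero is the management node

alpha is the data node

ratel is the bundled UI

There are three Services with the -public suffix; these are the ones meant to be exposed outside the cluster. This article mainly uses the alpha and ratel ones.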
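The start commands in the manifest depend on the stable names that a StatefulSet gives its pods: each Zero derives its --idx from the ordinal at the end of its hostname, and each Alpha is handed one DNS name per Zero. The following Java sketch reproduces those two derivations so the naming scheme is easier to see. The class and method names are hypothetical; the service name dgraph-zero, port 5080 and the svc.cluster.local suffix are taken from the manifest above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of Dgraph or dgraph4j.
class DgraphAddresses {
    // Same pattern as the bash test  [[ `hostname` =~ -([0-9]+)$ ]]  in the Zero script.
    private static final Pattern ORDINAL = Pattern.compile("-([0-9]+)$");

    /** Returns the StatefulSet ordinal encoded at the end of a pod hostname. */
    static int ordinalOf(String podHostname) {
        Matcher m = ORDINAL.matcher(podHostname);
        if (!m.find()) {
            throw new IllegalArgumentException("no ordinal in " + podHostname);
        }
        return Integer.parseInt(m.group(1));
    }

    /** Value passed to Zero's --idx flag: ordinal + 1, as in the script. */
    static int zeroIdx(String podHostname) {
        return ordinalOf(podHostname) + 1;
    }

    /** Comma-separated value for Alpha's --zero flag, one entry per Zero replica. */
    static String zeroList(String namespace, int replicas) {
        List<String> addrs = new ArrayList<>();
        for (int i = 0; i < replicas; i++) {
            addrs.add("dgraph-zero-" + i + ".dgraph-zero." + namespace
                    + ".svc.cluster.local:5080");
        }
        return String.join(",", addrs);
    }

    public static void main(String[] args) {
        System.out.println(zeroIdx("dgraph-zero-2")); // prints 3
        System.out.println(zeroList("dgraph", 3));
    }
}
```

If you change the number of Zero replicas, the --zero list in the Alpha command has to be extended to match, which is what zeroList("dgraph", n) models.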

Configuring the UI

Configure the ingress-nginx proxy (HTTP)

The configuration file ingress.yaml looks like this:

# Note: extensions/v1beta1 was removed in Kubernetes 1.22. Newer clusters
# need networking.k8s.io/v1, which uses a different backend syntax.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: dgraph-ingress
  namespace: dgraph
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$1
spec:
  rules:
  - host: dgraph.lenovo.com
    http:
      paths:
      - path: /(.*)
        backend:
          serviceName: dgraph-ratel-public
          servicePort: 8000
      - path: /zero/(.*)
        backend:
          serviceName: dgraph-zero-public
          servicePort: 6080
      - path: /alpha/(.*)
        backend:
          serviceName: dgraph-alpha-public
          servicePort: 8080

kubectl apply -f ingress.yaml
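The rewrite-target annotation is what lets three services share one hostname: the capture group in each path is substituted for $1 before the request is forwarded, so the /alpha or /zero prefix never reaches the backend. As a rough illustration, the Java sketch below models a single rule with java.util.regex. The class and method names are hypothetical, and the model is simplified: it ignores how the controller chooses between overlapping rules such as /(.*) and /alpha/(.*).

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, simplified model of one Ingress path rule with rewrite-target.
class RewriteSketch {
    /**
     * Returns the path forwarded to the backend if requestPath matches pathRegex,
     * or null if this rule does not apply.
     */
    static String rewrite(String pathRegex, String rewriteTarget, String requestPath) {
        Matcher m = Pattern.compile(pathRegex).matcher(requestPath);
        if (!m.matches()) {
            return null;
        }
        // Java replacement strings use the same $1 group syntax as the annotation.
        return m.replaceFirst(rewriteTarget);
    }

    public static void main(String[] args) {
        System.out.println(rewrite("/alpha/(.*)", "/$1", "/alpha/health")); // prints /health
        System.out.println(rewrite("/zero/(.*)", "/$1", "/zero/health"));   // prints /health
        System.out.println(rewrite("/(.*)", "/$1", "/index.html"));         // prints /index.html
    }
}
```

In other words, a request to http://dgraph.lenovo.com/alpha/health arrives at the Alpha service as /health.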

配置hosts:

10.110.149.173 dgraph.lenovo.com

10.110.149.173 grpc.dgraph.lenovo.com

在浏览器上面访问:http://dgraph.lenovo.com/

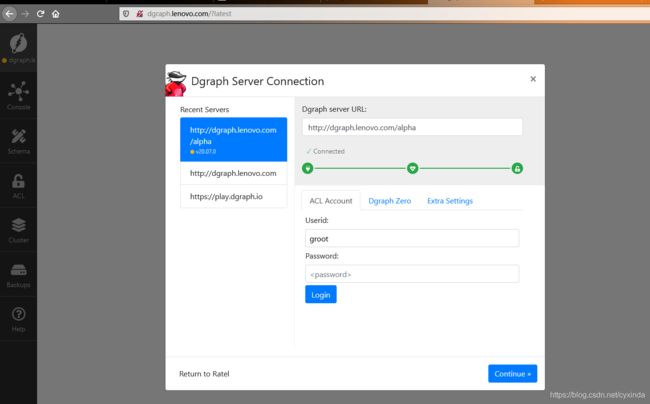

选择中间的

然后可以看到:

配置Draph server URL:http://dgraph.lenovo.com/alpha

点击connect,连接成功,然后就可以愉快的使用dgraph了。

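Connecting to Dgraph with the Java client

Reference: https://blog.csdn.net/weixin_38166686/article/details/102452691

Overview

Typically a system exposes HTTP/HTTPS interfaces externally and uses RPC (gRPC) internally. In that setup, services reach each other's gRPC endpoints through Kubernetes Services, and local debugging goes through a Service nodePort.

As the business grows, you may need gRPC calls across clusters, or you may have so many pods that nodePort allocation becomes unmanageable. At that point it is worth using an Ingress to manage and expose gRPC services in one place.

This article describes in detail how to load-balance a gRPC service with an Ingress on Kubernetes.

- First, an important caveat: ingress-nginx cannot proxy plaintext gRPC (surprisingly, the official documentation does not state this explicitly, which makes it a real trap; search the GitHub issues for details), so gRPC has to be served over TLS on port 443.

Generating the certificate and key

As noted above, the Ingress only proxies encrypted gRPC on port 443, so you need a certificate and a private key before setting anything up.

Here we simply generate a self-signed pair. The following command writes both files into the current directory: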

[root@po-jenkins crt]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout ca.key -out ca.crt -subj "/CN=grpc.dgraph.lenovo.com/O=grpc.dgraph.lenovo.com"

Generating a 2048 bit RSA private key

.....................+++

....+++

writing new private key to 'ca.key'

-----

[root@po-jenkins crt]# ls

ca.crt ca.key

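Parameter notes:

- ca.key: the private key file

- ca.crt: the self-signed certificate file (it contains the public key)

- grpc.dgraph.lenovo.com: the hostname written into the certificate's CN. Every hostname served with this key pair has to match it; because the CN here is not a wildcard, clients must connect to exactly grpc.dgraph.lenovo.com.

Create the Kubernetes secret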

[root@po-jenkins crt]# kubectl create secret tls grpc-secret --key ca.key --cert ca.crt -n dgraph

secret/grpc-secret created
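A secret created with kubectl create secret tls stores the certificate and key under the keys tls.crt and tls.key, and the API returns their values base64-encoded. If you want to double check that the secret really holds the certificate you just generated, a small helper along the following lines can decode a value copied from the output of kubectl get secret grpc-secret -n dgraph -o yaml. This is only a sketch; the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper for inspecting values copied out of a TLS secret.
class SecretDataSketch {
    /** Decodes one base64 value from the secret's data map into PEM text. */
    static String decodePem(String base64Value) {
        byte[] raw = Base64.getDecoder().decode(base64Value.trim());
        return new String(raw, StandardCharsets.US_ASCII);
    }

    /** True if the decoded text starts like a PEM certificate (the tls.crt entry should). */
    static boolean looksLikeCertificate(String pem) {
        return pem.startsWith("-----BEGIN CERTIFICATE-----");
    }
}
```

The decoded tls.crt text should be identical to the contents of ca.crt.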

Edit grpc-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grpc-ingress
  namespace: dgraph
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/backend-protocol: "GRPC"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  tls:
  - secretName: grpc-secret
    hosts:
    - grpc.dgraph.lenovo.com
  rules:
  - host: grpc.dgraph.lenovo.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dgraph-alpha-public
          servicePort: 9080

Create the gRPC ingress

[root@po-jenkins crt]# kubectl apply -f grpc-ingress.yaml

ingress.extensions/grpc-ingress created

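Parameter notes:

- Enable nginx: kubernetes.io/ingress.class: "nginx"

- Enable TLS; pick one of the following two options:

  - nginx terminates TLS and talks plaintext gRPC to the service: nginx.ingress.kubernetes.io/ssl-redirect: "true" together with nginx.ingress.kubernetes.io/backend-protocol: "GRPC"

  - The service implements TLS itself and nginx only routes: nginx.ingress.kubernetes.io/backend-protocol: "GRPCS"

- Hostname: rules.host and tls.hosts are the Ingress hostname of the gRPC service. You can choose it freely, but it must match the name in the certificate generated earlier.

- Service: the gRPC service name and port are set under rules.http.paths.backend

- Key pair: tls.secretName points to the grpc-secret created earlier

Note: when installing ingress-nginx, it is best to use the official YAML files; a customized configuration may conflict with the settings above.

Accessing Dgraph from Java

The client code is as follows: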

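import io.dgraph.DgraphClient;
import io.dgraph.DgraphGrpc;
import io.dgraph.DgraphProto;
import io.grpc.ManagedChannel;
import io.grpc.netty.GrpcSslContexts;
import io.grpc.netty.NettyChannelBuilder;
import io.netty.handler.ssl.SslContext;
import io.netty.handler.ssl.SslContextBuilder;
import java.io.File;
import java.util.HashMap;
import java.util.Map;
import javax.net.ssl.SSLException;

public String dgraph() throws SSLException {
    // Trust the self-signed certificate generated earlier.
    SslContextBuilder builder = GrpcSslContexts.forClient();
    builder.trustManager(new File("E:/engine/workspaces/crt/ca.crt"));
    SslContext sslContext = builder.build();
    // The host must match the certificate; the Ingress serves gRPC over TLS on 443.
    ManagedChannel channel = NettyChannelBuilder.forAddress("grpc.dgraph.lenovo.com", 443)
            .sslContext(sslContext)
            .build();
    DgraphGrpc.DgraphStub stub = DgraphGrpc.newStub(channel);
    DgraphClient dgraphClient = new DgraphClient(stub);
    // Return the name of every node that has a name predicate.
    String query = "{ findNames(func: has(name)){ name } }";
    Map<String, String> vars = new HashMap<>();
    DgraphProto.Response response = dgraphClient.newReadOnlyTransaction().queryWithVars(query, vars);
    String result = response.getJson().toStringUtf8();
    System.out.println(result);
    return result;
}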

Result of the call:

{
  "findNames": [
    { "name": "pre_processed_data" },
    { "name": "annotated_data" },
    { "name": "raw_data" },
    { "name": "bu_data" },
    { "name": "bot_data_platform" },
    { "name": "web_crawler" }
  ]
}

Error examples

The hostname the client connects to must be identical to the hostname the certificate was generated for. Keep this in mind; otherwise you will see an error such as:

Aug 12, 2020 2:29:00 PM org.apache.catalina.core.ApplicationContext log

INFO: Initializing Spring DispatcherServlet 'dispatcherServlet'

2020-08-12 14:29:00,963: Initializing Servlet 'dispatcherServlet'

2020-08-12 14:29:00,975: Completed initialization in 11 ms

Aug 12, 2020 2:29:03 PM org.apache.catalina.core.StandardWrapperValve invoke

SEVERE: Servlet.service() for servlet [dispatcherServlet] in context with path [] threw exception [Request processing failed; nested exception is java.lang.RuntimeException: java.util.concurrent.CompletionException: java.lang.RuntimeException: The doRequest encountered an execution exception:] with root cause

sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

at java.base/sun.security.provider.certpath.SunCertPathBuilder.build(SunCertPathBuilder.java:141)

at java.base/sun.security.provider.certpath.SunCertPathBuilder.engineBuild(SunCertPathBuilder.java:126)

at java.base/java.security.cert.CertPathBuilder.build(CertPathBuilder.java:297)

at java.base/sun.security.validator.PKIXValidator.doBuild(PKIXValidator.java:379)

at java.base/sun.security.validator.PKIXValidator.engineValidate(PKIXValidator.java:289)

at java.base/sun.security.validator.Validator.validate(Validator.java:264)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:285)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:144)

at io.netty.handler.ssl.ReferenceCountedOpenSslClientContext$ExtendedTrustManagerVerifyCallback.verify(ReferenceCountedOpenSslClientContext.java:255)

at io.netty.handler.ssl.ReferenceCountedOpenSslContext$AbstractCertificateVerifier.verify(ReferenceCountedOpenSslContext.java:701)

at io.netty.internal.tcnative.SSL.readFromSSL(Native Method)

at io.netty.handler.ssl.ReferenceCountedOpenSslEngine.readPlaintextData(ReferenceCountedOpenSslEngine.java:593)

at io.netty.handler.ssl.ReferenceCountedOpenSslEngine.unwrap(ReferenceCountedOpenSslEngine.java:1176)

at io.netty.handler.ssl.ReferenceCountedOpenSslEngine.unwrap(ReferenceCountedOpenSslEngine.java:1293)

at io.netty.handler.ssl.ReferenceCountedOpenSslEngine.unwrap(ReferenceCountedOpenSslEngine.java:1336)

at io.netty.handler.ssl.SslHandler$SslEngineType$1.unwrap(SslHandler.java:204)

at io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1332)

at io.netty.handler.ssl.SslHandler.decodeJdkCompatible(SslHandler.java:1227)

at io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1274)

at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:503)

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:442)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:281)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1422)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:931)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:700)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:635)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:552)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:514)

at io.netty.util.concurrent.SingleThreadEventExecutor$6.run(SingleThreadEventExecutor.java:1050)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.base/java.lang.Thread.run(Thread.java:830)

or

No subject alternative DNS name matching XXXX found
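Both errors mean the client could not validate the server certificate for the name it dialed. To illustrate only the name-matching part, here is a deliberately simplified Java sketch of the usual rule: a certificate name matches a hostname if the two are equal ignoring case, or if the certificate name starts with a "*." wildcard that stands for exactly one leading label. The class and method names are hypothetical, and real TLS stacks such as the JDK and Netty apply more rules than this (for example, they check subject alternative name entries).

```java
import java.util.Locale;

// Hypothetical, simplified model of certificate hostname matching.
class HostnameMatchSketch {
    /** Exact match, or a single left-most "*." wildcard covering one label. */
    static boolean matches(String certName, String host) {
        String c = certName.toLowerCase(Locale.ROOT);
        String h = host.toLowerCase(Locale.ROOT);
        if (c.startsWith("*.")) {
            int dot = h.indexOf('.');
            // The wildcard replaces exactly one label, so compare what follows the first dot.
            return dot > 0 && h.substring(dot).equals(c.substring(1));
        }
        return c.equals(h);
    }

    public static void main(String[] args) {
        // The certificate in this article uses CN=grpc.dgraph.lenovo.com.
        System.out.println(matches("grpc.dgraph.lenovo.com", "grpc.dgraph.lenovo.com")); // prints true
        System.out.println(matches("grpc.dgraph.lenovo.com", "dgraph.lenovo.com"));      // prints false
    }
}
```

Under this model, the certificate generated in this article is only valid for grpc.dgraph.lenovo.com itself, so connecting by IP address or through any other hostname fails.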

Commonly used commands:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout ca.key -out ca.crt -subj "/CN=grpc.dgraph.lenovo.com/O=grpc.dgraph.lenovo.com"

kubectl delete secret/grpc-secret -n dgraph

kubectl create secret tls grpc-secret --key ca.key --cert ca.crt -n dgraph

kubectl delete ingress/grpc-ingress -n dgraph

kubectl apply -f ../grpc-ingress.yaml