[Kubernetes] Pod Study Notes (14): Deployment Upgrade Strategies

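These are notes taken while studying the book 《Kubernetes权威指南》.

Study notes:

For large clusters, upgrading a service while keeping it available has always been difficult.

As the controller that manages Pod replicas, a Deployment lets you configure how Pods are released and updated, so that the service can stay available while its Pods are being upgraded.

If the Pods were created by a Deployment, you only need to modify the Deployment's Pod definition or image name at runtime and apply the change to the Deployment; the controller then carries out the update automatically. A Deployment supports two Pod update strategies: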

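- Recreate: set spec.strategy.type=Recreate. Under this strategy all running Pods are killed first, and the new ones are created afterwards.

- RollingUpdate: set spec.strategy.type=RollingUpdate. Pods are updated in a rolling fashion: old Pods are gradually scaled down while new Pods are gradually scaled up.

RollingUpdate is the default. Because it replaces Pods a few at a time, the service stays available during the update. Two parameters can be set for this strategy:

- spec.strategy.rollingUpdate.maxUnavailable: the maximum number of Pods that may be unavailable during the update, given as an absolute number or as a percentage of the desired replica count (default 25%)

- spec.strategy.rollingUpdate.maxSurge: the maximum number of Pods that may exist above the desired replica count during the update, given as an absolute number or as a percentage of the desired replica count (default 25%)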
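As a rough illustration of the two parameters above, the sketch below writes them out explicitly on the nginx-deployment used in these notes. The values only restate the defaults. The rounding in the comments reflects how Kubernetes resolves percentages (maxUnavailable rounds down, maxSurge rounds up), and the arithmetic assumes 3 replicas.

```yaml
# Sketch only: the same Deployment as in these notes, with the default
# rolling-update parameters spelled out instead of left implicit.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%   # rounded down: floor(3 * 0.25) = 0 Pods may be unavailable
      maxSurge: 25%         # rounded up:   ceil(3 * 0.25)  = 1 extra Pod may exist
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

With these values and 3 replicas, a rollout never runs more than 4 Pods in total and never drops below 3 available Pods.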

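Next, try each of the two update strategies in turn.

I. Using the RollingUpdate strategy

1. Define and create nginx-deployment

In the nginx-deployment manifest, set the nginx container version to 1.7.9 and the replica count to 3.

When spec.strategy.type is not specified, the update strategy defaults to RollingUpdate.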

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

Create the Deployment and check the result.

The nginx-deployment manifest produced one RS with 3 Pod replicas under it.

# kubectl create -f update-deployment-test.yaml

deployment.apps/nginx-deployment created

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-7cccdd79bf 3 3 3 32s

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-7cccdd79bf-9tn66 1/1 Running 0 40s

nginx-deployment-7cccdd79bf-jq5pw 1/1 Running 0 41s

nginx-deployment-7cccdd79bf-sn7mn 1/1 Running 0 40s

2、更新nginx-deployment下属Pod的镜像版本

书上提供了两种可用的运行时Deployment的更新方法

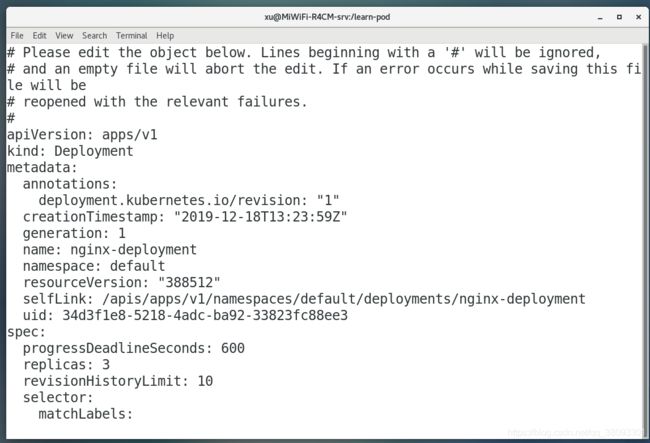

①kubectl edit deployment

使用该命令将调出该Deployment的配置文件编辑页面,修改后保存该文件就能够使控制器响应进行对应的修改

可以看到,kubectl edit不支持对Deployment的spec域之外的基本属性进行修改

包括 apiVersion/kind/name

# kubectl edit deployment nginx-deployment

A copy of your changes has been stored to "/tmp/kubectl-edit-x15dl.yaml"

error: At least one of apiVersion, kind and name was changed

② Modify with the kubectl set command

Use the -h flag to see the main operations of this command.

# kubectl set -h

Configure application resources

These commands help you make changes to existing application resources.

Available Commands: # the following can be modified

env Update environment variables on a pod template

image Update image of a pod template

resources Update resource requests/limits on objects with pod templates

selector Set the selector on a resource

serviceaccount Update ServiceAccount of a resource

subject Update User, Group or ServiceAccount in a RoleBinding/ClusterRoleBinding

Usage: # command format

kubectl set SUBCOMMAND [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

Use kubectl set image to change the nginx container version used by nginx-deployment.

# kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1

deployment.apps/nginx-deployment image updated
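For comparison, the same change can be expressed declaratively. The fragment below is a sketch of the only part of update-deployment-test.yaml that would differ: the image tag inside the Pod template. Editing the file this way and re-applying it (for example with kubectl apply -f update-deployment-test.yaml) should trigger the same kind of rollout, since any change to spec.template starts a new one.

```yaml
# Fragment of the Deployment manifest: only the image tag changes.
spec:
  template:
    spec:
      containers:
      - name: nginx          # the container name on the left of nginx=nginx:1.9.1
        image: nginx:1.9.1   # previously nginx:1.7.9
```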

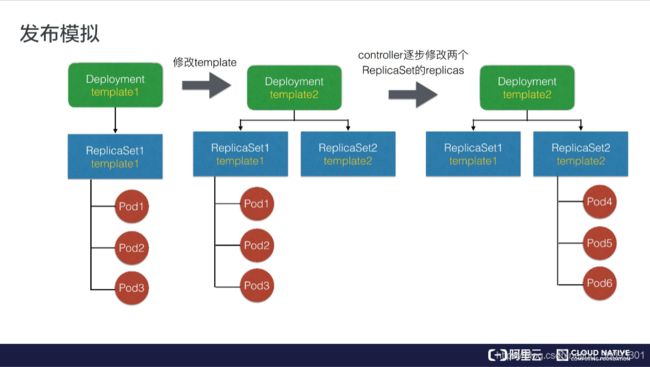

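Running kubectl get rs several times shows the rolling upgrade clearly.

A new RS is created; the replica count of the old RS is reduced step by step while the replica count of the new RS is increased.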

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-754b687b4c 1 1 0 17s

nginx-deployment-7cccdd79bf 3 3 3 8m31s

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-754b687b4c 3 3 2 33s

nginx-deployment-7cccdd79bf 1 1 1 8m47s

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-754b687b4c 3 3 3 37s

nginx-deployment-7cccdd79bf 0 0 0 8m51s

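The cloud-native course from Alibaba Cloud University also provides a diagram that simulates a rolling upgrade.

Finally, list the current Pods: the old Pods have been deleted, and all Pods are now the new version created by the new RS.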

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-754b687b4c-jwmhd 1/1 Running 0 36s

nginx-deployment-754b687b4c-skf7b 1/1 Running 0 38s

nginx-deployment-754b687b4c-tw7nm 1/1 Running 0 68s

The Deployment's Events, shown by kubectl describe deployment nginx-deployment, record the whole rolling upgrade.

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 9m54s deployment-controller Scaled up replica set nginx-deployment-7cccdd79bf to 3

Normal ScalingReplicaSet 100s deployment-controller Scaled up replica set nginx-deployment-754b687b4c to 1

Normal ScalingReplicaSet 70s deployment-controller Scaled down replica set nginx-deployment-7cccdd79bf to 2

Normal ScalingReplicaSet 70s deployment-controller Scaled up replica set nginx-deployment-754b687b4c to 2

Normal ScalingReplicaSet 68s deployment-controller Scaled down replica set nginx-deployment-7cccdd79bf to 1

Normal ScalingReplicaSet 68s deployment-controller Scaled up replica set nginx-deployment-754b687b4c to 3

Normal ScalingReplicaSet 66s deployment-controller Scaled down replica set nginx-deployment-7cccdd79bf to 0

II. Using the Recreate strategy

Modify the earlier Deployment definition: add a strategy field and set the update strategy to Recreate.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  ......
  strategy:
    type: Recreate

Create nginx-deployment again.

As before, use kubectl set image to change the container version.

# kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1

deployment.apps/nginx-deployment image updated

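Watch the RS list to see what changes.

Under the Recreate strategy, the Deployment controller first scales the old RS down to 0 Pod replicas, and only then does the new RS create the new Pod replicas.

With this strategy the service is very likely to be temporarily unavailable.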

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-7cccdd79bf 0 0 0 54s

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-754b687b4c 3 3 3 4s

nginx-deployment-7cccdd79bf 0 0 0 58s

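III. What happens when the label selector is changed at runtime

Both kubectl edit and kubectl set offer a way to modify the label selector.

Note that the selector of a Deployment (or of any other Pod replica controller) must match the labels of the Pods it is configured with. When you add, remove or update the label selector at runtime, the labels in the Pod template must be changed accordingly; otherwise the update to the Deployment fails.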
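The matching rule above can be sketched with the fragment below. It assumes the same app: nginx label used throughout these notes; the tier label is hypothetical and only there to show that the Pod template may carry additional labels, as long as every key/value pair in the selector is present.

```yaml
# Fragment of a Deployment spec: selector and Pod template labels.
spec:
  selector:
    matchLabels:
      app: nginx        # every pair listed here must also appear in the template labels
  template:
    metadata:
      labels:
        app: nginx      # satisfies the selector
        tier: frontend  # hypothetical extra label; not part of the selector
```

If app: nginx were missing from the template labels, or had a different value, the API server would reject the object with the "selector does not match template labels" validation error.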

1. Create nginx-deployment from the configuration file in Section I

# kubectl create -f update-deployment-test.yaml

deployment.apps/nginx-deployment created

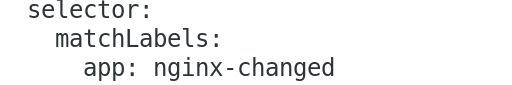

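2. Use kubectl edit to modify the Deployment's label selector

Change the value of the existing label.

Because the modified Deployment selector no longer matches the labels of its Pod template, the update fails with a validation error.

The error also shows that the selector cannot be modified at runtime: in apps/v1, spec.selector is immutable once the Deployment has been created.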

# deployments.apps "nginx-deployment" was not valid:

# * spec.template.metadata.labels: Invalid value: map[string]string{"app":"nginx"}: `selector` does not match template `labels`

# * spec.selector: Invalid value: v1.LabelSelector{MatchLabels:map[string]string{"app":"nginx-changed"}, MatchExpressions:[]v1.LabelSelectorRequirement(nil)}: field is immutable

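3. How selector operations affect the existing Pods

In API versions where the selector could still be changed (before apps/v1), adding to or updating the label selector creates a new RS and Pod replicas that correspond to the new selector. The original RS and Pods are not affected and are not deleted automatically; the Deployment loses control over them (that is, the change is not backward compatible). Removing a key from the selector behaves differently: no new RS is created, and the existing RS and Pods stay under the Deployment's control.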

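IV. Rolling upgrades for Replication Controllers

For rolling upgrades of an RC, K8s provided the kubectl rolling-update command.

This command creates a new RC and, again replacing Pods a few at a time, gradually substitutes it for the old RC to complete the rolling upgrade.

Note that the command requires the new RC to carry a new Label; when updating from a configuration file, the new RC also needs a new name.

Rolling back an RC is done with kubectl rolling-update --rollback.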
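To make the new-name and new-Label requirement concrete, here is a minimal sketch of what a replacement RC manifest might look like. The name nginx-rc-v2 and the version label are hypothetical and chosen only for illustration; what matters is that the name and at least one selector label differ from those of the old RC.

```yaml
# Hypothetical replacement RC for a rolling update.
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-rc-v2          # hypothetical; must differ from the old RC's name
spec:
  replicas: 3
  selector:
    app: nginx
    version: v2              # hypothetical label that differs from the old RC's selector
  template:
    metadata:
      labels:
        app: nginx
        version: v2          # template labels must match the selector
    spec:
      containers:
      - name: nginx
        image: nginx:1.9.1
        ports:
        - containerPort: 80
```

On kubectl versions that still include the command, such a file would be passed as kubectl rolling-update followed by the old RC's name and -f with the new manifest.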

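As can be seen, RC rolling upgrades lack features that a Deployment offers during application version upgrades, such as a revision history and fine-grained control over the number of old and new replicas.

As Kubernetes has evolved, the RC has been superseded by RS and Deployment.

It is not explored further here.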