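Kubernetes Storage -- Volume Management (3): the StatefulSet Controller and Deploying a MySQL Master-Slave Cluster with StatefulSet

The StatefulSet Controller

A StatefulSet uses a Headless Service to maintain the topology state of its Pods.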

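- StatefulSet abstracts application state into two kinds:

  - Topology state: application instances must start in a defined order, and a recreated Pod must keep the same network identity as the Pod it replaces.

  - Storage state: each instance of the application is bound to its own storage data.

- StatefulSet numbers all of its Pods. The naming rule is $(statefulset name)-$(ordinal), with ordinals starting from 0.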
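The naming rule can be sketched in a few lines of shell. This is only an illustration: `statefulset_pod_names` is a hypothetical helper, not a kubectl feature, and it simply prints the names a StatefulSet with the given name and replica count would use.

```bash
# Hypothetical helper: print the Pod names for a StatefulSet.
# $1 = StatefulSet name, $2 = replica count.
statefulset_pod_names() (
  name=$1
  replicas=$2
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${name}-${i}"      # $(statefulset name)-$(ordinal)
    i=$((i + 1))
  done
)

statefulset_pod_names web 3
```

For a StatefulSet named web with three replicas this prints web-0, web-1 and web-2, one per line.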

[root@server2 vol]# mkdir nginx   # create a working directory

[root@server2 vol]# cd nginx/

Create a headless service:

[root@server2 nginx]# vim service.yml

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None    # headless: no cluster IP is allocated
      selector:
        app: nginx       # label selector

Create the StatefulSet controller (statefulset.yml):

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web          # note that the StatefulSet is named web
    spec:
      serviceName: "nginx"
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web created

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-576d464467-9qs9h 1/1 Running 0 132m

web-0 1/1 Running 0 56s

web-1 1/1 Running 0 52s

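The Pod names are the StatefulSet name web plus an ordinal, and the AGE column shows that they were created in order.

To keep this experiment uncluttered, we can move the NFS dynamic provisioner into a separate namespace: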

[root@server2 nginx]# kubectl create namespace nfs-client-provisioner

namespace/nfs-client-provisioner created

[root@server2 nfs-client]# vim rbac.yml

[root@server2 nfs-client]# vim deployment.yml

:%s/default/nfs-client-provisioner/g    (run inside vim in both files to replace the namespace everywhere)

[root@server2 nfs-client]# kubectl apply -f . -n nfs-client-provisioner

[root@server2 nfs-client]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 8m48s

web-1 1/1 Running 0 8m44s   # only the StatefulSet Pods remain

[root@server2 nginx]# kubectl describe svc nginx

Name: nginx

Namespace: default

Labels: app=nginx

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP: None

Port: web 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.141.234:80,10.244.22.31:80

Session Affinity: None

Events: <none>

With dig we can resolve the service name directly to the IPs of our two Pods:

[root@server2 nginx]# dig nginx.default.svc.cluster.local @10.244.179.75   # the address after @ is the cluster DNS server

;; ANSWER SECTION:

nginx.default.svc.cluster.local. 30 IN A 10.244.22.31

nginx.default.svc.cluster.local. 30 IN A 10.244.141.234

[root@server2 nginx]# dig web-0.nginx.default.svc.cluster.local @10.244.179.75   # a single Pod can be resolved directly as well

;; ANSWER SECTION:

web-0.nginx.default.svc.cluster.local. 30 IN A 10.244.141.234

So we can reach the Pods by domain name directly; this is what the headless service provides.
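The names resolved above follow a fixed pattern, which the following sketch makes explicit. `pod_fqdn` is a hypothetical helper; the `default` namespace and the `cluster.local` domain are taken from the dig output above and are assumptions for any other cluster.

```bash
# Hypothetical helper: build the DNS name of a StatefulSet Pod behind a headless service.
# Usage: pod_fqdn POD SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
pod_fqdn() {
  echo "$1.$2.${3:-default}.svc.${4:-cluster.local}"
}

pod_fqdn web-0 nginx
pod_fqdn web-1 nginx
```

The first call prints web-0.nginx.default.svc.cluster.local, the same name that was passed to dig above.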

Scaling Pods:

Scale up to 5:

[root@server2 nginx]# vim statefulset.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 5

[root@server2 nginx]# kubectl apply -f statefulset.yml

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 9s

web-1 1/1 Running 0 5s

web-2 0/1 ContainerCreating 0 2s

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 37s

web-1 1/1 Running 0 33s

web-2 1/1 Running 0 30s

web-3 1/1 Running 0 27s

web-4 1/1 Running 0 25s

As the output shows, each Pod is created only after the one before it is up.

Scale down:

[root@server2 nginx]# vim statefulset.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 0        # zero replicas

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web configured

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 3m16s

web-1 1/1 Running 0 3m12s

web-2 1/1 Running 0 3m9s

web-3 1/1 Running 0 3m6s

web-4 0/1 Terminating 0 3m4s

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 3m19s

web-1 1/1 Running 0 3m15s

web-2 1/1 Running 0 3m12s

web-3 0/1 Terminating 0 3m9s

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 3m25s

web-1 1/1 Running 0 3m21s

web-2 1/1 Terminating 0 3m18s

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 3m31s

web-1 0/1 Terminating 0 3m27s

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 0/1 Terminating 0 3m38s

Pods are reclaimed one at a time, from the highest ordinal down to 0.
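The ordering observed in the two experiments above can be summarized as a small sketch. `scale_plan` is a hypothetical helper that only prints the order of operations; it does not talk to a cluster, and it mirrors the behavior seen in the transcripts rather than the controller's actual implementation.

```bash
# Hypothetical helper: print the order in which Pods are created or deleted
# when a StatefulSet goes from CURRENT to TARGET replicas.
# Usage: scale_plan NAME CURRENT TARGET
scale_plan() (
  name=$1
  current=$2
  target=$3
  i=$current
  while [ "$i" -lt "$target" ]; do     # scale up: lowest new ordinal first
    echo "create ${name}-${i}"
    i=$((i + 1))
  done
  i=$((current - 1))
  while [ "$i" -ge "$target" ]; do     # scale down: highest ordinal first
    echo "delete ${name}-${i}"
    i=$((i - 1))
  done
)

scale_plan web 2 5
scale_plan web 5 0
```

The first call lists web-2, web-3, web-4 in creation order; the second lists web-4 down to web-0 in deletion order.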

Adding PV storage:

- The PV and PVC design is what makes it possible for StatefulSet to manage storage state:

[root@server2 nginx]# vi statefulset.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:                          # everything from here down is new
            - name: www
              mountPath: /usr/share/nginx/html     # mount point
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          storageClassName: managed-nfs-storage    # storage class name; may be omitted because we set a default storage class
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web created

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 21s

web-1 1/1 Running 0 18s

web-2 1/1 Running 0 14s

[root@server2 nginx]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

www-web-0 Bound pvc-364e56c9-729a-4955-90b9-edc892e95474 1Gi RWO managed-nfs-storage 24s

www-web-1 Bound pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2 1Gi RWO managed-nfs-storage 21s

www-web-2 Bound pvc-63b16bd5-7554-4426-84f2-f29757189571 1Gi RWO managed-nfs-storage 17s

[root@server2 nginx]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-364e56c9-729a-4955-90b9-edc892e95474 1Gi RWO Delete Bound default/www-web-0 managed-nfs-storage 26s

pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2 1Gi RWO Delete Bound default/www-web-1 managed-nfs-storage 23s

pvc-63b16bd5-7554-4426-84f2-f29757189571 1Gi RWO Delete Bound default/www-web-2 managed-nfs-storage 18s

- StatefulSet creates a PVC with a matching ordinal for each Pod. Kubernetes can then bind a PV to that PVC through the Persistent Volume mechanism, so every Pod gets its own independent volume.

Check on the NFS host:
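The PVC names in the `kubectl get pvc` output above combine the volumeClaimTemplate name, the StatefulSet name and the ordinal. A minimal sketch of that pattern follows; `pvc_name` is a hypothetical helper introduced only for illustration.

```bash
# Hypothetical helper: name of the PVC that belongs to one StatefulSet Pod.
# Usage: pvc_name TEMPLATE_NAME STATEFULSET_NAME ORDINAL
pvc_name() {
  echo "$1-$2-$3"
}

for ordinal in 0 1 2; do
  pvc_name www web "$ordinal"
done
```

This prints www-web-0, www-web-1 and www-web-2, matching the claims listed above. Because the name depends only on the ordinal, a recreated web-1 finds the same www-web-1 claim again.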

[root@server1 nfsdata]# ll

total 0

drwxrwxrwx 2 root root 6 Jul 6 17:01 default-www-web-0-pvc-364e56c9-729a-4955-90b9-edc892e95474

drwxrwxrwx 2 root root 6 Jul 6 17:01 default-www-web-1-pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2

drwxrwxrwx 2 root root 6 Jul 6 17:01 default-www-web-2-pvc-63b16bd5-7554-4426-84f2-f29757189571

# write an index page for each Pod

[root@server1 nfsdata]# echo web-0 >default-www-web-0-pvc-364e56c9-729a-4955-90b9-edc892e95474/index.html

[root@server1 nfsdata]# echo web-1 > default-www-web-1-pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2/index.html

[root@server1 nfsdata]# echo web-2 > default-www-web-2-pvc-63b16bd5-7554-4426-84f2-f29757189571/index.html

We can add the Kubernetes DNS service address to the resolver configuration so that these names are resolved for us automatically:

[root@server2 nginx]# vim /etc/resolv.conf

nameserver 10.244.179.75

nameserver 114.114.114.114

[root@server2 nginx]# curl web-1.nginx.default.svc.cluster.local

web-1

[root@server2 nginx]# curl web-0.nginx.default.svc.cluster.local

web-0   # a Pod can be addressed by name like this

[root@server2 nginx]# curl nginx.default.svc.cluster.local

web-0   # the Pod name can also be left out

[root@server2 nginx]# curl nginx.default.svc.cluster.local

web-2

[root@server2 nginx]# curl nginx.default.svc.cluster.local

web-1

[root@server2 nginx]# curl nginx.default.svc.cluster.local

web-2

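Requests to the service name are spread across the Pods, which gives simple load balancing.

- When a Pod is deleted and recreated, its network identity does not change. The topology state is pinned down by the "name + ordinal" scheme, and each Pod gets a fixed, unique access point: its own DNS record.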

[root@server2 nginx]# vim statefulset.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 0        # change the replica count to 0

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web configured

[root@server2 nginx]# kubectl get pod

No resources found in default namespace.   # no Pods are left

[root@server2 nginx]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

www-web-0 Bound pvc-364e56c9-729a-4955-90b9-edc892e95474 1Gi RWO managed-nfs-storage 19m

www-web-1 Bound pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2 1Gi RWO managed-nfs-storage 19m

www-web-2 Bound pvc-63b16bd5-7554-4426-84f2-f29757189571 1Gi RWO managed-nfs-storage 19m

The PVCs and PVs, however, still exist:

[root@server2 nginx]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-364e56c9-729a-4955-90b9-edc892e95474 1Gi RWO Delete Bound default/www-web-0 managed-nfs-storage 20m

pvc-4b02cc74-ea4f-4673-8e6f-dd2660b05aa2 1Gi RWO Delete Bound default/www-web-1 managed-nfs-storage 20m

pvc-63b16bd5-7554-4426-84f2-f29757189571 1Gi RWO Delete Bound default/www-web-2 managed-nfs-storage 20m

[root@server2 nginx]# vim statefulset.yml

    spec:
      serviceName: "nginx"
      replicas: 3        # back to 3

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web configured

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 13s

web-1 1/1 Running 0 10s

web-2 1/1 Running 0 7s   # the Pod names are unchanged

[root@server2 nginx]# curl web-0.nginx.default.svc.cluster.local

web-0

[root@server2 nginx]# curl web-1.nginx.default.svc.cluster.local

web-1

[root@server2 nginx]# curl web-2.nginx.default.svc.cluster.local

web-2

The data inside is unchanged as well.

Deploying a MySQL Master-Slave Cluster with StatefulSet

Reference: https://kubernetes.io/zh/docs/tasks/run-application/run-replicated-stateful-application/

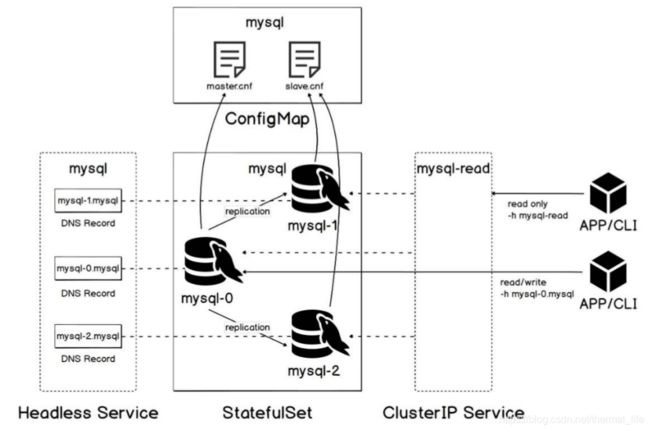

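- First, a ConfigMap (cm) separates the configuration files of the master and the slaves.

- The StatefulSet controller starts the service: ordinal 0 is the master, and every later ordinal is a slave.

- The headless service gives each node a network identity. Through it we reach the master for writes (only the master accepts writes).

- The ClusterIP service provides a VIP for reaching the nodes for reads.

Create the ConfigMap from a manifest: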

[root@server2 mysql]# vim cm.yml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql
      labels:
        app: mysql
    data:
      master.cnf: |
        # Apply this config only on the master.
        [mysqld]
        log-bin
      slave.cnf: |
        # Apply this config only on slaves.
        [mysqld]
        super-read-only

[root@server2 mysql]# kubectl apply -f cm.yml

configmap/mysql created

[root@server2 mysql]# kubectl get cm

NAME DATA AGE

mysql 2 2s

Create the Services that separate reads from writes:

[root@server2 mysql]# vim svc.yml

    # Headless service for stable DNS entries of StatefulSet members.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306
      clusterIP: None    # headless service
      selector:
        app: mysql
    ---
    # Client service for connecting to any MySQL instance for reads.
    # For writes, you must instead connect to the master: mysql-0.mysql.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-read
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306       # no clusterIP given, so a ClusterIP is allocated by default
      selector:
        app: mysql

[root@server2 mysql]# kubectl apply -f svc.yml

service/mysql created

service/mysql-read created

[root@server2 mysql]# kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18d

service/mysql ClusterIP None <none> 3306/TCP 4s

service/mysql-read ClusterIP 10.105.219.33 <none> 3306/TCP 4s
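With both Services in place, a client has to pick its endpoint by the kind of statement it sends. The sketch below shows that choice; `mysql_endpoint` is a hypothetical helper, and the short names assume the client runs in the same namespace as the Services.

```bash
# Hypothetical helper: choose the MySQL endpoint for a given kind of access.
# Writes must go to the master (ordinal 0) through the headless service;
# reads may go to any instance through the mysql-read ClusterIP service.
mysql_endpoint() {
  case $1 in
    write) echo "mysql-0.mysql" ;;
    read)  echo "mysql-read" ;;
    *)     echo "usage: mysql_endpoint read|write" >&2; return 1 ;;
  esac
}

mysql_endpoint write
mysql_endpoint read
```

A client would then connect with something like `mysql -h "$(mysql_endpoint read)"` for queries and use the write endpoint for everything that modifies data.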

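Create the StatefulSet controller to deploy MySQL master-slave replication

It needs the mysql:5.7 and xtrabackup:1.0 images; we download them and push them to our Harbor registry.

To make this easier to follow, we start with a single replica, the master.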

[root@server2 mysql]# vim statefulsrt.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql
    spec:
      selector:
        matchLabels:
          app: mysql                  # label selector
      serviceName: mysql
      replicas: 1                     # only the master for now
      template:
        metadata:
          labels:
            app: mysql
        spec:
          initContainers:             # init containers run before the app containers and prepare data for them
          - name: init-mysql
            image: mysql:5.7
            command:
            - bash                    # run a shell script
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1   # read the hostname; exit if it has no ordinal
              ordinal=${BASH_REMATCH[1]}                 # the Pod ordinal
              echo [mysqld] > /mnt/conf.d/server-id.cnf  # create the file
              # Add an offset to avoid the reserved server-id=0 value
              # (a server with id 0 does not allow any slave to replicate from it).
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then              # is the ordinal 0?
                cp /mnt/config-map/master.cnf /mnt/conf.d/   # yes: copy the master config, which comes from the ConfigMap
              else
                cp /mnt/config-map/slave.cnf /mnt/conf.d/    # no: copy the slave config
              fi
            volumeMounts:
            - name: conf              # the emptyDir volume defined at the bottom of this manifest
              mountPath: /mnt/conf.d
            - name: config-map        # the ConfigMap that holds the config files
              mountPath: /mnt/config-map
          containers:
          - name: mysql
            image: mysql:5.7
            env:
            - name: MYSQL_ALLOW_EMPTY_PASSWORD
              value: "1"
            ports:
            - name: mysql
              containerPort: 3306
            volumeMounts:
            - name: data              # the PVC mount
              mountPath: /var/lib/mysql
              subPath: mysql          # data is stored in a mysql subdirectory of the volume
            - name: conf              # after init, the conf volume is mounted at /etc/mysql/conf.d and already holds the generated files
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 300m
                memory: 1Gi
            livenessProbe:            # liveness probe
              exec:
                command: ["mysqladmin", "ping"]
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
            readinessProbe:           # readiness probe
              exec:
                # Check we can execute queries over TCP (skip-networking is off).
                command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
              initialDelaySeconds: 5
              periodSeconds: 2
              timeoutSeconds: 1
          volumes:                    # volumes
          - name: conf
            emptyDir: {}
          - name: config-map
            configMap:
              name: mysql
      volumeClaimTemplates:           # PVC template
      - metadata:
          name: data
        spec:
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 10Gi

No storage class is specified, so the default one is used.

[root@server2 mysql]# kubectl apply -f statefulsrt.yml

statefulset.apps/mysql created

[root@server2 mysql]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

mysql-0 0/1 Init:0/1 0 5s

mysql-0 0/1 PodInitializing 0 25s   # initializing

mysql-0 0/1 Running 0 26s   # running, liveness check in progress

mysql-0 1/1 Running 0 85s   # readiness check passed

[root@server2 mysql]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-mysql-0 Bound pvc-e5c41a78-ec82-4fa0-aa84-c96cde5798ce 10Gi RWO managed-nfs-storage 2m18s

A PVC was created; it is mounted at /var/lib/mysql inside the container.

[root@server2 mysql]# kubectl describe pod mysql-0

/etc/mysql/conf.d from conf (rw)

/var/lib/mysql from data (rw,path="mysql")

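During initialization we placed master.cnf and server-id.cnf in /mnt/conf.d. This data is shared between containers: the volume exists for as long as the Pod is not deleted, so the regular containers can see what the init container wrote.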

[root@server2 mysql]# kubectl logs mysql-0 -c init-mysql

++ hostname

+ [[ mysql-0 =~ -([0-9]+)$ ]]

+ ordinal=0

+ echo '[mysqld]'

+ echo server-id=100

+ [[ 0 -eq 0 ]]

+ cp /mnt/config-map/master.cnf /mnt/conf.d/

[root@server2 mysql]# kubectl exec mysql-0 -c mysql -- ls /etc/mysql/conf.d

master.cnf

server-id.cnf

[root@server2 mysql]# kubectl exec mysql-0 -c mysql -- cat /etc/mysql/conf.d/master.cnf

# Apply this config only on the master.

[mysqld]

log-bin   # this is the content defined in the ConfigMap

[root@server2 mysql]# kubectl exec mysql-0 -c mysql -- cat /etc/mysql/conf.d/server-id.cnf

[mysqld]

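server-id=100   # this was generated by the init container

We can see that initialization produced two files, server-id.cnf and master.cnf. Once initialization finished, the mysql container mounted the conf volume at /etc/mysql/conf.d/, so both files are shared with it.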
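The decisions made by the init-mysql script can be tried outside a cluster with the sketch below. `init_plan` is a hypothetical helper that takes the hostname as an argument and only prints what the init step would do. It extracts the ordinal with plain parameter expansion instead of the `[[ =~ ]]` regex used in the manifest, so it also runs in a POSIX shell.

```bash
# Hypothetical helper: show the server-id and config file the init step
# would choose for a given Pod hostname.
init_plan() (
  host=$1
  ordinal=${host##*-}                  # text after the last "-"
  case $ordinal in
    ''|*[!0-9]*)                       # empty or not purely numeric
      echo "no ordinal in hostname: $host" >&2
      exit 1
      ;;
  esac
  if [ "$ordinal" -eq 0 ]; then
    cnf=master.cnf                     # ordinal 0 is the master
  else
    cnf=slave.cnf                      # every other ordinal is a slave
  fi
  # The offset of 100 avoids the reserved server-id=0 value.
  echo "server-id=$((100 + ordinal)) config=$cnf"
)

init_plan mysql-0
init_plan mysql-2
```

For mysql-0 this reports server-id=100 with master.cnf, which agrees with the files found in /etc/mysql/conf.d above; for mysql-2 it reports server-id=102 with slave.cnf.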

Access:

[root@server2 mysql]# yum install -y mysql   # install a client

[root@server2 mysql]# cat /etc/resolv.conf

nameserver 10.244.179.75   # the Kubernetes DNS server

nameserver 114.114.114.114

[root@server2 mysql]# mysql -h mysql-0.mysql.default.svc.cluster.local

This connects to the database remotely through the headless service.

MySQL [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

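The master is now configured.

Next we add the slave nodes:

Apart from the configuration file, the main problem for a slave is how to copy data from the master. An init container cannot serve the data, because it does not run alongside the regular containers: it exits as soon as it has finished. We therefore run an extra regular container in the Pod.

So we start an xtrabackup container that opens port 3307 and is dedicated to data transfer. Other Pods connect to this port from an init container to copy the data in /var/lib/mysql.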

[root@server2 mysql]# vim statefulsrt.yml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql
    spec:
      selector:
        matchLabels:
          app: mysql
      serviceName: mysql
      replicas: 2                     # two replicas: one master and one slave
      template:
        metadata:
          labels:
            app: mysql
        spec:
          initContainers:
          - name: init-mysql
            image: mysql:5.7
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              echo [mysqld] > /mnt/conf.d/server-id.cnf
              # Add an offset to avoid reserved server-id=0 value.
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then
                cp /mnt/config-map/master.cnf /mnt/conf.d/
              else
                cp /mnt/config-map/slave.cnf /mnt/conf.d/
              fi
            volumeMounts:
            - name: conf
              mountPath: /mnt/conf.d
            - name: config-map
              mountPath: /mnt/config-map
          - name: clone-mysql         # clones the data; only slaves need this, the master does not
            image: xtrabackup:1.0
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Skip the clone if the data directory already exists
              # (here the master already has it, so it stops at this step).
              [[ -d /var/lib/mysql/mysql ]] && exit 0    # exists: exit successfully
              # Skip the clone on master (ordinal index 0).
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              [[ $ordinal -eq 0 ]] && exit 0
              # Receive the data from port 3307 of the previous peer:
              # mysql-1 clones from mysql-0, mysql-2 clones from mysql-1.
              # The name mysql-$(($ordinal-1)).mysql resolves through the headless service.
              ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
              # Prepare the backup.
              xtrabackup --prepare --target-dir=/var/lib/mysql
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          containers:
          - name: mysql
            image: mysql:5.7
            env:
            - name: MYSQL_ALLOW_EMPTY_PASSWORD
              value: "1"
            ports:
            - name: mysql
              containerPort: 3306
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 300m
                memory: 1Gi
            livenessProbe:
              exec:
                command: ["mysqladmin", "ping"]
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
            readinessProbe:
              exec:
                # Check we can execute queries over TCP (skip-networking is off).
                command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
              initialDelaySeconds: 5
              periodSeconds: 2
              timeoutSeconds: 1
          - name: xtrabackup          # one more regular container
            image: xtrabackup:1.0
            ports:
            - name: xtrabackup
              containerPort: 3307     # open port 3307
            command:
            - bash
            - "-c"
            - |
              set -ex
              cd /var/lib/mysql       # enter the MySQL data directory
              # Determine binlog position of cloned data, if any.

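              if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
                # Cloned from an existing slave: XtraBackup already generated a partial
                # "CHANGE MASTER TO" query, so write it to the file without the trailing semicolon.
                cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
                # Ignore xtrabackup_binlog_info in this case (it's useless).
                rm -f xtrabackup_slave_info xtrabackup_binlog_info
              elif [[ -f xtrabackup_binlog_info ]]; then
                # We're cloning directly from master. Parse binlog position.
                [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
                rm -f xtrabackup_binlog_info xtrabackup_slave_info
                # Write this information to change_master_to.sql.in.
                echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                      MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
              fi
              # Check if we need to complete a clone by starting replication.
              if [[ -f change_master_to.sql.in ]]; then
                echo "Waiting for mysqld to be ready (accepting connections)"
                until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
                echo "Initializing replication from clone position"
                mysql -h 127.0.0.1 \
                      -e "$(<change_master_to.sql.in), \
                              MASTER_HOST='mysql-0.mysql', \
                              MASTER_USER='root', \
                              MASTER_PASSWORD='', \
                              MASTER_CONNECT_RETRY=10; \
                            START SLAVE;" || exit 1
                # In case of container restart, attempt this at-most-once.
                mv change_master_to.sql.in change_master_to.sql.orig
              fi
              # Start a server to send backups when requested by peers.
              exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
                "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          volumes:
          - name: conf
            emptyDir: {}
          - name: config-map
            configMap:
              name: mysql
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 10Gi

In other words, the newly added init container clone-mysql copies the data of the previous node (the master skips this step), while the newly added xtrabackup container opens port 3307 and waits for the next node to connect.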

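- The master does not copy any data during initialization, because it is the master. After initialization the mysql container starts, then the xtrabackup container starts, opens port 3307, and waits for the first slave to connect.

- When the first slave initializes, its init container clone-mysql connects to port 3307 on the master (mysql-$(($ordinal-1)).mysql 3307) and copies the data. Then the mysql container starts, the xtrabackup container starts, opens port 3307, and waits for the second slave to connect.

- When the second slave initializes, its init container connects to port 3307 on the first slave and copies the data. Its xtrabackup container then starts, opens port 3307, and waits for the third slave to connect.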
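The clone chain described in these steps can be sketched as follows. `clone_source` is a hypothetical helper that prints the peer and port a Pod would clone from; like the manifest, it assumes the data listener is on port 3307, and it takes the headless service name as its second argument.

```bash
# Hypothetical helper: print "HOST PORT" of the peer a Pod clones from.
# Prints nothing for ordinal 0, because the master does not clone.
# Usage: clone_source POD_HOSTNAME SERVICE
clone_source() (
  host=$1
  service=$2
  name=${host%-*}                      # StatefulSet name, e.g. mysql
  ordinal=${host##*-}                  # Pod ordinal, e.g. 2
  [ "$ordinal" -eq 0 ] && exit 0       # master: nothing to clone
  echo "${name}-$((ordinal - 1)).${service} 3307"
)

for pod in mysql-0 mysql-1 mysql-2; do
  echo "$pod <- $(clone_source "$pod" mysql)"
done
```

The loop shows an empty source for mysql-0, mysql-0.mysql for mysql-1 and mysql-1.mysql for mysql-2. Cloning from the nearest lower ordinal rather than always from the master keeps the copy load off the master as the cluster grows.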

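First scale down to 0 (replicas: 0) and apply. This removes the master created earlier, which was started without the container that opens port 3307.

Then raise the replica count to 2 (replicas: 2) and apply again: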

[root@server2 mysql]# kubectl apply -f statefulsrt.yml

statefulset.apps/mysql configured

NAME READY STATUS RESTARTS AGE

mysql-0 0/2 PodInitializing 0 9s

mysql-0 1/2 Running 0 21s

mysql-0 2/2 Running 0 22s   # the master is running

mysql-1 0/2 Pending 0 0s

mysql-1 0/2 Pending 0 0s

mysql-1 0/2 Pending 0 2s

mysql-1 0/2 Init:0/1 0 2s

mysql-1 0/2 Init:0/1 0 6s

mysql-1 0/2 PodInitializing 0 27s

mysql-1 1/2 Running 0 55s

mysql-1 1/2 Error 0 82s

mysql-1 1/2 Running 1 83s

mysql-1 2/2 Running 1 87s   # slave 1 is running

[root@server2 mysql]# kubectl logs mysql-0 -c init-mysql

++ hostname

+ [[ mysql-0 =~ -([0-9]+)$ ]]

+ ordinal=0

+ echo '[mysqld]'

+ echo server-id=100

+ [[ 0 -eq 0 ]]

+ cp /mnt/config-map/master.cnf /mnt/conf.d/   # the init container copies the config file

[root@server2 mysql]# kubectl logs mysql-0 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]   # the directory already exists, so the script exits

+ exit 0

[root@server2 mysql]# kubectl logs mysql-1 -c init-mysql

++ hostname

+ [[ mysql-1 =~ -([0-9]+)$ ]]

+ ordinal=1

+ echo '[mysqld]'

+ echo server-id=101

+ [[ 1 -eq 0 ]]

+ cp /mnt/config-map/slave.cnf /mnt/conf.d/   # the slave copies the slave config file

[root@server2 mysql]# kubectl logs mysql-1 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]   # the directory does not exist, so continue and copy the data

++ hostname

+ [[ mysql-1 =~ -([0-9]+)$ ]]

+ ordinal=1

+ [[ 1 -eq 0 ]]

+ ncat --recv-only mysql-0.mysql 3307   # receive from port 3307 on the master

+ xbstream -x -C /var/lib/mysql

+ xtrabackup --prepare --target-dir=/var/lib/mysql   # prepare the copied data

[root@server1 nfsdata]# ll

total 0

drwxrwxrwx 3 root root 19 Jul 6 20:02 default-data-mysql-0-pvc-e5c41a78-ec82-4fa0-aa84-c96cde5798ce

drwxrwxrwx 3 root root 19 Jul 6 21:56 default-data-mysql-1-pvc-5ff29fa6-56e0-4021-9f4d-d989116bebff

[root@server1 nfsdata]#

The new volume shows up on the NFS host as well.

Now scale up to three (replicas: 3):

[root@server2 mysql]# kubectl logs mysql-2 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]   # the directory does not exist, so continue and copy the data

++ hostname

+ [[ mysql-2 =~ -([0-9]+)$ ]]

+ ordinal=2

+ [[ 2 -eq 0 ]]

+ ncat --recv-only mysql-1.mysql 3307   # receive from port 3307 on slave 1

+ xbstream -x -C /var/lib/mysql

+ xtrabackup --prepare --target-dir=/var/lib/mysql   # prepare the copied data

MySQL master-slave replication is resource intensive, so let us see what happens when we scale down (replicas: 2):

[root@server2 mysql]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-0 2/2 Running 0 3m53s

mysql-1 2/2 Running 0 4m38s

mysql-2 2/2 Terminating 0 6m3s   # reclaimed starting from the last one

Its PVC, however, still exists at this point:

[root@server1 nfsdata]# ls

default-data-mysql-0-pvc-e5c41a78-ec82-4fa0-aa84-c96cde5798ce

default-data-mysql-1-pvc-5ff29fa6-56e0-4021-9f4d-d989116bebff

default-data-mysql-2-pvc-ad0de3c0-508d-4b3a-9d57-852824fc2b4b

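When this Pod is created again, it mounts the original PVC automatically, so the clone step does not have to be repeated.

Testing master-slave replication: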

[root@server2 mysql]# mysql -h mysql-0.mysql.default.svc.cluster.local

Create a database on the master:

MySQL [(none)]> create database linux;

Query OK, 1 row affected (0.19 sec)

MySQL [(none)]> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| linux |

| mysql |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.01 sec)

[root@server2 mysql]# mysql -h mysql-1.mysql.default.svc.cluster.local

MySQL [(none)]> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| linux |   # replicated to the slave automatically

| mysql |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.23 sec)

[root@server2 mysql]# mysql -h mysql-2.mysql.default.svc.cluster.local

MySQL [(none)]> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| linux |

| mysql |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.08 sec)

MySQL [(none)]> create database woaini;

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

The slaves, however, cannot be written to, because they are read-only.