[Spark] Reading Data from Different Basic Data Sources with Spark Streaming

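Table of Contents

- Basic data sources
- File data source
- Notes
- Steps
- 1. Create a Maven project and add the dependencies
- 2. Create a directory on HDFS and upload the test data
- 3. Write the Spark Streaming code
- 4. Run the code, then upload files to the HDFS directory
- 5. Console output
- Custom data source
- Steps
- 1. Send data to a given port with nc
- 2. Write the code
- RDD queue
- Steps
- 1. Write the code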

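Basic data sources

File data source

Notes

1. Spark Streaming does not monitor nested directories.

2. Files must enter the dataDirectory (the monitored directory) by being moved or renamed into it.

3. Once a file has been moved into the directory it must not be modified; even if it is modified, the changed data will not be read.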
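Notes 2 and 3 suggest writing a file completely somewhere else and only then moving it into the monitored directory. The following is a minimal sketch of that pattern on the local filesystem, used here only as a stand-in for HDFS; the object name `AtomicDrop`, the `drop` helper, and the directory names are hypothetical and not part of the original project. On HDFS the same idea would be to upload to a staging directory and then run `hdfs dfs -mv`.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path, StandardCopyOption}

object AtomicDrop {

  /** Writes `content` into `staging` first, then moves the finished file into `watched`. */
  def drop(staging: Path, watched: Path, name: String, content: String): Path = {
    val tmp = staging.resolve(name + ".tmp")
    Files.write(tmp, content.getBytes(StandardCharsets.UTF_8))
    // On the same filesystem the move is a rename, so the file appears in `watched` in one step
    Files.move(tmp, watched.resolve(name), StandardCopyOption.ATOMIC_MOVE)
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical directories created under a temporary root
    val root = Files.createTempDirectory("stream_demo")
    val staging = Files.createDirectory(root.resolve("staging"))
    val watched = Files.createDirectory(root.resolve("stream_data"))
    val target = drop(staging, watched, "wordcount.txt", "hello world\n")
    println(s"moved to $target")
  }
}
```

Because the file is complete before it becomes visible in the monitored directory, a reader of that directory never sees a half-written file.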

Steps

1. Create a Maven project and add the dependencies

<properties>
    <scala.version>2.11.8</scala.version>
    <spark.version>2.2.0</spark.version>
</properties>
<dependencies>
    <dependency>
        <groupId>org.scala-lang</groupId>
        <artifactId>scala-library</artifactId>
        <version>${scala.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming_2.11</artifactId>
        <version>2.2.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.7.5</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-hive_2.11</artifactId>
        <version>2.2.0</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.38</version>
    </dependency>
</dependencies>
<build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.0</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>UTF-8</encoding>
            </configuration>
        </plugin>
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.0</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                    <configuration>
                        <args>
                            <arg>-dependencyfile</arg>
                            <arg>${project.build.directory}/.scala_dependencies</arg>
                        </args>
                    </configuration>
                </execution>
            </executions>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>3.1.1</version>
            <executions>
                <execution>
                    <phase>package</phase>
                    <goals>
                        <goal>shade</goal>
                    </goals>
                    <configuration>
                        <filters>
                            <filter>
                                <artifact>*:*</artifact>
                                <excludes>
                                    <exclude>META-INF/*.SF</exclude>
                                    <exclude>META-INF/*.DSA</exclude>
                                    <exclude>META-INF/*.RSA</exclude>
                                </excludes>
                            </filter>
                        </filters>
                        <transformers>
                            <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                <mainClass></mainClass>
                            </transformer>
                        </transformers>
                    </configuration>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>

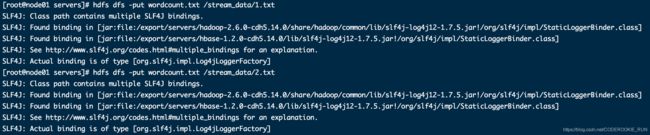

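2. Create a directory on HDFS and upload the test data

cd /export/servers/
vim wordcount.txt

hello world
abc test
hadoop hive

Create the directory on HDFS and upload the file

hdfs dfs -mkdir /stream_data
hdfs dfs -put wordcount.txt /stream_data

3. Write the Spark Streaming code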

三、开发SparkStreaming代码

package cn.itcast.sparkstreaming.demo1

import org.apache.spark.streaming.dstream.DStream
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.streaming.{Seconds, StreamingContext}

object getHdfsFiles {

  // Custom updateFunc
  /**
   * updateFunc takes two parameters
   *
   * @param newValues    the counts for a key that arrived in the current batch
   * @param runningCount the count accumulated for that key in earlier batches
   * @return the new accumulated count, wrapped in an Option
   *
   * Option is a special Scala class, the parent of Some and None, used mainly to avoid null values
   */
  def updateFunc(newValues: Seq[Int], runningCount: Option[Int]): Option[Int] = {
    val finalResult: Int = newValues.sum + runningCount.getOrElse(0)
    Option(finalResult)
  }

  def main(args: Array[String]): Unit = {
    // Build the SparkConf
    val sparkConf: SparkConf = new SparkConf().setAppName("getHdfsFiles_to_wordcount").setMaster("local[6]").set("spark.driver.host", "localhost")
    // Create the SparkContext
    val sparkContext = new SparkContext(sparkConf)
    // Set the log level
    sparkContext.setLogLevel("WARN")
    // Create the StreamingContext with a 5-second batch interval
    val streamingContext = new StreamingContext(sparkContext, Seconds(5))
    // Checkpoint directory where the accumulated state is saved
    streamingContext.checkpoint("./stream.check")
    // Read new files that appear in the HDFS directory
    val fileStream: DStream[String] = streamingContext.textFileStream("hdfs://node01:8020/stream_data")
    // Split each line into words and pair every word with 1
    val flatMapStream: DStream[String] = fileStream.flatMap(x => x.split(" "))
    val wordAndOne: DStream[(String, Int)] = flatMapStream.map(x => (x, 1))
    // reduceByKey does not add the counts from earlier batches, so use updateStateByKey,
    // which takes the custom updateFunc defined above
    val byKey: DStream[(String, Int)] = wordAndOne.updateStateByKey(updateFunc)
    // Print the result
    byKey.print()
    streamingContext.start()
    streamingContext.awaitTermination()
  }
}
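The way `updateFunc` carries counts from one batch to the next can be illustrated without a cluster. The sketch below reuses the same `updateFunc` body and applies it per key with plain Scala collections; the `applyBatch` helper and the `UpdateFuncDemo` object are hypothetical names introduced only for illustration and only approximate what `updateStateByKey` does. It is meant to show why, in the console output of step 5, the counts stay at 1 during empty batches and rise to 2 once the same words arrive again.

```scala
object UpdateFuncDemo {

  // Same signature and logic as updateFunc in the job above
  def updateFunc(newValues: Seq[Int], runningCount: Option[Int]): Option[Int] =
    Option(newValues.sum + runningCount.getOrElse(0))

  /** Applies one batch of words to the state, calling updateFunc once per known key. */
  def applyBatch(state: Map[String, Int], words: Seq[String]): Map[String, Int] = {
    // Group the batch by word and turn every occurrence into a 1
    val grouped: Map[String, Seq[Int]] =
      words.groupBy(identity).map { case (w, ws) => w -> ws.map(_ => 1) }
    // Keys already in the state are updated even when the batch has no values for them
    val keys = state.keySet ++ grouped.keySet
    keys.flatMap { k =>
      updateFunc(grouped.getOrElse(k, Seq.empty), state.get(k)).map(count => k -> count)
    }.toMap
  }

  def main(args: Array[String]): Unit = {
    val batch = "hello world abc test hadoop hive".split(" ").toSeq
    val afterFirst = applyBatch(Map.empty, batch)      // first upload
    val afterEmpty = applyBatch(afterFirst, Seq.empty) // a batch with no new file
    val afterSecond = applyBatch(afterEmpty, batch)    // same words uploaded again
    println(afterFirst("hello"))  // 1
    println(afterEmpty("hello"))  // 1
    println(afterSecond("hello")) // 2
  }
}
```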

4. Run the code, then upload files to the HDFS directory

5. Console output

-------------------------------------------

Time: 1586856345000 ms

-------------------------------------------

-------------------------------------------

Time: 1586856350000 ms

-------------------------------------------

-------------------------------------------

Time: 1586856355000 ms

-------------------------------------------

(abc,1)

(world,1)

(hadoop,1)

(hive,1)

(hello,1)

(test,1)

-------------------------------------------

Time: 1586856360000 ms

-------------------------------------------

(abc,1)

(world,1)

(hadoop,1)

(hive,1)

(hello,1)

(test,1)

-------------------------------------------

Time: 1586856365000 ms

-------------------------------------------

(abc,1)

(world,1)

(hadoop,1)

(hive,1)

(hello,1)

(test,1)

-------------------------------------------

Time: 1586856370000 ms

-------------------------------------------

(abc,2)

(world,2)

(hadoop,2)

(hive,2)

(hello,2)

(test,2)

-------------------------------------------

Time: 1586856375000 ms

-------------------------------------------

(abc,2)

(world,2)

(hadoop,2)

(hive,2)

(hello,2)

(test,2)

Custom data source

Steps

1. Send data to a given port with nc

nc -lk 9999

2. Write the code

import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.{SparkConf, SparkContext}

object CustomReceiver {

  /**
   * Custom updateFunc
   * @param newValues    the counts for a key that arrived in the current batch
   * @param runningCount the count accumulated for that key in earlier batches
   * @return the new accumulated count
   */
  def updateFunc(newValues: Seq[Int], runningCount: Option[Int]): Option[Int] = {
    val finalResult: Int = newValues.sum + runningCount.getOrElse(0)
    Option(finalResult)
  }

  def main(args: Array[String]): Unit = {
    // Build the SparkConf
    val sparkConf: SparkConf = new SparkConf().setAppName("CustomReceiver").setMaster("local[6]").set("spark.driver.host", "localhost")
    // Create the SparkContext
    val sparkContext = new SparkContext(sparkConf)
    sparkContext.setLogLevel("WARN")
    // Create the StreamingContext with a 5-second batch interval
    val streamingContext = new StreamingContext(sparkContext, Seconds(5))
    streamingContext.checkpoint("./stream_check")
    // Read data from the custom data source
    val stream: ReceiverInputDStream[String] = streamingContext.receiverStream(new MyReceiver("node01", 9999))
    // Split the data into words and count them
    val mapStream: DStream[String] = stream.flatMap(x => x.split(" "))
    val wordAndOne: DStream[(String, Int)] = mapStream.map((_, 1))
    val byKey: DStream[(String, Int)] = wordAndOne.updateStateByKey(updateFunc)
    // Print the result
    byKey.print()
    streamingContext.start()
    streamingContext.awaitTermination()
  }
}

import java.io.{BufferedReader, InputStream, InputStreamReader}
import java.net.Socket
import java.nio.charset.StandardCharsets

import org.apache.spark.storage.StorageLevel
import org.apache.spark.streaming.receiver.Receiver

class MyReceiver(host: String, port: Int) extends Receiver[String](StorageLevel.MEMORY_AND_DISK_2) {

  /**
   * Custom receive method: reads data from the socket and calls store to hand each line to Spark
   */
  private def receiverDatas(): Unit = {
    // Connect to the socket
    val socket = new Socket(host, port)
    // Get the socket's input stream
    val stream: InputStream = socket.getInputStream
    // Wrap the input stream in a BufferedReader so it can be read line by line
    val reader = new BufferedReader(new InputStreamReader(stream, StandardCharsets.UTF_8))
    // Keep reading while there is data and the receiver has not been stopped.
    // In Scala an assignment evaluates to Unit, so the line is read outside the loop condition.
    var line: String = reader.readLine()
    while (line != null && !isStopped()) {
      store(line)
      line = reader.readLine()
    }
    // Closing the reader also closes the underlying input stream
    reader.close()
    socket.close()
  }

  /**
   * Override onStart and onStop. onStart matters most: it is called when the receiver
   * starts, and again whenever the receiver is restarted
   */
  override def onStart(): Unit = {
    // Start the thread that receives data over the connection
    new Thread() {
      // Override run
      override def run(): Unit = {
        // Call receiverDatas to receive the socket data
        receiverDatas()
      }
    }.start()
  }

  // Called when the receiver is stopped
  override def onStop(): Unit = {
  }
}
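A note on the read loop: in Scala an assignment evaluates to `Unit`, so the Java idiom `while ((line = reader.readLine()) != null)` does not test the line that was read. The sketch below isolates a loop shape that stops at end of input, using a `StringReader` in place of the socket. The `readAll` helper, its `stopped` and `store` parameters, and the `ReadLoopDemo` object are hypothetical stand-ins for the receiver's `isStopped()` and `store` methods.

```scala
import java.io.{BufferedReader, StringReader}
import scala.collection.mutable.ArrayBuffer

object ReadLoopDemo {

  /** Reads lines until end of input or until `stopped` returns true, passing each line to `store`. */
  def readAll(reader: BufferedReader, stopped: () => Boolean, store: String => Unit): Unit = {
    // Read the first line before the loop, then read the next one at the end of each iteration
    var line = reader.readLine()
    while (!stopped() && line != null) {
      store(line)
      line = reader.readLine()
    }
  }

  def main(args: Array[String]): Unit = {
    val received = ArrayBuffer.empty[String]
    // A StringReader stands in for the socket input stream
    val reader = new BufferedReader(new StringReader("hello world\nabc test\n"))
    readAll(reader, () => false, line => received += line)
    reader.close()
    println(received.mkString(" | ")) // hello world | abc test
  }
}
```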

RDD queue

Steps

1. Write the code

package cn.itcast.sparkstreaming.demo3

import org.apache.spark.rdd.RDD
import org.apache.spark.streaming.dstream.{DStream, InputDStream}
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.streaming.{Seconds, StreamingContext}

import scala.collection.mutable

object QueneReceiver {

  def main(args: Array[String]): Unit = {
    // Build the SparkConf
    val sparkConf: SparkConf = new SparkConf().setMaster("local[6]").setAppName("queneReceiver").set("spark.driver.host", "localhost")
    // Create the SparkContext
    val sparkContext = new SparkContext(sparkConf)
    sparkContext.setLogLevel("WARN")
    // Create the StreamingContext with a 5-second batch interval
    val streamingContext = new StreamingContext(sparkContext, Seconds(5))
    // The queue of RDDs that feeds the stream.
    // SynchronizedQueue is deprecated since Scala 2.11 but still available in the 2.11.8 used here
    val queue = new mutable.SynchronizedQueue[RDD[Int]]
    // queueStream takes a parameter queue: Queue[RDD[T]]
    val inputStream: InputDStream[Int] = streamingContext.queueStream(queue)
    // Transform the DStream: double every element
    val mapStream: DStream[Int] = inputStream.map(x => x * 2)
    mapStream.print()
    streamingContext.start()
    // Push an RDD into the queue every 3 seconds
    for (_ <- 1 to 100) {
      queue += streamingContext.sparkContext.makeRDD(1 to 10)
      Thread.sleep(3000)
    }
    streamingContext.awaitTermination()
  }
}
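One consequence of the timing in this job: an RDD is pushed every 3 seconds, while `queueStream` with its default `oneAtATime = true` takes one RDD from the queue per 5-second batch, so the queue grows while the loop is running. The following is a rough arithmetic model of that backlog, not a measurement; the `backlog` function, its parameters, and the `QueueBacklogDemo` object are hypothetical, and the model assumes the first push happens at t = 0 and ignores scheduling jitter.

```scala
object QueueBacklogDemo {

  /**
   * Estimated number of RDDs still waiting in the queue after `seconds`,
   * given one push every `pushEvery` seconds (starting at t = 0, at most `total` pushes)
   * and one RDD consumed per batch of `batchEvery` seconds.
   */
  def backlog(seconds: Int, pushEvery: Int, batchEvery: Int, total: Int): Int = {
    val pushed = math.min(total, seconds / pushEvery + 1)
    // A batch can only consume an RDD that has already been pushed
    val consumed = math.min(pushed, seconds / batchEvery)
    pushed - consumed
  }

  def main(args: Array[String]): Unit = {
    // Values taken from the job above: push every 3 s, batch every 5 s, 100 RDDs in total
    println(backlog(30, 3, 5, 100))  // 5
    println(backlog(60, 3, 5, 100))  // 9
    println(backlog(500, 3, 5, 100)) // 0
  }
}
```

Under this model all 100 RDDs are pushed after roughly 300 seconds, but the stream needs about 500 seconds to print them all.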