Reading and Writing Elasticsearch with Hadoop MapReduce

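1. Background

I recently needed to investigate how Hadoop MapReduce (MR) interacts with Elasticsearch (ES), which naturally led me to the official Elasticsearch-Hadoop plugin. The official material, however, is not very detailed, especially on the implementation side. After stepping into a few pitfalls I wrote this summary; to a large extent it is a concrete code implementation of that official material.

The Elasticsearch-Hadoop Git repository is at: https://github.com/elastic/elasticsearch-hadoop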

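2. Elasticsearch-Hadoop Overview

Elasticsearch-Hadoop is a project that deeply integrates Hadoop with Elasticsearch, and it is maintained as a subproject by the ES team itself. By implementing input and output between Hadoop and ES, it lets Hadoop jobs read data from and write data to an ES cluster, taking full advantage of the parallel processing of Map-Reduce and making real-time search over Hadoop data possible.

The ES-Hadoop plugin supports Map-Reduce, Cascading, Hive, Pig, Spark, Storm, YARN and other components.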

3. Elasticsearch-Hadoop MR Implementation

环境准备

- 装好Hadoop

- 装好ElasticSearch

- 装好maven、eclipse

- 测试数据集下载:

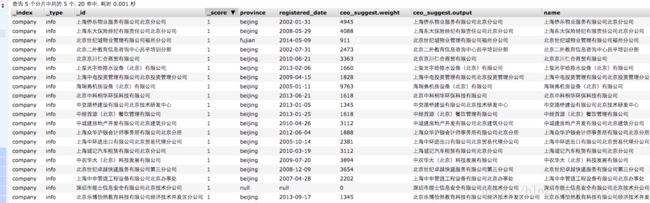

https://pan.baidu.com/s/1dE6mOhj - 批量导入测试数据集到ElasticSearch:

curl -XPOST 'localhost:9200/company/info/_bulk?pretty' --data-binary "@companys.json"Hadoop-ES Maven 依赖如下

<dependency>

<groupId>org.elasticsearchgroupId>

<artifactId>elasticsearch-hadoopartifactId>

<version>5.0.0version>

dependency>代码实现

The code below is what I used in practice; it implements the basic functionality.

MR: read all data from ES and write it to HDFS

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.elasticsearch.hadoop.mr.EsInputFormat;

public class ESWriteHdfsTest {

    // EsInputFormat hands each document to the mapper as (document ID, document body)
    public static class ESMap extends Mapper<Writable, Writable, NullWritable, Text> {

        private final Text docVal = new Text();

        @Override
        public void map(Writable key, Writable value, Context context)
                throws IOException, InterruptedException {
            // In this example we only want to export the data to HDFS
            docVal.set(value.toString());
            context.write(NullWritable.get(), docVal);
        }
    }

    public static void main(String[] args) throws Exception {
        long startTime = System.currentTimeMillis();

        Configuration conf = new Configuration();
        conf.set("es.nodes", "localhost:9200");
        conf.set("es.resource", "company/info");

        Job job = Job.getInstance(conf, "hadoop elasticsearch");
        // Lets Hadoop locate the job jar when the job is submitted to a cluster
        job.setJarByClass(ESWriteHdfsTest.class);

        // Set the custom Mapper class; this is a map-only job
        job.setMapperClass(ESMap.class);
        job.setNumReduceTasks(0);

        // Set the map output types
        job.setMapOutputKeyClass(NullWritable.class);
        job.setMapOutputValueClass(Text.class);

        // Set the input format
        job.setInputFormatClass(EsInputFormat.class);

        // Set the output path
        FileOutputFormat.setOutputPath(job, new Path("hdfs://localhost:9000/es_output_company_info"));

        // Run the MR job and print the elapsed time in milliseconds
        job.waitForCompletion(true);
        System.out.println(System.currentTimeMillis() - startTime);
    }
}

MR: query data from ES and write it to HDFS
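The job in this section narrows what is read from ES through the es.query setting. As far as I understand the ES-Hadoop configuration reference, the value can take three forms: a URI query that starts with ?, a query DSL body that starts with {, or the path of a resource file that contains one of the two. The sketch below is plain Java and not part of the connector; the class EsQueryForm and its classify method are hypothetical names used only to illustrate that rule of thumb.

```java
public class EsQueryForm {

    /** The three forms of es.query described in the ES-Hadoop configuration reference. */
    public enum Form { URI_QUERY, QUERY_DSL, EXTERNAL_RESOURCE }

    /** Guesses the form of an es.query value from its first non-blank character. */
    public static Form classify(String esQuery) {
        String q = esQuery.trim();
        if (q.startsWith("?")) {
            return Form.URI_QUERY;
        }
        if (q.startsWith("{")) {
            return Form.QUERY_DSL;
        }
        // Anything else is treated as a path to a file holding the query (assumption of this sketch)
        return Form.EXTERNAL_RESOURCE;
    }

    public static void main(String[] args) {
        // \u5317\u4eac is the two-character search term used in this article's query
        String uriQuery = "?q=name:\u5317\u4eac";
        // A query DSL body that should express roughly the same search
        String dslQuery = "{\"query\":{\"query_string\":{\"query\":\"name:\u5317\u4eac\"}}}";

        System.out.println(classify(uriQuery));              // URI_QUERY
        System.out.println(classify(dslQuery));              // QUERY_DSL
        System.out.println(classify("queries/beijing.json")); // EXTERNAL_RESOURCE
    }
}
```

The file name queries/beijing.json is made up for the example. Either of the first two strings could be passed to conf.set("es.query", ...) in the job that follows.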

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.elasticsearch.hadoop.mr.EsInputFormat;

public class ESQueryTest {

    public static class ESMap extends Mapper<Writable, Writable, Text, Text> {

        @Override
        public void map(Writable key, Writable value, Context context)
                throws IOException, InterruptedException {
            // Key is the document ID, value is the document body
            context.write(new Text(key.toString()), new Text(value.toString()));
        }
    }

    public static void main(String[] args) throws Exception {
        long startTime = System.currentTimeMillis();

        Configuration conf = new Configuration();
        conf.set("es.nodes", "localhost:9200");
        conf.set("es.resource", "company/info");
        // Ask the connector to return each document as a JSON string
        conf.set("es.output.json", "true");
        // Only read the documents that match this query
        conf.set("es.query", "?q=name:北京");

        Job job = Job.getInstance(conf, "hadoop elasticsearch");
        // Lets Hadoop locate the job jar when the job is submitted to a cluster
        job.setJarByClass(ESQueryTest.class);

        // Set the custom Mapper class; this is a map-only job
        job.setMapperClass(ESMap.class);
        job.setNumReduceTasks(0);

        // Set the map output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);

        // Set the input format
        job.setInputFormatClass(EsInputFormat.class);

        // Set the output path
        FileOutputFormat.setOutputPath(job, new Path("hdfs://localhost:9000/es_output"));

        // Run the MR job and print the elapsed time in milliseconds
        job.waitForCompletion(true);
        System.out.println(System.currentTimeMillis() - startTime);
    }
}

MR: read data from one ES index and write it to another index
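The job in this section reads from one index and writes to another, so it sets es.resource.read and es.resource.write instead of the single es.resource used in the earlier jobs. According to the ES-Hadoop configuration reference, each of the two falls back to es.resource when it is not set. The plain-Java sketch below only illustrates that fallback rule: EsResourceResolver is a hypothetical helper, not connector code, and a Map stands in for the Hadoop Configuration.

```java
import java.util.HashMap;
import java.util.Map;

public class EsResourceResolver {

    /** Resource used for reading: es.resource.read, else es.resource. */
    public static String readResource(Map<String, String> conf) {
        return conf.getOrDefault("es.resource.read", conf.get("es.resource"));
    }

    /** Resource used for writing: es.resource.write, else es.resource. */
    public static String writeResource(Map<String, String> conf) {
        return conf.getOrDefault("es.resource.write", conf.get("es.resource"));
    }

    public static void main(String[] args) {
        // Same values as the ES-to-ES job in this section
        Map<String, String> conf = new HashMap<>();
        conf.put("es.resource.read", "company/info");
        conf.put("es.resource.write", "company_new/info");

        System.out.println(readResource(conf));  // company/info
        System.out.println(writeResource(conf)); // company_new/info
    }
}
```

With only es.resource set, both methods return the same index/type, which matches how the read-only jobs earlier in the article are configured.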

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.elasticsearch.hadoop.mr.EsInputFormat;
import org.elasticsearch.hadoop.mr.EsOutputFormat;
import org.elasticsearch.hadoop.mr.LinkedMapWritable;

public class WriteES2ES {

    public static class ESMap extends Mapper<Text, LinkedMapWritable, Text, LinkedMapWritable> {

        @Override
        public void map(Text key, LinkedMapWritable value, Context context)
                throws IOException, InterruptedException {
            // Pass each document through unchanged
            context.write(key, value);
        }
    }

    public static class ESReduce extends Reducer<Text, LinkedMapWritable, Text, LinkedMapWritable> {

        @Override
        public void reduce(Text key, Iterable<LinkedMapWritable> values, Context context)
                throws IOException, InterruptedException {
            // Write every document to the target index
            for (LinkedMapWritable value : values) {
                context.write(key, value);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        long startTime = System.currentTimeMillis();

        Configuration conf = new Configuration();
        conf.set("es.nodes", "localhost:9200");
        // Source index/type to read from and target index/type to write to
        conf.set("es.resource.read", "company/info");
        conf.set("es.resource.write", "company_new/info");
        conf.set("es.query", "?q=name:北京");

        Job job = Job.getInstance(conf, "hadoop elasticsearch");
        // Lets Hadoop locate the job jar when the job is submitted to a cluster
        job.setJarByClass(WriteES2ES.class);

        // Set the custom Mapper and Reducer classes
        job.setMapperClass(ESMap.class);
        job.setReducerClass(ESReduce.class);

        // Set the output types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LinkedMapWritable.class);

        // Set the input and output formats
        job.setInputFormatClass(EsInputFormat.class);
        job.setOutputFormatClass(EsOutputFormat.class);

        // Run the MR job and print the elapsed time in milliseconds
        job.waitForCompletion(true);
        System.out.println(System.currentTimeMillis() - startTime);
    }
}

Import JSON data stored on HDFS into ES
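The job in this section passes TextInputFormat records straight to EsOutputFormat with es.input.json enabled, so every line of the HDFS input has to be one complete JSON document. The sketch below isolates the per-line preparation in plain Java so it can be tried without a cluster. JsonLinePrep and toJsonBytes are hypothetical names, and skipping blank lines is my own assumption: the mapper in the listing does not do it.

```java
import java.nio.charset.StandardCharsets;
import java.util.Optional;

public class JsonLinePrep {

    /**
     * Trims one input line and encodes it as UTF-8.
     * Returns an empty Optional for blank lines, which carry no document.
     */
    public static Optional<byte[]> toJsonBytes(String line) {
        String doc = line.trim();
        if (doc.isEmpty()) {
            return Optional.empty();
        }
        // An explicit charset avoids depending on the platform default encoding
        return Optional.of(doc.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        String[] lines = { "  {\"name\":\"demo\"}  ", "   " };
        for (String line : lines) {
            Optional<byte[]> bytes = toJsonBytes(line);
            if (bytes.isPresent()) {
                System.out.println("document of " + bytes.get().length + " bytes");
            } else {
                System.out.println("blank line skipped");
            }
        }
    }
}
```

Inside a mapper, the returned bytes would be wrapped in a BytesWritable before being written to the context, as the listing that follows does.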

import java.io.IOException;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.BytesWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.elasticsearch.hadoop.mr.EsOutputFormat;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class WriteJsonToES {

    public static class SomeMapper extends Mapper<Object, Text, NullWritable, BytesWritable> {

        private static final Logger LOG = LoggerFactory.getLogger(SomeMapper.class);

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each input line is expected to be one complete JSON document
            byte[] source = value.toString().trim().getBytes(StandardCharsets.UTF_8);
            BytesWritable jsonDoc = new BytesWritable(source);
            context.write(NullWritable.get(), jsonDoc);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Disable speculative execution so the same data is not written to ES twice
        conf.setBoolean("mapred.map.tasks.speculative.execution", false);
        conf.setBoolean("mapred.reduce.tasks.speculative.execution", false);
        conf.set("es.nodes", "localhost:9200");
        conf.set("es.resource", "index_1/test");
        // The input is already JSON, so the connector should send it as-is
        conf.set("es.input.json", "yes");

        Job job = Job.getInstance(conf, "hadoop es write test");
        // Lets Hadoop locate the job jar when the job is submitted to a cluster
        job.setJarByClass(WriteJsonToES.class);
        job.setMapperClass(SomeMapper.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(EsOutputFormat.class);
        job.setMapOutputKeyClass(NullWritable.class);
        job.setMapOutputValueClass(BytesWritable.class);

        // Set the input path
        FileInputFormat.setInputPaths(job, new Path("hdfs://localhost:9000/input_json"));

        job.waitForCompletion(true);
    }
}

4. References

Hadoop MR and Elasticsearch resources

http://www.cnblogs.com/kaisne/p/3930677.html

http://jingyan.baidu.com/article/7e440953386e0a2fc0e2efe0.html

http://bigbo.github.io/pages/2015/02/28/elasticsearch_hadoop/