Several key operations in machine learning

Freezing parameters so they are not trained

PyTorch

1. Setting it in the model

# Freeze the layers before conv3:
for layer in range(10):
    for p in self.vgg.features[layer].parameters():
        p.requires_grad = False

2. Setting it when declaring a variable

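import torch

# Method 1 (Variable has been deprecated since PyTorch 0.4; plain tensors are preferred)
from torch.autograd import Variable
x = Variable(torch.randn(5, 5), requires_grad=False)
print(x.numpy())

# Method 2
y = torch.ones(10, requires_grad=False)
print(y.numpy())

TensorFlow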

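1. Setting it in the model

import tensorflow as tf
import tensorflow.contrib.slim as slim  # TensorFlow 1.x only; contrib was removed in 2.0

# Convolutional layers
net = slim.repeat(self._image, 2, slim.conv2d, 64, [3, 3], trainable=False, scope='conv1')
# Fully connected layer
fc6 = slim.fully_connected(pool5_flat, 4096, scope='fc6', trainable=False)
# Dropout (has no trainable parameters; is_training switches it on or off)
fc6 = slim.dropout(fc6, keep_prob=0.5, is_training=True, scope='dropout6')

2. Setting it when declaring a variable

w = tf.Variable(1.0, name="w", trainable=False)

PaddlePaddle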

1. Setting it in the model

import paddle.fluid as fluid

# The initializer argument of fluid.ParamAttr may be None; the fc layer then
# generates the parameter values automatically with its default initializer.
w_param_attrs = fluid.ParamAttr(name="fc_weight",
                                learning_rate=1.0,  # multiplied by the global learning_rate and scheduler_factor
                                regularizer=fluid.regularizer.L2Decay(1.0),
                                trainable=True)  # whether the parameter is trainable; False freezes it
x = fluid.layers.data(name='X', shape=[1], dtype='float32')
y_predict = fluid.layers.fc(input=x, size=10, param_attr=w_param_attrs)

2. Setting it in the variable declaration

class paddle.fluid.ParamAttr(name=None, initializer=None, learning_rate=1.0, regularizer=None, trainable=True, do_model_average=False)
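
Returning to the PyTorch case from this section, the sketch below shows one way to check that freezing actually took effect. The small `features` and `head` modules are hypothetical stand-ins for a real backbone such as `self.vgg.features`, and handing only the trainable parameters to the optimizer is a common convention rather than something the original snippet does.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for a backbone such as self.vgg.features
features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3, padding=1),
    nn.ReLU(),
)
head = nn.Linear(8, 2)  # hypothetical classifier head

# Freeze layers 0 and 1; the ReLU at index 1 has no parameters
for layer in range(2):
    for p in features[layer].parameters():
        p.requires_grad = False

all_params = list(features.parameters()) + list(head.parameters())
trainable = [p for p in all_params if p.requires_grad]
num_frozen = sum(1 for p in all_params if not p.requires_grad)
num_trainable = len(trainable)

# Give the optimizer only the parameters that still require gradients
optimizer = torch.optim.SGD(trainable, lr=0.01, momentum=0.9)

# One forward/backward pass on random data
x = torch.randn(1, 3, 4, 4)
out = head(features(x).mean(dim=(2, 3)))
out.sum().backward()

frozen_grad = features[0].weight.grad     # no gradient is stored for a frozen parameter
trainable_grad = features[2].weight.grad  # gradient tensor for a trainable parameter
```

After the backward pass, a frozen parameter's `.grad` is still `None`, which is a quick way to confirm that the intended layers were excluded.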

Changing the learning rate during training

PyTorch

for param_group in optimizer.param_groups:
    param_group['lr'] *= scale

TensorFlow

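lr = tf.Variable(cfg.TRAIN.LEARNING_RATE, trainable=False)
self.optimizer = tf.train.MomentumOptimizer(lr, cfg.TRAIN.MOMENTUM)
# rate is the current learning rate as a Python value (assumed to start at cfg.TRAIN.LEARNING_RATE)
rate *= cfg.TRAIN.GAMMA
sess.run(tf.assign(lr, rate))

PaddlePaddle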

paddle.fluid.layers provides learning-rate schedulers; see https://www.paddlepaddle.org.cn/documentation/docs/zh/1.5/api_cn/layers_cn/learning_rate_scheduler_cn.html

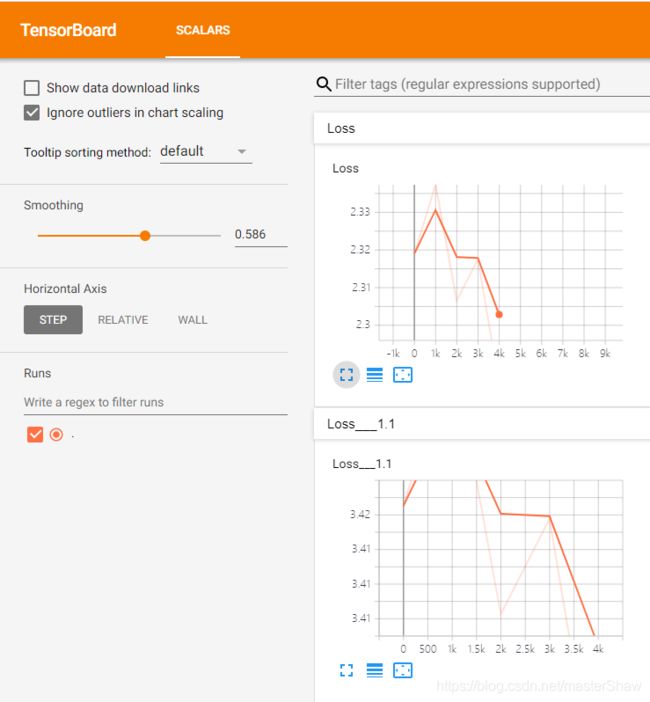
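
The PyTorch loop in this section only relies on `param_groups` being a list of dicts with an `lr` key, so the decay logic can be sketched without any framework. The helper name `scale_lr`, the decay factor `gamma`, and the interval `decay_every` are all hypothetical values chosen for illustration.

```python
def scale_lr(param_groups, scale):
    """Multiply the 'lr' entry of every parameter group by `scale`."""
    for group in param_groups:
        group["lr"] *= scale
    return [group["lr"] for group in param_groups]


# Stand-in for optimizer.param_groups: a list of dicts with an "lr" key
param_groups = [{"lr": 0.1}, {"lr": 0.01}]
gamma = 0.5      # hypothetical decay factor
decay_every = 2  # hypothetical: decay after every second epoch

history = []
for epoch in range(1, 5):
    if epoch % decay_every == 0:
        scale_lr(param_groups, gamma)
    # Record the learning rate of the first group after this epoch
    history.append(param_groups[0]["lr"])
```

With a real optimizer, the same helper could be called as `scale_lr(optimizer.param_groups, gamma)`, because the loop mutates the dicts in place.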

Using TensorBoard

Command

tensorboard --logdir=./ --port=8008

PyTorch

from tensorboardX import SummaryWriter

writer = SummaryWriter(logdir="./tensorboard")
for epoch_id in range(train_epoch):
    for batch_id in range(total_size):
        # many steps that compute the loss tensor are omitted here
        avg_loss = loss.mean()
        # global step: count batches across epochs so every point gets its own x position
        step = epoch_id * total_size + batch_id
        writer.add_scalar("Loss", avg_loss.item(), step)
        writer.add_scalar("Loss__1.1", avg_loss.item() + 1.1, step)
writer.close()

Result: the logged scalars appear as curves in TensorBoard. Graph, histogram, and other plot types are also available; see https://www.jianshu.com/p/46eb3004beca
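
The third argument of `add_scalar` is the global step, which sets the x position of each point. A minimal sketch of why the step is usually computed as `epoch * steps_per_epoch + batch` rather than as a product of the two indices; the helper name `global_step` and the small loop sizes are hypothetical.

```python
def global_step(epoch_id, batch_id, steps_per_epoch):
    """Number of batches processed before this one, counted across epochs."""
    return epoch_id * steps_per_epoch + batch_id


train_epoch = 2      # hypothetical number of epochs
steps_per_epoch = 3  # hypothetical number of batches per epoch

# Steps from the running count: strictly increasing, one per batch
steps = [
    global_step(e, b, steps_per_epoch)
    for e in range(train_epoch)
    for b in range(steps_per_epoch)
]

# Steps from a plain product of the indices: several batches share a value
products = [e * b for e in range(train_epoch) for b in range(steps_per_epoch)]
```

With the product, every batch of epoch 0 lands on step 0, so the points overlap on the plot instead of forming a curve.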

TensorFlow

import tensorflow as tf

writer = tf.summary.FileWriter(self.tbdir, sess.graph)
valwriter = tf.summary.FileWriter(self.tbvaldir)

A = tf.summary.histogram('A_' + tensor.op.name, tensor)
B = tf.summary.scalar('B_' + tensor.op.name, tf.nn.zero_fraction(tensor))
val_summaries = []
val_summaries.append(B)

summary_op = tf.summary.merge_all()  # contains both A and B
summary_op_val = tf.summary.merge(val_summaries)  # contains only B

writer.add_summary(sess.run(summary_op, feed_dict={}), float(iter))
valwriter.add_summary(sess.run(summary_op_val, feed_dict={}), float(iter))

writer.close()
valwriter.close()

PaddlePaddle

Use VisualDL; the post below includes installation steps and usage code.

Link: https://blog.csdn.net/qq_33200967/article/details/79127175