spark-submit openCypher Morpheus Examples Tutorial


- 1. Project Preparation

- 1.1 Install Spark

- 1.2 Download and Build Morpheus

- 2. Submit the Morpheus Examples to the Spark Cluster

- 2.1 Package morpheus-examples

- 2.2 Run morpheus-examples on the Spark Cluster

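1. Project Preparation

My environment is a CentOS 7 cluster of ten machines, each with 64 GB of memory and 4 CPUs.

To run opencypher-morpheus-example on a Spark cluster, you first need to install Spark on the cluster and then clone the Morpheus project onto the Spark master node.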

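1.1 Install Spark

A Spark cluster depends on Scala, the JDK, and Hadoop.

For step-by-step Spark installation instructions, see the blog post "Installing Spark on a Linux Cluster"; it also links to a tutorial on setting up a Hadoop cluster: "Installing a Hadoop Cluster on Linux".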

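I installed Scala 2.12.8, JDK 1.8, Hadoop 2.8.1, and Spark 2.4.2. The Spark 2.4.2 download is spark-2.4.2-bin-hadoop2.7.tgz, and the Morpheus Git repository is opencypher/morpheus.

Pay close attention to version numbers! The prebuilt Spark 2.4.2 is compiled with Scala 2.12, whereas Spark 2.4.1 and Spark 2.4.3 are compiled with Scala 2.11. Morpheus must be built with Scala 2.12, so if you install Spark 2.4.3, submitting a Morpheus program to Spark fails with a NoSuchMethodError. The root cause is that Morpheus and Spark depend on different Scala versions. I have already fallen into this trap, so please watch your version numbers!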
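One way to catch the Scala mismatch before submitting anything is to look at which scala-library jar a Spark binary distribution ships in `$SPARK_HOME/jars` (running `spark-submit --version` also prints a "Using Scala version ..." line). The helper functions below are a hypothetical sketch, not part of Spark or Morpheus; they assume the standard binary layout with a single `scala-library-<version>.jar` in `jars/`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Scala binary version (e.g. "2.12") a Spark distribution
# was built with, by inspecting the scala-library jar in <spark_home>/jars.
spark_scala_version() {
  local spark_home="${1:-$SPARK_HOME}" jar full
  for jar in "$spark_home"/jars/scala-library-*.jar; do
    # Without a match the glob stays literal, so -e fails and we report an error.
    [ -e "$jar" ] || { echo "no scala-library jar under $spark_home/jars" >&2; return 1; }
    full="${jar##*/scala-library-}"   # e.g. 2.12.8.jar
    full="${full%.jar}"                # e.g. 2.12.8
    echo "${full%.*}"                  # binary version, e.g. 2.12
    return 0
  done
}

# Hypothetical helper: fail if the Spark installation is not built with Scala 2.12,
# which Morpheus requires.
check_scala_for_morpheus() {
  local spark_home="${1:-$SPARK_HOME}" v
  v="$(spark_scala_version "$spark_home")" || return 1
  if [ "$v" != "2.12" ]; then
    echo "Spark at $spark_home uses Scala $v; Morpheus needs Scala 2.12" >&2
    return 1
  fi
}

# Usage on the master node:
# check_scala_for_morpheus /opt/spark-2.4.2-bin-hadoop2.7 && echo "Scala versions match"
```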

Here are the installation paths, which are the same on every machine in my cluster:

- Spark path (SPARK_HOME): /opt/spark-2.4.2-bin-hadoop2.7/

- Hadoop path (HADOOP_HOME): /opt/hadoop/

- JDK path (JAVA_HOME): /usr/java/jdk1.8.0_201-amd64/

- Scala path (SCALA_HOME): /opt/scala-2.12.8/

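Check that these installation paths are set as environment variables. If they are not, open /etc/profile with vim, add the variables, save and exit, and then run source /etc/profile so they take effect. Below is part of my /etc/profile: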

export JAVA_HOME=/usr/java/jdk1.8.0_201-amd64

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/opt/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export SCALA_HOME=/opt/scala-2.12.8

export PATH=$PATH:$SCALA_HOME/bin

export SPARK_HOME=/opt/spark-2.4.2-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin

export SBT_HOME=/usr/local/sbt/sbt

export PATH=$PATH:$SBT_HOME/bin

export NGINX_HOME=/usr/local/nginx

export PATH=$PATH:$NGINX_HOME/sbin
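After running `source /etc/profile`, it is worth confirming on each node that every `*_HOME` variable is set and points at an existing directory. The function below is a hypothetical sketch (its name is illustrative); it relies on bash's indirect expansion `${!name}`, so it must run under bash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that each named variable is set and is a directory.
# Returns 0 if all are fine, 1 otherwise; problems are reported on stderr.
check_homes() {
  local name value missing=0
  for name in "$@"; do
    value="${!name}"   # bash indirect expansion: the value of the variable called $name
    if [ -z "$value" ]; then
      echo "$name is not set" >&2; missing=1
    elif [ ! -d "$value" ]; then
      echo "$name=$value is not a directory" >&2; missing=1
    fi
  done
  return "$missing"
}

# Usage on each node:
# check_homes JAVA_HOME HADOOP_HOME SCALA_HOME SPARK_HOME && echo "environment OK"
```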

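Next, check the Spark configuration files. The first is conf/spark-env.sh in the Spark installation directory; my configuration is shown below. SPARK_EXECUTOR_MEMORY is optional, because you can also set executor memory with a command-line option when you submit a Spark job. SPARK_MASTER_IP is the public IP address of your cluster's master node (in Spark 2.x this variable is deprecated in favor of SPARK_MASTER_HOST).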

export HADOOP_HOME=/opt/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export SCALA_HOME=/opt/scala-2.12.8

export SPARK_HOME=/opt/spark-2.4.2-bin-hadoop2.7

export SPARK_MASTER_IP=59.78.194.63

export SPARK_EXECUTOR_MEMORY=10G

Next is conf/spark-defaults.conf in the Spark installation directory:

spark.driver.memory 5g

spark.executor.memory 10g

spark.sql.warehouse.dir /opt/spark-2.4.2-bin-hadoop2.7/tmp/wareHouse/

spark.sql.catalogImplementation hive
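The Hive catalog property is `spark.sql.catalogImplementation`; `CATALOG_IMPLEMENTATION.key` is only the Scala constant that evaluates to that string inside Spark code, and spark-submit ignores properties in this file whose names do not start with `spark.`. The same setting can also be passed per job with `spark-submit --conf spark.sql.catalogImplementation=hive`. To double-check what a node's file actually contains, a small reader like the hypothetical `conf_get` below can help; it assumes whitespace-separated key/value lines as in the file above and, like Java properties loading, lets a later duplicate key win.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a key from a spark-defaults.conf-style file.
# Lines whose first field is the key match; comment lines ("#...") never do.
# Exits non-zero if the key is absent.
conf_get() {
  local file="$1" key="$2"
  awk -v k="$key" '
    $1 == k { $1 = ""; sub(/^[ \t]+/, ""); v = $0 }   # drop the key, keep the value
    END { if (v == "") exit 1; print v }
  ' "$file"
}

# Usage:
# conf_get "$SPARK_HOME/conf/spark-defaults.conf" spark.sql.catalogImplementation
```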

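List the IP addresses of the slave (worker) nodes, one per line, in the conf/slaves file under the Spark installation directory, for example: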

192.168.2.3

192.168.2.4

...

192.168.2.11

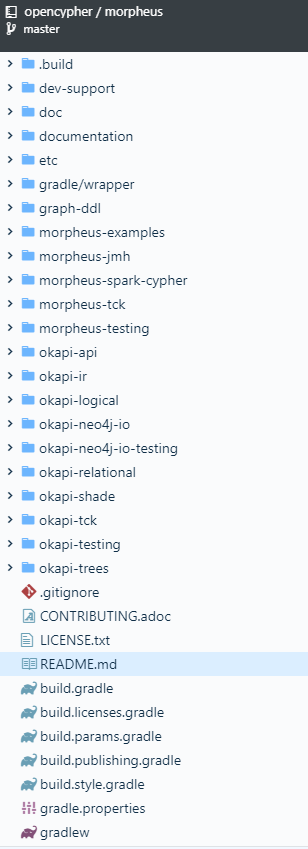
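Because the worker addresses form a simple range, the slaves file can be generated instead of typed by hand. It is also common practice to keep spark-env.sh and spark-defaults.conf identical on every node. The sketch below shows both steps; the function names are hypothetical, it assumes the same install path on every machine (as listed above) and passwordless SSH from the master to each worker (the cluster start scripts connect to workers over SSH as well). `SCP` can be overridden, e.g. `SCP=echo`, for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write one IP per line, <prefix>.<first> .. <prefix>.<last>.
write_slaves() {
  local out="$1" prefix="$2" first="$3" last="$4" i
  : > "$out"                                  # truncate or create the file
  for ((i = first; i <= last; i++)); do
    echo "$prefix.$i" >> "$out"
  done
}

# Hypothetical helper: copy spark-env.sh and spark-defaults.conf to every host
# listed in a slaves file, into the same conf directory on the remote side.
sync_spark_conf() {
  local slaves="$1" conf_dir="$2" host
  while read -r host || [ -n "$host" ]; do    # also handle a last line without newline
    case "$host" in ''|'#'*) continue ;; esac # skip blank lines and comments
    # </dev/null keeps scp/ssh from consuming the rest of the slaves file on stdin.
    "${SCP:-scp}" "$conf_dir/spark-env.sh" "$conf_dir/spark-defaults.conf" \
      "$host:$conf_dir/" < /dev/null || return 1
  done < "$slaves"
}

# Usage on the master:
# write_slaves "$SPARK_HOME/conf/slaves" 192.168.2 3 11
# sync_spark_conf "$SPARK_HOME/conf/slaves" "$SPARK_HOME/conf"
```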

1.2 Download and Build Morpheus

Download the Morpheus 0.4.0 release, extract it, change into the morpheus-0.4.0/ folder, and build Morpheus with:

./gradlew build

The build takes a long time, so be patient.

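2. Submit the Morpheus Examples to the Spark Cluster

This section shows how to package the Morpheus examples into a jar and submit them to the Spark cluster with the spark-submit command.

2.1 Package morpheus-examples

We need to package the morpheus-examples submodule together with its dependencies so that the resulting jar can run on its own. I tried many approaches and spent a whole day before finding one that works:

Go into the morpheus-0.4.0/morpheus-examples/ folder and change build.gradle to the following: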

import com.github.jengelman.gradle.plugins.shadow.tasks.ShadowJar

description = 'Collection of examples for Cypher for Apache Spark'

dependencies {

compile project(':morpheus-spark-cypher')

compile project(':morpheus-testing')

compile group: 'org.apache.logging.log4j', name: 'log4j-core', version: ver.log4j.main

compile group: 'org.apache.spark', name: "spark-graphx".scala(), version: ver.spark

compile group: 'io.netty', name: 'netty-all', version: ver.netty

compile group: 'com.h2database', name: 'h2', version: ver.h2

}

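// Optional shadow fat jar (classifier 'all') that leaves out the Scala libraries; `./gradlew jar` does not run this task

task allJarEg(type: ShadowJar) {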

classifier = 'all'

zip64 = true

from project.sourceSets.main.output

configurations = [project.configurations.runtime]

dependencies {

exclude(dependency('org.scala-lang:'))

exclude(dependency('org.scala-lang.modules:'))

}

exclude "META-INF/versions/**/*"

}

jar {

zip64 true

archiveName = "morpheus-examples.jar"

// Unpack every compile dependency into the jar so it can be submitted on its own

from {

configurations.compile.collect {

it.isDirectory() ? it : zipTree(it)

}

}

manifest {

// Placeholder entry point; spark-submit selects the class to run with --class

attributes 'Main-Class': 'Main'

}

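// Drop signature files copied from signed dependencies; they no longer match the merged jar and would cause a SecurityException

exclude 'META-INF/*.RSA', 'META-INF/*.SF', 'META-INF/*.DSA'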

}

pub.full.artifacts += 'allJarEg'

tasks.test.dependsOn(":okapi-neo4j-io-testing:neo4jStart")

tasks.test.finalizedBy(":okapi-neo4j-io-testing:neo4jStop")

Then go into the morpheus-0.4.0/ directory and run

./gradlew jar -x test

When it finishes, you will find morpheus-examples.jar in morpheus-examples/build/libs/. This is the jar we submit to the Spark cluster!

2.2 Run morpheus-examples on the Spark Cluster

First make sure your Spark cluster and Hadoop cluster are running.
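Hadoop is typically started with `$HADOOP_HOME/sbin/start-dfs.sh` (plus `start-yarn.sh` if you use YARN) and the standalone Spark cluster with `$SPARK_HOME/sbin/start-all.sh`; `jps` on the master should then list a `Master` process. Because the /etc/profile above puts `$HADOOP_HOME/sbin` on `PATH`, a bare `start-all.sh` resolves to Hadoop's script, so call Spark's by its full path. Before submitting, the hypothetical check below confirms that the master's port (7077 by default for a standalone master) accepts TCP connections; it uses bash's `/dev/tcp` redirection and coreutils `timeout`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port opens within 5 seconds.
# bash opens a socket when redirecting to /dev/tcp/<host>/<port>.
master_reachable() {
  local host="$1" port="${2:-7077}"
  timeout 5 bash -c "exec 3<>\"/dev/tcp/$host/$port\"" 2>/dev/null
}

# Usage from the machine you submit from:
# master_reachable 192.168.2.2 7077 && echo "master is listening" || echo "start the cluster first"
```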

Then go into the morpheus-0.4.0/ folder and run CaseClassExample from morpheus-examples with:

spark-submit --class "org.opencypher.morpheus.examples.CaseClassExample" --master spark://192.168.2.2:7077 morpheus-examples/build/libs/morpheus-examples.jar

Here 192.168.2.2 is the internal IP address of my Spark cluster's master node.
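Since every example is submitted the same way and only the class name changes, a small wrapper saves retyping the package prefix, master URL, and jar path. This is a hypothetical convenience function; its defaults mirror the command above, `SPARK_SUBMIT`, `MASTER_URL`, and `MORPHEUS_JAR` are illustrative override variables, and extra arguments such as `--executor-memory 10g` (a standard spark-submit option) are passed through before the jar.

```shell
#!/usr/bin/env bash
# Hypothetical helper: submit one class from org.opencypher.morpheus.examples.
submit_example() {
  local example="$1"; shift
  local submit="${SPARK_SUBMIT:-spark-submit}"
  local master="${MASTER_URL:-spark://192.168.2.2:7077}"
  local jar="${MORPHEUS_JAR:-morpheus-examples/build/libs/morpheus-examples.jar}"
  # Remaining arguments are spark-submit options; they must come before the jar path.
  "$submit" --class "org.opencypher.morpheus.examples.$example" \
    --master "$master" "$@" "$jar"
}

# Usage, from the morpheus-0.4.0/ directory:
# submit_example CaseClassExample
# submit_example LdbcHiveExampleQ1 --executor-memory 10g
```

Setting `SPARK_SUBMIT=echo` prints the full command without running it, which is a quick way to review what will be submitted.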

If you want to run LdbcHiveExample from morpheus-examples, check that the path of the LDBC dataset it reads is correct. Here is my modified LdbcHiveExample.scala:

package org.opencypher.morpheus.examples

import java.io.File

import java.nio.file.Files

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.internal.StaticSQLConf.CATALOG_IMPLEMENTATION

import org.opencypher.morpheus.api.io.sql.SqlDataSourceConfig.Hive

import org.opencypher.morpheus.api.{GraphSources, MorpheusSession}

import org.opencypher.morpheus.testing.utils.FileSystemUtils._

import org.opencypher.morpheus.util.LdbcUtil._

import org.opencypher.morpheus.util.{App, LdbcUtil}

import org.opencypher.okapi.api.graph.Namespace

/**

* This demo reads data generated by the LDBC SNB data generator and performs the following steps:

*

* 1) Loads the raw CSV files into Hive tables

* 2) Normalizes tables according to the LDBC schema (i.e. place -> [City, Country, Continent])

* 3) Generates a Graph DDL script based on LDBC naming conventions (if not already existing)

* 4) Initializes a SQL PGDS based on the generated Graph DDL file

* 5) Runs a Cypher query over the LDBC graph in Spark

*

* More details about the LDBC SNB data generator are available at https://github.com/ldbc/ldbc_snb_datagen

*/

object LdbcHiveExampleQ1 extends App {

val sparkSession: SparkSession =

SparkSession.builder().appName(s"morpheus-dg60-exp-q1").enableHiveSupport().getOrCreate()

val datasourceName = "warehouse"

val database = "LDBC"

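// Directory holding the LDBC dataset on every machine; it contains only CSV files. No leading slash: "/" is prepended where the path is used below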

val path = "home/aida/Downloads/DATA/DG10/social_network_60_interactive"

implicit val session: MorpheusSession = MorpheusSession.create(sparkSession)

implicit val spark: SparkSession = session.sparkSession

val csvFiles = new File(s"/${path}/").list()

println(s"/${path}/")

spark.sql(s"DROP DATABASE IF EXISTS $database CASCADE")

spark.sql(s"CREATE DATABASE $database")

// Read each LDBC CSV file, inferring its schema while reading, and save it as a Hive table

csvFiles.foreach { csvFile =>

spark.read

.format("csv")

.option("header", value = true)

.option("inferSchema", value = true)

.option("delimiter", "|")

.load(s"file:///$path/$csvFile")

// cast e.g. Timestamp to String

.withCompatibleTypes

.write

.saveAsTable(s"$database.${csvFile.dropRight("_0_0.csv".length)}")

}

// generate GraphDdl file

val graphDdlString = LdbcUtil.toGraphDDL(datasourceName, database)

val graphDdlFile = Files.createTempFile("ldbc", ".ddl").toFile.getAbsolutePath

writeFile(graphDdlFile, graphDdlString)

// create SQL PGDS

val sqlGraphSource = GraphSources

.sql(graphDdlFile)

.withSqlDataSourceConfigs(datasourceName -> Hive)

session.registerSource(Namespace("sql"), sqlGraphSource)

println("----------------START RUNNING CYPHER q1----------------")

val start = System.currentTimeMillis

session.cypher(

s"""

|FROM GRAPH sql.LDBC

|MATCH (p:Person)-[:islocatedin]->(c:City)

|RETURN count(*)

""".stripMargin).show

val end = System.currentTimeMillis

println(s"********Query Time: ${(end - start) * 0.001}s **********")

println("----------------FINISH RUNNING CYPHER q1----------------")

}
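The loading loop derives each Hive table name by dropping the last `"_0_0.csv".length` characters of every file name, and it reads through `file:///` on the executors, so the same directory must exist on every worker. A file that does not end in `_0_0.csv` (LDBC datagen output looks like `person_0_0.csv`) would silently produce a mangled table name. The hypothetical check below lists the table names a directory would yield and flags any entry that does not fit; like `File.list()` in the Scala code it looks at every entry, except that the shell glob skips hidden files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the table name for each entry in an LDBC CSV directory
# (file name minus the "_0_0.csv" suffix). Returns 1 if any entry lacks that suffix.
ldbc_table_names() {
  local dir="$1" f name status=0
  for f in "$dir"/*; do
    [ -e "$f" ] || { echo "no files in $dir" >&2; return 1; }
    name="${f##*/}"
    case "$name" in
      *_0_0.csv) echo "${name%_0_0.csv}" ;;
      *) echo "unexpected entry, table name would be wrong: $name" >&2; status=1 ;;
    esac
  done
  return "$status"
}

# Usage on each worker:
# ldbc_table_names /home/aida/Downloads/DATA/DG10/social_network_60_interactive
```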

After making the changes, go back to the morpheus-0.4.0/ directory and rebuild the jar:

./gradlew jar -x test

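Then submit the example to the Spark cluster. The modified file defines the object LdbcHiveExampleQ1, so that is the class name to pass to --class:

spark-submit --class "org.opencypher.morpheus.examples.LdbcHiveExampleQ1" --master spark://192.168.2.2:7077 morpheus-examples/build/libs/morpheus-examples.jar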

Done!