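Setting up a Kubernetes cluster or a single-node environment with kubeadm (version 1.9)

We recently needed to roll out a Kubernetes container cloud platform, and the first hurdle was installation. Even following the official documentation I hit quite a few pitfalls, so here I share how to install Kubernetes with kubeadm along with the problems I ran into.

I. Preparation

My test environment is CentOS 7. The preparation steps are as follows.

1 Disable the firewall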

systemctl stop firewalld

systemctl disable firewalld

2 Disable swap

swapoff -a

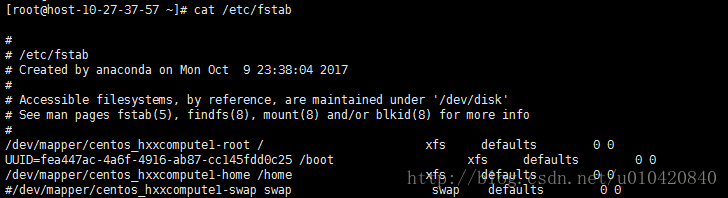

Also edit /etc/fstab and comment out the swap entry so that swap is not mounted again at boot.

Then run free -m to confirm that swap is off.

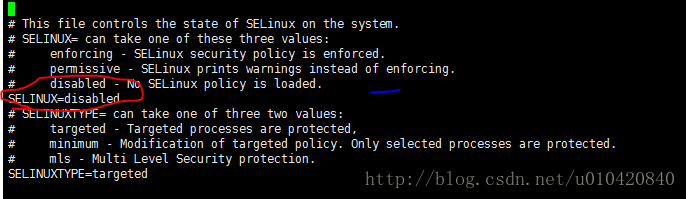
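The /etc/fstab edit can also be scripted. Below is a minimal sketch, assuming GNU sed and a conventional fstab layout in which the swap entry contains the word swap as a whitespace-separated field. The helper name comment_out_swap is made up for illustration.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# comment_out_swap is a hypothetical helper name, not a standard tool.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    # For lines that are not already comments and that contain "swap" as a
    # whitespace-delimited field, prefix the line with '#'.
    # The original file is kept next to it with a .bak suffix.
    sed -i.bak '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}

# Usage (as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
#   free -m    # the Swap row should now show 0 total
```

Keeping the .bak copy makes it easy to restore the file if the pattern matches a line it should not have.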

3 Disable SELinux

vi /etc/sysconfig/selinux

Set SELINUX to disabled (a change in this file takes effect after a reboot).
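For a non-interactive version of this edit, here is a minimal sketch. It assumes that, as on a stock CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config. Since GNU sed -i replaces a symlink with a regular file unless told otherwise, the sketch edits the real file directly. The helper name disable_selinux_config is made up for illustration.

```shell
# Sketch: set SELINUX=disabled in an SELinux config file.
# disable_selinux_config is a hypothetical helper name.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    # Rewrite only the uncommented SELINUX= line; SELINUXTYPE= is left untouched
    # because the pattern requires '=' directly after SELINUX.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root):
#   disable_selinux_config /etc/selinux/config
#   setenforce 0     # switch to permissive mode for the current boot
#   getenforce       # prints Permissive now, Disabled after a reboot
```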

4 Adjust kernel parameters

Edit /etc/sysctl.d/k8s.conf so that it contains the following:

[root@host-10-27-37-57 ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
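Writing the file alone does not change the running kernel, so the settings still have to be loaded. Below is a minimal sketch of creating the file and applying it. It assumes the br_netfilter module is available on the host. The helper name write_k8s_sysctl is made up for illustration.

```shell
# Sketch: write the bridge netfilter settings shown above to a sysctl.d file.
# write_k8s_sysctl is a hypothetical helper name.
write_k8s_sysctl() {
    local conf="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
#   modprobe br_netfilter    # the net.bridge.* keys exist only once this module is loaded
#   write_k8s_sysctl
#   sysctl --system          # reload every sysctl configuration file, including k8s.conf
```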

5 Modify the sshd configuration

echo "ClientAliveInterval 10" >> /etc/ssh/sshd_config

echo "TCPKeepAlive yes" >> /etc/ssh/sshd_config

systemctl restart sshd.service

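II. Installation

1 Install Docker with yum install -y docker-engine, then restart the docker service

The Docker package name differs between yum repositories, so check the exact name in your repository (for example with yum search docker) before installing.

2 Add the Kubernetes repo and install kubeadm, kubelet, and kubectl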

[root@host-10-27-37-57 ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

Then install the packages:

yum install -y kubelet kubeadm kubectl

sudo systemctl enable kubelet && sudo systemctl start kubelet

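3 Steps 1 and 2 above must be performed on every node. On the master node, also do the following:

(1) kubeadm init --kubernetes-version=v1.9.2 --pod-network-cidr=10.244.0.0/16

If it fails, run kubeadm reset to clean up and then try again. The command and its output look like this: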

kubeadm init --kubernetes-version=v1.9.2 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.9.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.12.0-ce. Max validated version: 17.03

[WARNING FileExisting-crictl]: crictl not found in system path

[WARNING HTTPProxy]: Connection to "https://10.27.37.57:6443" uses proxy "http://15061125:[email protected]:8181/". If that is not intended, adjust your proxy settings

[WARNING HTTPProxyCIDR]: connection to "10.96.0.0/12" uses proxy "http://15061125:[email protected]:8181/". This may lead to malfunctional cluster setup. Make sure that Pod and Services IP ranges specified correctly as exceptions in proxy configuration

[WARNING HTTPProxyCIDR]: connection to "10.244.0.0/16" uses proxy "http://15061125:[email protected]:8181/". This may lead to malfunctional cluster setup. Make sure that Pod and Services IP ranges specified correctly as exceptions in proxy configuration

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [host-10-27-37-57 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.27.37.57]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 31.789435 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node host-10-27-37-57 as master by adding a label and a taint

[markmaster] Master host-10-27-37-57 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: f184be.f642e5b3fe60b7a7

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token f184be.f642e5b3fe60b7a7 10.27.37.57:6443 --discovery-token-ca-cert-hash sha256:d7079fa466b6c552ff79a2cca50d33919489ef25ebd15ff64e4eaa90d3110473

(This command is used later to add the other nodes.)

(2) To let kubectl manage the cluster, do the following:

# For a non-root user

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# For the root user

$ export KUBECONFIG=/etc/kubernetes/admin.conf

# Or add it to ~/.bash_profile so that it persists across logins

$ echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

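(3) Install a network add-on (flannel here; its default pod network is 10.244.0.0/16, which is why that value was passed to --pod-network-cidr)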

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml

After installation, run kubectl get pods --all-namespaces to check whether kube-dns came up. Once the kube-dns pod shows Running, the installation succeeded.
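The check can be automated instead of read by eye. Below is a minimal sketch that reads the output of kubectl get pods --all-namespaces on standard input. It assumes the default column layout (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE). The helper name kube_dns_running is made up for illustration.

```shell
# Sketch: succeed only if at least one kube-dns pod is listed and every
# kube-dns pod has STATUS Running.
# kube_dns_running is a hypothetical helper name.
kube_dns_running() {
    awk '
        NR == 1 { next }                    # skip the header row
        $2 ~ /^kube-dns/ {                  # column 2 is the pod NAME
            seen = 1
            if ($4 != "Running") bad = 1    # column 4 is STATUS
        }
        END { exit (seen && !bad) ? 0 : 1 }
    '
}

# Usage:
#   kubectl get pods --all-namespaces | kube_dns_running && echo "kube-dns is up"
```

The pod can take a few minutes to reach Running after the network add-on is applied, so the check may need to be repeated.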

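(4) To protect the master, pods are not scheduled on the master node by default. If you have only a single node, or you want to run workloads on the master as well, remove this restriction with the following command: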

kubectl taint nodes --all node-role.kubernetes.io/master-

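4 With only one node, the steps above already give you a single-node Kubernetes environment. To add other machines as worker nodes, do the following:

Log in to each additional node and run the kubeadm join command printed by kubeadm init above to add it to the Kubernetes cluster:

kubeadm join --token f184be.f642e5b3fe60b7a7 10.27.37.57:6443 --discovery-token-ca-cert-hash sha256:d7079fa466b6c552ff79a2cca50d33919489ef25ebd15ff64e4eaa90d3110473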
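If the join command from kubeadm init was lost, or the bootstrap token has expired (by default after 24 hours, as far as I know), the pieces can be rebuilt on the master. The sketch below follows the openssl pipeline from the kubeadm documentation to recompute the value for --discovery-token-ca-cert-hash. It assumes the CA certificate is at the default path /etc/kubernetes/pki/ca.crt and uses an RSA key. The helper name ca_cert_hash is made up for illustration.

```shell
# Sketch: print the SHA-256 hash of the cluster CA public key, i.e. the hex
# part that follows "sha256:" in --discovery-token-ca-cert-hash.
# ca_cert_hash is a hypothetical helper name.
ca_cert_hash() {
    local ca="${1:-/etc/kubernetes/pki/ca.crt}"
    # Extract the public key, convert it to DER, hash it, and keep only the hex digest.
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage (on the master, as root):
#   kubeadm token create                 # prints a fresh bootstrap token
#   echo "sha256:$(ca_cert_hash)"        # value for --discovery-token-ca-cert-hash
# kubeadm 1.9 should also accept, to the best of my knowledge:
#   kubeadm token create --print-join-command
```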

For common failures, see the official troubleshooting documentation:

https://kubernetes.io/docs/setup/independent/troubleshooting-kubeadm/

If you run into problems during installation, leave a comment. I will answer the ones I have encountered myself.