Multithreaded Scraping of a 学习通 (Xuexitong) Question Bank

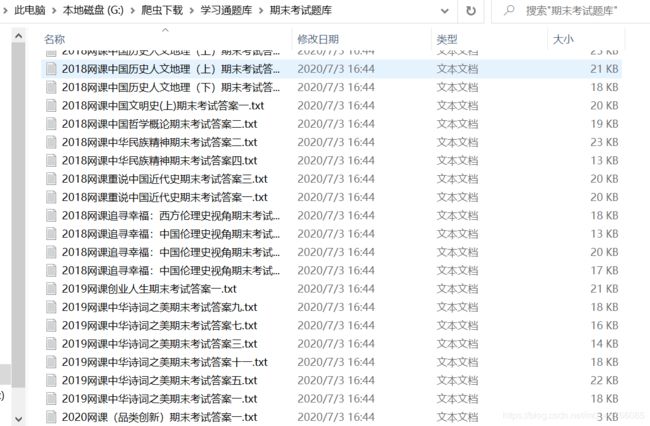

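Today I came across a website that hosts a 学习通 (Xuexitong) question bank. I have recently been looking into how to build a question bank myself, so I wrote a multithreaded crawler to scrape every question on the site.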

Here is the code I wrote:

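```python
import os
import queue
import re
import threading

import requests
from lxml import etree

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36',
}

# Save location (backslashes escaped so "\爬" etc. are not treated as escape sequences)
PATH = "G:\\爬虫下载\\学习通题库\\期末考试题库\\"

# Queue shared by the collector thread and the download threads
Q = queue.Queue()


# Put items into the queue (collector function)
def craw_list(i):
    url = 'http://www.cxeytk.com/ajax/ajaxLoadModuleDom_h.jsp'
    data = {
        'cmd': 'getWafNotCk_getAjaxPageModuleInfo',
        '_colId': '105',
        '_extId': '0',
        'moduleId': '328',
        'href': '/col.jsp?m328pageno={}&id=105'.format(i),
        'newNextPage': 'false',
        'needIncToVue': 'false',
    }
    r = requests.post(url=url, headers=headers, data=data, timeout=10)
    # Question-bank links; the pattern must capture two groups: (relative link, title)
    ret_list = re.findall(r"", r.text)
    for ret in ret_list:
        link = 'http://www.cxeytk.com/' + ret[0].replace(r'\"', '')
        title = ret[1].replace(r'\"', '')
        # print(link, title)
        Q.put([link, title])


# Take items from the queue (download function)
def run():
    # Keep reading from the queue
    while True:
        try:
            # Take ONE [link, title] item and unpack it
            # (two separate Q.get() calls would consume two different items)
            link, title = Q.get(timeout=3)
        except queue.Empty:
            # Stop only when the collector has finished AND nothing is left
            if not t1.is_alive() and Q.empty():
                # print("Collection finished!!!")
                break
            continue
        # Send a GET request to the link
        r = requests.get(url=link, headers=headers, timeout=10)
        # Get all text nodes of the question body
        try:
            tree = etree.HTML(r.text)
            text_list = tree.xpath('//div[@class="richContent richContent3"]//text()')
        except Exception:  # page could not be parsed, e.g. an empty response
            text_list = []
        # print(text_list)
        content = ''.join(text + '\n' for text in text_list)
        # Replace characters that Windows does not allow in file names
        filename = re.sub(r'[\\/:*?"<>|]', '_', title)
        with open(PATH + '{}.txt'.format(filename), 'w', encoding='utf-8') as f:
            f.write(content)
        # User-friendly progress message
        print('==={}=== downloaded'.format(title))


def main(start, end):
    # Collect list pages start .. end-1
    for i in range(start, end):
        craw_list(i)


if __name__ == '__main__':
    # Create the save directory once, before any download thread writes to it
    os.makedirs(PATH, exist_ok=True)
    # Start the threads
    # Collector
    t1 = threading.Thread(target=main, args=(1, 37))
    # Downloaders
    t2 = threading.Thread(target=run)
    t3 = threading.Thread(target=run)
    t4 = threading.Thread(target=run)
    t1.start()
    t2.start()
    t3.start()
    t4.start()
```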
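The download threads decide when to stop by checking `t1.is_alive()` and the queue state. A common alternative for this producer-consumer setup, sketched here under the assumption that the number of download threads is known in advance, is to have the collector put one sentinel (`None`) per worker on the queue once it is done; each worker then simply blocks on `Q.get()` and exits when it receives a sentinel. The names `crawl`, `handle`, and `fake_pages` are hypothetical stand-ins for `craw_list` and the download step, so the sketch runs without touching the network.

```python
import queue
import threading

NUM_WORKERS = 3
SENTINEL = None  # tells one worker "no more work"


def producer(q, items, num_workers):
    """Put every item on the queue, then one sentinel per worker."""
    for item in items:
        q.put(item)
    for _ in range(num_workers):
        q.put(SENTINEL)


def handle(link, title):
    # Hypothetical stand-in for "download the page and write <title>.txt"
    return '{} <- {}'.format(title, link)


def worker(q, results, lock):
    """Process [link, title] items until a sentinel arrives."""
    while True:
        item = q.get()  # blocks; no timeout or is_alive() polling needed
        if item is SENTINEL:
            break
        link, title = item  # one get() per item, unpacked once
        with lock:
            results.append(handle(link, title))


def crawl(items, num_workers=NUM_WORKERS):
    q = queue.Queue()
    results = []
    lock = threading.Lock()
    workers = [threading.Thread(target=worker, args=(q, results, lock))
               for _ in range(num_workers)]
    for t in workers:
        t.start()
    producer(q, items, num_workers)  # runs in the main thread in this sketch
    for t in workers:
        t.join()  # every worker exits after consuming its sentinel
    return results


if __name__ == '__main__':
    fake_pages = [['http://example.com/{}'.format(i), 'title{}'.format(i)]
                  for i in range(10)]
    for line in sorted(crawl(fake_pages)):
        print(line)
```

Because each worker consumes exactly one sentinel, no worker can be left blocked on `Q.get()`, and there is no window in which an item arrives after a worker has already decided to quit.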