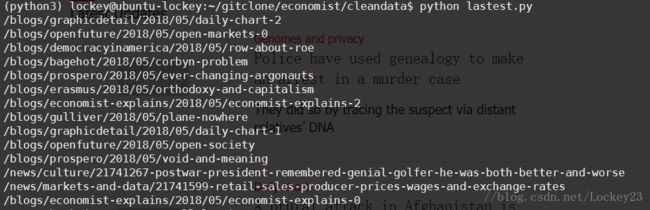

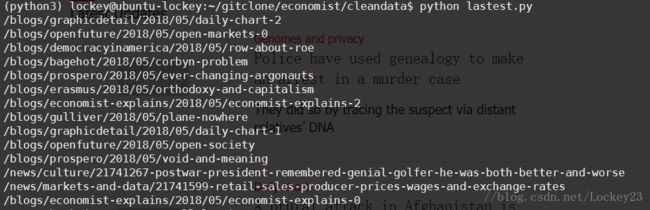

The screenshots above come first.

1. First, crawl the links to the newest articles from the latest-updates list page

import json
import os
import random
import re
import time
import urllib.request

import requests
from lxml import etree

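paperRecords = {}
# Load the crawl history; start with an empty record if the file does not exist yet.
if os.path.exists('spiRecords.json'):
    with open('spiRecords.json', 'r') as fel:
        paperRecords = json.load(fel)
# Links recorded by the previous run, used to skip articles that were already saved.
lastLst = paperRecords.get('lastLst', [])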

dateStr = '2018-05-02'
toYear, toMonth, toDay = map(int, dateStr.split('-'))
# Record keys are prefixed with 'a', e.g. 'a2018', 'a5', 'a2'.
strY = 'a' + str(toYear)
strM = 'a' + str(toMonth)
strD = 'a' + str(toDay)

# Make sure paperRecords[strY][strM][strD] exists (year -> month -> day -> list of records).
paperRecords.setdefault(strY, {}).setdefault(strM, {}).setdefault(strD, [])

headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36',
}

def get_ahrefs():
    # Fetch the latest-updates page and return the article links found on it.
    url = 'https://economist.com/latest-updates'
    req = urllib.request.Request(url=url, headers=headers, method='GET')
    response = urllib.request.urlopen(req)
    html = response.read()
    selector = etree.HTML(html.decode('utf-8'))
    # href of every <a> whose class attribute is exactly "teaser__link"
    aa = '//a[@class="teaser__link"]/@href'
    ahref = selector.xpath(aa)
    arr = []
    for a in ahref:
        arr.append(a)
        print(a)
    return arr
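The link-extraction step can be tried offline before hitting the real site. The sketch below is only an illustration: it swaps lxml for the standard library's `html.parser` so it runs without extra installs, and `TeaserLinkParser`, `extract_teaser_links`, and the sample HTML are all made up for this example. It also shows `urljoin` as one way to turn the relative links into full URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class TeaserLinkParser(HTMLParser):
    """Collect href values of <a> tags whose class is exactly 'teaser__link'."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if (tag == 'a'
                and attr_map.get('class') == 'teaser__link'
                and attr_map.get('href')):
            self.links.append(attr_map['href'])


def extract_teaser_links(html_text, base_url='https://economist.com'):
    """Return absolute URLs for every teaser link in html_text (hypothetical helper)."""
    parser = TeaserLinkParser()
    parser.feed(html_text)
    return [urljoin(base_url, href) for href in parser.links]


# Made-up markup that only mimics the class name used in the XPath above.
SAMPLE_HTML = """
<div>
  <a class="teaser__link" href="/news/sample-one">One</a>
  <a class="other" href="/ignored">Ignored</a>
  <a class="teaser__link" href="/news/sample-two">Two</a>
</div>
"""

links = extract_teaser_links(SAMPLE_HTML)
print(links)
```

If the site changes its class names, a check like this against a saved copy of the page makes the breakage easy to spot.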

2. Then go through the list of links one by one and crawl each article's title, description, and body text

def get_artiles(url):
    # Fetch one article; return {'head': [...], 'ps': [...]}, or None if anything fails.
    req = urllib.request.Request(url=url, headers=headers, method='GET')
    try:
        response = urllib.request.urlopen(req)
        html = response.read()
        pout = []
        hout = []
        selector = etree.HTML(html.decode('utf-8'))
        # Headline: the text of the first and second <span> inside the <h1>.
        headline = '//h1[@class="flytitle-and-title__body"]'
        head = selector.xpath(headline)
        for item in head:
            a = item.xpath('span[1]/text()')[0]
            b = item.xpath('span[2]/text()')[0]
            hout.append(a)
            hout.append(b)
        # Description line under the headline.
        des = '//p[@class="blog-post__rubric"]/text()'
        desa = selector.xpath(des)
        for de in desa:
            hout.append(de)
        print(hout)
        # Body paragraphs.
        ps = '//div[@class="blog-post__text"]'
        p = selector.xpath(ps)
        for item in p:
            pt = item.xpath('p/text()')
            for po in pt:
                pout.append(po)
        print(pout)
        result = {'head': hout, 'ps': pout}
        return result
    except Exception as err:
        print(err, url)
        return None
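One detail of the body extraction is worth checking on a small sample: `p/text()` returns only the text nodes that sit directly under each `<p>`, so words wrapped in inline tags such as `<em>` or `<a>` would be left out. The sketch below is a stand-in, not the crawler itself: it uses the standard library's `xml.etree.ElementTree` on a made-up, well-formed snippet (real pages would still need lxml's HTML parser), and `parse_sample` is a hypothetical helper. It gathers each paragraph with `itertext()`, which lxml elements also provide.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup that reuses the class names from the XPath queries.
SAMPLE_ARTICLE = """
<html><body>
  <h1 class="flytitle-and-title__body">
    <span>Sample flytitle</span>
    <span>Sample title</span>
  </h1>
  <p class="blog-post__rubric">A made-up rubric.</p>
  <div class="blog-post__text">
    <p>Plain paragraph.</p>
    <p>Text with <em>emphasis</em> inside.</p>
  </div>
</body></html>
"""


def parse_sample(xml_text):
    """Return {'head': [...], 'ps': [...]} in the same shape as the crawler's result."""
    root = ET.fromstring(xml_text.strip())
    head = []
    for h1 in root.findall(".//h1[@class='flytitle-and-title__body']"):
        head.append(h1.find('span[1]').text)   # first span
        head.append(h1.find('span[2]').text)   # second span
    for rubric in root.findall(".//p[@class='blog-post__rubric']"):
        head.append(rubric.text)
    paragraphs = []
    for div in root.findall(".//div[@class='blog-post__text']"):
        for p in div.findall('p'):
            # itertext() walks nested inline tags, so no words are dropped.
            paragraphs.append(''.join(p.itertext()))
    return {'head': head, 'ps': paragraphs}


article = parse_sample(SAMPLE_ARTICLE)
print(article)
```

Whether the extra text matters depends on how many links and emphasised phrases the articles contain; for plain paragraphs both approaches give the same result.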

3. Run it:

if __name__ == '__main__':
    linkArr = get_ahrefs()
    time.sleep(20)
    tmpLast = []
    toDayDir = './mds/' + dateStr + '/papers/'
    if not os.path.exists(toDayDir):
        os.makedirs(toDayDir)
    for item in linkArr:
        print(item)
        # Remember every link on the page so the next run skips all of them.
        tmpLast.append(item)
        if item not in lastLst:
            url = 'https://economist.com' + item
            article = get_artiles(url)
            try:
                # article['head'][1] is the second headline span; it doubles as the file name.
                paperRecords[strY][strM][strD].append([item, article['head'][1]])
                paperName = '_'.join(article['head'][1].split(' '))
                saveMd = toDayDir + paperName + '.md'
                result = article['head'] + article['ps']
                output = '\n\n'.join(result)
                with open(saveMd, 'w', encoding='utf-8') as fw:
                    fw.write(output)
                # Pause between articles to avoid hammering the site.
                time.sleep(60)
            except Exception as err:
                print(err)
    paperRecords['lastLst'] = tmpLast
    with open('spiRecords.json', 'w') as fwp:
        json.dump(paperRecords, fwp)
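The shape of `spiRecords.json` is easier to see in isolation. The following is a minimal sketch under some assumptions: `day_bucket` and `select_new_links` are hypothetical helpers, the links and title are invented, and storing the full current link list as `lastLst` is my reading of the intended behaviour. It writes to a temporary directory, so it does not touch the real records file.

```python
import json
import os
import tempfile


def day_bucket(records, date_str):
    """Return the list for date_str, creating the year/month/day levels as needed."""
    year, month, day = map(int, date_str.split('-'))
    return (records.setdefault('a%d' % year, {})
                   .setdefault('a%d' % month, {})
                   .setdefault('a%d' % day, []))


def select_new_links(current_links, last_links):
    """Keep only the links that were not seen on the previous run, in page order."""
    seen = set(last_links)
    return [link for link in current_links if link not in seen]


# Invented state: one link was saved by an earlier run, one is new today.
records = {'lastLst': ['/news/sample-one']}
current = ['/news/sample-one', '/news/sample-two']

new_links = select_new_links(current, records['lastLst'])
for link in new_links:
    day_bucket(records, '2018-05-02').append([link, 'Sample title'])
records['lastLst'] = current

# Round-trip through a temporary file to show what ends up on disk.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'spiRecords.json')
    with open(path, 'w') as fw:
        json.dump(records, fw)
    with open(path) as fr:
        reloaded = json.load(fr)

print(json.dumps(reloaded, indent=2))
```

Each day's list holds `[link, title]` pairs, which matches what the main loop appends for every saved article.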