The Kernel Boot Process

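The previous post explained how computers boot up, right up to the point where the boot loader, after stuffing the kernel image into memory, is about to jump into the kernel entry point. This last post about booting takes a look at the guts of the kernel to see how an operating system starts life.

Since I have an empirical bent, I'll link heavily to the sources for Linux kernel 2.6.25.6 at the Linux Cross Reference. The sources are very readable if you are familiar with C-like syntax; even if you miss some details, you can get the gist of what is happening. The main obstacle is the lack of context around some of the code, such as when or why it runs, or the underlying features of the computer. I hope to provide a bit of that context.

Due to brevity (hah!), a lot of fun stuff, like interrupts and memory, gets only a nod for now. The post ends with the highlights for the Windows boot.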

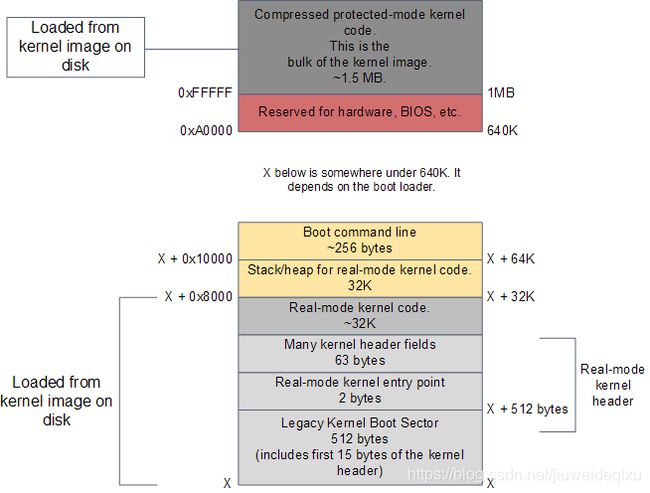

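At this point in the Intel x86 boot story the processor is running in real mode, is able to address 1 MB of memory, and RAM looks like this for a modern Linux system:

RAM contents after the boot loader is done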

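The kernel image has been loaded into memory by the boot loader using the BIOS disk I/O services. This image is an exact copy of the file on your hard drive that contains the kernel, e.g. /boot/vmlinuz-2.6.22-14-server. The image is split into two pieces: a small part containing the real-mode kernel code is loaded below the 640K barrier; the bulk of the kernel, which runs in protected mode, is loaded after the first megabyte of memory.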

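The action starts in the real-mode kernel header pictured above. This region of memory is used to implement the Linux boot protocol between the boot loader and the kernel. Some of the values there are read by the boot loader while doing its work. These include amenities such as a human-readable string containing the kernel version, but also crucial information like the size of the real-mode kernel piece. The boot loader also writes values to this region, such as the memory address of the command-line parameters given by the user in the boot menu. Once the boot loader is finished, it has filled in all of the parameters required by the kernel header.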
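To make the two-way use of the header concrete, here is a minimal sketch in C of a boot loader reading two header fields and writing one. The offsets (0x1f1 for `setup_sects`, 0x202 for the `HdrS` signature, 0x228 for `cmd_line_ptr`) are quoted from the Linux/x86 boot protocol as I remember it, and the function names are hypothetical, so check both against the boot protocol document in your kernel tree before relying on them.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Offsets into the kernel image. Assumed from the Linux/x86 boot
 * protocol; verify them against your kernel's documentation. */
#define OFF_SETUP_SECTS  0x1f1  /* real-mode code size, in 512-byte sectors */
#define OFF_HEADER_MAGIC 0x202  /* the four bytes "HdrS" */
#define OFF_CMD_LINE_PTR 0x228  /* filled in by the boot loader */

/* Returns 1 if the image carries the "HdrS" signature, 0 otherwise. */
int has_boot_header(const uint8_t *image, size_t len)
{
    if (len < OFF_HEADER_MAGIC + 4)
        return 0;
    return memcmp(image + OFF_HEADER_MAGIC, "HdrS", 4) == 0;
}

/* Size in bytes of the real-mode piece: one boot sector plus
 * setup_sects setup sectors. A stored value of 0 means 4. */
size_t realmode_size(const uint8_t *image, size_t len)
{
    unsigned sects;

    if (len <= OFF_SETUP_SECTS)
        return 0;
    sects = image[OFF_SETUP_SECTS];
    if (sects == 0)
        sects = 4;
    return (size_t)(sects + 1) * 512;
}

/* The boot loader's side: store the 32-bit linear address of the
 * command line, little-endian, in the header. Returns 0 on success. */
int set_cmd_line_ptr(uint8_t *image, size_t len, uint32_t addr)
{
    if (len < OFF_CMD_LINE_PTR + 4)
        return -1;
    image[OFF_CMD_LINE_PTR + 0] = (uint8_t)(addr & 0xff);
    image[OFF_CMD_LINE_PTR + 1] = (uint8_t)((addr >> 8) & 0xff);
    image[OFF_CMD_LINE_PTR + 2] = (uint8_t)((addr >> 16) & 0xff);
    image[OFF_CMD_LINE_PTR + 3] = (uint8_t)((addr >> 24) & 0xff);
    return 0;
}
```

A real boot loader would apply these functions to the first sectors of the vmlinuz file; the sketch only shows the shape of the exchange, with the loader reading the size of the real-mode piece and writing the command-line pointer.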

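It's then time to jump into the kernel entry point.

The diagram below shows the code sequence for the kernel initialization, along with source directories, files, and line numbers:

Architecture-specific Linux Kernel Initialization

The early kernel start-up for the Intel architecture is in the file arch/x86/boot/header.S.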

    /*
     *  header.S
     *
     *  Copyright (C) 1991, 1992 Linus Torvalds
     *
     *  Based on bootsect.S and setup.S
     *  modified by more people than can be counted
     *
     *  Rewritten as a common file by H. Peter Anvin (Apr 2007)
     *
     * BIG FAT NOTE: We're in real mode using 64k segments.  Therefore segment
     * addresses must be multiplied by 16 to obtain their respective linear
     * addresses. To avoid confusion, linear addresses are written using leading
     * hex while segment addresses are written as segment:offset.
     *
     */

It's in assembly language, which is rare for the kernel at large but common for boot code.
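The "BIG FAT NOTE" in the header.S comment describes real-mode address translation: a segment address is multiplied by 16 and added to the offset to obtain the linear address. A minimal sketch of that arithmetic in C (the function name is mine, not the kernel's):

```c
#include <stdint.h>

/* Real-mode translation: linear = segment * 16 + offset.
 * The result can exceed 1 MB by almost 64 KB when the segment is
 * near 0xffff, which is why the A20 gate matters later on. */
uint32_t real_mode_linear(uint16_t segment, uint16_t offset)
{
    return ((uint32_t)segment << 4) + offset;
}
```

Many segment:offset pairs name the same byte. For instance, 0x07c0:0x0000 and 0x0000:0x7c00 both translate to the linear address 0x7c00.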

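The start of this file actually contains boot sector code, a leftover from the days when Linux could work without a boot loader. Nowadays this boot sector, if executed, only prints a "bugger_off_msg" to the user and reboots. Modern boot loaders ignore this legacy code. After the boot sector code we have the first 15 bytes of the real-mode kernel header; these two pieces together add up to 512 bytes, the size of a typical disk sector on Intel hardware.

After these 512 bytes, at offset 0x200, we find the very first instruction that runs as part of the Linux kernel: the real-mode entry point. It's in header.S:110 and it is a 2-byte jump written directly in machine code as 0x3aeb. You can verify this by running hexdump on your kernel image and looking at the bytes at that offset, just as a sanity check to make sure it's not all a dream.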
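The same sanity check can be sketched in C. The 16-bit value 0x3aeb is stored little-endian, so the bytes on disk are 0xeb (the x86 short-jump opcode) followed by 0x3a (a signed 8-bit displacement). The function names below are hypothetical, and the displacement varies between kernel versions, so only the opcode is checked.

```c
#include <stddef.h>
#include <stdint.h>

#define REALMODE_ENTRY 0x200  /* offset of the real-mode entry point */

/* Returns 1 if the image has a short jump (opcode 0xeb) at offset
 * 0x200 and stores its displacement byte in *rel8_out. */
int entry_is_short_jump(const uint8_t *image, size_t len, uint8_t *rel8_out)
{
    if (len < REALMODE_ENTRY + 2)
        return 0;
    if (image[REALMODE_ENTRY] != 0xeb)
        return 0;
    if (rel8_out)
        *rel8_out = image[REALMODE_ENTRY + 1];
    return 1;
}

/* A short jump is relative to the end of the 2-byte instruction,
 * and its displacement is a signed 8-bit value. */
size_t short_jump_target(size_t insn_offset, uint8_t rel8)
{
    long disp = (rel8 >= 0x80) ? (long)rel8 - 0x100 : (long)rel8;
    return (size_t)((long)insn_offset + 2 + disp);
}
```

With the displacement quoted above, the jump lands at 0x200 + 2 + 0x3a = 0x23c in the image, which is where start_of_setup would sit for that particular build.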

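The boot loader jumps to this location when it is finished, which in turn jumps to header.S:229, where we have a regular assembly routine called start_of_setup. This short routine sets up a stack, zeroes the bss segment of the real-mode kernel (the area that contains static variables, so that they start out with zero values), and then jumps to good old C code at arch/x86/boot/main.c:122.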

1/* -*- linux-c -*- ------------------------------------------------------- *

2 *

3 * Copyright (C) 1991, 1992 Linus Torvalds

4 * Copyright 2007 rPath, Inc. - All Rights Reserved

5 *

6 * This file is part of the Linux kernel, and is made available under

7 * the terms of the GNU General Public License version 2.

8 *

9 * ----------------------------------------------------------------------- */

10

11/*

12 * arch/i386/boot/main.c

13 *

14 * Main module for the real-mode kernel code

15 */

16

17#include "boot.h"

18

19struct boot_params boot_params __attribute__((aligned(16)));

20

21char *HEAP = _end;

22char *heap_end = _end; /* Default end of heap = no heap */

23

24/*

25 * Copy the header into the boot parameter block. Since this

26 * screws up the old-style command line protocol, adjust by

27 * filling in the new-style command line pointer instead.

28 */

29

30static void copy_boot_params(void)

31{

32 struct old_cmdline {

33 u16 cl_magic;

34 u16 cl_offset;

35 };

36 const struct old_cmdline * const oldcmd =

37 (const struct old_cmdline *)OLD_CL_ADDRESS;

38

39 BUILD_BUG_ON(sizeof boot_params != 4096);

40 memcpy(&boot_params.hdr, &hdr, sizeof hdr);

41

42 if (!

main() does some housekeeping, such as detecting the memory layout and setting a video mode. It then calls go_to_protected_mode().

1/* -*- linux-c -*- ------------------------------------------------------- *

2 *

3 * Copyright (C) 1991, 1992 Linus Torvalds

4 * Copyright 2007 rPath, Inc. - All Rights Reserved

5 *

6 * This file is part of the Linux kernel, and is made available under

7 * the terms of the GNU General Public License version 2.

8 *

9 * ----------------------------------------------------------------------- */

10

11/*

12 * arch/i386/boot/pm.c

13 *

14 * Prepare the machine for transition to protected mode.

15 */

16

17#include "boot.h"

18#include <asm/segment.h>

19

20/*

21 * Invoke the realmode switch hook if present; otherwise

22 * disable all interrupts.

23 */

24static void realmode_switch_hook(void)

25{

26 if (boot_params.hdr.realmode_swtch) {

27 asm volatile("lcallw *%0"

28 : : "m" (boot_params.hdr.realmode_swtch)

29 : "eax", "ebx", "ecx", "edx");

30 } else {

31 asm volatile("cli");

32 outb(0x80, 0x70); /* Disable NMI */

33 io_delay();

34 }

35}

36

37/*

38 * A zImage kernel is loaded at 0x10000 but wants to run at 0x1000.

39 * A bzImage kernel is loaded and runs at 0x100000.

40 */

41static void move_kernel_around(void)

42{

43 /* Note: rely on the compile-time option here rather than

44 the LOADED_HIGH flag. The Qemu kernel loader unconditionally

45 sets the loadflags to zero. */

46#ifndef __BIG_KERNEL__

47 u16 dst_seg, src_seg;

48 u32 syssize;

49

50 dst_seg = 0x1000 >> 4;

51 src_seg = 0x10000 >> 4;

52 syssize = boot_params.hdr.syssize; /* Size in 16-byte paragraphs */

53

54 while (syssize) {

55 int paras = (syssize >= 0x1000) ? 0x1000 : syssize;

56 int dwords = paras << 2;

57

58 asm volatile("pushw %%es ; "

59 "pushw %%ds ; "

60 "movw %1,%%es ; "

61 "movw %2,%%ds ; "

62 "xorw %%di,%%di ; "

63 "xorw %%si,%%si ; "

64 "rep;movsl ; "

65 "popw %%ds ; "

66 "popw %%es"

67 : "+c" (dwords)

68 : "r" (dst_seg), "r" (src_seg)

69 : "esi", "edi");

70

71 syssize -= paras;

72 dst_seg += paras;

73 src_seg += paras;

74 }

75#endif

76}

77

78/*

79 * Disable all interrupts at the legacy PIC.

80 */

81static void mask_all_interrupts(void)

82{

83 outb(0xff, 0xa1); /* Mask all interrupts on the secondary PIC */

84 io_delay();

85 outb(0xfb, 0x21); /* Mask all but cascade on the primary PIC */

86 io_delay();

87}

88

89/*

90 * Reset IGNNE# if asserted in the FPU.

91 */

92static void reset_coprocessor(void)

93{

94 outb(0, 0xf0);

95 io_delay();

96 outb(0, 0xf1);

97 io_delay();

98}

99

100/*

101 * Set up the GDT

102 */

103#define GDT_ENTRY(flags,base,limit) \

104 (((u64)(base & 0xff000000) << 32) | \

105 ((u64)flags << 40) | \

106 ((u64)(limit & 0x00ff0000) << 32) | \

107 ((u64)(base & 0x00ffffff) << 16) | \

108 ((u64)(limit & 0x0000ffff)))

109

110struct gdt_ptr {

111 u16 len;

112 u32 ptr;

113} __attribute__((packed));

114

115static void setup_gdt(void)

116{

117 /* There are machines which are known to not boot with the GDT

118 being 8-byte unaligned. Intel recommends 16 byte alignment. */

119 static const u64 boot_gdt[] __attribute__((aligned(16))) = {

120 /* CS: code, read/execute, 4 GB, base 0 */

121 [GDT_ENTRY_BOOT_CS] = GDT_ENTRY(0xc09b, 0, 0xfffff),

122 /* DS: data, read/write, 4 GB, base 0 */

123 [GDT_ENTRY_BOOT_DS] = GDT_ENTRY(0xc093, 0, 0xfffff),

124 /* TSS: 32-bit tss, 104 bytes, base 4096 */

125 /* We only have a TSS here to keep Intel VT happy;

126 we don't actually use it for anything. */

127 [GDT_ENTRY_BOOT_TSS] = GDT_ENTRY(0x0089, 4096, 103),

128 };

129 /* Xen HVM incorrectly stores a pointer to the gdt_ptr, instead

130 of the gdt_ptr contents. Thus, make it static so it will

131 stay in memory, at least long enough that we switch to the

132 proper kernel GDT. */

133 static struct gdt_ptr gdt;

134

135 gdt.len = sizeof(boot_gdt)-1;

136 gdt.ptr = (u32)&boot_gdt + (ds() << 4);

137

138 asm volatile("lgdtl %0" : : "m" (gdt));

139}

140

141/*

142 * Set up the IDT

143 */

144static void setup_idt(void)

145{

146 static const struct gdt_ptr null_idt = {0, 0};

147 asm volatile("lidtl %0" : : "m" (null_idt));

148}

149

150/*

151 * Actual invocation sequence

152 */

153void go_to_protected_mode(void)

154{

155 /* Hook before leaving real mode, also disables interrupts */

156 realmode_switch_hook();

157

158 /* Move the kernel/setup to their final resting places */

159 move_kernel_around();

160

161 /* Enable the A20 gate */

162 if (enable_a20()) {

163 puts("A20 gate not responding, unable to boot...\n");

164 die();

165 }

166

167 /* Reset coprocessor (IGNNE#) */

168 reset_coprocessor();

169

170 /* Mask all interrupts in the PIC */

171 mask_all_interrupts();

172

173 /* Actual transition to protected mode... */

174 setup_idt();

175 setup_gdt();

176 protected_mode_jump(boot_params.hdr.code32_start,

177 (u32)&boot_params + (ds() << 4));

178}

179

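Before the CPU can be set to protected mode, however, a few tasks must be done. There are two main issues: interrupts and memory. In real mode the interrupt vector table for the processor sits at memory address 0, whereas in protected mode the location of the interrupt table is stored in a CPU register called IDTR. Meanwhile, the translation of logical memory addresses (the ones programs manipulate) to linear memory addresses (a raw number from 0 to the top of memory) differs between real mode and protected mode. Protected mode requires a register called GDTR to be loaded with the address of a Global Descriptor Table for memory. So go_to_protected_mode() calls setup_idt() and setup_gdt(), both in pm.c, to install a temporary interrupt descriptor table and a temporary global descriptor table. As the listing shows, the temporary IDT is simply empty (null_idt is {0, 0}), which is safe because realmode_switch_hook() and mask_all_interrupts() have already shut interrupts off.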
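To see what setup_gdt() actually installs, the GDT_ENTRY macro from the pm.c listing can be evaluated on an ordinary machine. The sketch below copies the macro's bit layout (with `uint64_t` in place of the kernel's `u64` and extra parentheses), and adds two hypothetical helper functions that decode a descriptor back into its base and limit.

```c
#include <stdint.h>

/* Same bit layout as GDT_ENTRY in pm.c: the base and limit fields
 * are scattered across the 8-byte descriptor. */
#define GDT_ENTRY(flags, base, limit)                      \
    ((((uint64_t)(base)  & 0xff000000ULL) << 32) |         \
     ((uint64_t)(flags) << 40) |                           \
     (((uint64_t)(limit) & 0x00ff0000ULL) << 32) |         \
     (((uint64_t)(base)  & 0x00ffffffULL) << 16) |         \
     ((uint64_t)(limit) & 0x0000ffffULL))

/* Hypothetical helpers: recover the fields from a descriptor. */
uint32_t descriptor_base(uint64_t d)
{
    return (uint32_t)(((d >> 16) & 0xffffff) | (((d >> 56) & 0xff) << 24));
}

uint32_t descriptor_limit(uint64_t d)
{
    return (uint32_t)((d & 0xffff) | (((d >> 48) & 0xf) << 16));
}

/* Bit 55 is the granularity flag: when set, the limit counts
 * 4 KB pages instead of bytes. */
int descriptor_page_granular(uint64_t d)
{
    return (int)((d >> 55) & 1);
}

/* The three boot descriptors, with the arguments used in pm.c. */
uint64_t boot_cs(void)  { return GDT_ENTRY(0xc09b, 0, 0xfffff); }
uint64_t boot_ds(void)  { return GDT_ENTRY(0xc093, 0, 0xfffff); }
uint64_t boot_tss(void) { return GDT_ENTRY(0x0089, 4096, 103); }
```

The code descriptor works out to 0x00cf9b000000ffff: base 0 and a limit of 0xfffff pages of 4 KB each, which is the flat 4 GB segment promised by the comments in the listing.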

We're now ready for the plunge into protected mode, which is done by protected_mode_jump, another assembly routine (in pmjump.S).

    /* ----------------------------------------------------------------------- *
     *
     *   Copyright (C) 1991, 1992 Linus Torvalds
     *   Copyright 2007 rPath, Inc. - All Rights Reserved
     *
     *   This file is part of the Linux kernel, and is made available under
     *   the terms of the GNU General Public License version 2.
     *
     * ----------------------------------------------------------------------- */

    /*
     * arch/i386/boot/pmjump.S
     *
     * The actual transition into protected mode
     */

    #include <asm/boot.h>
    #include <asm/processor-flags.h>
    #include <asm/segment.h>

            .text

            .globl  protected_mode_jump
            .type   protected_mode_jump, @function

            .code16

    /*
     * void protected_mode_jump(u32 entrypoint, u32 bootparams);
     */
    protected_mode_jump:
            movl    %edx, %esi              # Pointer to boot_params table

            xorl    %ebx, %ebx
            movw    %cs, %bx
            shll    $4, %ebx
            addl    %ebx, 2f

            movw    $__BOOT_DS, %cx
            movw    $__BOOT_TSS, %di

            movl    %cr0, %edx
            orb     $X86_CR0_PE, %dl        # Protected mode
            movl    %edx, %cr0
            jmp     1f                      # Short jump to serialize on 386/486
    1:

            # Transition to 32-bit mode
            .byte   0x66, 0xea              # ljmpl opcode
    2:      .long   in_pm32                 # offset
            .word   __BOOT_CS               # segment

            .size   protected_mode_jump, .-protected_mode_jump

            .code32
            .type   in_pm32, @function
    in_pm32:
            # Set up data segments for flat 32-bit mode
            movl    %ecx, %ds
            movl    %ecx, %es
            movl    %ecx, %fs
            movl    %ecx, %gs
            movl    %ecx, %ss
            # The 32-bit code sets up its own stack, but this way we do have
            # a valid stack if some debugging hack wants to use it.
            addl    %ebx, %esp

            # Set up TR to make Intel VT happy
            ltr     %di

            # Clear registers to allow for future extensions to the
            # 32-bit boot protocol
            xorl    %ecx, %ecx
            xorl    %edx, %edx
            xorl    %ebx, %ebx
            xorl    %ebp, %ebp
            xorl    %edi, %edi

            # Set up LDTR to make Intel VT happy
            lldt    %cx

            jmpl    *%eax                   # Jump to the 32-bit entrypoint

            .size   in_pm32, .-in_pm32

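This routine enables protected mode by setting the PE bit in the CR0 CPU register. At this point we're running with paging disabled; paging is an optional feature of the processor, even in protected mode, and there's no need for it yet. What's important is that we're no longer confined to the 640K barrier and can now address up to 4GB of RAM.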
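CR0 cannot be read or written from an ordinary user-space program, so the following C sketch only models the bit manipulation that the `orb $X86_CR0_PE, %dl` instruction performs. The bit positions (PE is bit 0, PG is bit 31) are the architectural ones; the function names are illustrative.

```c
#include <stdint.h>

#define X86_CR0_PE 0x00000001u  /* Protection Enable, bit 0 */
#define X86_CR0_PG 0x80000000u  /* Paging, bit 31 */

/* What protected_mode_jump does to the value it read from CR0. */
uint32_t cr0_enter_protected_mode(uint32_t cr0)
{
    return cr0 | X86_CR0_PE;
}

int cr0_is_protected(uint32_t cr0)
{
    return (cr0 & X86_CR0_PE) != 0;
}

int cr0_paging_enabled(uint32_t cr0)
{
    return (cr0 & X86_CR0_PG) != 0;
}
```

Setting PE leaves PG untouched, which is why the kernel runs in protected mode without paging until the later startup_32 in arch/x86/kernel/head_32.S sets the PG bit itself.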

The routine then calls the 32-bit kernel entry point, which is startup_32 for compressed kernels.

1/*

2 * linux/boot/head.S

3 *

4 * Copyright (C) 1991, 1992, 1993 Linus Torvalds

5 */

6

7/*

8 * head.S contains the 32-bit startup code.

9 *

10 * NOTE!!! Startup happens at absolute address 0x00001000, which is also where

11 * the page directory will exist. The startup code will be overwritten by

12 * the page directory. [According to comments etc elsewhere on a compressed

13 * kernel it will end up at 0x1000 + 1Mb I hope so as I assume this. - AC]

14 *

15 * Page 0 is deliberately kept safe, since System Management Mode code in

16 * laptops may need to access the BIOS data stored there. This is also

17 * useful for future device drivers that either access the BIOS via VM86

18 * mode.

19 */

20

21/*

22 * High loaded stuff by Hans Lermen & Werner Almesberger, Feb. 1996

23 */

24.text

25

26#include <linux/linkage.h>

27#include <asm/segment.h>

28#include <asm/page.h>

29#include <asm/boot.h>

30#include <asm/asm-offsets.h>

31

32.section ".text.head","ax",@progbits

33 .globl startup_32

34

35startup_32:

36 cld

37 /* test KEEP_SEGMENTS flag to see if the bootloader is asking

38 * us to not reload segments */

39 testb $(1<<6), BP_loadflags(%esi)

40 jnz 1f

41

42 cli

43 movl $(__BOOT_DS),%eax

44 movl %eax,%ds

45 movl %eax,%es

46 movl %eax,%fs

47 movl %eax,%gs

48 movl %eax,%ss

491:

50

51/* Calculate the delta between where we were compiled to run

52 * at and where we were actually loaded at. This can only be done

53 * with a short local call on x86. Nothing else will tell us what

54 * address we are running at. The reserved chunk of the real-mode

55 * data at 0x1e4 (defined as a scratch field) are used as the stack

56 * for this calculation. Only 4 bytes are needed.

57 */

58 leal (0x1e4+4)(%esi), %esp

59 call 1f

601: popl %ebp

61 subl $1b, %ebp

62

63/* %ebp contains the address we are loaded at by the boot loader and %ebx

64 * contains the address where we should move the kernel image temporarily

65 * for safe in-place decompression.

66 */

67

68#ifdef CONFIG_RELOCATABLE

69 movl %ebp, %ebx

70 addl $(CONFIG_PHYSICAL_ALIGN - 1), %ebx

71 andl $(~(CONFIG_PHYSICAL_ALIGN - 1)), %ebx

72#else

73 movl $LOAD_PHYSICAL_ADDR, %ebx

74#endif

75

76 /* Replace the compressed data size with the uncompressed size */

77 subl input_len(%ebp), %ebx

78 movl output_len(%ebp), %eax

79 addl %eax, %ebx

80 /* Add 8 bytes for every 32K input block */

81 shrl $12, %eax

82 addl %eax, %ebx

83 /* Add 32K + 18 bytes of extra slack */

84 addl $(32768 + 18), %ebx

85 /* Align on a 4K boundary */

86 addl $4095, %ebx

87 andl $~4095, %ebx

88

89/* Copy the compressed kernel to the end of our buffer

90 * where decompression in place becomes safe.

91 */

92 pushl %esi

93 leal _end(%ebp), %esi

94 leal _end(%ebx), %edi

95 movl $(_end - startup_32), %ecx

96 std

97 rep

98 movsb

99 cld

100 popl %esi

101

102/* Compute the kernel start address.

103 */

104#ifdef CONFIG_RELOCATABLE

105 addl $(CONFIG_PHYSICAL_ALIGN - 1), %ebp

106 andl $(~(CONFIG_PHYSICAL_ALIGN - 1)), %ebp

107#else

108 movl $LOAD_PHYSICAL_ADDR, %ebp

109#endif

110

111/*

112 * Jump to the relocated address.

113 */

114 leal relocated(%ebx), %eax

115 jmp *%eax

116.section ".text"

117relocated:

118

119/*

120 * Clear BSS

121 */

122 xorl %eax,%eax

123 leal _edata(%ebx),%edi

124 leal _end(%ebx), %ecx

125 subl %edi,%ecx

126 cld

127 rep

128 stosb

129

130/*

131 * Setup the stack for the decompressor

132 */

133 leal stack_end(%ebx), %esp

134

135/*

136 * Do the decompression, and jump to the new kernel..

137 */

138 movl output_len(%ebx), %eax

139 pushl %eax

140 pushl %ebp # output address

141 movl input_len(%ebx), %eax

142 pushl %eax # input_len

143 leal input_data(%ebx), %eax

144 pushl %eax # input_data

145 leal _end(%ebx), %eax

146 pushl %eax # end of the image as third argument

147 pushl %esi # real mode pointer as second arg

148 call decompress_kernel

149 addl $20, %esp

150 popl %ecx

151

152#if CONFIG_RELOCATABLE

153/* Find the address of the relocations.

154 */

155 movl %ebp, %edi

156 addl %ecx, %edi

157

158/* Calculate the delta between where vmlinux was compiled to run

159 * and where it was actually loaded.

160 */

161 movl %ebp, %ebx

162 subl $LOAD_PHYSICAL_ADDR, %ebx

163 jz 2f /* Nothing to be done if loaded at compiled addr. */

164/*

165 * Process relocations.

166 */

167

1681: subl $4, %edi

169 movl 0(%edi), %ecx

170 testl %ecx, %ecx

171 jz 2f

172 addl %ebx, -__PAGE_OFFSET(%ebx, %ecx)

173 jmp 1b

1742:

175#endif

176

177/*

178 * Jump to the decompressed kernel.

179 */

180 xorl %ebx,%ebx

181 jmp *%ebp

182

183.bss

184.balign 4

185stack:

186 .fill 4096, 1, 0

187stack_end:

188

This routine does some basic register initialization and calls decompress_kernel(), a C function that does the actual decompression.
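Two bits of arithmetic in the startup_32 listing above are easier to follow in C: the `addl $(ALIGN - 1)` / `andl $(~(ALIGN - 1))` pair, which rounds an address up to a power-of-two boundary, and the computation of where the compressed image is copied so that in-place decompression is safe. The sketch below mirrors the CONFIG_RELOCATABLE branch; the function and parameter names are mine.

```c
#include <stdint.h>

/* Round addr up to a multiple of align (align must be a power of two).
 * This is the addl/andl pair from the listing. */
uint32_t align_up(uint32_t addr, uint32_t align)
{
    return (addr + align - 1) & ~(align - 1);
}

/* Mirrors the %ebx computation in startup_32: start from the aligned
 * load address, replace the compressed size with the uncompressed
 * size, add slack, and align to a 4K boundary. Unsigned wraparound
 * in the intermediate steps is intentional, as in the assembly. */
uint32_t decompress_buffer_addr(uint32_t load_addr, uint32_t physical_align,
                                uint32_t input_len, uint32_t output_len)
{
    uint32_t ebx = align_up(load_addr, physical_align);

    ebx -= input_len;            /* subl input_len(%ebp), %ebx */
    ebx += output_len;           /* addl %eax, %ebx            */
    ebx += output_len >> 12;     /* 8 bytes per 32K block      */
    ebx += 32768 + 18;           /* extra slack                */
    return align_up(ebx, 4096);  /* align on a 4K boundary     */
}
```

Shifting the output length right by 12 is the same as adding 8 bytes for every 32K, which is how the comment and the `shrl $12` instruction in the listing agree with each other.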

    asmlinkage void decompress_kernel(void *rmode, memptr heap,
                                      uch *input_data, unsigned long input_len,
                                      uch *output)
    {
            real_mode = rmode;

            if (RM_SCREEN_INFO.orig_video_mode == 7) {
                    vidmem = (char *) 0xb0000;
                    vidport = 0x3b4;
            } else {
                    vidmem = (char *) 0xb8000;
                    vidport = 0x3d4;
            }

            lines = RM_SCREEN_INFO.orig_video_lines;
            cols = RM_SCREEN_INFO.orig_video_cols;

            window = output;                /* Output buffer (Normally at 1M) */
            free_mem_ptr     = heap;        /* Heap */
            free_mem_end_ptr = heap + HEAP_SIZE;
            inbuf  = input_data;            /* Input buffer */
            insize = input_len;
            inptr  = 0;

    #ifdef CONFIG_X86_64
            if ((ulg)output & (__KERNEL_ALIGN - 1))
                    error("Destination address not 2M aligned");
            if ((ulg)output >= 0xffffffffffUL)
                    error("Destination address too large");
    #else
            if ((u32)output & (CONFIG_PHYSICAL_ALIGN -1))
                    error("Destination address not CONFIG_PHYSICAL_ALIGN aligned");
            if (heap > ((-__PAGE_OFFSET-(512<<20)-1) & 0x7fffffff))
                    error("Destination address too large");
    #ifndef CONFIG_RELOCATABLE
            if ((u32)output != LOAD_PHYSICAL_ADDR)
                    error("Wrong destination address");
    #endif
    #endif

            makecrc();
            putstr("\nDecompressing Linux... ");
            gunzip();
            putstr("done.\nBooting the kernel.\n");
            return;
    }

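decompress_kernel() prints the familiar "Decompressing Linux..." message. Decompression happens in place, and once it's finished the uncompressed kernel image has overwritten the compressed one pictured in the first diagram. Hence the uncompressed contents also start at 1MB. decompress_kernel() then prints "done." and the comforting "Booting the kernel."

By "Booting" it means a jump to the final entry point in this whole story, given to Linus by God himself atop Mount Halti, which is the protected-mode kernel entry point at the start of the second megabyte of RAM (0x100000). That sacred location contains a routine called, uh, startup_32. But this one is in a different directory, you see.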

1/*

2 * linux/arch/i386/kernel/head.S -- the 32-bit startup code.

3 *

4 * Copyright (C) 1991, 1992 Linus Torvalds

5 *

6 * Enhanced CPU detection and feature setting code by Mike Jagdis

7 * and Martin Mares, November 1997.

8 */

9

10.text

11#include <linux/threads.h>

12#include <linux/init.h>

13#include <linux/linkage.h>

14#include <asm/segment.h>

15#include <asm/page.h>

16#include <asm/pgtable.h>

17#include <asm/desc.h>

18#include <asm/cache.h>

19#include <asm/thread_info.h>

20#include <asm/asm-offsets.h>

21#include <asm/setup.h>

22#include <asm/processor-flags.h>

23

24/* Physical address */

25#define pa(X) ((X) - __PAGE_OFFSET)

26

27/*

28 * References to members of the new_cpu_data structure.

29 */

30

31#define X86 new_cpu_data+CPUINFO_x86

32#define X86_VENDOR new_cpu_data+CPUINFO_x86_vendor

33#define X86_MODEL new_cpu_data+CPUINFO_x86_model

34#define X86_MASK new_cpu_data+CPUINFO_x86_mask

35#define X86_HARD_MATH new_cpu_data+CPUINFO_hard_math

36#define X86_CPUID new_cpu_data+CPUINFO_cpuid_level

37#define X86_CAPABILITY new_cpu_data+CPUINFO_x86_capability

38#define X86_VENDOR_ID new_cpu_data+CPUINFO_x86_vendor_id

39

40/*

41 * This is how much memory *in addition to the memory covered up to

42 * and including _end* we need mapped initially.

43 * We need:

44 * - one bit for each possible page, but only in low memory, which means

45 * 2^32/4096/8 = 128K worst case (4G/4G split.)

46 * - enough space to map all low memory, which means

47 * (2^32/4096) / 1024 pages (worst case, non PAE)

48 * (2^32/4096) / 512 + 4 pages (worst case for PAE)

49 * - a few pages for allocator use before the kernel pagetable has

50 * been set up

51 *

52 * Modulo rounding, each megabyte assigned here requires a kilobyte of

53 * memory, which is currently unreclaimed.

54 *

55 * This should be a multiple of a page.

56 */

57LOW_PAGES = 1<<(32-PAGE_SHIFT_asm)

58

59/*

60 * To preserve the DMA pool in PAGEALLOC kernels, we'll allocate

61 * pagetables from above the 16MB DMA limit, so we'll have to set

62 * up pagetables 16MB more (worst-case):

63 */

64#ifdef CONFIG_DEBUG_PAGEALLOC

65LOW_PAGES = LOW_PAGES + 0x1000000

66#endif

67

68#if PTRS_PER_PMD > 1

69PAGE_TABLE_SIZE = (LOW_PAGES / PTRS_PER_PMD) + PTRS_PER_PGD

70#else

71PAGE_TABLE_SIZE = (LOW_PAGES / PTRS_PER_PGD)

72#endif

73BOOTBITMAP_SIZE = LOW_PAGES / 8

74ALLOCATOR_SLOP = 4

75

76INIT_MAP_BEYOND_END = BOOTBITMAP_SIZE + (PAGE_TABLE_SIZE + ALLOCATOR_SLOP)*PAGE_SIZE_asm

77

78/*

79 * 32-bit kernel entrypoint; only used by the boot CPU. On entry,

80 * %esi points to the real-mode code as a 32-bit pointer.

81 * CS and DS must be 4 GB flat segments, but we don't depend on

82 * any particular GDT layout, because we load our own as soon as we

83 * can.

84 */

85.section .text.head,"ax",@progbits

86ENTRY(startup_32)

87 /* test KEEP_SEGMENTS flag to see if the bootloader is asking

88 us to not reload segments */

89 testb $(1<<6), BP_loadflags(%esi)

90 jnz 2f

91

92/*

93 * Set segments to known values.

94 */

95 lgdt pa(boot_gdt_descr)

96 movl $(__BOOT_DS),%eax

97 movl %eax,%ds

98 movl %eax,%es

99 movl %eax,%fs

100 movl %eax,%gs

1012:

102

103/*

104 * Clear BSS first so that there are no surprises...

105 */

106 cld

107 xorl %eax,%eax

108 movl $pa(__bss_start),%edi

109 movl $pa(__bss_stop),%ecx

110 subl %edi,%ecx

111 shrl $2,%ecx

112 rep ; stosl

113/*

114 * Copy bootup parameters out of the way.

115 * Note: %esi still has the pointer to the real-mode data.

116 * With the kexec as boot loader, parameter segment might be loaded beyond

117 * kernel image and might not even be addressable by early boot page tables.

118 * (kexec on panic case). Hence copy out the parameters before initializing

119 * page tables.

120 */

121 movl $pa(boot_params),%edi

122 movl $(PARAM_SIZE/4),%ecx

123 cld

124 rep

125 movsl

126 movl pa(boot_params) + NEW_CL_POINTER,%esi

127 andl %esi,%esi

128 jz 1f # No comand line

129 movl $pa(boot_command_line),%edi

130 movl $(COMMAND_LINE_SIZE/4),%ecx

131 rep

132 movsl

1331:

134

135#ifdef CONFIG_PARAVIRT

136 /* This is can only trip for a broken bootloader... */

137 cmpw $0x207, pa(boot_params + BP_version)

138 jb default_entry

139

140 /* Paravirt-compatible boot parameters. Look to see what architecture

141 we're booting under. */

142 movl pa(boot_params + BP_hardware_subarch), %eax

143 cmpl $num_subarch_entries, %eax

144 jae bad_subarch

145

146 movl pa(subarch_entries)(,%eax,4), %eax

147 subl $__PAGE_OFFSET, %eax

148 jmp *%eax

149

150bad_subarch:

151WEAK(lguest_entry)

152WEAK(xen_entry)

153 /* Unknown implementation; there's really

154 nothing we can do at this point. */

155 ud2a

156

157 __INITDATA

158

159subarch_entries:

160 .long default_entry /* normal x86/PC */

161 .long lguest_entry /* lguest hypervisor */

162 .long xen_entry /* Xen hypervisor */

163num_subarch_entries = (. - subarch_entries) / 4

164.previous

165#endif /* CONFIG_PARAVIRT */

166

167/*

168 * Initialize page tables. This creates a PDE and a set of page

169 * tables, which are located immediately beyond _end. The variable

170 * init_pg_tables_end is set up to point to the first "safe" location.

171 * Mappings are created both at virtual address 0 (identity mapping)

172 * and PAGE_OFFSET for up to _end+sizeof(page tables)+INIT_MAP_BEYOND_END.

173 *

174 * Note that the stack is not yet set up!

175 */

176#define PTE_ATTR 0x007 /* PRESENT+RW+USER */

177#define PDE_ATTR 0x067 /* PRESENT+RW+USER+DIRTY+ACCESSED */

178#define PGD_ATTR 0x001 /* PRESENT (no other attributes) */

179

180default_entry:

181#ifdef CONFIG_X86_PAE

182

183 /*

184 * In PAE mode swapper_pg_dir is statically defined to contain enough

185 * entries to cover the VMSPLIT option (that is the top 1, 2 or 3

186 * entries). The identity mapping is handled by pointing two PGD

187 * entries to the first kernel PMD.

188 *

189 * Note the upper half of each PMD or PTE are always zero at

190 * this stage.

191 */

192

193#define KPMDS ((0x100000000-__PAGE_OFFSET) >> 30) /* Number of kernel PMDs */

194

195 xorl %ebx,%ebx /* %ebx is kept at zero */

196

197 movl $pa(pg0), %edi

198 movl $pa(swapper_pg_pmd), %edx

199 movl $PTE_ATTR, %eax

20010:

201 leal PDE_ATTR(%edi),%ecx /* Create PMD entry */

202 movl %ecx,(%edx) /* Store PMD entry */

203 /* Upper half already zero */

204 addl $8,%edx

205 movl $512,%ecx

20611:

207 stosl

208 xchgl %eax,%ebx

209 stosl

210 xchgl %eax,%ebx

211 addl $0x1000,%eax

212 loop 11b

213

214 /*

215 * End condition: we must map up to and including INIT_MAP_BEYOND_END

216 * bytes beyond the end of our own page tables.

217 */

218 leal (INIT_MAP_BEYOND_END+PTE_ATTR)(%edi),%ebp

219 cmpl %ebp,%eax

220 jb 10b

2211:

222 movl %edi,pa(init_pg_tables_end)

223

224 /* Do early initialization of the fixmap area */

225 movl $pa(swapper_pg_fixmap)+PDE_ATTR,%eax

226 movl %eax,pa(swapper_pg_pmd+0x1000*KPMDS-8)

227#else /* Not PAE */

228

229page_pde_offset = (__PAGE_OFFSET >> 20);

230

231 movl $pa(pg0), %edi

232 movl $pa(swapper_pg_dir), %edx

233 movl $PTE_ATTR, %eax

23410:

235 leal PDE_ATTR(%edi),%ecx /* Create PDE entry */

236 movl %ecx,(%edx) /* Store identity PDE entry */

237 movl %ecx,page_pde_offset(%edx) /* Store kernel PDE entry */

238 addl $4,%edx

239 movl $1024, %ecx

24011:

241 stosl

242 addl $0x1000,%eax

243 loop 11b

244 /*

245 * End condition: we must map up to and including INIT_MAP_BEYOND_END

246 * bytes beyond the end of our own page tables; the +0x007 is

247 * the attribute bits

248 */

249 leal (INIT_MAP_BEYOND_END+PTE_ATTR)(%edi),%ebp

250 cmpl %ebp,%eax

251 jb 10b

252 movl %edi,pa(init_pg_tables_end)

253

254 /* Do early initialization of the fixmap area */

255 movl $pa(swapper_pg_fixmap)+PDE_ATTR,%eax

256 movl %eax,pa(swapper_pg_dir+0xffc)

257#endif

258 jmp 3f

259/*

260 * Non-boot CPU entry point; entered from trampoline.S

261 * We can't lgdt here, because lgdt itself uses a data segment, but

262 * we know the trampoline has already loaded the boot_gdt for us.

263 *

264 * If cpu hotplug is not supported then this code can go in init section

265 * which will be freed later

266 */

267

268#ifndef CONFIG_HOTPLUG_CPU

269.section .init.text,"ax",@progbits

270#endif

271

272#ifdef CONFIG_SMP

273ENTRY(startup_32_smp)

274 cld

275 movl $(__BOOT_DS),%eax

276 movl %eax,%ds

277 movl %eax,%es

278 movl %eax,%fs

279 movl %eax,%gs

280#endif /* CONFIG_SMP */

2813:

282

283/*

284 * New page tables may be in 4Mbyte page mode and may

285 * be using the global pages.

286 *

287 * NOTE! If we are on a 486 we may have no cr4 at all!

288 * So we do not try to touch it unless we really have

289 * some bits in it to set. This won't work if the BSP

290 * implements cr4 but this AP does not -- very unlikely

291 * but be warned! The same applies to the pse feature

292 * if not equally supported. --macro

293 *

294 * NOTE! We have to correct for the fact that we're

295 * not yet offset PAGE_OFFSET..

296 */

297#define cr4_bits pa(mmu_cr4_features)

298 movl cr4_bits,%edx

299 andl %edx,%edx

300 jz 6f

301 movl %cr4,%eax # Turn on paging options (PSE,PAE,..)

302 orl %edx,%eax

303 movl %eax,%cr4

304

305 btl $5, %eax # check if PAE is enabled

306 jnc 6f

307

308 /* Check if extended functions are implemented */

309 movl $0x80000000, %eax

310 cpuid

311 cmpl $0x80000000, %eax

312 jbe 6f

313 mov $0x80000001, %eax

314 cpuid

315 /* Execute Disable bit supported? */

316 btl $20, %edx

317 jnc 6f

318

319 /* Setup EFER (Extended Feature Enable Register) */

320 movl $0xc0000080, %ecx

321 rdmsr

322

323 btsl $11, %eax

324 /* Make changes effective */

325 wrmsr

326

3276:

328

329/*

330 * Enable paging

331 */

332 movl $pa(swapper_pg_dir),%eax

333 movl %eax,%cr3 /* set the page table pointer.. */

334 movl %cr0,%eax

335 orl $X86_CR0_PG,%eax

336 movl %eax,%cr0 /* ..and set paging (PG) bit */

337 ljmp $__BOOT_CS,$1f /* Clear prefetch and normalize %eip */

3381:

339 /* Set up the stack pointer */

340 lss stack_start,%esp

341

342/*

343 * Initialize eflags. Some BIOS's leave bits like NT set. This would

344 * confuse the debugger if this code is traced.

345 * XXX - best to initialize before switching to protected mode.

346 */

347 pushl $0

348 popfl

349

350#ifdef CONFIG_SMP

351 cmpb $0, ready

352 jz 1f /* Initial CPU cleans BSS */

353 jmp checkCPUtype

3541:

355#endif /* CONFIG_SMP */

356

357/*

358 * start system 32-bit setup. We need to re-do some of the things done

359 * in 16-bit mode for the "real" operations.

360 */

361 call setup_idt

362

363checkCPUtype:

364

365 movl $-1,X86_CPUID # -1 for no CPUID initially

366

367/* check if it is 486 or 386. */

368/*

369 * XXX - this does a lot of unnecessary setup. Alignment checks don't

370 * apply at our cpl of 0 and the stack ought to be aligned already, and

371 * we don't need to preserve eflags.

372 */

373

374 movb $3,X86 # at least 386

375 pushfl # push EFLAGS

376 popl %eax # get EFLAGS

377 movl %eax,%ecx # save original EFLAGS

378 xorl $0x240000,%eax # flip AC and ID bits in EFLAGS

379 pushl %eax # copy to EFLAGS

380 popfl # set EFLAGS

381 pushfl # get new EFLAGS

382 popl %eax # put it in eax

383 xorl %ecx,%eax # change in flags

384 pushl %ecx # restore original EFLAGS

385 popfl

386 testl $0x40000,%eax # check if AC bit changed

387 je is386

388

389 movb $4,X86 # at least 486

390 testl $0x200000,%eax # check if ID bit changed

391 je is486

392

393 /* get vendor info */

394 xorl %eax,%eax # call CPUID with 0 -> return vendor ID

395 cpuid

396 movl %eax,X86_CPUID # save CPUID level

397 movl %ebx,X86_VENDOR_ID # lo 4 chars

398 movl %edx,X86_VENDOR_ID+4 # next 4 chars

399 movl %ecx,X86_VENDOR_ID+8 # last 4 chars

400

401 orl %eax,%eax # do we have processor info as well?

402 je is486

403

404 movl $1,%eax # Use the CPUID instruction to get CPU type

405 cpuid

406 movb %al,%cl # save reg for future use

407 andb $0x0f,%ah # mask processor family

408 movb %ah,X86

409 andb $0xf0,%al # mask model

410 shrb $4,%al

411 movb %al,X86_MODEL

412 andb $0x0f,%cl # mask mask revision

413 movb %cl,X86_MASK

414 movl %edx,X86_CAPABILITY

415

416is486: movl $0x50022,%ecx # set AM, WP, NE and MP

417 jmp 2f

418

419is386: movl $2,%ecx # set MP

4202: movl %cr0,%eax

421 andl $0x80000011,%eax # Save PG,PE,ET

422 orl %ecx,%eax

423 movl %eax,%cr0

424

425 call check_x87

426 lgdt early_gdt_descr

427 lidt idt_descr

428 ljmp $(__KERNEL_CS),$1f

4291: movl $(__KERNEL_DS),%eax # reload all the segment registers

430 movl %eax,%ss # after changing gdt.

431 movl %eax,%fs # gets reset once there's real percpu

432

433 movl $(__USER_DS),%eax # DS/ES contains default USER segment

434 movl %eax,%ds

435 movl %eax,%es

436

437 xorl %eax,%eax # Clear GS and LDT

438 movl %eax,%gs

439 lldt %ax

440

441 cld # gcc2 wants the direction flag cleared at all times

442 pushl $0 # fake return address for unwinder

443#ifdef CONFIG_SMP

444 movb ready, %cl

445 movb $1, ready

446 cmpb $0,%cl # the first CPU calls start_kernel

447 je 1f

448 movl $(__KERNEL_PERCPU), %eax

449 movl %eax,%fs # set this cpu's percpu

450 jmp initialize_secondary # all other CPUs call initialize_secondary

4511:

452#endif /* CONFIG_SMP */

453 jmp start_kernel

454

455/*

456 * We depend on ET to be correct. This checks for 287/387.

457 */

458check_x87:

459 movb $0,X86_HARD_MATH

460 clts

461 fninit

462 fstsw %ax

463 cmpb $0,%al

464 je 1f

465 movl %cr0,%eax /* no coprocessor: have to set bits */

466 xorl $4,%eax /* set EM */

467 movl %eax,%cr0

468 ret

469 ALIGN

4701: movb $1,X86_HARD_MATH

471 .byte 0xDB,0xE4 /* fsetpm for 287, ignored by 387 */

472 ret

473

    /*
     *  setup_idt
     *
     *  sets up a idt with 256 entries pointing to
     *  ignore_int, interrupt gates. It doesn't actually load
     *  idt - that can be done only after paging has been enabled
     *  and the kernel moved to PAGE_OFFSET. Interrupts
     *  are enabled elsewhere, when we can be relatively
     *  sure everything is ok.
     *
     *  Warning: %esi is live across this function.
     */
    setup_idt:
        lea ignore_int,%edx
        movl $(__KERNEL_CS << 16),%eax
        movw %dx,%ax            /* selector = 0x0010 = cs */
        movw $0x8E00,%dx        /* interrupt gate - dpl=0, present */

        lea idt_table,%edi
        mov $256,%ecx
    rp_sidt:
        movl %eax,(%edi)
        movl %edx,4(%edi)
        addl $8,%edi
        dec %ecx
        jne rp_sidt

    .macro  set_early_handler handler,trapno
        lea \handler,%edx
        movl $(__KERNEL_CS << 16),%eax
        movw %dx,%ax
        movw $0x8E00,%dx        /* interrupt gate - dpl=0, present */
        lea idt_table,%edi
        movl %eax,8*\trapno(%edi)
        movl %edx,8*\trapno+4(%edi)
    .endm

        set_early_handler handler=early_divide_err,trapno=0
        set_early_handler handler=early_illegal_opcode,trapno=6
        set_early_handler handler=early_protection_fault,trapno=13
        set_early_handler handler=early_page_fault,trapno=14

        ret

    early_divide_err:
        xor %edx,%edx
        pushl $0                /* fake errcode */
        jmp early_fault

    early_illegal_opcode:
        movl $6,%edx
        pushl $0                /* fake errcode */
        jmp early_fault

    early_protection_fault:
        movl $13,%edx
        jmp early_fault

    early_page_fault:
        movl $14,%edx
        jmp early_fault

    early_fault:
        cld
    #ifdef CONFIG_PRINTK
        pusha
        movl $(__KERNEL_DS),%eax
        movl %eax,%ds
        movl %eax,%es
        cmpl $2,early_recursion_flag
        je hlt_loop
        incl early_recursion_flag
        movl %cr2,%eax
        pushl %eax
        pushl %edx              /* trapno */
        pushl $fault_msg
    #ifdef CONFIG_EARLY_PRINTK
        call early_printk
    #else
        call printk
    #endif
    #endif
        call dump_stack
    hlt_loop:
        hlt
        jmp hlt_loop

    /* This is the default interrupt "handler" :-) */
        ALIGN
    ignore_int:
        cld
    #ifdef CONFIG_PRINTK
        pushl %eax
        pushl %ecx
        pushl %edx
        pushl %es
        pushl %ds
        movl $(__KERNEL_DS),%eax
        movl %eax,%ds
        movl %eax,%es
        cmpl $2,early_recursion_flag
        je hlt_loop
        incl early_recursion_flag
        pushl 16(%esp)
        pushl 24(%esp)
        pushl 32(%esp)
        pushl 40(%esp)
        pushl $int_msg
    #ifdef CONFIG_EARLY_PRINTK
        call early_printk
    #else
        call printk
    #endif
        addl $(5*4),%esp
        popl %ds
        popl %es
        popl %edx
        popl %ecx
        popl %eax
    #endif
        iret

    .section .text
    /*
     * Real beginning of normal "text" segment
     */
    ENTRY(stext)
    ENTRY(_stext)

    /*
     * BSS section
     */
    .section ".bss.page_aligned","wa"
        .align PAGE_SIZE_asm
    #ifdef CONFIG_X86_PAE
    swapper_pg_pmd:
        .fill 1024*KPMDS,4,0
    #else
    ENTRY(swapper_pg_dir)
        .fill 1024,4,0
    #endif
    swapper_pg_fixmap:
        .fill 1024,4,0
    ENTRY(empty_zero_page)
        .fill 4096,1,0
    /*
     * This starts the data section.
     */
    #ifdef CONFIG_X86_PAE
    .section ".data.page_aligned","wa"
        /* Page-aligned for the benefit of paravirt? */
        .align PAGE_SIZE_asm
    ENTRY(swapper_pg_dir)
        .long pa(swapper_pg_pmd+PGD_ATTR),0            /* low identity map */
    # if KPMDS == 3
        .long pa(swapper_pg_pmd+PGD_ATTR),0
        .long pa(swapper_pg_pmd+PGD_ATTR+0x1000),0
        .long pa(swapper_pg_pmd+PGD_ATTR+0x2000),0
    # elif KPMDS == 2
        .long 0,0
        .long pa(swapper_pg_pmd+PGD_ATTR),0
        .long pa(swapper_pg_pmd+PGD_ATTR+0x1000),0
    # elif KPMDS == 1
        .long 0,0
        .long 0,0
        .long pa(swapper_pg_pmd+PGD_ATTR),0
    # else
    #  error "Kernel PMDs should be 1, 2 or 3"
    # endif
        .align PAGE_SIZE_asm    /* needs to be page-sized too */
    #endif

    .data
    ENTRY(stack_start)
        .long init_thread_union+THREAD_SIZE
        .long __BOOT_DS

    ready:  .byte 0

    early_recursion_flag:
        .long 0

    int_msg:
        .asciz "Unknown interrupt or fault at EIP %p %p %p\n"

    fault_msg:
        .asciz                                                      \
    /* fault info: */   "BUG: Int %d: CR2 %p\n"                     \
    /* pusha regs: */   " EDI %p ESI %p EBP %p ESP %p\n"            \
                        " EBX %p EDX %p ECX %p EAX %p\n"            \
    /* fault frame: */  " err %p EIP %p CS %p flg %p\n"             \
                                                                    \
                        "Stack: %p %p %p %p %p %p %p %p\n"          \
                        " %p %p %p %p %p %p %p %p\n"                \
                        " %p %p %p %p %p %p %p %p\n"

    #include "../../x86/xen/xen-head.S"

    /*
     * The IDT and GDT 'descriptors' are a strange 48-bit object
     * only used by the lidt and lgdt instructions. They are not
     * like usual segment descriptors - they consist of a 16-bit
     * segment size, and 32-bit linear address value:
     */

    .globl boot_gdt_descr
    .globl idt_descr

        ALIGN
    # early boot GDT descriptor (must use 1:1 address mapping)
        .word 0                         # 32 bit align gdt_desc.address
    boot_gdt_descr:
        .word __BOOT_DS+7
        .long boot_gdt - __PAGE_OFFSET

        .word 0                         # 32-bit align idt_desc.address
    idt_descr:
        .word IDT_ENTRIES*8-1           # idt contains 256 entries
        .long idt_table

    # boot GDT descriptor (later on used by CPU#0):
        .word 0                         # 32 bit align gdt_desc.address
    ENTRY(early_gdt_descr)
        .word GDT_ENTRIES*8-1
        .long per_cpu__gdt_page         /* Overwritten for secondary CPUs */

    /*
     * The boot_gdt must mirror the equivalent in setup.S and is
     * used only for booting.
     */
        .align L1_CACHE_BYTES
    ENTRY(boot_gdt)
        .fill GDT_ENTRY_BOOT_CS,8,0
        .quad 0x00cf9a000000ffff        /* kernel 4GB code at 0x00000000 */
        .quad 0x00cf92000000ffff        /* kernel 4GB data at 0x00000000 */
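The two `.quad` values that close the listing are ordinary 8-byte x86 segment descriptors, and the comments next to them ("kernel 4GB code/data at 0x00000000") can be checked by pulling the fields apart. The sketch below does that in plain C. The struct and function names (`seg_desc`, `decode_desc`, `desc_span_bytes`) are made up for illustration and are not kernel identifiers; the bit layout is the standard legacy descriptor format.

```c
#include <stdint.h>

/* Decoded view of a legacy 8-byte segment descriptor (illustrative names). */
struct seg_desc {
    uint32_t base;   /* 32-bit linear base address */
    uint32_t limit;  /* 20-bit limit, counted in bytes or in 4 KB pages */
    uint8_t  access; /* present bit, DPL, segment type */
    uint8_t  flags;  /* 4 bits: G (0x8), D/B (0x4), L (0x2), AVL (0x1) */
};

/* Split the raw 64-bit value into its scattered fields. */
static struct seg_desc decode_desc(uint64_t raw)
{
    struct seg_desc d;

    /* limit: bits 0-15 and bits 48-51 */
    d.limit  = (uint32_t)(raw & 0xffff) | (uint32_t)((raw >> 32) & 0xf0000);
    /* base: bits 16-39 and bits 56-63 */
    d.base   = (uint32_t)((raw >> 16) & 0xffffff) |
               (uint32_t)((raw >> 32) & 0xff000000);
    d.access = (uint8_t)(raw >> 40);          /* bits 40-47 */
    d.flags  = (uint8_t)((raw >> 52) & 0xf);  /* bits 52-55 */
    return d;
}

/* Size of the segment in bytes, honouring the granularity (G) flag. */
static uint64_t desc_span_bytes(struct seg_desc d)
{
    uint64_t units = (uint64_t)d.limit + 1;

    return (d.flags & 0x8) ? units * 4096 : units;
}
```

Feeding `0x00cf9a000000ffff` through `decode_desc()` gives a base of 0, a limit of 0xfffff and the granularity flag set, so `desc_span_bytes()` comes out at 4 GB, which agrees with the comment in the listing. The data descriptor differs only in its access byte (0x92 instead of 0x9a).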

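This second incarnation of startup_32 is also an assembly routine, but it contains 32-bit mode initializations. It clears the bss segment for the protected-mode kernel (which is the true kernel that will now run until the machine reboots or shuts down), sets up the final global descriptor table for memory, builds page tables so that paging can be turned on, enables paging, initializes a stack, creates the final interrupt descriptor table, and finally jumps to the architecture-independent kernel start-up, start_kernel().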
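To make the interrupt descriptor table step a little more concrete, here is a minimal C sketch of the arithmetic that setup_idt performs in the listing: it packs a code segment selector, a handler address and the constant 0x8E00 into the two 32-bit halves of an interrupt gate, then stamps that gate into every slot. The names `idt_gate`, `make_interrupt_gate` and `fill_idt` are mine, not the kernel's, and the selector is passed in as a parameter because the 0x0010 quoted in the listing's comment may not match the real `__KERNEL_CS` of this kernel version.

```c
#include <stdint.h>

#define GATE_INTERRUPT_DPL0 0x8E00u /* present, DPL 0, 32-bit interrupt gate */

/* One 8-byte IDT entry, seen as the two words setup_idt stores. */
struct idt_gate {
    uint32_t lo; /* selector in the high half, handler bits 0-15 in the low half */
    uint32_t hi; /* handler bits 16-31 in the high half, type/flags in the low half */
};

/* Mirrors: movl $(CS << 16),%eax; movw %dx,%ax; movw $0x8E00,%dx */
static struct idt_gate make_interrupt_gate(uint16_t selector, uint32_t handler)
{
    struct idt_gate g;

    g.lo = ((uint32_t)selector << 16) | (handler & 0xffffu);
    g.hi = (handler & 0xffff0000u) | GATE_INTERRUPT_DPL0;
    return g;
}

/* Mirrors the rp_sidt loop: point every entry at the same default handler. */
static void fill_idt(struct idt_gate *table, unsigned int entries,
                     uint16_t selector, uint32_t default_handler)
{
    struct idt_gate g = make_interrupt_gate(selector, default_handler);
    unsigned int i;

    for (i = 0; i < entries; i++)
        table[i] = g;
}
```

With a hypothetical handler address of 0xc0101234 and selector 0x0010, the gate comes out as lo = 0x00101234 and hi = 0xc0108e00. The split handler address is the reason the assembly juggles the 16-bit halves of %eax and %edx rather than storing the pointer directly.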

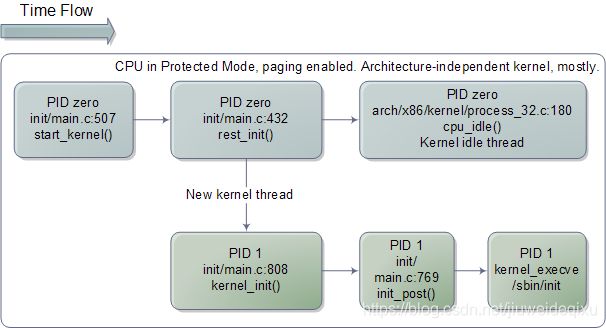

The diagram below shows the code flow for the last leg of the boot:

Architecture-independent Linux Kernel Initialization

    /*
     * linux/init/main.c
     *
     * Copyright (C) 1991, 1992 Linus Torvalds
     *
     * GK 2/5/95 - Changed to support mounting root fs via NFS
     * Added initrd & change_root: Werner Almesberger & Hans Lermen, Feb '96
     * Moan early if gcc is old, avoiding bogus kernels - Paul Gortmaker, May '96
     * Simplified starting of init: Michael A. Griffith
     */

    #include <linux/types.h>
    #include <linux/module.h>
    #include <linux/proc_fs.h>
    #include <linux/kernel.h>
    #include <linux/syscalls.h>
    #include <linux/string.h>
    #include <linux/ctype.h>
    #include <linux/delay.h>
    #include <linux/utsname.h>
    #include <linux/ioport.h>
    #include <linux/init.h>
    #include <linux/smp_lock.h>
    #include <linux/initrd.h>
    #include <linux/hdreg.h>
    #include <linux/bootmem.h>
    #include <linux/tty.h>
    #include <linux/gfp.h>
    #include <linux/percpu.h>
    #include <linux/kmod.h>
    #include <linux/kernel_stat.h>
    #include <linux/start_kernel.h>
    #include <linux/security.h>
    #include <linux/workqueue.h>
    #include <linux/profile.h>
    #include <linux/rcupdate.h>
    #include <linux/moduleparam.h>
    #include <linux/kallsyms.h>
    #include <linux/writeback.h>
    #include <linux/cpu.h>
    #include <linux/cpuset.h>
    #include <linux/cgroup.h>
    #include <linux/efi.h>
    #include <linux/tick.h>
    #include <linux/interrupt.h>
    #include <linux/taskstats_kern.h>
    #include <linux/delayacct.h>
    #include <linux/unistd.h>
    #include <linux/rmap.h>
    #include <linux/mempolicy.h>
    #include <linux/key.h>
    #include <linux/unwind.h>
    #include <linux/buffer_head.h>
    #include <linux/debug_locks.h>
    #include <linux/lockdep.h>
    #include <linux/pid_namespace.h>
    #include <linux/device.h>
    #include <linux/kthread.h>
    #include <linux/sched.h>
    #include <linux/signal.h>

    #include <asm/io.h>
    #include <asm/bugs.h>
    #include <asm/setup.h>
    #include <asm/sections.h>
    #include <asm/cacheflush.h>

    #ifdef CONFIG_X86_LOCAL_APIC
    #include <asm/smp.h>
    #endif

    /*
     * This is one of the first .c files built. Error out early if we have compiler
     * trouble.
     */

    #if __GNUC__ == 4 && __GNUC_MINOR__ == 1 && __GNUC_PATCHLEVEL__ == 0
    #warning gcc-4.1.0 is known to miscompile the kernel. A different compiler version is recommended.
    #endif

    static int kernel_init(void *);

    extern void init_IRQ(void);
    extern void fork_init(unsigned long);
    extern void mca_init(void);
    extern void sbus_init(void);
    extern void pidhash_init(void);
    extern void pidmap_init(void);
    extern void prio_tree_init(void);
    extern void radix_tree_init(void);
    extern void free_initmem(void);
    #ifdef CONFIG_ACPI
    extern void acpi_early_init(void);
    #else
    static inline void acpi_early_init(void) { }
    #endif
    #ifndef CONFIG_DEBUG_RODATA
    static inline void mark_rodata_ro(void) { }
    #endif

    #ifdef CONFIG_TC
    extern void tc_init(void);
    #endif

    enum system_states system_state;
    EXPORT_SYMBOL(system_state);

    /*
     * Boot command-line arguments
     */
    #define MAX_INIT_ARGS CONFIG_INIT_ENV_ARG_LIMIT
    #define MAX_INIT_ENVS CONFIG_INIT_ENV_ARG_LIMIT

    extern void time_init(void);
    /* Default late time init is NULL. archs can override this later. */
    void (*late_time_init)(void);
    extern void softirq_init(void);

    /* Untouched command line saved by arch-specific code. */
    char __initdata boot_command_line[COMMAND_LINE_SIZE];
    /* Untouched saved command line (eg. for /proc) */
    char *saved_command_line;
    /* Command line for parameter parsing */
    static char *static_command_line;

    static char *execute_command;
    static char *ramdisk_execute_command;

    #ifdef CONFIG_SMP
    /* Setup configured maximum number of CPUs to activate */
    unsigned int __initdata setup_max_cpus = NR_CPUS;

    /*
     * Setup routine for controlling SMP activation
     *
     * Command-line option of "nosmp" or "maxcpus=0" will disable SMP
     * activation entirely (the MPS table probe still happens, though).
     *
     * Command-line option of "maxcpus=<NUM>", where <NUM> is an integer
     * greater than 0, limits the maximum number of CPUs activated in
     * SMP mode to <NUM>.
     */
    #ifndef CONFIG_X86_IO_APIC
    static inline void disable_ioapic_setup(void) {};
    #endif

    static int __init nosmp(char *str)
    {
        setup_max_cpus = 0;
        disable_ioapic_setup();
        return 0;
    }

    early_param("nosmp", nosmp);

    static int __init maxcpus(char *str)
    {
        get_option(&str, &setup_max_cpus);
        if (setup_max_cpus == 0)
            disable_ioapic_setup();

        return 0;
    }

    early_param("maxcpus", maxcpus);
    #else
    #define setup_max_cpus NR_CPUS
    #endif

    /*
     * If set, this is an indication to the drivers that reset the underlying
     * device before going ahead with the initialization otherwise driver might
     * rely on the BIOS and skip the reset operation.
     *
     * This is useful if kernel is booting in an unreliable environment.
     * For ex. kdump situaiton where previous kernel has crashed, BIOS has been
     * skipped and devices will be in unknown state.
     */
    unsigned int reset_devices;
    EXPORT_SYMBOL(reset_devices);

    static int __init set_reset_devices(char *str)
    {
        reset_devices = 1;
        return 1;
    }

    __setup("reset_devices", set_reset_devices);

    static char * argv_init[MAX_INIT_ARGS+2] = { "init", NULL, };
    char * envp_init[MAX_INIT_ENVS+2] = { "HOME=/", "TERM=linux", NULL, };
    static const char *panic_later, *panic_param;

    extern struct obs_kernel_param __setup_start[], __setup_end[];

    static int __init obsolete_checksetup(char *line)
    {
        struct obs_kernel_param *p;
        int had_early_param = 0;

        p = __setup_start;
        do {
            int n = strlen(p->str);
            if (!strncmp(line, p->str, n)) {
                if (p->early) {
                    /* Already done in parse_early_param?
                     * (Needs exact match on param part).
                     * Keep iterating, as we can have early
                     * params and __setups of same names 8( */
                    if (line[n] == '\0' || line[n] == '=')
                        had_early_param = 1;
                } else if (!p->setup_func) {
                    printk(KERN_WARNING "Parameter %s is obsolete,"
                           " ignored\n", p->str);
                    return 1;
                } else if (p->setup_func(line + n))
                    return 1;
            }
            p++;
        } while (p < __setup_end);

        return had_early_param;
    }

    /*
     * This should be approx 2 Bo*oMips to start (note initial shift), and will
     * still work even if initially too large, it will just take slightly longer
     */
    unsigned long loops_per_jiffy = (1<<12);

    EXPORT_SYMBOL(loops_per_jiffy);

    static int __init debug_kernel(char *str)
    {
        console_loglevel = 10;
        return 0;
    }

    static int __init quiet_kernel(char *str)
    {
        console_loglevel = 4;
        return 0;
    }

    early_param("debug", debug_kernel);
    early_param("quiet", quiet_kernel);

    static int __init loglevel(char *str)
    {
        get_option(&str, &console_loglevel);
        return 0;
    }

    early_param("loglevel", loglevel);

    /*
     * Unknown boot options get handed to init, unless they look like
     * failed parameters
     */
    static int __init unknown_bootoption(char *param, char *val)
    {
        /* Change NUL term back to "=", to make "param" the whole string. */
        if (val) {
            /* param=val or param="val"? */
            if (val == param+strlen(param)+1)
                val[-1] = '=';
            else if (val == param+strlen(param)+2) {
                val[-2] = '=';
                memmove(val-1, val, strlen(val)+1);
                val--;
            } else
                BUG();
        }

        /* Handle obsolete-style parameters */
        if (obsolete_checksetup(param))
            return 0;

        /*
         * Preemptive maintenance for "why didn't my misspelled command
         * line work?"
         */
        if (strchr(param, '.') && (!val || strchr(param, '.') < val)) {
            printk(KERN_ERR "Unknown boot option `%s': ignoring\n", param);
            return 0;
        }

        if (panic_later)
            return 0;

        if (val) {
            /* Environment option */
            unsigned int i;
            for (i = 0; envp_init[i]; i++) {
                if (i == MAX_INIT_ENVS) {
                    panic_later = "Too many boot env vars at `%s'";
                    panic_param = param;
                }
                if (!strncmp(param, envp_init[i], val - param))
                    break;
            }
            envp_init[i] = param;
        } else {
            /* Command line option */
            unsigned int i;
            for (i = 0; argv_init[i]; i++) {
                if (i == MAX_INIT_ARGS) {
                    panic_later = "Too many boot init vars at `%s'";
                    panic_param = param;
                }
            }
            argv_init[i] = param;
        }
        return 0;
    }

    #ifdef CONFIG_DEBUG_PAGEALLOC
    int __read_mostly debug_pagealloc_enabled = 0;
    #endif

    static int __init init_setup(char *str)
    {
        unsigned int i;

        execute_command = str;
        /*
         * In case LILO is going to boot us with default command line,
         * it prepends "auto" before the whole cmdline which makes
         * the shell think it should execute a script with such name.
         * So we ignore all arguments entered _before_ init=... [MJ]
         */
        for (i = 1; i < MAX_INIT_ARGS; i++)
            argv_init[i] = NULL;
        return 1;
    }
    __setup("init=", init_setup);

    static int __init rdinit_setup(char *str)
    {
        unsigned int i;

        ramdisk_execute_command = str;
        /* See "auto" comment in init_setup */
        for (i = 1; i < MAX_INIT_ARGS; i++)
            argv_init[i] = NULL;
        return 1;
    }
    __setup("rdinit=", rdinit_setup);

    #ifndef CONFIG_SMP

    #ifdef CONFIG_X86_LOCAL_APIC
    static void __init smp_init(void)
    {
        APIC_init_uniprocessor();
    }
    #else
    #define smp_init() do { } while (0)
    #endif

    static inline void setup_per_cpu_areas(void) { }
    static inline void smp_prepare_cpus(unsigned int maxcpus) { }

    #else

    #ifndef CONFIG_HAVE_SETUP_PER_CPU_AREA
    unsigned long __per_cpu_offset[NR_CPUS] __read_mostly;

    EXPORT_SYMBOL(__per_cpu_offset);

    static void __init setup_per_cpu_areas(void)
    {
        unsigned long size, i;
        char *ptr;
        unsigned long nr_possible_cpus = num_possible_cpus();

        /* Copy section for each CPU (we discard the original) */
        size = ALIGN(PERCPU_ENOUGH_ROOM, PAGE_SIZE);
        ptr = alloc_bootmem_pages(size * nr_possible_cpus);

        for_each_possible_cpu(i) {
            __per_cpu_offset[i] = ptr - __per_cpu_start;
            memcpy(ptr, __per_cpu_start, __per_cpu_end - __per_cpu_start);
            ptr += size;
        }
    }
    #endif /* CONFIG_HAVE_SETUP_PER_CPU_AREA */

    /* Called by boot processor to activate the rest. */
    static void __init smp_init(void)
    {
        unsigned int cpu;

        /* FIXME: This should be done in userspace --RR */
        for_each_present_cpu(cpu) {
            if (num_online_cpus() >= setup_max_cpus)
                break;
            if (!cpu_online(cpu))
                cpu_up(cpu);
        }

        /* Any cleanup work */
        printk(KERN_INFO "Brought up %ld CPUs\n", (long)num_online_cpus());
        smp_cpus_done(setup_max_cpus);
    }

    #endif

    /*
     * We need to store the untouched command line for future reference.
     * We also need to store the touched command line since the parameter
     * parsing is performed in place, and we should allow a component to
     * store reference of name/value for future reference.
     */
    static void __init setup_command_line(char *command_line)
    {
        saved_command_line = alloc_bootmem(strlen (boot_command_line)+1);
        static_command_line = alloc_bootmem(strlen (command_line)+1);
        strcpy (saved_command_line, boot_command_line);
        strcpy (static_command_line, command_line);
    }

    /*
     * We need to finalize in a non-__init function or else race conditions
     * between the root thread and the init thread may cause start_kernel to
     * be reaped by free_initmem before the root thread has proceeded to
     * cpu_idle.
     *
     * gcc-3.4 accidentally inlines this function, so use noinline.
     */

    static void noinline __init_refok rest_init(void)
        __releases(kernel_lock)
    {
        int pid;

        kernel_thread(kernel_init, NULL, CLONE_FS | CLONE_SIGHAND);
        numa_default_policy();
        pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);
        kthreadd_task = find_task_by_pid(pid);
        unlock_kernel();

        /*
         * The boot idle thread must execute schedule()
         * at least once to get things moving:
         */
        init_idle_bootup_task(current);
        preempt_enable_no_resched();
        schedule();
        preempt_disable();

        /* Call into cpu_idle with preempt disabled */
        cpu_idle();
    }

    /* Check for early params. */
    static int __init do_early_param(char *param, char *val)
    {
        struct obs_kernel_param *p;

        for (p = __setup_start; p < __setup_end; p++) {
            if ((p->early && strcmp(param, p->str) == 0) ||
                (strcmp(param, "console") == 0 &&
                 strcmp(p->str, "earlycon") == 0)
            ) {
                if (p->setup_func(val) != 0)
                    printk(KERN_WARNING
                           "Malformed early option '%s'\n", param);
            }
        }
        /* We accept everything at this stage. */
        return 0;
    }

    /* Arch code calls this early on, or if not, just before other parsing. */
    void __init parse_early_param(void)
    {
        static __initdata int done = 0;
        static __initdata char tmp_cmdline[COMMAND_LINE_SIZE];

        if (done)
            return;

        /* All fall through to do_early_param. */
        strlcpy(tmp_cmdline, boot_command_line, COMMAND_LINE_SIZE);
        parse_args("early options", tmp_cmdline, NULL, 0, do_early_param);
        done = 1;
    }

    /*
     * Activate the first processor.
     */

    static void __init boot_cpu_init(void)
    {
        int cpu = smp_processor_id();
        /* Mark the boot cpu "present", "online" etc for SMP and UP case */
        cpu_set(cpu, cpu_online_map);
        cpu_set(cpu, cpu_present_map);
        cpu_set(cpu, cpu_possible_map);
    }

    void __init __attribute__((weak)) smp_setup_processor_id(void)
    {
    }

    asmlinkage void __init start_kernel(void)
    {
        char * command_line;
        extern struct kernel_param __start___param[], __stop___param[];

        smp_setup_processor_id();

        /*
         * Need to run as early as possible, to initialize the
         * lockdep hash:
         */
        unwind_init();
        lockdep_init();
        cgroup_init_early();

        local_irq_disable();
        early_boot_irqs_off();
        early_init_irq_lock_class();

    /*
     * Interrupts are still disabled. Do necessary setups, then
     * enable them
     */
        lock_kernel();
        tick_init();
        boot_cpu_init();
        page_address_init();
        printk(KERN_NOTICE);
        printk(linux_banner);
        setup_arch(&command_line);
        setup_command_line(command_line);
        unwind_setup();
        setup_per_cpu_areas();
        smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */

        /*
         * Set up the scheduler prior starting any interrupts (such as the
         * timer interrupt). Full topology setup happens at smp_init()
         * time - but meanwhile we still have a functioning scheduler.
         */
        sched_init();
        /*
         * Disable preemption - early bootup scheduling is extremely
         * fragile until we cpu_idle() for the first time.
         */
        preempt_disable();
        build_all_zonelists();
        page_alloc_init();
        printk(KERN_NOTICE "Kernel command line: %s\n", boot_command_line);
        parse_early_param();
        parse_args("Booting kernel", static_command_line, __start___param,
                   __stop___param - __start___param,
                   &unknown_bootoption);
        if (!irqs_disabled()) {
            printk(KERN_WARNING "start_kernel(): bug: interrupts were "
                   "enabled *very* early, fixing it\n");
            local_irq_disable();
        }
        sort_main_extable();
        trap_init();
        rcu_init();
        init_IRQ();
        pidhash_init();
        init_timers();
        hrtimers_init();
        softirq_init();
        timekeeping_init();
        time_init();
        profile_init();
        if (!irqs_disabled())
            printk("start_kernel(): bug: interrupts were enabled early\n");
        early_boot_irqs_on();
        local_irq_enable();

        /*
         * HACK ALERT! This is early. We're enabling the console before
         * we've done PCI setups etc, and console_init() must be aware of
         * this. But we do want output early, in case something goes wrong.
         */
        console_init();
        if (panic_later)
            panic(panic_later, panic_param);

        lockdep_info();

        /*
         * Need to run this when irqs are enabled, because it wants
         * to self-test [hard/soft]-irqs on/off lock inversion bugs
         * too:
         */
        locking_selftest();

    #ifdef CONFIG_BLK_DEV_INITRD
        if (initrd_start && !initrd_below_start_ok &&
                initrd_start < min_low_pfn << PAGE_SHIFT) {
            printk(KERN_CRIT "initrd overwritten (0x%08lx < 0x%08lx) - "
                "disabling it.\n",initrd_start,min_low_pfn << PAGE_SHIFT);
            initrd_start = 0;
        }
    #endif
        vfs_caches_init_early();
        cpuset_init_early();
        mem_init();
        enable_debug_pagealloc();
        cpu_hotplug_init();
        kmem_cache_init();
        setup_per_cpu_pageset();
        numa_policy_init();
        if (late_time_init)
            late_time_init();
        calibrate_delay();
        pidmap_init();
        pgtable_cache_init();
        prio_tree_init();
        anon_vma_init();
    #ifdef CONFIG_X86
        if (efi_enabled)
            efi_enter_virtual_mode();
    #endif
        fork_init(num_physpages);
        proc_caches_init();
        buffer_init();
        unnamed_dev_init();
        key_init();
        security_init();
        vfs_caches_init(num_physpages);
        radix_tree_init();
        signals_init();
        /* rootfs populating might need page-writeback */
        page_writeback_init();
    #ifdef CONFIG_PROC_FS
        proc_root_init();
    #endif
        cgroup_init();
        cpuset_init();
        taskstats_init_early();
        delayacct_init();

        check_bugs();

        acpi_early_init(); /* before LAPIC and SMP init */

        /* Do the rest non-__init'ed, we're now alive */
        rest_init();
    }

    static int __initdata initcall_debug;

    static int __init initcall_debug_setup(char *str)
    {
        initcall_debug = 1;
        return 1;
    }
    __setup("initcall_debug", initcall_debug_setup);

    extern initcall_t __initcall_start[], __initcall_end[];

    static void __init do_initcalls(void)
    {
        initcall_t *call;
        int count = preempt_count();

        for (call = __initcall_start; call < __initcall_end; call++) {
            ktime_t t0, t1, delta;
            char *msg = NULL;
            char msgbuf[40];
            int result;

            if (initcall_debug) {
                printk("Calling initcall 0x%p", *call);
                print_fn_descriptor_symbol(": %s()",
                        (unsigned long) *call);
                printk("\n");
                t0 = ktime_get();
            }

            result = (*call)();

            if (initcall_debug) {
                t1 = ktime_get();
                delta = ktime_sub(t1, t0);

                printk("initcall 0x%p", *call);
                print_fn_descriptor_symbol(": %s()",
                        (unsigned long) *call);
                printk(" returned %d.\n", result);

                printk("initcall 0x%p ran for %Ld msecs: ",
                    *call, (unsigned long long)delta.tv64 >> 20);
                print_fn_descriptor_symbol("%s()\n",
                    (unsigned long) *call);
            }

            if (result && result != -ENODEV && initcall_debug) {
                sprintf(msgbuf, "error code %d", result);
                msg = msgbuf;
            }
            if (preempt_count() != count) {
                msg = "preemption imbalance";
                preempt_count() = count;
            }
            if (irqs_disabled()) {
                msg = "disabled interrupts";
                local_irq_enable();
            }
            if (msg) {
                printk(KERN_WARNING "initcall at 0x%p", *call);
                print_fn_descriptor_symbol(": %s()",
                        (unsigned long) *call);
                printk(": returned with %s\n", msg);
            }
        }

        /* Make sure there is no pending stuff from the initcall sequence */
        flush_scheduled_work();
    }

    /*
     * Ok, the machine is now initialized. None of the devices
     * have been touched yet, but the CPU subsystem is up and
     * running, and memory and process management works.
     *
     * Now we can finally start doing some real work..
     */
    static void __init do_basic_setup(void)
    {
        /* drivers will send hotplug events */
        init_workqueues();
        usermodehelper_init();
        driver_init();
        init_irq_proc();
        do_initcalls();
    }

    static int __initdata nosoftlockup;

    static int __init nosoftlockup_setup(char *str)
    {
        nosoftlockup = 1;
        return 1;
    }
    __setup("nosoftlockup", nosoftlockup_setup);

    static void __init do_pre_smp_initcalls(void)
    {
        extern int spawn_ksoftirqd(void);

        migration_init();
        spawn_ksoftirqd();
        if (!nosoftlockup)
            spawn_softlockup_task();
    }

    static void run_init_process(char *init_filename)
    {
        argv_init[0] = init_filename;
        kernel_execve(init_filename, argv_init, envp_init);
    }

    /* This is a non __init function. Force it to be noinline otherwise gcc
     * makes it inline to init() and it becomes part of init.text section
     */
    static int noinline init_post(void)
    {
        free_initmem();
        unlock_kernel();
        mark_rodata_ro();
        system_state = SYSTEM_RUNNING;
        numa_default_policy();

        if (sys_open((const char __user *) "/dev/console", O_RDWR, 0) < 0)
            printk(KERN_WARNING "Warning: unable to open an initial console.\n");

        (void) sys_dup(0);
        (void) sys_dup(0);

        if (ramdisk_execute_command) {
            run_init_process(ramdisk_execute_command);
            printk(KERN_WARNING "Failed to execute %s\n",
                    ramdisk_execute_command);
        }

        /*
         * We try each of these until one succeeds.
         *
         * The Bourne shell can be used instead of init if we are
         * trying to recover a really broken machine.
         */
        if (execute_command) {
            run_init_process(execute_command);
            printk(KERN_WARNING "Failed to execute %s. Attempting "
                        "defaults...\n", execute_command);
        }
        run_init_process("/sbin/init");
        run_init_process("/etc/init");
        run_init_process("/bin/init");
        run_init_process("/bin/sh");

        panic("No init found. Try passing init= option to kernel.");
    }

    static int __init kernel_init(void * unused)
    {
        lock_kernel();
        /*
         * init can run on any cpu.
         */
        set_cpus_allowed(current, CPU_MASK_ALL);
        /*
         * Tell the world that we're going to be the grim
         * reaper of innocent orphaned children.
         *
         * We don't want people to have to make incorrect
         * assumptions about where in the task array this
         * can be found.
         */
        init_pid_ns.child_reaper = current;

        cad_pid = task_pid(current);

        smp_prepare_cpus(setup_max_cpus);

        do_pre_smp_initcalls();

        smp_init();
        sched_init_smp();

        cpuset_init_smp();

        do_basic_setup();

        /*
         * check if there is an early userspace init. If yes, let it do all
         * the work
         */

        if (!ramdisk_execute_command)
            ramdisk_execute_command = "/init";

        if (sys_access((const char __user *) ramdisk_execute_command, 0) != 0) {
            ramdisk_execute_command = NULL;
            prepare_namespace();
        }

        /*
         * Ok, we have completed the initial bootup, and
         * we're essentially up and running. Get rid of the
         * initmem segments and start the user-mode stuff..
         */
        init_post();
        return 0;
    }
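One detail in the listing worth pulling out is what happens to boot options the kernel does not recognize. unknown_bootoption() hands them on to init: options with an `=` become environment variables, bare words become arguments, and anything with a dot in the name part is treated as a misspelled module parameter and dropped. The sketch below restates just that decision in standalone C. It is a simplification under my own names (`opt_kind`, `classify_unknown_option`), and it skips the obsolete-parameter check and the table-overflow handling that the real function also performs.

```c
#include <string.h>

/* Where an unrecognized boot option ends up (illustrative enum). */
enum opt_kind {
    OPT_INIT_ARG, /* appended to init's argv */
    OPT_INIT_ENV, /* added to init's environment */
    OPT_IGNORED   /* looks like a mistyped "module.param", so it is dropped */
};

static enum opt_kind classify_unknown_option(const char *opt)
{
    const char *eq  = strchr(opt, '=');
    const char *dot = strchr(opt, '.');

    /* A dot before any '=' means the name itself contains a dot. */
    if (dot && (!eq || dot < eq))
        return OPT_IGNORED;

    return eq ? OPT_INIT_ENV : OPT_INIT_ARG;
}
```

So a stray `single` on the command line reaches init as an argument and `TERM=vt100` reaches it as an environment variable, while something like `usbcore.blinkenlights=1` (a made-up parameter) is only reported and ignored.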

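start_kernel() looks more like typical kernel code, which is nearly all C and machine independent. The function is a long list of calls to initializations of the various kernel subsystems and data structures. These include the scheduler, memory zones, time keeping, and so on. start_kernel() then calls rest_init(), at which point things are almost all working. rest_init() creates a kernel thread, passing another function, kernel_init(), as the entry point. rest_init() then calls schedule() to kickstart task scheduling and goes to sleep by calling cpu_idle(), which is the idle thread for the Linux kernel. cpu_idle() runs forever and so does process zero, which hosts it. Whenever there is work to do (a runnable process), process zero gets booted out of the CPU, only to return when no runnable processes are available.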
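The relationship between process zero and everything else can be captured in a few lines. What follows is a toy model, not the real Linux scheduler: the `toy_task` struct and `pick_next()` are invented for illustration, and slot 0 of the array plays the part of the idle task. The only point it makes is that the idle task is what runs when nothing else is runnable.

```c
#include <stddef.h>

/* A drastically simplified task: just an id and a runnable flag. */
struct toy_task {
    int pid;
    int runnable;
};

/*
 * Return the first runnable task other than the idle task. If there is
 * none, fall back to tasks[0], which stands in for process zero.
 */
static const struct toy_task *pick_next(const struct toy_task *tasks, size_t n)
{
    size_t i;

    for (i = 1; i < n; i++)
        if (tasks[i].runnable)
            return &tasks[i];

    return &tasks[0];
}
```

Given three tasks where only pid 2 is runnable, `pick_next()` returns pid 2; once pid 2 goes to sleep, the same call returns pid 0 again. The real scheduler has priorities, run queues and per-CPU idle tasks, none of which appear here.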

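But here's the kicker for us. This idle loop is the end of the long thread we have followed since boot; it is the final descendant of the very first jump executed by the processor after power up. All of this mess, from reset vector to BIOS to MBR to boot loader to real-mode kernel to protected-mode kernel, leads right here. Jump by jump by jump, it ends in the idle loop for the boot processor, cpu_idle(). Which is really kind of cool. However, this can't be the whole story, otherwise the computer would do no work.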

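At this point, the kernel thread started previously is ready to kick in, displacing process 0 and its idle thread. And so it does, at which point kernel_init() starts running since it was given as the thread entry point. kernel_init() is responsible for initializing the remaining CPUs in the system, which have been halted since boot. All of the code we've seen so far has been executed on a single CPU, called the boot processor. As the other CPUs, called application processors, are started, they come up in real mode and must run through several initializations as well. Many of the code paths are common, as you can see in the code for startup_32, but there are slight forks taken by the late-coming application processors.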
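One of those forks is visible at the very end of the startup_32 listing, in the `CONFIG_SMP` block around the `ready` byte: the first CPU to pass through finds it zero and jumps to start_kernel, while every later CPU finds it set and jumps to initialize_secondary. Here is the same logic as a small C function. The enum and the function name `choose_boot_path` are illustrative only, and the sketch leaves out the per-CPU segment setup that the assembly does on the secondary path.

```c
/* Which way a CPU leaves startup_32 (illustrative names). */
enum boot_path {
    PATH_START_KERNEL,        /* boot processor */
    PATH_INITIALIZE_SECONDARY /* application processors */
};

/*
 * Mirrors: movb ready,%cl; movb $1,ready; cmpb $0,%cl; je 1f
 * The flag is read, then unconditionally set, and the old value decides.
 */
static enum boot_path choose_boot_path(unsigned char *ready)
{
    unsigned char was_ready = *ready;

    *ready = 1;
    return was_ready == 0 ? PATH_START_KERNEL : PATH_INITIALIZE_SECONDARY;
}
```

Calling it repeatedly on the same flag yields PATH_START_KERNEL exactly once and PATH_INITIALIZE_SECONDARY on every call after that, which is why only one processor ever runs start_kernel().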

Finally, kernel_init() calls init_post(), which tries to execute a user-mode process in the following order: /sbin/init, /etc/init, /bin/init, and /bin/sh. If all fail, the kernel will panic.
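The fallback chain is simple enough to restate as a standalone function. This is a sketch with assumptions, not the kernel's code: in the kernel a successful exec never returns, so to keep the example testable the exec step is replaced by a callback (`try_exec`, hypothetical) that returns 0 on success. The two `fake_exec_*` helpers exist only so the function can be exercised.

```c
#include <stddef.h>
#include <string.h>

/* Stand-in for the exec attempt: returns 0 if the program could be started. */
typedef int (*exec_fn)(const char *path);

/* The default candidates, in the order init_post() tries them. */
static const char *const default_inits[] = {
    "/sbin/init", "/etc/init", "/bin/init", "/bin/sh",
};

/*
 * Try an optional override first (the init= case), then each default.
 * Returns the path that worked, or NULL where the kernel would panic.
 */
static const char *first_working_init(const char *override, exec_fn try_exec)
{
    size_t i;

    if (override && try_exec(override) == 0)
        return override;

    for (i = 0; i < sizeof default_inits / sizeof default_inits[0]; i++)
        if (try_exec(default_inits[i]) == 0)
            return default_inits[i];

    return NULL;
}

/* Hypothetical test doubles: a system with only a shell, and an empty one. */
static int fake_exec_only_sh(const char *path)
{
    return strcmp(path, "/bin/sh") == 0 ? 0 : -1;
}

static int fake_exec_none(const char *path)
{
    (void)path;
    return -1;
}
```

On the "only a shell" system, `first_working_init(NULL, fake_exec_only_sh)` walks past the three init paths and settles on /bin/sh, which is the recovery case the comment in init_post() mentions. With `fake_exec_none` it returns NULL, the point at which the real kernel prints "No init found" and panics.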

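Luckily init is usually there, and starts running as PID 1. It checks its configuration file to figure out which processes to launch, which might include X11 Windows, programs for logging in on the console, network daemons, and so on. Thus ends the boot process, as yet another Linux box starts running somewhere. May your uptime be long and untroubled.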

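The process for Windows is similar in many ways, given the common architecture. Many of the same problems are faced and similar initializations must be done. When it comes to boot, one of the biggest differences is that Windows packs all of the real-mode kernel code, and some of the initial protected-mode code, into the boot loader itself (C:\NTLDR). So instead of having two regions in the same kernel image, Windows uses different binary images. Plus, Linux completely separates boot loader and kernel; in a way this automatically falls out of the open source process. The diagram below shows the main bits for the Windows kernel:

Windows Kernel Initialization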

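The Windows user-mode start-up is naturally very different. There's no /sbin/init, but rather Csrss.exe and Winlogon.exe. Winlogon spawns Services.exe, which starts all of the Windows Services, and Lsass.exe, the local security authentication subsystem. The classic Windows login dialog runs in the context of Winlogon.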

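This is the end of this boot series. Thanks everyone for reading and for the feedback. I'm sorry some things got superficial treatment; I have to start somewhere, and only so much fits into blog-sized bites. But there is always another day: my plan is to do regular "Software Illustrated" posts like this series, along with other topics. Meanwhile, here are some resources: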

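The best, most important resource is source code for real kernels, either Linux or one of the BSDs.

Intel publishes excellent Software Developer's Manuals, which you can download for free.

Understanding the Linux Kernel is a good book and walks through a lot of the Linux kernel sources. It's getting outdated and it's dry, but I'd still recommend it to anyone who wants to grok the kernel. Linux Device Drivers is more fun and teaches well, but is limited in scope. Finally, Patrick Moroney suggested Linux Kernel Development by Robert Love in the comments for this post. I've heard other positive reviews of that book, so it sounds worth checking out.

For Windows, the best reference by far is Windows Internals by David Solomon and Mark Russinovich, the latter of Sysinternals fame. This is a great book, well written and thorough. The main downside is the lack of source code.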

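[Update: In a comment below, Nix covered a lot of ground on the initial root file system that I glossed over. Thanks to Marius Barbu for catching a mistake where I wrote "CR3" instead of GDTR.]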