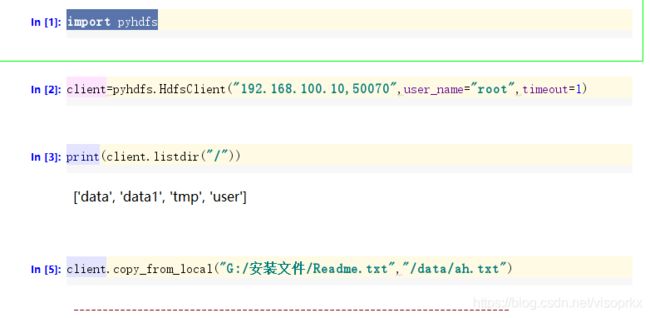

Using Python pyhdfs with Hadoop raises ConnectionError: HTTPConnectionPool(host='bigdata-senior03.chybinmy.com'

gaierror Traceback (most recent call last)

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connection.py in _new_conn(self)

158 conn = connection.create_connection(

--> 159 (self._dns_host, self.port), self.timeout, **extra_kw)

160

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\util\connection.py in create_connection(address, timeout, source_address, socket_options)

56

---> 57 for res in socket.getaddrinfo(host, port, family, socket.SOCK_STREAM):

58 af, socktype, proto, canonname, sa = res

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\socket.py in getaddrinfo(host, port, family, type, proto, flags)

732 addrlist = []

--> 733 for res in _socket.getaddrinfo(host, port, family, type, proto, flags):

734 af, socktype, proto, canonname, sa = res

gaierror: [Errno 11001] getaddrinfo failed

During handling of the above exception, another exception occurred:

NewConnectionError Traceback (most recent call last)

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connectionpool.py in urlopen(self, method, url, body, headers, retries, redirect, assert_same_host, timeout, pool_timeout, release_conn, chunked, body_pos, **response_kw)

599 body=body, headers=headers,

--> 600 chunked=chunked)

601

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connectionpool.py in _make_request(self, conn, method, url, timeout, chunked, **httplib_request_kw)

353 else:

--> 354 conn.request(method, url, **httplib_request_kw)

355

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\http\client.py in request(self, method, url, body, headers)

1106 """Send a complete request to the server."""

-> 1107 self._send_request(method, url, body, headers)

1108

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\http\client.py in _send_request(self, method, url, body, headers)

1151 body = _encode(body, 'body')

-> 1152 self.endheaders(body)

1153

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\http\client.py in endheaders(self, message_body)

1102 raise CannotSendHeader()

-> 1103 self._send_output(message_body)

1104

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\http\client.py in _send_output(self, message_body)

933

--> 934 self.send(msg)

935 if message_body is not None:

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\http\client.py in send(self, data)

876 if self.auto_open:

--> 877 self.connect()

878 else:

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connection.py in connect(self)

180 def connect(self):

--> 181 conn = self._new_conn()

182 self._prepare_conn(conn)

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connection.py in _new_conn(self)

167 raise NewConnectionError(

--> 168 self, "Failed to establish a new connection: %s" % e)

169

NewConnectionError:

During handling of the above exception, another exception occurred:

MaxRetryError Traceback (most recent call last)

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\adapters.py in send(self, request, stream, timeout, verify, cert, proxies)

448 retries=self.max_retries,

--> 449 timeout=timeout

450 )

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\connectionpool.py in urlopen(self, method, url, body, headers, retries, redirect, assert_same_host, timeout, pool_timeout, release_conn, chunked, body_pos, **response_kw)

637 retries = retries.increment(method, url, error=e, _pool=self,

--> 638 _stacktrace=sys.exc_info()[2])

639 retries.sleep()

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\urllib3\util\retry.py in increment(self, method, url, response, error, _pool, _stacktrace)

397 if new_retry.is_exhausted():

--> 398 raise MaxRetryError(_pool, url, error or ResponseError(cause))

399

MaxRetryError: HTTPConnectionPool(host='bigdata-senior03.chybinmy.com', port=50075): Max retries exceeded with url: /webhdfs/v1/data/ah.txt?op=CREATE&user.name=root&namenoderpcaddress=bigdata-senior01.chybinmy.com:8020&createflag=&createparent=true&overwrite=false (Caused by NewConnectionError('

During handling of the above exception, another exception occurred:

ConnectionError Traceback (most recent call last)

----> 1 client.copy_from_local("G:/安装文件/Readme.txt","/data/ah.txt")

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\pyhdfs.py in copy_from_local(self, localsrc, dest, **kwargs)

751 """

752 with io.open(localsrc, 'rb') as f:

--> 753 self.create(dest, f, **kwargs)

754

755 def copy_to_local(self, src, localdest, **kwargs):

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\pyhdfs.py in create(self, path, data, **kwargs)

424 assert not metadata_response.content

425 data_response = self._requests_session.put(

--> 426 metadata_response.headers['location'], data=data, **self._requests_kwargs)

427 _check_response(data_response, expected_status=httplib.CREATED)

428 assert not data_response.content

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\api.py in put(url, data, **kwargs)

129 """

130

--> 131 return request('put', url, data=data, **kwargs)

132

133

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\api.py in request(method, url, **kwargs)

58 # cases, and look like a memory leak in others.

59 with sessions.Session() as session:

---> 60 return session.request(method=method, url=url, **kwargs)

61

62

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\sessions.py in request(self, method, url, params, data, headers, cookies, files, auth, timeout, allow_redirects, proxies, hooks, stream, verify, cert, json)

531 }

532 send_kwargs.update(settings)

--> 533 resp = self.send(prep, **send_kwargs)

534

535 return resp

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\sessions.py in send(self, request, **kwargs)

644

645 # Send the request

--> 646 r = adapter.send(request, **kwargs)

647

648 # Total elapsed time of the request (approximately)

C:\Users\mr-chen\Anaconda3\envs\hadoop\lib\site-packages\requests\adapters.py in send(self, request, stream, timeout, verify, cert, proxies)

514 raise SSLError(e, request=request)

515

--> 516 raise ConnectionError(e, request=request)

517

518 except ClosedPoolError as e:

ConnectionError: HTTPConnectionPool(host='bigdata-senior03.chybinmy.com', port=50075): Max retries exceeded with url: /webhdfs/v1/data/ah.txt?op=CREATE&user.name=root&namenoderpcaddress=bigdata-senior01.chybinmy.com:8020&createflag=&createparent=true&overwrite=false (Caused by NewConnectionError('

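The cause is clear from `gaierror: [Errno 11001] getaddrinfo failed` and the final `ConnectionError: HTTPConnectionPool(host='bigdata-senior03.chybinmy.com', port=50075)`. That host is the domain name of my DataNode. pyhdfs first asks the NameNode to create the file, then sends the data to the URL returned in the `location` header, which points at the DataNode by hostname. The machine running my Python program has no mapping for that hostname, so the name lookup fails.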
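To confirm this diagnosis before changing anything, one option is to check which cluster hostnames the client machine can resolve. The following is a minimal sketch using only the standard library; the helper name `unresolved_hosts` is hypothetical and not part of pyhdfs.

```python
import socket


def unresolved_hosts(hostnames):
    """Return the hostnames that this machine cannot resolve to an address."""
    failed = []
    for name in hostnames:
        try:
            # Same lookup that fails in the traceback (socket.getaddrinfo).
            socket.getaddrinfo(name, None)
        except socket.gaierror:
            failed.append(name)
    return failed
```

Calling `unresolved_hosts(["bigdata-senior01.chybinmy.com", "bigdata-senior03.chybinmy.com"])` on the client should list every node that still needs a mapping. An empty list means name resolution is not the problem.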

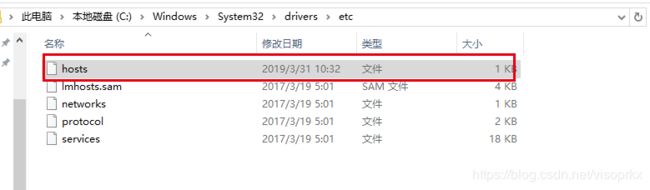

Solution:

Open the folder: C:\Windows\System32\drivers\etc

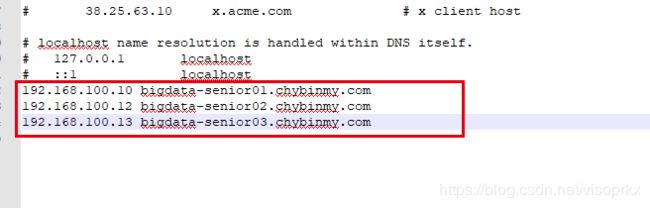

In the `hosts` file, add a mapping from each node's IP address to its domain name:
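Each entry in the hosts file is one line: the IP address, whitespace, then the hostname. As a small sketch, the lines can be generated from a dictionary and pasted into the file. The helper `hosts_lines` is hypothetical, and the addresses below are placeholders from the 192.0.2.0/24 documentation range, not the real cluster IPs; substitute your own.

```python
def hosts_lines(mapping):
    """Format {hostname: ip} pairs as hosts-file lines ("ip hostname")."""
    return [f"{ip} {name}" for name, ip in mapping.items()]


# Placeholder IPs (assumption): replace with the actual addresses of your nodes.
example = {
    "bigdata-senior01.chybinmy.com": "192.0.2.11",
    "bigdata-senior03.chybinmy.com": "192.0.2.13",
}
print("\n".join(hosts_lines(example)))
```

It is probably worth mapping every DataNode in the cluster, not only the one named in the error, since the NameNode may redirect a later write to a different node.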

After saving the file, `copy_from_local` ran successfully and the problem was solved.