hostnamectl set-hostname k8s-m-1
...
hostnamectl set-hostname k8s-w-10

cat >>/etc/hosts<

ssh-keygen -t rsa
ssh-copy-id root@k8s-m-1
…
ssh-copy-id root@k8s-w-10
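The per-node commands repeat for every host, so they can be driven from one list. A minimal sketch, assuming three masters (k8s-m-1 to k8s-m-3, matching the HAProxy backend list later in this guide) and ten workers (k8s-w-1 to k8s-w-10); `list_nodes` is a hypothetical helper, and the loop only prints the commands instead of running them:

```shell
# Hypothetical helper: prints one hostname per line.
# Assumes 3 masters and 10 workers; adjust to your inventory.
list_nodes() {
  for i in 1 2 3; do
    echo "k8s-m-${i}"
  done
  for i in $(seq 1 10); do
    echo "k8s-w-${i}"
  done
}

# Dry run: print the ssh-copy-id command for each node.
# Remove the leading "echo" to actually copy the key.
for node in $(list_nodes); do
  echo ssh-copy-id "root@${node}"
done
```

The same list can drive the later scp steps that distribute certificates and unit files.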

yum install -y epel-release
yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget

systemctl stop firewalld
systemctl disable firewalld
iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat
iptables -P FORWARD ACCEPT

setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

cat >> /etc/sysctl.d/kubernetes.conf <

timedatectl set-timezone Asia/Shanghai
timedatectl set-timezone Asia/Tokyo

systemctl stop postfix && systemctl disable postfix

yum -y update
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
yum --enablerepo=elrepo-kernel install kernel-lt.x86_64 -y
sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
sudo grub2-set-default
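The awk command prints each GRUB menu entry with a zero-based index, and the index of the newly installed kernel can then be passed to grub2-set-default. A small sketch of the listing step, wrapped in a hypothetical `list_menuentries` function that takes the config path as an argument so it can be tried on a copy of the file first:

```shell
# Hypothetical wrapper around the awk one-liner used above.
# $1: path to a grub2 config file (for example /etc/grub2.cfg).
list_menuentries() {
  awk -F\' '$1=="menuentry " {print i++ " : " $2}' "$1"
}

# Usage:
#   list_menuentries /etc/grub2.cfg
```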

mkdir -p /opt/k8s/{bin,work} /etc/{kubernetes,etcd}/cert

echo 'PATH=/opt/k8s/bin:$PATH' >>/root/.bashrc
source /root/.bashrc

mkdir -p /opt/k8s/cert && cd /opt/k8s/work
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl_1.4.1_linux_amd64
mv cfssl_1.4.1_linux_amd64 /opt/k8s/bin/cfssl
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssljson_1.4.1_linux_amd64
mv cfssljson_1.4.1_linux_amd64 /opt/k8s/bin/cfssljson
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl-certinfo_1.4.1_linux_amd64
mv cfssl-certinfo_1.4.1_linux_amd64 /opt/k8s/bin/cfssl-certinfo
chmod +x /opt/k8s/bin/*
export PATH=/opt/k8s/bin:$PA

cd /opt/k8s/work
cat > ca-config.json <

cd /opt/k8s/work
cat > ca-csr.json <

cd /opt/k8s/work
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
ls ca*
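`cfssljson -bare ca` writes ca.pem, ca-key.pem and ca.csr into the current directory. A minimal sketch of a check that all three exist before they are distributed; `check_cert_files` is a hypothetical helper, not part of cfssl:

```shell
# Hypothetical helper: succeed only if <dir>/<prefix>.pem,
# <prefix>-key.pem and <prefix>.csr all exist and are non-empty.
check_cert_files() {
  dir=$1
  prefix=$2
  for f in "${prefix}.pem" "${prefix}-key.pem" "${prefix}.csr"; do
    if [ ! -s "${dir}/${f}" ]; then
      echo "missing or empty: ${dir}/${f}" >&2
      return 1
    fi
  done
  return 0
}

# Usage:
#   check_cert_files /opt/k8s/work ca && echo "CA files present"
```

The same check applies to the other certificates generated with `cfssljson -bare` in this guide, using their own prefix.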

mkdir -p /etc/kubernetes/cert
scp ca*.pem ca-config.json root@k8s-m-1:/etc/kubernetes/cert/
…
scp ca*.pem ca-config.json root@k8s-w-10:/etc/kubernetes/cert/

yum install -y keepalived

cat > /etc/keepalived/keepalived.conf <

scp -pr /etc/keepalived/keepalived.conf root@k8s-m-1:/etc/keepalived/ (master nodes)

systemctl enable keepalived.service
systemctl start keepalived.service
systemctl status keepalived.service
ip address show eth0

yum install -y haproxy

cat >> /etc/haproxy/haproxy.cfg << EOF
#---------------------------------------------------------------------
# Example configuration for a possible web application. See the
# full configuration options online.
#
# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt
#
#---------------------------------------------------------------------

#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    #
    # 1) configure syslog to accept network log events. This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    #
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #    file. A line like the following can be added to
    #    /etc/sysconfig/syslog
    #
    #    local2.* /var/log/haproxy.log
    #
    log 127.0.0.1 local2

    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon

    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

#---------------------------------------------------------------------
# main frontend which proxys to the backends
#---------------------------------------------------------------------
frontend kubernetes-apiserver
    mode tcp
    bind *:8443
    option tcplog
    default_backend kubernetes-apiserver

#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend kubernetes-apiserver
    mode tcp
    balance roundrobin
    server k8s-m-1 10.66.10.26:6443 check
    server k8s-m-2 10.66.10.27:6443 check
    server k8s-m-3 10.66.10.28:6443 check

#---------------------------------------------------------------------
# collection haproxy statistics message
#---------------------------------------------------------------------
listen stats
    bind *:1080
    stats auth admin:awesomePassword
    stats refresh 5s
    stats realm HAProxy\ Statistics
    stats uri /admi

scp -pr /etc/haproxy/haproxy.cfg root@k8s-m-1:/etc/haproxy/

systemctl enable haproxy.service
systemctl start haproxy.service
systemctl status haproxy.service
ss -lnt | grep -E "8443|1080"
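The grep above matches any line that contains 8443 or 1080 anywhere, which can include unrelated ports or addresses. A stricter check is sketched below, assuming the usual `ss -lnt` layout where the local address is the fourth column; `port_listening` is a hypothetical helper that reads the `ss` output from stdin:

```shell
# Hypothetical helper: reads `ss -lnt` output on stdin and succeeds
# if a LISTEN socket has a local address ending in ":<port>".
# Assumes the local address is the 4th column.
port_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit(found ? 0 : 1) }
  '
}

# Usage:
#   ss -lnt | port_listening 8443 && echo "apiserver frontend is up"
#   ss -lnt | port_listening 1080 && echo "stats page is up"
```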

wget https://dl.k8s.io/v1.18.2/kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz -C /opt/k8s/work/

cd /opt/k8s/work/kubernetes/server/bin

scp -pr kube-apiserver kubectl kube-controller-manager kube-scheduler root@k8s-m-1:/opt/k8s/bin/
...
scp -pr kube-apiserver kubectl kube-controller-manager kube-scheduler root@k8s-m-3:/opt/k8s/bin/

scp -pr kubelet kube-proxy root@k8s-w-1:/opt/k8s/bin/
...
scp -pr kubelet kube-proxy root@k8s-w-10:/opt/k8s/bin/

cd /opt/k8s/work
cat > admin-csr.json <

cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes admin-csr.json | cfssljson -bare admin

# Set cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=https://10.66.10.3:8443 \
  --kubeconfig=kubectl.kubeconfig
# Set client authentication parameters
kubectl config set-credentials admin \
  --client-certificate=/opt/k8s/work/admin.pem \
  --client-key=/opt/k8s/work/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=kubectl.kubeconfig
# Set context parameters
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin \
  --kubeconfig=kubectl.kubeconfig
# Set the default context
kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig
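The same four steps (set-cluster, set-credentials, set-context, use-context) recur for every component kubeconfig in this guide. The pattern can be sketched as a hypothetical `print_kubeconfig_cmds` helper that only prints the commands. As a simplification it names the context after the user, whereas the admin example above names it `kubernetes`; the server URL is the apiserver address used in this guide.

```shell
# Hypothetical helper: prints (does not run) the four kubectl
# commands that build one kubeconfig.
# $1 user name, $2 certificate file prefix, $3 output kubeconfig.
print_kubeconfig_cmds() {
  user=$1
  prefix=$2
  out=$3
  server="https://10.66.10.3:8443"
  echo "kubectl config set-cluster kubernetes --certificate-authority=/opt/k8s/work/ca.pem --embed-certs=true --server=${server} --kubeconfig=${out}"
  echo "kubectl config set-credentials ${user} --client-certificate=${prefix}.pem --client-key=${prefix}-key.pem --embed-certs=true --kubeconfig=${out}"
  echo "kubectl config set-context ${user} --cluster=kubernetes --user=${user} --kubeconfig=${out}"
  echo "kubectl config use-context ${user} --kubeconfig=${out}"
}

# Example: the commands for the admin kubeconfig.
print_kubeconfig_cmds admin admin kubectl.kubeconfig
```

Printing first makes it easy to review the flags before piping the output to `sh`.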

mkdir -p ~/.kube
scp kubectl.kubeconfig root@k8s-m-1:~/.kube/config
…
scp kubectl.kubeconfig root@k8s-m-3:~/.kube/config

wget https://github.com/coreos/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz

cat > etcd-csr.json <

cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

mkdir -p /etc/etcd/cert
scp -pr etcd*.pem root@k8s-m-1:/etc/etcd/cert/
...
scp -pr etcd*.pem root@k8s-m-3:/etc/etcd/cert/

cat >> /etc/systemd/system/etcd.service <

mkdir -p /data/k8s/etcd/{data,wal}
systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd

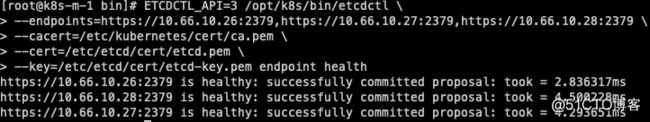

ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
  --endpoints=https://10.66.10.26:2379,https://10.66.10.27:2379,https://10.66.10.28:2379 \
  --cacert=/etc/kubernetes/cert/ca.pem \
  --cert=/etc/etcd/cert/etcd.pem \
  --key=/etc/etcd/cert/etcd-key.pem endpoint health

ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
  -w table --cacert=/etc/kubernetes/cert/ca.pem \
  --cert=/etc/etcd/cert/etcd.pem \
  --key=/etc/etcd/cert/etcd-key.pem \
  --endpoints=https://10.66.10.26:2379,https://10.66.10.27:2379,https://10.66.10.28:2379 endpoint status
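The long `--endpoints` value is repeated in every etcdctl call. One way to avoid retyping it is sketched below, assuming every member serves clients over HTTPS on port 2379 as in the commands above; `etcd_endpoints` and `ETCD_ENDPOINTS` are hypothetical names:

```shell
# Hypothetical helper: joins member IPs into an --endpoints value.
# Assumes HTTPS on client port 2379 for every member.
etcd_endpoints() {
  result=""
  for ip in "$@"; do
    if [ -n "$result" ]; then
      result="${result},"
    fi
    result="${result}https://${ip}:2379"
  done
  echo "$result"
}

ETCD_ENDPOINTS=$(etcd_endpoints 10.66.10.26 10.66.10.27 10.66.10.28)
echo "$ETCD_ENDPOINTS"
```

The variable can then be passed as `--endpoints="$ETCD_ENDPOINTS"` in the health and status checks.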

mkdir -p /opt/k8s/work/flannel
wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
tar -xzvf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/k8s/work/flannel

cd /opt/k8s/work
scp flannel/{flanneld,mk-docker-opts.sh} root@k8s-w-1:/opt/k8s/bin/
...
scp flannel/{flanneld,mk-docker-opts.sh} root@k8s-w-10:/opt/k8s/bin/
chmod +x /opt/k8s/bin/*

cd /opt/k8s/work
cat > flanneld-csr.json <

cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

cd /opt/k8s/work
mkdir -p /etc/flanneld/cert
scp flanneld*.pem root@k8s-w-1:/etc/flanneld/cert
...
scp flanneld*.pem root@k8s-w-10:/etc/flanneld/cert

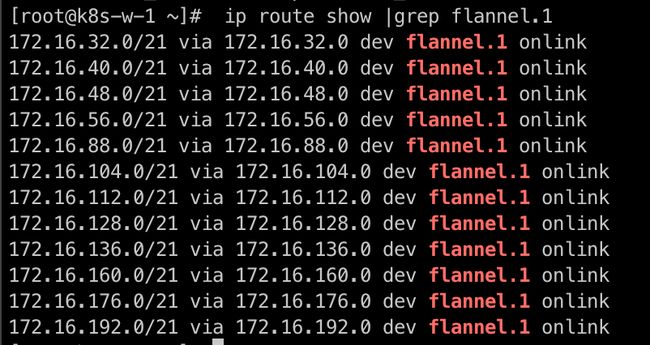

cd /opt/k8s/work
etcdctl \
  --endpoints=https://10.66.10.26:2379,https://10.66.10.27:2379,https://10.66.10.28:2379 \
  --ca-file=/opt/k8s/work/ca.pem \
  --cert-file=/opt/k8s/work/flanneld.pem \
  --key-file=/opt/k8s/work/flanneld-key.pem \
  set /kubernetes/network/config '{"Network":"172.16.0.0/16", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}'
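The `Network` and `SubnetLen` values determine how many per-node subnets flannel can hand out and how many addresses each one holds. A short worked calculation for the /16 network and subnet length 21 configured above (the variable names are only for illustration):

```shell
# Values taken from the flannel network config above.
NETWORK_PREFIX=16   # 172.16.0.0/16
SUBNET_LEN=21       # size of the subnet leased to each node

# Number of /21 subnets that fit in the /16: 2^(21-16).
NODE_SUBNETS=$(( 1 << (SUBNET_LEN - NETWORK_PREFIX) ))
# Addresses in each /21 subnet: 2^(32-21).
ADDRS_PER_SUBNET=$(( 1 << (32 - SUBNET_LEN) ))

echo "subnets: ${NODE_SUBNETS}, addresses per subnet: ${ADDRS_PER_SUBNET}"
# prints "subnets: 32, addresses per subnet: 2048"
```

With ten workers this leaves headroom in the number of subnets; a smaller `SubnetLen` would give more addresses per node but fewer node subnets.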

cat >> /etc/systemd/system/flanneld.service <

systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld

etcdctl \
  --endpoints=https://10.66.10.26:2379,https://10.66.10.27:2379,https://10.66.10.28:2379 \
  --ca-file=/etc/kubernetes/cert/ca.pem \
  --cert-file=/etc/flanneld/cert/flanneld.pem \
  --key-file=/etc/flanneld/cert/flanneld-key.pem \
  get /kubernetes/network/config

etcdctl \
  --endpoints=https://10.66.10.26:2379,https://10.66.10.27:2379,https://10.66.10.28:2379 \
  --ca-file=/etc/kubernetes/cert/ca.pem \
  --cert-file=/etc/flanneld/cert/flanneld.pem \
  --key-file=/etc/flanneld/cert/flanneld-key.pem \
  ls /kubernetes/network/subnets

ip route show |grep flannel.1

ssh k8s-w-8 "/usr/sbin/ip addr show flannel.1|grep -w inet"

cat >> kubernetes-csr.json <

cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

mkdir -p /etc/kubernetes/cert
scp kubernetes*.pem root@k8s-m-1:/etc/kubernetes/cert/
...
scp kubernetes*.pem root@k8s-m-3:/etc/kubernetes/cert/

cd /opt/k8s/work
cat > encryption-config.yaml <

scp encryption-config.yaml root@k8s-m-1:/etc/kubernetes/
...
scp encryption-config.yaml root@k8s-m-3:/etc/kubernetes/

cat > audit-policy.yaml <

scp audit-policy.yaml root@k8s-m-1:/etc/kubernetes/audit-policy.yaml
...
scp audit-policy.yaml root@k8s-m-3:/etc/kubernetes/audit-policy.yaml

Create the certificate used later to access metrics-server or kube-prometheus.

cd /opt/k8s/work
cat > proxy-client-csr.json <

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \
  -ca-key=/etc/kubernetes/cert/ca-key.pem \
  -config=/etc/kubernetes/cert/ca-config.json \
  -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client

scp proxy-client*.pem root@k8s-m-1:/etc/kubernetes/cert/
...
scp proxy-client*.pem root@k8s-m-3:/etc/kubernetes/cert/

cat > /etc/systemd/system/kube-apiserver.service <

scp /etc/systemd/system/kube-apiserver.service root@k8s-m-1:/etc/systemd/system/kube-apiserver.service
...
scp /etc/systemd/system/kube-apiserver.service root@k8s-m-3:/etc/systemd/system/kube-apiserver.service

mkdir -p /data/k8s/k8s/kube-apiserver
systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver

cd /opt/k8s/work
cat > kube-controller-manager-csr.json <

cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

cd /opt/k8s/work
scp kube-controller-manager*.pem root@k8s-m-1:/etc/kubernetes/cert/
...
scp kube-controller-manager*.pem root@k8s-m-3:/etc/kubernetes/cert/

kube-controller-manager uses a kubeconfig file to access the apiserver. The file provides the apiserver address, the embedded CA certificate, and the kube-controller-manager certificate:

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="https://10.66.10.3:8443" \
  --kubeconfig=kube-controller-manager.kubeconfig
kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig
kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig
kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconf

cp -pr kube-controller-manager.kubeconfig /etc/kubernetes/kube-controller-manager.kubeconfig

cat > /etc/systemd/system/kube-controller-manager.service<

scp /etc/systemd/system/kube-controller-manager.service root@k8s-m-1:/etc/systemd/system/kube-controller-manager.service
...
scp /etc/systemd/system/kube-controller-manager.service root@k8s-m-3:/etc/systemd/system/kube-controller-manager.service

mkdir -p /data/k8s/k8s/kube-controller-manager
systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager

cat > kube-scheduler-csr.json <

cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

scp -pr kube-scheduler*.pem root@k8s-m-1:/etc/kubernetes/cert/
…
scp -pr kube-scheduler*.pem root@k8s-m-3:/etc/kubernetes/cert/

Create and distribute the kubeconfig file

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="https://10.66.10.3:8443" \
  --kubeconfig=kube-scheduler.kubeconfig
kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig
kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconf

scp -pr kube-scheduler.kubeconfig root@k8s-m-1:/etc/kubernetes/kube-scheduler.kubeconfig
…
scp -pr kube-scheduler.kubeconfig root@k8s-m-3:/etc/kubernetes/kube-scheduler.kubeconfig

Create the kube-scheduler configuration file

cat > /etc/kubernetes/kube-scheduler.yaml <

scp -pr /etc/kubernetes/kube-scheduler.yaml root@k8s-m-1:/etc/kubernetes/kube-scheduler.yaml
…
scp -pr /etc/kubernetes/kube-scheduler.yaml root@k8s-m-3:/etc/kubernetes/kube-scheduler.yaml

cat > /etc/systemd/system/kube-scheduler.service <

scp -pr /etc/systemd/system/kube-scheduler.service root@k8s-m-1:/etc/systemd/system/kube-scheduler.service
…
scp -pr /etc/systemd/system/kube-scheduler.service root@k8s-m-3:/etc/systemd/system/kube-scheduler.service

mkdir -p /data/k8s/k8s/kube-scheduler
systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler

Docker download page

cd /opt/k8s/work
wget https://download.docker.com/linux/static/stable/x86_64/docker-18.09.6.tgz
tar -xvf docker-18.09.6.tgz

cd /opt/k8s/work
scp docker/* root@k8s-w-1:/opt/k8s/bin/
...
scp docker/* root@k8s-w-10:/opt/k8s/bin/

cat > /etc/systemd/system/docker.service <

sudo iptables -P FORWARD ACCEPT
/sbin/iptables -P FORWARD ACCEPT

cd /opt/k8s/work
scp docker.service root@k8s-w-1:/etc/systemd/system/
...
scp docker.service root@k8s-w-10:/etc/systemd/system/

mkdir -p /etc/docker/ /data/k8s/docker/{data,exec}

cat /etc/docker/daemon.json
{
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn","https://hub-mirror.c.163.com"],
  "insecure-registries": ["docker02:35000"],
  "max-concurrent-downloads": 20,
  "live-restore": true,
  "max-concurrent-uploads": 10,
  "debug": true,
  "data-root": "/data/k8s/docker/data",
  "exec-root": "/data/k8s/docker/exec",
  "log-opts": {
    "max-size": "100m",
    "max-file": "5"
  }
}

mkdir -p /etc/docker/ /data/k8s/docker/{data,exec}
scp docker-daemon.json root@k8s-w-1:/etc/docker/daemon.json
...
scp docker-daemon.json root@k8s-w-10:/etc/docker/daemon.json

systemctl daemon-reload && systemctl enable docker && systemctl restart docker

cat > /opt/k8s/bin/environment.sh <

echo ">>> ${node_name}"
# Create a token
export BOOTSTRAP_TOKEN=$(kubeadm token create \
  --description kubelet-bootstrap-token \
  --groups system:bootstrappers:${node_name} \
  --kubeconfig ~/.kube/config)
# Set cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/cert/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
# Set client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
# Set context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
# Set the default context
kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

kubeadm token list --kubeconfig ~/.kube/config

cd /opt/k8s/work/
scp -pr kubelet-bootstrap-k8s-w-1.kubeconfig root@k8s-w-1:/etc/kubernetes/kubelet-bootstrap.kubeconfig
...
scp -pr kubelet-bootstrap-k8s-w-10.kubeconfig root@k8s-w-10:/etc/kubernetes/kubelet-bootstrap.kubeconfig

cd /opt/k8s/work/
cat > kubelet-config.yaml <

(The configuration file needs to be modified: address: "10.66.10.31")

scp kubelet-config.yaml root@k8s-w-1:/etc/kubernetes/kubelet-config.yaml
...
scp kubelet-config.yaml root@k8s-w-10:/etc/kubernetes/kubelet-config.yaml

cat > /etc/systemd/system/kubelet.service <

scp /etc/systemd/system/kubelet.service root@k8s-w-1:/etc/systemd/system/kubelet.service
...
scp /etc/systemd/system/kubelet.service root@k8s-w-10:/etc/systemd/system/kubelet.service

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes-master

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

mkdir -p /data/k8s/k8s/kubelet

/usr/sbin/swapoff -a

systemctl daemon-reload && systemctl restart kubelet && systemctl enable kubelet

After=docker.service
Requires=docker.service

cd /opt/k8s/work/
cat > csr-crb.yaml <

Security considerations

kubectl get csr
kubectl certificate approve csr-bjtp4
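When several nodes bootstrap at once, approving each request by name gets tedious. A minimal sketch of filtering the pending ones, assuming the `kubectl get csr` table has a header row, the request name in the first column and the condition in the last; `pending_csrs` is a hypothetical helper that reads that table from stdin:

```shell
# Hypothetical helper: reads `kubectl get csr` output on stdin and
# prints the names of requests whose last column is "Pending".
# Assumes NAME is the first column and CONDITION the last.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage (review the printed names before approving them):
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Approving in bulk skips the per-request review, so it is best kept for clusters where every bootstrapping node is known.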

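cd /opt/k8s/work
cat > kube-proxy-csr.json <

The certificate's user is system:kube-proxy.

The predefined binding system:node-proxier binds the user system:kube-proxy to the role system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs.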

cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
ls kube-proxy*

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconf

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in ${NODE_NAMES[@]}
do
  echo ">>> ${node_name}"
  scp kube-proxy.kubeconfig root@${node_name}:/etc/kubernetes/
done

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-proxy-config.yaml.template <

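bindAddress: the address kube-proxy listens on.

clientConnection.kubeconfig: the kubeconfig file used to connect to the apiserver.

clusterCIDR: kube-proxy uses --cluster-cidr to tell in-cluster traffic from external traffic; requests to Service IPs are SNATed only when --cluster-cidr or --masquerade-all is set.

hostnameOverride: must match the value used by kubelet; otherwise kube-proxy cannot find the Node after startup and creates no ipvs rules.

mode: the proxy mode to use.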

cd /opt/k8s/work
scp kube-proxy-config.yaml.template root@k8s-w-1:/etc/kubernetes/kube-proxy-config.yaml
...
scp kube-proxy-config.yaml.template root@k8s-w-10:/etc/kubernetes/kube-proxy-config.yaml

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > /etc/systemd/system/kube-proxy.service <

cd /opt/k8s/work
scp kube-proxy.service root@k8s-w-1:/etc/systemd/system/
...
scp kube-proxy.service root@k8s-w-10:/etc/systemd/system/

cd /opt/k8s/work
mkdir -p /data/k8s/k8s/kube-proxy
modprobe ip_vs_rr
systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy

cd /opt/k8s/work git clone https://github.com/coredns/deployment.git mv deployment coredns-deployment

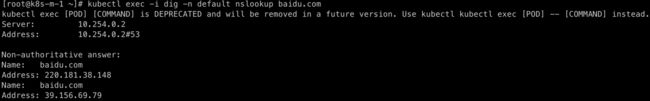

cd /opt/k8s/work/coredns-deployment/kubernetes ./deploy.sh -i 10.254.0.2 -d cluster.local | kubectl apply -f -

kubectl get svc,pods -n kube-system| grep coredns

cat > dig.yaml <

kubectl get pods

kubectl exec -i dig -n default -- nslookup kubernetes

kubectl exec -i dig -n default -- nslookup baidu.com

cd /opt/k8s/work/
wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-0.32.0/deploy/static/provider/cloud/deploy.yaml
mv deploy.yaml ingress-nginx.yaml
kubectl apply -f ingress-nginx.yaml

kubectl label nodes k8s-w-1 usage=ingress
...
kubectl label nodes k8s-w-3 usage=ingress

apiVersion: v1 kind: Namespace metadata: name: ingress-nginx labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx --- # Source: ingress-nginx/templates/controller-serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-configmap.yaml apiVersion: v1 kind: ConfigMap metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx data: --- # Source: ingress-nginx/templates/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm name: ingress-nginx namespace: ingress-nginx rules: - apiGroups: - '' resources: - configmaps - endpoints - nodes - pods - secrets verbs: - list - watch - apiGroups: - '' resources: - nodes verbs: - get - apiGroups: - '' resources: - services verbs: - get - list - update - watch - apiGroups: - extensions - networking.k8s.io # k8s 1.14+ resources: - ingresses verbs: - get - list - watch - apiGroups: - '' resources: - events verbs: - create - patch - apiGroups: - extensions - networking.k8s.io # k8s 1.14+ resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io # k8s 1.14+ resources: - ingressclasses verbs: - get - list - watch --- # Source: ingress-nginx/templates/clusterrolebinding.yaml apiVersion: 
rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm name: ingress-nginx namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx rules: - apiGroups: - '' resources: - namespaces verbs: - get - apiGroups: - '' resources: - configmaps - pods - secrets - endpoints verbs: - get - list - watch - apiGroups: - '' resources: - services verbs: - get - list - update - watch - apiGroups: - extensions - networking.k8s.io # k8s 1.14+ resources: - ingresses verbs: - get - list - watch - apiGroups: - extensions - networking.k8s.io # k8s 1.14+ resources: - ingresses/status verbs: - update - apiGroups: - networking.k8s.io # k8s 1.14+ resources: - ingressclasses verbs: - get - list - watch - apiGroups: - '' resources: - configmaps resourceNames: - ingress-controller-leader-nginx verbs: - get - update - apiGroups: - '' resources: - configmaps verbs: - create - apiGroups: - '' resources: - endpoints verbs: - create - get - update - apiGroups: - '' resources: - events verbs: - create - patch --- # Source: ingress-nginx/templates/controller-rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx 
app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx subjects: - kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx --- # Source: ingress-nginx/templates/controller-service-webhook.yaml apiVersion: v1 kind: Service metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller-admission namespace: ingress-nginx spec: type: ClusterIP ports: - name: https-webhook port: 443 targetPort: webhook selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-service.yaml apiVersion: v1 kind: Service metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller namespace: ingress-nginx spec: type: LoadBalancer externalTrafficPolicy: Local ports: - name: http port: 80 protocol: TCP targetPort: http - name: https port: 443 protocol: TCP targetPort: https selector: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller --- # Source: ingress-nginx/templates/controller-deployment.yaml apiVersion: apps/v1 kind: Deployment metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: controller name: ingress-nginx-controller 
namespace: ingress-nginx spec: replicas: 3 selector: matchLabels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller revisionHistoryLimit: 10 minReadySeconds: 0 template: metadata: labels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/component: controller spec: dnsPolicy: ClusterFirst hostNetwork: true nodeSelector: usage: ingress containers: - name: controller image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.32.0 imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown args: - /nginx-ingress-controller - --publish-service=ingress-nginx/ingress-nginx-controller - --election-id=ingress-controller-leader - --ingress-class=nginx - --configmap=ingress-nginx/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key securityContext: capabilities: drop: - ALL add: - NET_BIND_SERVICE runAsUser: 101 allowPrivilegeEscalation: true env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace livenessProbe: httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 timeoutSeconds: 1 successThreshold: 1 failureThreshold: 3 readinessProbe: httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 timeoutSeconds: 1 successThreshold: 1 failureThreshold: 3 ports: - name: http containerPort: 80 protocol: TCP - name: https containerPort: 443 protocol: TCP - name: webhook containerPort: 8443 protocol: TCP volumeMounts: - name: webhook-cert mountPath: /usr/local/certificates/ readOnly: true resources: requests: cpu: 100m memory: 90Mi serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: 
ingress-nginx-admission --- # Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml apiVersion: admissionregistration.k8s.io/v1beta1 kind: ValidatingWebhookConfiguration metadata: labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook name: ingress-nginx-admission namespace: ingress-nginx webhooks: - name: validate.nginx.ingress.kubernetes.io rules: - apiGroups: - extensions - networking.k8s.io apiVersions: - v1beta1 operations: - CREATE - UPDATE resources: - ingresses failurePolicy: Fail clientConfig: service: namespace: ingress-nginx name: ingress-nginx-controller-admission path: /extensions/v1beta1/ingresses --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx rules: - apiGroups: - admissionregistration.k8s.io resources: - validatingwebhookconfigurations verbs: - get - update --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx 
app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-create annotations: helm.sh/hook: pre-install,pre-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx spec: template: metadata: name: ingress-nginx-admission-create labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: hostNetwork: true nodeSelector: kubernetes.io/hostname: k8s-w-1 containers: - name: create image: jettech/kube-webhook-certgen:v1.2.0 imagePullPolicy: IfNotPresent args: - create - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.ingress-nginx.svc - --namespace=ingress-nginx - --secret-name=ingress-nginx-admission restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission securityContext: runAsNonRoot: true runAsUser: 2000 --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml apiVersion: batch/v1 kind: Job metadata: name: ingress-nginx-admission-patch annotations: helm.sh/hook: post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: 
ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx spec: template: metadata: name: ingress-nginx-admission-patch labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook spec: hostNetwork: true nodeSelector: kubernetes.io/hostname: k8s-w-1 containers: - name: patch image: jettech/kube-webhook-certgen:v1.2.0 imagePullPolicy: args: - patch - --webhook-name=ingress-nginx-admission - --namespace=ingress-nginx - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail restartPolicy: OnFailure serviceAccountName: ingress-nginx-admission securityContext: runAsNonRoot: true runAsUser: 2000 --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: Role metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx rules: - apiGroups: - '' resources: - secrets verbs: - get - create --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx 
app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-nginx roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx-admission subjects: - kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx --- # Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml apiVersion: v1 kind: ServiceAccount metadata: name: ingress-nginx-admission annotations: helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded labels: helm.sh/chart: ingress-nginx-2.0.3 app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/version: 0.32.0 app.kubernetes.io/managed-by: Helm app.kubernetes.io/component: admission-webhook namespace: ingress-ngin

cat > nginx.yaml <

cat > service-nginx.yaml <

cat > ingress-nginx.yaml <